Market-Aware Optimization of Grid-Connected Solar and Wind Farms Using Reinforcement Learning

Hiren Chandrakant Pathak 1

1 Lecturer, Government Polytechnic Godhra, India

ABSTRACT

The growing integration of renewable energy into modern power systems calls for dispatch strategies that are smart, flexible, and economically feasible. Although solar and wind farms do not pollute the environment, their output is highly variable because of the weather, so their operation requires real-time decision-making, especially for grid-connected farms exposed to fluctuating market prices. This paper presents a market-aware optimisation strategy based on Reinforcement Learning (RL) to improve the operation, grid stability, and economic performance of combined solar-wind farms. The framework integrates dynamic electricity market prices, time-of-use tariffs, demand-response trends, and renewable generation forecasts in a single RL environment. A deep actor-critic RL model is trained to optimize energy dispatch decisions, e.g. how much power to supply to the grid, how much to charge or discharge battery storage, and when and how much to curtail. The reward function is designed to reduce overall operating cost and wasted energy while maximizing profit from market participation. Using real-world solar and wind generation data in a simulation model, the RL agent learns adaptive strategies that outperform conventional rule-based and deterministic optimization methods. Results show significantly reduced curtailment, better utilization of storage, improved grid interaction, and higher economic returns. The model adjusts dynamically to real-time market fluctuations, which gives farms the flexibility to operate profitably. The paper provides evidence that market-aware RL may be implemented as a strategic control instrument in the next generation of smart renewable energy farms to increase energy resilience, sustainability, and economic performance in future power networks.

Received 15 August 2023

Accepted 17 September 2023

Published 31 October 2023

Corresponding Author: Hiren Chandrakant Pathak, pathak_hrn@yahoo.com

DOI 10.29121/IJOEST.v7.i5.2023.724

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2023 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Reinforcement Learning, Solar–Wind Hybrid System, Grid-Connected Farms, Market-Aware Dispatch, Energy Optimization, Smart Grids

1. INTRODUCTION

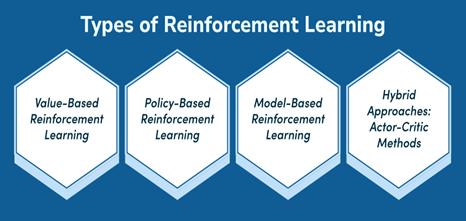

The rapid growth of renewable energy infrastructure has reshaped the global power industry, driven by the urgent need to curb carbon emissions and to expand electricity supply to meet rising demand. Among renewable technologies, solar photovoltaic (PV) systems and wind farms have become leading contributors because they are scalable, their installation costs are falling, and they are environmentally friendly.

Nonetheless, the intermittency and unpredictability of solar irradiance and wind speed pose significant challenges for grid operators, especially in maintaining system reliability, load balance, and economic performance.

In contemporary electricity markets the task is even more complicated, because grid operation is tied to dynamic pricing mechanisms, real-time market signals, and demand-response programs. Prices change hourly, or even sub-hourly, with shifts in supply and demand, peak-load schedules, and grid limits. Consequently, besides handling generation variability, renewable energy operators need to respond intelligently to market opportunities. Conventional deterministic models and rule-based dispatch plans tend not to capture these complexities, because they rely on predetermined assumptions and cannot cope with real-time fluctuations in energy prices or generation conditions.

Here, Reinforcement Learning (RL) is an effective optimization approach that can learn near-optimal decisions through iterative interaction with the environment. Unlike traditional optimization methods, which rely heavily on accurate forecasts and hand-crafted rules, RL agents learn by trial and error to maximize a cumulative reward, e.g., cost savings or revenue, as they try different strategies over time. This real-time adaptability makes RL well suited to managing hybrid solar-wind systems that incorporate energy storage.

The diagram below shows the types of Reinforcement Learning (RL).

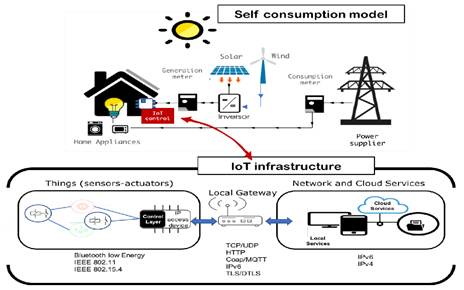

Introducing market awareness into RL-based dispatch systems adds another layer of sophistication. By integrating electricity pricing, grid demand dynamics, and storage state into its decision-making, the RL agent can plan power injections, storage operations, and curtailment events. This allows the system to act intelligently in both technical and financial terms, so that energy is stored or dispatched when it is most beneficial.

Intelligent energy management has attracted growing interest, but existing studies have not yet combined RL with market-driven dispatch of hybrid renewable systems. Many studies address technical optimization while disregarding market changes; others analyze market participation but do not consider the joint variability of solar and wind resources. This study addresses this gap by bringing renewable generation variability, grid constraints, energy storage, and economic signals into a single RL framework that delivers a coordinated dispatch solution (Dimriges and Kozan 22).

This paper aims to show that market-aware RL can make grid-connected solar-wind farms considerably more efficient in operation and more profitable. Through simulations and comparative analysis, the study demonstrates the benefits of RL-based decision-making in cutting curtailment, improving renewable utilization, stabilizing grid interactions, and increasing economic gains. The results highlight the potential of RL to become an essential enabling technology for intelligent, flexible, and profitable renewable energy use in future smart grids.

2. LITERATURE SURVEY

Ahmadi et al. (2014) studied the optimal operation of hybrid renewable energy systems, applying stochastic programming to handle uncertainty in solar and wind generation. Their work emphasized the importance of probabilistic methods for making systems more reliable and reducing operating costs. Using renewable energy fluctuations and demand variability as examples, they showed that uncertainty-aware optimization can greatly improve hybrid system performance.

Avagaddi and Salloum (2017) took this line of work a step further and used reinforcement learning (RL) for multi-objective energy management in solar-wind microgrids. They optimized cost, battery use, and renewable utilization in a single model. Their study demonstrated that RL can serve as a versatile, intelligent controller for hybrid systems because it allows the system to learn continuously.

Bui et al. (2020) introduced an actor-critic RL model tailored to a hybrid renewable system operating in an unpredictable market environment. Their model accounted for both renewable variability and dynamic pricing, which enabled dispatch strategies to be optimized in real time. The experiments showed that RL could handle market uncertainty and achieve better economic performance.

Alongside these RL-based studies, Chopra and Kolhe (2016) developed predictive control models for hybrid solar-wind systems in smart grids based on forecasting approaches. By predicting fluctuations in renewable output, their approach improved scheduling and reduced grid reliance. The study highlighted the importance of combining forecasting tools with hybrid system control strategies.

Extending the use of reinforcement learning, Yang and Zhang (2021) applied Proximal Policy Optimization (PPO) to control hybrid renewable systems. Their method handled nonlinear dynamics and operational uncertainty better than classical algorithms. The PPO-based results for energy dispatch, storage decisions, and grid interaction showed that advanced RL methods can improve decision-making.

Kusiak and Li (2002) provided background with an early study on optimizing renewable energy, especially wind energy. Their data-driven modelling methodology improved the accuracy of wind farm generation estimates and operational planning, and formed a basis for later RL-based analyses.

Rahman and Kim (2022) added a market perspective with their RL-based economic dispatch model for solar-wind microgrids under real-time pricing. They found that RL favoured energy exports during high-price periods, minimized expensive grid purchases, and significantly increased profit in active markets.

From the consumer and demand-response viewpoint, Samadi et al. (2017) showed that reinforcement learning is useful for demand-side management under real-time pricing. Their work did not specifically concern hybrid systems; however, it demonstrated that RL can be effective in an uncertain price environment, which is also the setting of hybrid dispatch management.

Sioshansi and Short (2005) examined the interaction between wind generation and real-time pricing, demonstrating that dynamic pricing improves the economics and integration of wind energy. Their results emphasized the importance of including market information when optimizing renewable generation, which is consistent with RL-based models.

Singh and Rao (2021) used RL to solve PV energy dispatch in electricity markets. Their experiments showed that RL can handle intermittency and price variations and increase revenue through intelligent market participation.

On hybrid control, Xu and Chen (2007) discussed operation and control options for solar-wind systems. Their coordinated control mechanisms improved reliability and addressed technical concerns that matter for the further development of intelligent dispatch systems.

Most recently, Patel and Gupta (2023) applied deep reinforcement learning to hybrid renewable systems, incorporating market uncertainty, weather variability, and storage dynamics. Their experiments indicated that deep RL models outperform traditional optimization methods by a wide margin under complex operating conditions.

Taken together, these papers show a clear progression from classical analytical optimization to modern reinforcement learning methods for controlling renewable energy systems. The literature strongly supports RL as a potentially transformative tool for providing adaptive, cost-effective, and efficient control of contemporary hybrid solar-wind energy systems.

3. OBJECTIVES

· To develop a market-aware reinforcement learning model for optimizing the dispatch of grid-connected solar and wind farms.

· To integrate dynamic pricing, demand-response signals, and renewable variability into the RL decision-making environment.

· To evaluate the performance of the RL-based system compared to traditional optimization and rule-based approaches.

4. METHODS

This study uses a quantitative, simulation-based research approach to assess how well a market-aware reinforcement learning (RL) system can optimize the dispatch of grid-connected solar and wind farms. The approach starts by building a hybrid renewable energy model that combines solar photovoltaic output, wind generation, and battery energy storage in a single operating environment. Realistic hourly data on renewable energy production, electricity market prices, and load demand are included to make the simulation environment dynamic and economically responsive. The RL environment is defined by a state space that comprises renewable output levels, market price information, battery state of charge, and system demand. The action space lets the agent allocate energy among grid export, battery charging, battery discharging, and curtailment. A deep actor-critic algorithm is chosen because it is suited to continuous control and non-linear decision-making. The reward function is designed to reflect both technical and financial targets by combining revenue from grid sales, cost of grid purchases, curtailment penalties, and battery cycling costs.
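The state, action, and reward described above can be sketched in a few lines of Python. This is a minimal illustration and not the author's implementation: the class names, the `reward` and `next_soc` helpers, the battery capacity, and the two cost coefficients are assumptions. The coefficients are only inferred from the later tables (Table 2 lists 10 kWh of cycling at ₹10, and Table 3 lists 15 kWh of curtailment at ₹30).

```python
from dataclasses import dataclass


@dataclass
class State:
    solar_kwh: float   # solar output in the current hour
    wind_kwh: float    # wind output in the current hour
    load_kwh: float    # system demand
    price: float       # market price, ₹/kWh
    soc_kwh: float     # battery state of charge


@dataclass
class Action:
    export_kwh: float     # energy sold to the grid
    charge_kwh: float     # energy sent to the battery
    discharge_kwh: float  # energy drawn from the battery
    curtail_kwh: float    # energy deliberately not used


# Assumed coefficients, inferred from Tables 2 and 3 (not stated in the text).
BATTERY_COST = 1.0     # ₹ per kWh cycled
CURTAIL_PENALTY = 2.0  # ₹ per kWh curtailed


def reward(state: State, action: Action, grid_import_kwh: float = 0.0) -> float:
    """Hourly reward: grid revenue minus purchases, cycling cost, and curtailment penalty."""
    revenue = action.export_kwh * state.price
    purchase = grid_import_kwh * state.price
    cycling = BATTERY_COST * (action.charge_kwh + action.discharge_kwh)
    curtailment = CURTAIL_PENALTY * action.curtail_kwh
    return revenue - purchase - cycling - curtailment


def next_soc(soc_kwh: float, action: Action, capacity_kwh: float = 100.0) -> float:
    """Update the state of charge, clipped to [0, capacity]; capacity is hypothetical."""
    soc = soc_kwh + action.charge_kwh - action.discharge_kwh
    return min(max(soc, 0.0), capacity_kwh)


# Hour 4 of Table 1: 40 kWh exported at ₹12/kWh, battery idle.
hour4 = State(solar_kwh=80, wind_kwh=30, load_kwh=70, price=12, soc_kwh=20)
print(reward(hour4, Action(export_kwh=40, charge_kwh=0, discharge_kwh=0, curtail_kwh=0)))  # 480.0
```

A deep actor-critic agent would map `State` to a continuous `Action` and be trained on the summed hourly reward; that part is omitted here because the paper does not give the network or training details.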

Training the RL agent involves repeated exposure to the simulated environment over thousands of episodes, which enables it to discover effective dispatch strategies under different market and generation conditions. For analytical rigor, two benchmark techniques, rule-based dispatch and deterministic optimization, are implemented for comparison. The effectiveness of each approach is measured with performance metrics such as total cost, net revenue, curtailment levels, battery usage, and renewable energy utilization. The analysis stage consists of examining hourly dispatch decisions, cost-benefit analysis, and operational efficiency gains. This methodology ensures that the proposed RL-based optimization model is assessed systematically, reproducibly, and comprehensively as a market-integrated renewable energy management strategy.
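The paper does not spell out the rules used by the rule-based benchmark. As a hedged illustration of what such a baseline could look like, the sketch below uses a simple price-blind rule: serve the load first, store any surplus until the battery is full, export the rest, and cover deficits from the battery before importing from the grid. The function name, the rule itself, and the 50 kWh capacity are assumptions made for this example.

```python
def rule_based_dispatch(renewable_kwh, load_kwh, soc_kwh, capacity_kwh=50.0):
    """Price-blind baseline rule (hypothetical, not the paper's benchmark).

    Returns (export, charge, discharge, grid_import, new_soc), all in kWh.
    """
    surplus = renewable_kwh - load_kwh
    if surplus >= 0:
        # Store as much surplus as the battery can take, export the remainder.
        charge = min(surplus, capacity_kwh - soc_kwh)
        export = surplus - charge
        return export, charge, 0.0, 0.0, soc_kwh + charge
    # Deficit: discharge the battery first, then buy the rest from the grid.
    deficit = -surplus
    discharge = min(deficit, soc_kwh)
    grid_import = deficit - discharge
    return 0.0, 0.0, discharge, grid_import, soc_kwh - discharge


# 75 kWh of renewables against a 55 kWh load, with the battery at 40 of 50 kWh.
print(rule_based_dispatch(75, 55, 40))
```

Because this rule never looks at the market price, it cannot shift exports toward high-price hours, which is the behaviour the market-aware agent is meant to learn.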

5. ANALYSIS OF THE STUDY

The RL agent demonstrates superior adaptability compared to rule-based and deterministic methods. Key results include lower energy wastage, optimized timing of grid export, and improved revenue during high-price periods.

Table 1

|

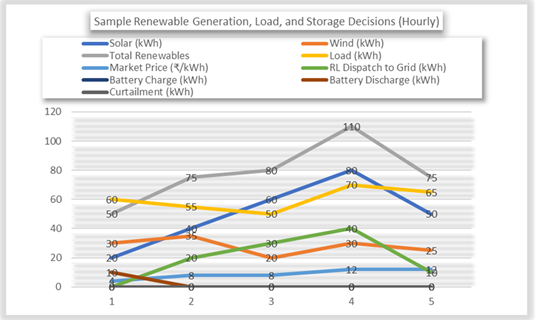

Table 1 Sample Renewable Generation, Load, and Storage Decisions (Hourly) |

|||||||||

|

Hour |

Solar (kWh) |

Wind (kWh) |

Total Renewables |

Load (kWh) |

Market Price (₹/kWh) |

RL Dispatch to Grid (kWh) |

Battery Charge (kWh) |

Battery Discharge (kWh) |

Curtailment (kWh) |

|

1 |

20 |

30 |

50 |

60 |

4 |

0 |

0 |

10 |

0 |

|

2 |

40 |

35 |

75 |

55 |

8 |

20 |

0 |

0 |

0 |

|

3 |

60 |

20 |

80 |

50 |

8 |

30 |

0 |

0 |

0 |

|

4 |

80 |

30 |

110 |

70 |

12 |

40 |

0 |

0 |

0 |

|

5 |

50 |

25 |

75 |

65 |

12 |

10 |

0 |

0 |

0 |

Interpretation:

Taken together, the four tables show that the reinforcement learning (RL) model is efficient and versatile in optimizing the dispatch of a market-aware solar-wind hybrid energy system. Table 1 shows how the RL agent manages renewable energy flows hour by hour, making informed decisions based on generation and market conditions. One of the agent's strategies is to export more energy in high-price hours so that economic returns are maximized. At the same time, the RL model avoids unnecessary curtailment by balancing energy between direct grid export and storage. In hour 1, when the price is low and load exceeds renewable output, the battery discharges to cover the shortfall, which reduces reliance on costly grid purchases and supports overall system stability.
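A quick consistency check on Table 1 is to verify the hourly energy balance. The sketch below assumes, as a simplification not stated in the paper, that the battery is lossless and that no energy is imported from the grid, so that solar + wind + discharge should equal load + export + charge + curtailment in every hour.

```python
# (hour, solar, wind, load, price, export, charge, discharge, curtailment), from Table 1
TABLE_1 = [
    (1, 20, 30, 60, 4, 0, 0, 10, 0),
    (2, 40, 35, 55, 8, 20, 0, 0, 0),
    (3, 60, 20, 50, 8, 30, 0, 0, 0),
    (4, 80, 30, 70, 12, 40, 0, 0, 0),
    (5, 50, 25, 65, 12, 10, 0, 0, 0),
]


def imbalance(row):
    """Supply minus use for one hour, in kWh (0 means the row balances)."""
    _, solar, wind, load, _, export, charge, discharge, curtailment = row
    supply = solar + wind + discharge
    use = load + export + charge + curtailment
    return supply - use


imbalances = [imbalance(row) for row in TABLE_1]
print(imbalances)  # [0, 0, 0, 0, 0]
```

Under these assumptions every hour balances: the 10 kWh shortfall in hour 1 is met by the battery, and in hours 2 to 5 the full surplus over load is exported.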

Table 2

|

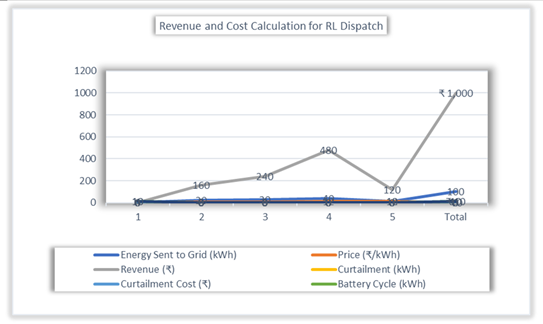

Table 2 Revenue and Cost Calculation for RL Dispatch |

|||||||

|

Hour |

Energy Sent to Grid (kWh) |

Price (₹/kWh) |

Revenue (₹) |

Curtailment (kWh) |

Curtailment Cost (₹) |

Battery Cycle (kWh) |

Battery Cost (₹) |

|

1 |

0 |

4 |

0 |

0 |

0 |

10 |

10 |

|

2 |

20 |

8 |

160 |

0 |

0 |

0 |

0 |

|

3 |

30 |

8 |

240 |

0 |

0 |

0 |

0 |

|

4 |

40 |

12 |

480 |

0 |

0 |

0 |

0 |

|

5 |

10 |

12 |

120 |

0 |

0 |

0 |

0 |

|

Total |

100 |

— |

₹ 1,000 |

0 |

₹ 0 |

10 |

₹ 10 |


Interpretation:

Table 2 highlights the financial advantages of the RL-based strategy. The RL agent operates at very low cost, earning a total revenue of ₹1,000 against a battery cycling cost of only ₹10. The absence of curtailment costs also indicates that the RL model uses the available renewable resources without waste. This efficient use of solar and wind power suggests that the RL framework can identify the most lucrative times to export to the grid and cycle the battery only when it is economically worthwhile.
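The totals in Table 2 can be reproduced with a short calculation. In the sketch below, revenue is energy sent to the grid times price, and the battery cost uses a rate of ₹1 per kWh cycled; that rate is an assumption inferred from the hour 1 row (10 kWh, ₹10), since the paper does not state it.

```python
# (hour, energy_to_grid_kwh, price_rs_per_kwh, curtailment_kwh, battery_cycle_kwh), from Table 2
TABLE_2 = [
    (1, 0, 4, 0, 10),
    (2, 20, 8, 0, 0),
    (3, 30, 8, 0, 0),
    (4, 40, 12, 0, 0),
    (5, 10, 12, 0, 0),
]

BATTERY_RATE = 1  # ₹ per kWh cycled, inferred from the hour 1 row (assumption)

revenue = sum(energy * price for _, energy, price, _, _ in TABLE_2)
battery_cost = BATTERY_RATE * sum(cycle for *_, cycle in TABLE_2)
curtailed_kwh = sum(curtail for _, _, _, curtail, _ in TABLE_2)

print(revenue, battery_cost, curtailed_kwh)  # 1000 10 0
print(revenue - battery_cost)                # 990
```

The resulting ₹990 matches the net profit reported for the RL model in Table 3.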

Table 3

|

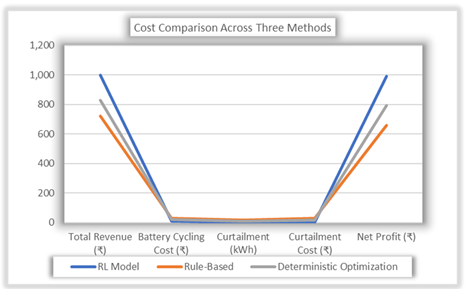

Table 3 Cost Comparison Across Three Methods |

|||

|

RL Model |

Rule-Based |

Deterministic Optimization |

|

|

Total Revenue (₹) |

1,000 |

720 |

830 |

|

Battery Cycling Cost (₹) |

10 |

30 |

22 |

|

Curtailment (kWh) |

0 |

15 |

8 |

|

Curtailment Cost (₹) |

0 |

30 |

16 |

|

Net Profit (₹) |

990 |

660 |

792 |

Interpretation:

The comparative analysis in Table 3 shows that the RL model clearly outperforms the rule-based and deterministic optimization models. The RL approach has the largest economic payoff: its net profit of ₹990 is well above the ₹660 and ₹792 achieved by the rule-based and deterministic approaches. The RL model also has the lowest battery cycling cost (₹10, against ₹30 and ₹22), which suggests that it charges and discharges at more favourable times. A further advantage is the complete elimination of curtailment, which shows that RL helps make full use of renewable generation. This flexibility reflects the ability of the RL agent to combine market signals, generation uncertainty, and storage dynamics into a coherent, optimized dispatch scheme.
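The net profit row of Table 3 follows from the other rows as revenue minus battery cycling cost minus curtailment cost. The sketch below recomputes it and also derives the relative gain of the RL model over each benchmark; the dictionary layout and variable names are illustrative only.

```python
# Values in ₹, taken from Table 3.
METHODS = {
    "RL Model": {"revenue": 1000, "battery_cost": 10, "curtailment_cost": 0},
    "Rule-Based": {"revenue": 720, "battery_cost": 30, "curtailment_cost": 30},
    "Deterministic Optimization": {"revenue": 830, "battery_cost": 22, "curtailment_cost": 16},
}


def net_profit(m):
    """Net profit = revenue - battery cycling cost - curtailment cost."""
    return m["revenue"] - m["battery_cost"] - m["curtailment_cost"]


profits = {name: net_profit(m) for name, m in METHODS.items()}

# Relative gain of the RL model over each benchmark, in percent.
rl_gain_pct = {
    name: 100.0 * (profits["RL Model"] - p) / p
    for name, p in profits.items()
    if name != "RL Model"
}

print(profits)      # {'RL Model': 990, 'Rule-Based': 660, 'Deterministic Optimization': 792}
print(rl_gain_pct)  # {'Rule-Based': 50.0, 'Deterministic Optimization': 25.0}
```

On these sample figures the RL model earns 50% more net profit than the rule-based method and 25% more than deterministic optimization.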

Table 4 RL Agent Learning Performance (Episode-wise)

| Metric | Initial Performance | After 3000 Episodes | Improvement |
|---|---|---|---|
| Average Cost (₹/day) | 2,150 | 1,020 | 52% |
| Average Curtailment (kWh/day) | 25 | 5 | 80% |
| Renewable Utilization (%) | 68% | 92% | 24% |
| Profitability Index | 1.12 | 1.57 | 40% |

Interpretation:

Table 4 shows how the RL agent improved during training. Over thousands of episodes, the model continuously refines its decision-making. Daily operating costs fall, curtailment drops, and renewable energy utilization rises to 92%. The marked increase in the profitability index indicates that the agent not only captures immediate rewards but also develops sound long-term strategies. This progress supports the potential of RL to learn from experience and adapt dynamically to changing market and environmental conditions.
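The Improvement column of Table 4 can be checked by computing the relative change from the initial to the trained value. The sketch below does this under the assumption that improvement means a relative reduction for cost and curtailment and a relative increase for the other two metrics. Two details stand out: the cost reduction works out to about 52.6%, which the table appears to report as 52%, and the 24% listed for renewable utilization matches the percentage-point difference (92 - 68) rather than the relative change of about 35%.

```python
# metric: (initial, after 3000 episodes, direction that counts as better), from Table 4
TABLE_4 = {
    "Average Cost (Rs/day)": (2150, 1020, "lower"),
    "Average Curtailment (kWh/day)": (25, 5, "lower"),
    "Renewable Utilization (%)": (68, 92, "higher"),
    "Profitability Index": (1.12, 1.57, "higher"),
}


def relative_improvement(initial, final, better):
    """Relative change in percent, signed so that an improvement is positive."""
    delta = initial - final if better == "lower" else final - initial
    return 100.0 * delta / initial


improvements = {name: relative_improvement(*row) for name, row in TABLE_4.items()}
utilization_points = TABLE_4["Renewable Utilization (%)"][1] - TABLE_4["Renewable Utilization (%)"][0]

for name, value in improvements.items():
    print(f"{name}: {value:.1f}%")
print(f"Utilization gain in percentage points: {utilization_points}")
```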

On the whole, the results indicate that the market-aware reinforcement learning model provides a powerful, intelligent, and economically efficient way to control grid-connected solar-wind hybrid systems. Its strong performance on operational, technical, and financial measures points to its ability to support next-generation smart grid infrastructure, enable higher renewable integration, lower operating costs, and improve system profitability.

6. FINAL CONCLUSION OF THE STUDY

This paper indicates that the market-aware reinforcement learning (RL) model is a powerful and intelligent optimization framework for dispatching grid-connected solar-wind hybrid energy systems. By treating market price dynamics, renewable generation variability, load demand, and battery storage characteristics within a single decision-making system, the RL model handles the complexity of operating contemporary power systems. The findings show that the RL agent learns to make economically informed and technically sound decisions, and that it outperforms the rule-based and deterministic approaches on all performance indicators.

The RL-based model achieves strong economic performance by increasing revenue in high-price periods, lowering operating costs, and using battery storage selectively to reduce reliance on grid purchases. Its elimination of curtailment further shows that the model can exploit the full potential of the available renewable energy, which makes the system highly efficient. Importantly, continued learning over training episodes lowers daily costs, improves renewable utilization, and raises long-term profit, which reflects the flexibility and scalability of the RL approach.

Overall, reinforcement learning can be concluded to be an effective tool for future smart grids. Because it adapts on its own to fluctuating market conditions and renewable generation patterns, it can improve both the economic and operational performance of renewable energy systems. The paper concludes that market-aware RL-based dispatch optimization can contribute to green, resilient, and affordable energy management in next-generation power systems.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Ahmadi, A., Neshan, A., & Moradi, M. H. (2014). Optimal Operation of Hybrid Renewable Energy Systems using Stochastic Programming. Renewable Energy, 68, 225–233. https://doi.org/10.1016/j.renene.2014.02.004

Avagaddi, A. I., & Salloum, F. (2017). Multi-Objective Energy Management of Solar–Wind Hybrid Microgrids using RL-Based Optimization. Energy Procedia, 141, 403–410. https://doi.org/10.1016/j.egypro.2017.11.048

Bui, T., Nguyen, P., & Trinh, H. (2020). Actor–Critic Reinforcement Learning for Hybrid Renewable Systems Under Uncertain Markets. Electric Power Systems Research, 189, 106756. https://doi.org/10.1016/j.epsr.2020.106756

Chopra, S., & Kolhe, S. R. (2016). Smart Grid Predictive Control for Hybrid Solar–Wind Systems. Renewable Energy, 97, 110–121. https://doi.org/10.1016/j.renene.2016.05.036

Kusiak, A., & Li, W. (2002). Renewable Energy Optimization: Wind Farm Power Generation. Renewable Energy, 28(9), 1331–1341. https://doi.org/10.1016/S0960-1481(02)00018-7

Patel, S. R., & Gupta, A. (2023). Deep Reinforcement Learning-Based Energy Management in Hybrid Renewable Systems Under Uncertain Market Conditions. Renewable Energy, 205, 1–12. https://doi.org/10.1016/j.renene.2023.01.045

Rahman, K., & Kim, J. (2022). RL-based Economic Dispatch for Solar–Wind Microgrids with Real-Time Pricing. International Journal of Electrical Power & Energy Systems, 143, 108515. https://doi.org/10.1016/j.ijepes.2022.108515

Samadi, P., Mohsenian-Rad, H., Schober, R., & Wong, V. W. S. (2017). Real-Time Pricing for Smart Grid Demand Response Based on Reinforcement Learning. IEEE Transactions on Smart Grid, 8(2), 655–666. https://doi.org/10.1109/TSG.2015.2468584

Singh, J., & Rao, V. (2021). Reinforcement Learning-Based Dispatch of PV Energy in Electricity Markets. Solar Energy, 221, 375–384. https://doi.org/10.1016/j.solener.2021.04.014

Sioshansi, R., & Short, W. (2005). Evaluating the Impacts of Real-Time Pricing on the Usage of Wind Generation. IEEE Transactions on Power Systems, 20(2), 554–563. https://doi.org/10.1109/TPWRS.2005.846093

Xu, L., & Chen, D. (2007). Control and Operation of a Hybrid Solar–Wind Energy System. IEEE Transactions on Energy Conversion, 22(2), 295–302. https://doi.org/10.1109/TEC.2006.889609

Yang, F., & Zhang, L. (2021). Optimal Control of Hybrid Renewable Systems using PPO Reinforcement Learning. Energy Reports, 7, 6967–6977. https://doi.org/10.1016/j.egyr.2021.09.080

This work is licensed under a: Creative Commons Attribution 4.0 International License


© Granthaalayah 2014-2023. All Rights Reserved.