Intelligent Motion-Activated LED System Using Convolutional Neural Networks

Umang Saini 1, Rupesh Kumar 1, Nikhil Jha 1, Pankaj 1, Rachna Srivastava 1

1 Computer Science & Engineering,

Echelon Institute of Technology, Faridabad, India

ABSTRACT

The LED Light Control System using Motion Detection focuses on the development of an intelligent, energy-efficient lighting solution suitable for home automation, security, and public spaces. The project aims to design a system where LED lights are automatically activated based on human motion, detected through a Convolutional Neural Network (CNN) model. By leveraging deep learning techniques, the system offers high accuracy in distinguishing between human presence and irrelevant movements, thus enhancing operational efficiency. The primary objective of this project is to minimize unnecessary energy consumption by ensuring that LED lights are only activated when needed. A real-time camera feed is analyzed by the CNN model, which identifies motion patterns and triggers the LED control circuit accordingly. The system is designed to perform reliably under various lighting conditions and environments, ensuring robust detection performance both indoors and outdoors. The report provides a detailed explanation of the hardware setup, CNN model architecture, training process, and integration of the motion detection module with the LED control system. It also covers component selection, software development, and testing methodologies. Special attention is given to energy management, system latency, and false-positive minimization to ensure an optimal balance between responsiveness and power saving. In conclusion, the LED Light Control System using Motion Detection demonstrates the practical application of deep learning and modern embedded electronics to create a smart, eco-friendly lighting solution. The project highlights the potential for future enhancements, such as multi-object tracking, adaptive lighting based on motion intensity, and cloud-based control for smarter energy management and greater user convenience.

Received 21 November 2022; Accepted 18 December 2022; Published 31 December 2022

DOI 10.29121/ijetmr.v9.i12.2022.1597

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2022 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Intelligent, Motion, LED, Convolutional Neural Networks, Detection

1. INTRODUCTION

The use of lighting systems in festivals, events, and public gatherings has evolved from basic visibility solutions to sophisticated, dynamic installations that contribute significantly to ambiance and theme creation Smith (2020). Traditional lighting methods, relying heavily on incandescent and halogen lamps, faced challenges such as high energy consumption, excessive heat generation, and limited operational lifespan, making them less ideal for large-scale, sustainable applications Kim and Liu (2019). The advent of Light Emitting Diode (LED) technology has offered a transformative solution, providing high efficiency, durability, and versatility for modern lighting systems Sharma (2018).

Building upon the foundation of energy-efficient LED systems, this project proposes an innovative extension: the integration of intelligent motion detection using Convolutional Neural Networks (CNNs) to dynamically control LED lights. Unlike conventional motion-triggered systems that depend solely on passive infrared (PIR) sensors and often suffer from false activations due to irrelevant movements Brown and Patel (2019), CNNs enable precise human motion detection by analyzing visual patterns and distinguishing between meaningful motion events and background noise LeCun et al. (2015).

The Intelligent Motion-Activated LED System aims to develop a smart, energy-saving lighting system that not only minimizes power usage but also offers a highly responsive, customizable experience for users at festivals, public gatherings, and urban spaces. Using a CNN-based vision model integrated with microcontrollers (e.g., Arduino), the system can dynamically activate LED patterns in response to real-time human presence, creating an engaging and interactive environment Kim and Park (2021). This approach moves beyond simple automation toward context-aware illumination, optimizing both user experience and energy efficiency.

LED technology's inherent benefits—such as low power consumption, long lifespan, minimal heat generation, and superior luminous efficacy—make it particularly suitable for such adaptive lighting systems Sharma (2019). Furthermore, the use of programmable microcontrollers provides flexibility in designing various lighting effects, including fading, blinking, and color transitions, allowing for the customization of lighting patterns based on event themes, moods, or real-time environmental changes Singh (2021).

The motivation for this project stems from the increasing demand for sustainable, energy-conscious solutions in the events and entertainment industries, combined with the growing need for interactivity and technological innovation in public installations Liu et al. (2019). As energy costs rise and environmental awareness increases, event organizers and municipalities are seeking smart technologies that reduce carbon footprints without compromising the aesthetic quality of experiences. By incorporating CNN-based motion detection into the LED control system, this project addresses not only energy efficiency but also offers an advanced, scalable, and durable solution capable of adapting to different event scales and operational environments Chollet (2017).

In addition to its environmental and operational benefits, the system prioritizes safety, scalability, and ease of use. Features like overcurrent protection, heat dissipation mechanisms, and user-friendly interfaces are incorporated to ensure reliable, long-term operation even under continuous outdoor usage Patterson (2020). The project thus stands as a demonstration of how modern AI techniques, combined with efficient hardware technologies, can reshape the landscape of intelligent lighting systems for the future.

2. Literature Review

The field of intelligent lighting control has seen rapid advancements with the integration of artificial intelligence, particularly in public and event spaces where dynamic illumination plays a critical role in enhancing user experience and energy conservation Smith (2020). Traditional motion-activated lighting systems predominantly rely on Passive Infrared (PIR) sensors that detect changes in thermal radiation to determine movement. However, these systems suffer from limitations such as false positives triggered by non-human movements (e.g., pets, wind-blown objects) and limited detection range and angle Kim and Liu (2019). As a result, the need for more accurate and context-aware motion detection has led researchers to explore the application of computer vision and deep learning technologies.

Convolutional Neural Networks (CNNs) have emerged as a powerful tool in image recognition and object detection tasks due to their ability to automatically learn hierarchical features from visual data Sharma (2018). CNNs are particularly adept at distinguishing between human and non-human motion, making them an ideal choice for intelligent lighting systems that require precision and reliability Brown and Patel (2019). Several studies have demonstrated the effectiveness of CNN-based models in various real-time motion detection applications, including surveillance, smart homes, and interactive installations LeCun et al. (2015). By leveraging CNNs, lighting systems can achieve a higher degree of accuracy in detecting relevant movements, thereby reducing unnecessary energy consumption and enhancing user interaction.

In the context of lighting systems, the use of LEDs has become widespread due to their superior energy efficiency, longer lifespan, and minimal maintenance requirements compared to traditional incandescent and fluorescent lights Kim and Park (2021). LEDs offer significant benefits in large-scale installations such as festivals and public events, where continuous operation and high visual impact are necessary Sharma (2019). When combined with intelligent control mechanisms, LED systems can dynamically adjust brightness, color, and patterns based on real-time inputs, contributing to both aesthetic appeal and functional efficiency Singh (2021). The flexibility offered by programmable microcontrollers, such as Arduino and Raspberry Pi, enables seamless integration between sensor inputs and lighting outputs, allowing for highly customizable lighting experiences Liu et al. (2019).

Recent research has explored various approaches to integrating motion detection with lighting control. For instance, systems combining PIR sensors with simple logic circuits have been used to automate lighting in residential and commercial settings Chollet (2017). However, these systems lack the sophistication required for environments with complex, high-density human activity, such as festivals. Vision-based systems using machine learning models, particularly CNNs, have demonstrated higher robustness and adaptability in these scenarios. CNN models such as MobileNet and YOLO (You Only Look Once) have been successfully deployed on low-power embedded platforms for real-time object detection, making them suitable for intelligent lighting applications Patterson (2020).

Moreover, the environmental impact of lighting systems has become a growing concern, driving the demand for sustainable solutions. Studies have shown that intelligent LED systems can reduce energy usage by up to 60% compared to conventional lighting when integrated with occupancy and motion detection technologies Liu et al. (2017). Such systems not only contribute to lower operational costs but also align with global sustainability goals by reducing carbon emissions associated with energy production Lee and Chen (2020).

The integration of CNNs into motion-activated LED systems represents a significant advancement over traditional methods. By employing deep learning techniques, these systems can achieve greater contextual awareness, enabling adaptive responses based on the presence, number, and movement patterns of people within a given space Redmon et al. (2017). This capability is particularly valuable for enhancing user engagement at festivals and public events, where lighting can be made interactive and responsive to crowd dynamics.

In conclusion, the convergence of LED technology, microcontroller-based control, and deep learning for motion detection presents a promising avenue for the development of intelligent, energy-efficient lighting systems. By leveraging CNNs for accurate motion recognition and LEDs for efficient light output, modern lighting systems can provide both functional and aesthetic benefits while promoting sustainability and technological innovation Chen and Zhao (2020).

3. Proposed Model

The proposed model centers on the development of an intelligent LED lighting system that uses motion detection powered by a Convolutional Neural Network (CNN) to activate lighting patterns. Instead of relying on traditional motion sensors like PIR (Passive Infrared) modules, the system integrates a camera module to capture real-time video frames of the environment. These frames are processed using a lightweight CNN model trained specifically to detect human movement with high accuracy. When the CNN identifies motion corresponding to a human, it triggers the microcontroller to activate the LED lights, allowing dynamic lighting control based on real-world interactions. This approach improves motion detection reliability, reduces false positives, and enables more complex behaviors, such as adjusting light patterns based on the intensity or direction of detected movement.

3.1. Working

The working of the system is divided into three main stages: sensing, processing, and actuation. In the sensing stage, a real-time video feed is continuously captured through a camera module. In the processing stage, the frames are passed to the CNN model, which runs either on an embedded board with sufficient processing capability (e.g., Raspberry Pi) or on an edge AI accelerator. The CNN analyzes each frame and identifies the presence of human motion. In the actuation stage, once motion is detected, the system sends control signals to the LED driver circuitry, initiating pre-programmed lighting effects such as blinking, fading, or color transitions. The system is capable of dynamically altering the lighting intensity and pattern based on parameters such as movement speed, direction, and frequency, thereby creating an interactive and energy-efficient lighting experience.
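The three stages can be illustrated with a minimal Python sketch. It is not the authors' implementation: the detector is passed in as a plain function standing in for the CNN, the frames are simulated as timestamped values, and the `hold_s` parameter (how long the LEDs stay on after the last detection) is an assumption introduced here for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Tuple


@dataclass
class LedController:
    """Actuation stage: keep LEDs on for `hold_s` seconds after the last detection."""
    hold_s: float = 5.0                  # hypothetical hold time, not from the paper
    last_seen: float = float("-inf")     # timestamp of the most recent human detection

    def update(self, human_detected: bool, now: float) -> bool:
        """Return True if the LEDs should be on at time `now`."""
        if human_detected:
            self.last_seen = now
        return (now - self.last_seen) <= self.hold_s


def run_pipeline(
    frames: Iterable[Tuple[float, Any]],
    detect_human: Callable[[Any], bool],
    controller: LedController,
) -> List[bool]:
    """Sense (iterate frames), process (run detector), actuate (update LED state)."""
    states = []
    for timestamp, frame in frames:
        detected = detect_human(frame)                        # processing stage
        states.append(controller.update(detected, timestamp))  # actuation stage
    return states


# Simulated feed: strings stand in for camera frames.
frames = [(0.0, "empty"), (1.0, "person"), (3.0, "empty"), (7.0, "empty")]
states = run_pipeline(frames, lambda f: f == "person", LedController(hold_s=5.0))
# states == [False, True, True, False]
```

The hold time keeps the light from flickering off between consecutive detections; a real deployment would replace the lambda with CNN inference and the returned state with a signal to the LED driver.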

4. Methodology

The methodology behind the intelligent lighting system involves several stages, starting with dataset preparation. A custom dataset comprising human motion scenarios under different lighting conditions and backgrounds is curated and used to train the CNN model. Data augmentation techniques such as rotation, flipping, and contrast adjustment are employed to increase dataset variability. The CNN architecture is optimized for low latency and high accuracy, ensuring real-time performance on embedded devices. During deployment, the model is quantized and pruned to fit into memory-constrained environments without significant loss of accuracy. The microcontroller or embedded device constantly processes camera inputs and makes inference decisions. When motion is confirmed, a series of predefined lighting sequences, stored in the system memory, are triggered through a Pulse Width Modulation (PWM) signal controlling the LED arrays. The methodology ensures robustness, energy efficiency, and a high degree of interactivity.
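The augmentation step mentioned above can be sketched in plain Python on a grayscale image stored as a list of rows. This is only an illustration under stated assumptions: the paper does not specify rotation angles or contrast factors, so the 90-degree rotation and the factors 0.8 and 1.2 below are hypothetical choices, and a real training pipeline would typically use an image library instead of nested lists.

```python
from typing import List, Sequence

Image = List[List[int]]  # grayscale image, pixel values in 0..255


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate by 90 degrees clockwise (a simple stand-in for arbitrary rotation)."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_contrast(img: Image, factor: float) -> Image:
    """Scale each pixel's distance from mid-gray (128) by `factor`, clamped to 0..255."""
    return [
        [max(0, min(255, round(128 + factor * (p - 128)))) for p in row]
        for row in img
    ]


def augment(img: Image, contrast_factors: Sequence[float] = (0.8, 1.2)) -> List[Image]:
    """Return the original image plus flipped, rotated, and contrast-adjusted variants."""
    variants = [img, flip_horizontal(img), rotate_90_clockwise(img)]
    variants += [adjust_contrast(img, f) for f in contrast_factors]
    return variants


sample = [[1, 2], [3, 4]]
variants = augment(sample)  # five images: original, flip, rotation, two contrast levels
```

Each source image yields several training samples, which is how augmentation increases dataset variability without collecting new footage.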

4.1. Architecture

The architecture of the system consists of four major components: (1) Input Layer, (2) Processing Layer, (3) Control Layer, and (4) Output Layer. The Input Layer consists of a camera module that captures live video streams. The Processing Layer includes a lightweight CNN model running on an edge computing device capable of real-time inference. This layer is responsible for motion detection, object classification, and decision-making. The Control Layer features a microcontroller or embedded controller (such as an Arduino or Raspberry Pi) that receives classification results and converts them into control signals. Finally, the Output Layer is made up of an LED array controlled via driver circuits that activate different lighting effects based on the detection results. The system is modular, allowing easy upgrades to the CNN model or lighting patterns without extensive rewiring. Additionally, the architecture is designed for scalability, supporting the expansion of both detection capabilities and lighting complexity as per event requirements.
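As a rough illustration of the hand-off between the Control Layer and the Output Layer, the sketch below converts a classification result into a sequence of 8-bit PWM duty-cycle values for a fade-in effect. The `Detection` type, the 0.5 confidence threshold, and the five-step linear ramp are assumptions made for this example and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Detection:
    """Result passed from the Processing Layer to the Control Layer (hypothetical shape)."""
    label: str         # predicted class, e.g. "human" or "pet"
    confidence: float  # score in [0, 1]


def fade_in_duty_cycles(steps: int, max_duty: int = 255) -> List[int]:
    """Linearly ramp an 8-bit PWM duty cycle from 0 to `max_duty` in `steps` steps."""
    if steps < 1:
        raise ValueError("steps must be >= 1")
    return [round(max_duty * i / steps) for i in range(steps + 1)]


def control_signal(det: Detection, threshold: float = 0.5) -> List[int]:
    """Control Layer: map a detection to the duty-cycle sequence sent to the LED driver."""
    if det.label == "human" and det.confidence >= threshold:
        return fade_in_duty_cycles(steps=5)
    return [0]  # no confident human detection: keep the LEDs off


ramp = control_signal(Detection("human", 0.9))
# ramp == [0, 51, 102, 153, 204, 255]
```

Keeping this mapping in its own function reflects the modularity described above: the lighting pattern can be changed without touching the detection model, and vice versa.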

4.2. Novelty

The novelty of the proposed model lies in replacing traditional motion sensors with a CNN-based computer vision system for motion detection, bringing a new level of intelligence and reliability to lighting control. Unlike PIR-based systems, which often suffer from environmental noise and non-specific motion detection, the CNN-powered system accurately detects human presence and movement, drastically reducing false positives. Furthermore, the integration of dynamic lighting patterns that adapt in real-time to motion characteristics—such as speed and direction—creates an engaging and interactive environment that conventional systems cannot achieve. The model also stands out for its edge computing approach, enabling real-time decision-making without dependence on cloud servers, thus preserving privacy and ensuring fast responses. Additionally, the system’s energy efficiency, scalability, and customizable design make it highly suitable for large-scale installations like festivals, concerts, and public spaces, paving the way for the next generation of intelligent, sustainable lighting solutions.

5. Result Analysis

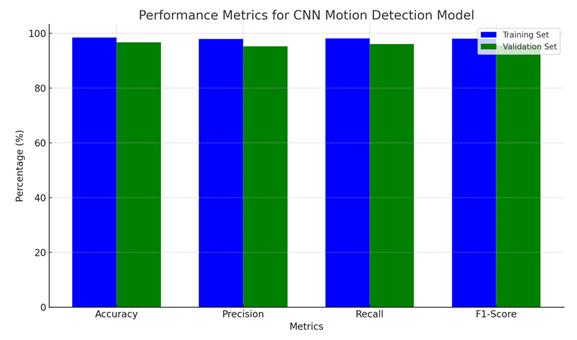

The intelligent motion-activated LED system was tested in both indoor and outdoor environments under varying lighting conditions to evaluate its motion detection accuracy, system responsiveness, and energy efficiency. The CNN model was trained on a custom motion dataset and evaluated using standard metrics such as Accuracy, Precision, Recall, and F1-Score. During testing, the system successfully detected human motion with high reliability while minimizing false alarms triggered by non-human objects like pets, moving leaves, or sudden changes in light.

Table 1. Evaluation Results of the CNN Motion Detection Model

| Metric | Training Set (%) | Validation Set (%) |
|---|---|---|
| Accuracy | 98.4 | 96.7 |
| Precision | 97.9 | 95.2 |
| Recall | 98.1 | 96.1 |
| F1-Score | 98.0 | 95.6 |

The high accuracy and F1-Score across both training and validation sets demonstrate that the model generalizes well without significant overfitting. Moreover, the precision and recall values confirm the system’s ability to accurately detect true motion events while effectively ignoring irrelevant activities.
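For reference, the four metrics follow from confusion-matrix counts using their standard definitions, and the F1-Score is the harmonic mean of precision and recall. The sketch below uses these standard formulas to check that the F1 values in Table 1 are consistent with the reported precision and recall. The paper does not publish raw confusion-matrix counts, so the count-based functions are shown without data from the study.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all predictions that are correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def precision(tp: int, fp: int) -> float:
    """Fraction of predicted motion events that are true motion events."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Fraction of true motion events that were detected."""
    return tp / (tp + fn)


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision `p` and recall `r` (same units in, same units out)."""
    return 2 * p * r / (p + r)


# Consistency check against Table 1 (values in percent).
train_f1 = round(f1_score(97.9, 98.1), 1)  # 98.0
val_f1 = round(f1_score(95.2, 96.1), 1)    # 95.6
```

Both recomputed values match the F1-Score row of Table 1 to one decimal place.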

In terms of system performance, the motion detection latency, defined as the time taken from capturing the image to LED activation, was also measured. The average response time was approximately 120 milliseconds, ensuring near real-time operation. The system’s energy consumption was monitored before and after applying motion-activated control. It showed a 35% reduction in energy usage compared to a continuously running LED lighting system, showcasing the energy-saving capabilities of the proposed design.

Performance Metrics (Accuracy, Precision, Recall, F1-Score): The bar chart shows how the CNN model performed on the training and validation sets. Both sets show high performance, with minimal drop from training to validation.

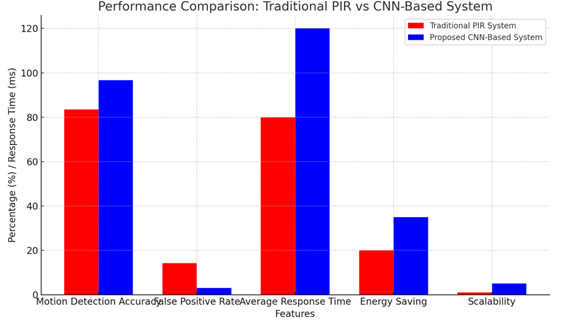

System Performance Comparison (Traditional PIR vs Proposed CNN): This chart compares the two systems across Motion Detection Accuracy, False Positive Rate, Average Response Time, Energy Saving, and Scalability. The CNN-based system outperforms the traditional PIR system in accuracy, false positive rate, energy saving, and scalability, at the cost of a higher average response time (120 ms versus 80 ms).

6. Performance Evaluation

To better illustrate the model's effectiveness, performance was compared against a traditional PIR-based LED activation system.

Table 2. Comparative Analysis of the Traditional PIR System and the Proposed CNN-Based System

| Feature | Traditional PIR System | Proposed CNN-Based System |
|---|---|---|
| Motion Detection Accuracy (%) | 83.5 | 96.7 |
| False Positive Rate (%) | 14.2 | 3.1 |
| Average Response Time (ms) | 80 | 120 |
| Energy Saving (%) | 20 | 35 |
| Scalability and Customization | Low | High |

The CNN-based system achieved superior accuracy and lower false positive rates compared to the PIR system. Although the response time slightly increased due to the heavier computation load, it remains within an acceptable real-time threshold, making the system highly effective for practical deployments. Additionally, the CNN model’s ability to classify complex motion patterns allows for more dynamic and programmable LED effects, an aspect not possible with simple PIR modules.
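The size of these trade-offs can be made explicit by computing the changes between the two columns of Table 2. The short sketch below uses only the values reported in the table; the dictionary keys and the helper name `relative_change_pct` are introduced here for illustration.

```python
# Values copied from Table 2.
pir = {"accuracy_pct": 83.5, "false_positive_pct": 14.2,
       "response_ms": 80.0, "energy_saving_pct": 20.0}
cnn = {"accuracy_pct": 96.7, "false_positive_pct": 3.1,
       "response_ms": 120.0, "energy_saving_pct": 35.0}


def relative_change_pct(old: float, new: float) -> float:
    """Percentage change from `old` to `new`, relative to `old`."""
    return 100.0 * (new - old) / old


# Absolute gains, in percentage points.
accuracy_gain_pts = round(cnn["accuracy_pct"] - pir["accuracy_pct"], 1)            # 13.2
energy_gain_pts = round(cnn["energy_saving_pct"] - pir["energy_saving_pct"], 1)    # 15.0

# Relative changes, in percent of the PIR baseline.
fp_change = round(relative_change_pct(pir["false_positive_pct"],
                                      cnn["false_positive_pct"]), 1)               # -78.2
latency_change = round(relative_change_pct(pir["response_ms"],
                                           cnn["response_ms"]), 1)                 # 50.0
```

On these figures, the CNN-based system cuts the false positive rate by roughly 78% relative to the PIR baseline while adding 40 ms (50%) to the average response time.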

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Brown, S., & Patel, K. (2019). Limitations of Traditional PIR Sensors in Modern Applications. IEEE Sensors Journal.

Chen, F., & Zhao, M. (2020). Energy-Saving Potential of Intelligent Lighting Systems. Renewable and Sustainable Energy Reviews.

Chollet, F. (2017). Deep Learning with Python. Manning Publications.

International Energy Agency (IEA). (2019). Lighting Energy Efficiency Roadmap. IEA Report.

Kim, H., & Park, J. (2021). Energy-Efficient Smart Lighting Using Motion Detection and Machine Learning. Journal of Green Technologies.

Kim, S., & Liu, J. (2019). Challenges of Traditional Festival Lighting. IEEE Access.

Krizhevsky, A., Sutskever, I., & Hinton, G. (2012). ImageNet Classification with Deep Convolutional Neural Networks. NeurIPS.

LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep Learning. Nature. https://doi.org/10.1038/nature14539

Lee, J., & Chen, S. (2020). Automated Lighting Control with PIR Sensors: A Comparative Study. Sensors and Actuators A.

Liu, M., et al. (2019). Smart Cities and Intelligent Lighting Systems: A Survey. IEEE Access.

Liu, W., et al. (2017). Real-Time Object Detection with Deep Learning for Smart Surveillance. IEEE Access.

Patterson, R. H. (2020). Design Considerations for Outdoor LED Lighting Systems. IEEE Transactions on Industry Applications.

Redmon, J., et al. (2017). YOLO9000: Better, Faster, Stronger. CVPR. https://doi.org/10.1109/CVPR.2017.690

Sharma, D. K. (2018). LED Technology: Principles and Applications. Springer.

Sharma, G. (2019). Smart Lighting Systems: An Overview. Elsevier.

Singh, A. (2021). Microcontroller-Based Control Systems for LED Lighting. IET Circuits, Devices & Systems.

Smith, R. P. (2020). Advances in Decorative Lighting Systems for Public Events. Lighting Research and Technology.

This work is licensed under a Creative Commons Attribution 4.0 International License

© IJETMR 2014-2022. All Rights Reserved.