ShodhKosh: Journal of Visual and Performing ArtsISSN (Online): 2582-7472

|

|

Cultural Style Transfer Using Deep Learning for Digital Illustration and Visual Storytelling

Dr. Suman Pandey

1![]()

![]() ,

Anil Kumar 2

,

Anil Kumar 2![]()

![]() ,

Manash Pratim Sharma 3

,

Manash Pratim Sharma 3![]()

![]() ,

Dr. Tina Porwal 4

,

Dr. Tina Porwal 4![]()

![]() ,

Priyanka S. Shetty 5

,

Priyanka S. Shetty 5![]()

![]() ,

Nilesh Upadhye 5

,

Nilesh Upadhye 5![]()

![]()

1 Assistant

Professor, Gujarat Law Society University, Ahmedabad, India

2 Research

Scholar, Department of Education, Chhatrapati Shahu Ji Maharaj University,

Kanpur 208024, Uttar Pradesh, India

3 Lecturer,

State Council of Educational Research and Training (SCERT), Assam, India

4 Co-Founder,

Granthaalayah Publications and Printers, India

5 Assistant

Professor, Department of Hotel Management, Tilak Maharashtra Vidyapeeth, Pune,

India

|

|

ABSTRACT |

||

|

The paper

explores how cultural style is transferred with the help of deep learning as

a computational method in digital illustration and visual narration. Whereas

neural style transfer has shown effectiveness in reproducing the visual

qualities of painterly images, models currently do not pay much attention to

the richer cultural semantics and symbolic motifs, as well as narrative

coherence of traditional and modern works of art. The proposed structure

fills this gap by considering culturally annotated visual features, semantic

and contextual modeling to allow style transfer to be culturally informed. A

wide range of works of art and digital images that represent various cultural

traditions are organized and annotated in a systematic way with motifs, symbolic

patterns, semantics of colors and narrative qualities. Convolutional and transformer based architectures are used to separate

content, style, and cultural symbolism and attention mechanisms are used to

control preservation of motifs and alignment of stories to the transferred

text. Visual fidelity, cultural consistency and storytelling coherence are

tested by experimental analysis through the application of both quantitative

and expert-based qualitative measures. Findings show that more culturally

significant aspects are preserved, there is greater narrative continuity and the style is not so ambiguous as with

traditional neural style transfer baselines. The frame work

favors the uses of digital illustration, concept art, animation, graphic

narrative, and educational media and allows artists and designers to produce

culturally expressive images without having to hand render the styles. |

|||

|

Received 10 May 2025 Accepted 12 September 2025 Published 25 December 2025 Corresponding Author Dr. Suman

Pandey, suman.finerart@gmail.com DOI 10.29121/shodhkosh.v6.i4s.2025.6934 Funding: This research

received no specific grant from any funding agency in the public, commercial,

or not-for-profit sectors. Copyright: © 2025 The

Author(s). This work is licensed under a Creative Commons

Attribution 4.0 International License. With the

license CC-BY, authors retain the copyright, allowing anyone to download,

reuse, re-print, modify, distribute, and/or copy their contribution. The work

must be properly attributed to its author.

|

|||

|

Keywords: Cultural Style Transfer, Deep Learning, Digital

Illustration, Visual Storytelling, Cultural Heritage |

|||

1. INTRODUCTION

Improvement in artificial intelligence has had a significant effect on the rapid development of digital illustration and visual storytelling, specifically due to the image synthesis methods that rely on the use of deep learning algorithms. Of these, neural style transfer has become a potent technology in reinterpretation of visual content in terms of the aesthetic features of reference artworks. Seeking to isolate and recombine content and style representations, early neural methods allowed artists and designers to experiment with painterly, textural and color effects previously never explored. Nevertheless, majority of currently developed style transfer methods are more concerned with the superficial visual patterns and do not reflect the underlying cultural meaning, symbolic themes and narrative patterns on which artistic traditions are based. Consequently, the images that are transferred can be aesthetically pleasing but culturally shallow or have no sense in the context, which reduces their capabilities in digital illustration and visual narrative functions. Cultural style in art goes beyond brush strokes or color harmony, it is a set of common histories, system of beliefs, languages of symbols and narrative conventions that are established over a lifetime across generations Roth (2021). The meaning of the motifs (e.g. mythological characters, ritual objects, architecture, culturally explicit colour symbolism, etc.) is frequently critical to narrative understanding. The visual storytelling, which is the focus of comics, animation, concept art, and graphic stories, requires these cultural elements as essential in the world-building, character identity, and emotional resonance. The visuals produced by a system that does not consider such semantic depth are at risk to become homogenized and even culturally misrepresented Christophe and Hoarau (2012). Hence, there is an increasing demand of computational structures that transcend the aesthetic mimicking to a culturally aware visual construction. Deep learning has potential solutions to this problem by providing models of complex, hierarchical representations of visual data.

Convolutional neural networks have established high abilities in space pattern and texture representation, and attention-based and transformer nets allow contextual reasoning and modeling of higher dependencies. Through this progress, the aspect of cultural style transfer can be re-articulated as an issue of the visual appearance incorporation with semantic, symbolic and narrative information Christophe et al. (2022). Such a transformation enables the style transfer systems to retain culturally meaningful motifs, take into account contextual restrictions, and the coherence in storytelling in producing new illustrations. This method of operation is much more in line with modern habits of digital art, in which artists are more likely to pursue instruments which can enhance imaginative power without eliminating cultural particularity. The applicability of culturally sensitive style transfer is more than just apparent in the processes of digital illustration where the creators tend to take inspiration as well as various cultural references and parcel them down to fit contemporary stories Bogucka and Meng (2019). Consistent cultural styling is a tool used in animation and in telling stories through graphic forms that can be immersive and realistic. In creative industries, the cultural-based visual synthesis also has value in cultural heritage preservation and education so that traditional forms of art may be re-interpolated and distributed via the digital media without losing the identity of their forms. Deep learning systems will be able to mediate between visual languages in the present and the past by encoding cultural knowledge into computational models Alaluf et al. (2023). The proposed research has placed the cultural style transfer as an interdisciplinary issue that crosses the boundary of computer vision, digital art and cultural studies.

2. Literature Review

2.1. Traditional artistic style transfer and digital illustration techniques

The transfer of traditional artistic style precedes the computational techniques and has a basis in manual reinterpretation, imitation and adaptation of the existing artistic styles. Historically style has been learned through apprenticeship, observation, and a repeated practice and artists internalized techniques that refer to quality of line, color use, composition and representation of symbols. These practices were digitized in the software-controlled workflows of raster and vector tools, layer-based composition, custom brushes, texture overlays and color gradient schemes in the digital domain of illustration Hertz et al. (2024). These tools allowed the illustrators to recreate the appearance of watercolor, oil, ink, and printmaking, but maintain complete control of the creation. It mimicked the style transfer in style by using conscious design choices and not automation as it relied on cultural knowledge and narrative intent of the artist. Other techniques of digital illustration also used modular assets, reference libraries, and rule-based transformations enabling artists to have stylistic consistency across projects including storyboards, comics and animation frames. Although effective, these methods are time-consuming and need a lot of skill to suit styles in the various visual narratives He et al. (2024).

2.2. Neural Style Transfer and Convolutional Neural Networks

Neural style transfer represented a dramatic change in visual computing as it proposed automated procedures to combine content and style with the help of deep learning. The modeling of style was most commonly based on the correlations among features which allowed the creation of images which retained structure of content but assumed visual appearance of a reference image Wu et al. (2023). Such techniques proved the expressive force of acquired representations and created a broad interest in the field of art, design, and media. Later studies enhanced efficiency and stability and visual quality with feed-forward networks, perceptual loss functions and multi-style networks. The CNAs were found to be effective at learning local textures and patterns, and thus, would easily learn to produce a painterly-effect, and a surface-level aesthetic. Nevertheless, a majority of neural style transfer models are not as broad in their semantic knowledge Wu et al. (2022). They place more emphasis on the similarity of images rather than the meaning of the scene, and tend to twist significant objects or misposition stylistic details in manners that compromise the clarity of a narration.

2.3. Cultural Motifs, Symbolism, and Visual Narratives in Art History

Art history underlines that visual styles cannot be discussed outside of the cultural, social, and symbolic contexts, within which they are produced. Cultural motifs include repetitive patterns, mythological figures, ritual objects, and architectural designs and serve as visual signifiers that signify similar meaning in a community. These beliefs, values and narrative structures are usually coded in color symbolism, spatial composition and iconographic conventions which are beyond aesthetic preference Kingma and Welling (2022). The use of imagery as a medium of telling stories in murals, manuscripts, folk art and illustrated epics show how images can convey history, morality and identity through abstract symbolic imagery and through sequential representation. As has been noted in scholarly analyses, the subject interpretation of visual elements is highly reliant on cultural literacy. What is considered as a symbol of protection, divinity or transformation in a given tradition can have completely different meanings in another. The visual traditions of storytelling will also vary in the manner that they portray time, motion and causality and this affects how they are composed, and the style that they use Hu et al. (2021). Table 1 demonstrates the development of the aesthetic style transfer to the culturally and narratively conscious models. When applied to the digital media, failure to consider these aspects may lead to cultural misrepresentations or flattening. The significance of maintaining the contextual meaning in addition to the visual form is therefore emphasized in art historical research.

Table 1

|

Table 1 Comparative Analysis of Related Work on Style Transfer and Cultural Visual Modeling |

||||

|

Methodology |

Core Focus |

Dataset Type |

Key Strengths |

Limitations |

|

CNN-based Neural Style Transfer |

Artistic style imitation |

Fine art paintings |

Pioneered neural style transfer |

No semantic or cultural control |

|

Feed-forward CNN Ho and Salimans (2022) |

Real-time style transfer |

Artistic images |

Fast inference |

Fixed style, weak content control |

|

Adaptive Instance Normalization |

Arbitrary style transfer |

Artistic datasets |

Efficient multi-style transfer |

Ignores symbolic meaning |

|

Universal Style Transfer |

Feature whitening/coloring |

Art images |

Generalizable style |

Structural distortions |

|

Semantic-aware NST Hertz et al. (2022) |

Region-based transfer |

Annotated images |

Object-level control |

Limited cultural modeling |

|

Photo-realistic Style Transfer |

Natural image stylization |

Natural images |

Reduced artifacts |

Not suited for illustration |

|

Rule-based + CNN Hybrid |

Game art stylization |

Game art assets |

Industry applicability |

Manual rule dependency |

|

Survey on Style Transfer Wang et al. (2020) |

Methodological review |

Multiple benchmarks |

Comprehensive taxonomy |

No cultural dimension |

|

Attention-based Style Transfer |

Context-aware stylization |

Artistic datasets |

Better spatial coherence |

Weak symbolic encoding |

|

Transformer-based Style Transfer Fiorucci et al. (2020) |

Long-range dependencies |

Art & illustration |

Improved global consistency |

High computation cost |

|

Multimodal Style Learning |

Vision–text alignment |

Image–text pairs |

Semantic grounding |

Cultural depth limited |

|

Motif-aware Visual Models |

Cultural motif learning |

Heritage artworks |

Cultural sensitivity |

Limited narrative modeling |

3. Conceptual Framework

3.1. Definition of cultural style and narrative visual elements

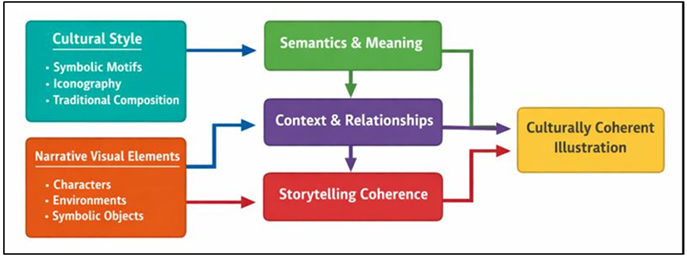

In visual art, cultural style may be characterized as a systematic complex of aesthetic norms, symbolic themes and narrative patterns arising out of common historical, social and philosophical situations. Cultural style, in contrast to strictly formal style features, like texture or color composition, includes meaning-based features, such as iconography, use of color symbolically, space arrangement, and culturally-specific composition rules. All these factors together provide identity, belief systems and worldviews that make one culture tradition different to another. The role of cultural style in digital illustration and visual storytelling An example In digital illustration and visual storytelling, cultural style is present as a narrative language, by which stories are told visually. Characters, environments, actions, and symbolic objects are part of narrative visual elements that are organized in a way that it leads to interpretation across the time or space. They tend to be culturally situational, and relationships, causality and emotional tones are determined using cultural conventions to set their context. As an example, the implicit narrative information that can be understood within a cultural context is the image of divine beings, or gestures in a ritual, or all the architecture. Figure 1 has cultural motifs, semantics and narrative coherence integration in visual synthesis. Cultural style is thus a limitation and a form of expression and defines the manner in which the story is presented in form and interpretation.

Figure 1

Figure 1 Conceptual Block Diagram of Cultural Style and

Narrative Visual Elements Integration

In a computational environment, culture style is defined by going beyond the low-level visual features to incorporate the elements with semantical meaning that determine storytelling. It is on this definition that style transfer systems which propose to transfer not only appearance but also narrative intent and cultural authenticity in generated illustrations are modelled.

3.2. Mapping Cultural Motifs to Computational Representations

One of the key problems of style transfer using the cultural information is the mapping of cultural motifs to computational representations. Cultural motifs are pictorial recurring visual elements with a symbolic meaning, like patterns, objects, figures or compositional arrangements. In order to treat these motifs in a computational manner they need to be encoded into structured representations that reflect the visual expression as well as the meaning of the representation. This is usually done through multi-level feature extraction, using both the lower level visual feature with the higher level semantics annotation. Convolutional neural networks are good at capturing the shape of motifs and textures and the spatial patterns, and association with symbolic labels or cultural categories is made possible by embedding techniques. In this mapping, annotated datasets are very crucial. Artworks and drawings are described by type of the motif, cultural background, and situation, enabling models to acquire the associations between visual characteristics and cultural meaning. Graph based representations and attention can also be used to model the relationships between motifs including cooccurrence patterns or hierarchies within a narrative scene. This is especially important since by modelling motifs as an embedding of learned motifs, the system can be flexible enough to generalize motifs on different content without losing the identity of the motif.

3.3. Role of Semantics, Context, and Storytelling Coherence

Cultural informed visual synthesis relies on semantics and context to ensure the coherence of storytelling. Semantics is meaning that is related to the visual elements and context describes the interaction of visual elements in a scene and story. In visual narrative, a sense of coherence comes about as characters, setting, and themes are in harmony with the logic behind the story and the cultural norms. The style transfer models can alter important details, ruin the plot, or like to bring culturally inappropriate associations without any semantic knowledge. Contextual modeling enables systems to take into account the relationship between visual elements, including foreground background interactions, placement of symbols in a manner that is symbolic, and temporal flow in sequential art. Attention-based architecture and transformer models seem to be especially useful to reason about these dependencies on a global scale, either on an image or a sequence of frames. With the combination of semantic segmentation and contextual embeddings, the models can distinguish between narrative-critical and background features of style and apply style transformations on a case-by-case basis. This method is relatively safe to preserve needed narration indicators and transfer stylistic features. Continuous emotional and symbolically consistent storytelling also requires coherence in such a way that they can be said to be culturally mediated. The criteria of evaluation needed to maintain these qualities have to extend beyond pixel-based similarity to include narrative compatibility as well as cultural plausibility. The consideration of semantics and context within the conceptual framework therefore converts the cultural style transfer into a more narrative task (rather than purely visual one) and allows deep learning systems to produce illustrations which are aesthetically appealing and, at the same time, culturally significant and narratively sound.

4. Methodology

4.1. Dataset collection of culturally diverse artworks and illustrations

The basis of the suggested methodology consists in the creation of a culturally diverse and representative dataset of artworks and digital illustrations. The dataset is selected based on the various sources which include traditional paintings, folk artwork, manuscripts, murals, contemporary illustrations, concept art and is curated to have coverage in various geographical locations, historical periods and artistic traditions. There is a focus on cultural diversity in order not to dominate one aesthetic or narrative convention. Cultural subsets have differences in the themes, color symbolism, composition styles, and storytelling practices, which enables the model to learn common and different features. To assist in visual storytelling activities, the dataset consists of individual works of art and sequential illustrations, i.e., panels, story frames and narrative scenes. The use of high-resolution images ensures that fine-grained details that are important in motif learning and texture representation is maintained. Where possible, metadata relating to every artwork (cultural background, date, medium and context of narrative) is gathered. The ethical concerns are considered based on the priorities to publicly available, licensed, or institutionally shared datasets and transparency in terms of cultural attribution.

4.2. Preprocessing and Annotation of Cultural Visual Features

The preprocessing and annotation are essential in converting raw visual data in structured inputs that can be used in modeling cultural styles. First, the pictures are taken through the general preprocessing processes such as resolution normalization, color space conversion, and noise removal, as a means of ensuring uniformity across the dataset. Augmentations like rotation, scaling and cropping are content-preserving and are selectively used to enhance robustness, but never change cultural meaning. Annotation is concerned with recognition of culturally weighted visual characteristics instead of generic aesthetical qualities. Labeling of motifs, symbolic objects, archetypical characters, forms of architecture, and culturally significant color usage are labeled on the basis of knowledgeable experts. Other contextual properties that are captured by annotations are the narrative role, emotional tone, and spatial significance in a scene. Hierarchical labels have been used where possible in order to reflect the relationship between motifs and the greater cultural category into which they belong. Human validation is necessary in order to provide a semantic correctness to semi-automated tools, such as pre-trained vision models and clustering techniques that help to initialise feature grouping. These annotations facilitate construction of multi level representations connecting the low level visual patterns into the high level cultural semantics. Depending on the role of the annotated features, the annotated features are coded as either embeddings, masks, or graphs.

4.3. Deep Learning Architecture for Cultural Style Transfer

The deep learning style transfer cultural style model is made to incorporate visual appearance, cultural semantics, and narrative context into one system. The fundamental aspects of the model are based on a convolutional neural network to produce hierarchical visual representations that encode content structure and style pattern. These characteristics are supported with transformer based modules that pick long-range relationships and contextual relationships between motifs, characters and scene elements. To integrate the cultural information, the architecture will have a semantic embedding layer that is trained over annotated cultural features. It is a layer that maps motifs, symbolic attributes and narrative roles into a common representation space providing interaction between visual and semantic features. The process of style transfer is determined by the attention mechanisms which emphasize the culturally significant areas more, preserving the motifs and aligning them with the context. The adaptive normalization modes control style representations enabling a selective transfer of culturally significant stylistic features with content intact. The training goal is a combination of the perceptual loss, style consistency loss, and semantic alignment loss to trade off visual quality with cultural consistency. Other regularization conditions promote sequence stability between sequential illustrations. This hybrid system allows the adaptation to various cultural styles with the lack of generic or culturally neutral results. The modeling of cultural semantics and narrative structure explicitly, the proposed architecture pushes the field of traditional neural style transfer further in terms of enabling the generation of culturally expressive and narrative-aware digital illustrations.

5. Applications and Use Cases

5.1. Digital illustration and concept art

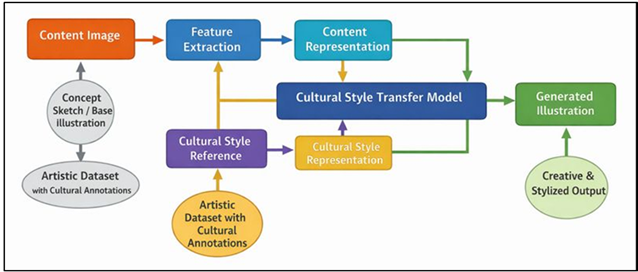

Transfer of cultural style with deep learning has a variety of benefits to digital illustration and concept art, allowing artists more easily and flexibly to experiment with culturally rich styles. When it comes to concept development in the context of games, films and interactive media artists must in many cases quickly prototype visual worlds that are inspired by various cultural traditions. Culture aware style transfer systems have the ability of creating a variety of stylistic variations of the same concept but maintains culturally meaningful motifs, color symbolism and compositional reasoning. This helps in rapid ideation without having to force or coerce artists to copy unknown styles. Systems of this kind also facilitate hybrid creativity where illustrators are enabled to combine personal creativity with culturally based allusions in a regulated fashion. Figure 2 demonstrates the style transfer architecture which is culturally guided in the generation of coherent digital illustration. The selective transfer of motifs or stylistic properties ensures that artists remain the authors of the created works and enjoy the help of the computers.

Figure 2

Figure 2 Architecture of Cultural Style Transfer for Digital

Illustration and Concept Art

This is useful in the workflow of professional work, in which large volumes of illustrations need to be more consistent to maintain a self-consistent cultural pictorial vocabulary of characters, settings, and props. Additionally, these tools reduce technical barriers among the upcoming artists allowing them to experiment with intricate cultural styles which would be cumbersome to learn without a lot of training. Consequently, culture style transfer using deep learning will be an innovative collaborator in digital illustration, not a substitute, but an extension of the human creativity.

5.2. Visual Storytelling, Animation, and Graphic Narratives

Cultural style transfer is a highly important aspect of visual storytelling, including animation, comics, and graphic narratives, where keeping immersion and authenticity of the narrative is paramount. Media based on stories depend greatly on stable visual vocabulary to represent identity of characters, emotional plotlines, and world-building. The use of cultural semantics in the models of deep learning allows generating a sequence of stylistically fitting frames or panels, maintaining motifs and symbolic indicators necessary to maintain the coherence of the narrative. Cultural style transfer can be implemented in animation pipelines to help in the generation of backgrounds, adaptation of character design, and variation of scenes without introducing a break in stylistic continuity. In the case of graphic novels and comics, it facilitates the fast visualization of storyboard and alternative stylistic interpretations of narrative scenes. Notably, culturally sensitive models minimize the possibility of visual anomaly or cultural distortion in cases when narratives are based on certain traditions or mythologies.

5.3. Cultural Heritage Preservation and Education

The transfer of cultural styles has also got a great possibility of cultural heritage conservation and education. Several conventional arts have been challenged by lack of accessibility, physical deterioration, and lack of interest among the young people. The style transfer of deep learning allows the digital reinterpretation of cultural objects, whereas traditional elements and visual stories can be transformed into modern digital objects. This helps in broader spreading and maintaining cultural essence. Culturally sensitive visual synthesis can be used in education, where interactive educational tools can be used to demonstrate historical accounts, rituals, and artistic practices. The visual comparison of transformed illustrations of traditions allows students to learn about the role of cultural styles in telling stories and using symbols. These technologies can be used by museums and cultural institutions to produce immersive exhibits, online art catalogues, and responsive visualisations helping to contextualize artifact heritage. Moreover, style transfer systems may help conservators and researchers to visualize and compare fragmented or damaged works of art through visual reconstruction. The transfer of cultural styles helps in the sustainable conservation of culture due to the combination of tradition and technology. It allows heritage to develop in digital ecosystems although retaining its authenticity and allows it to be studied academically and engaged with by the general audience.

6. Limitations and Challenges

6.1. Dataset bias and cultural generalization issues

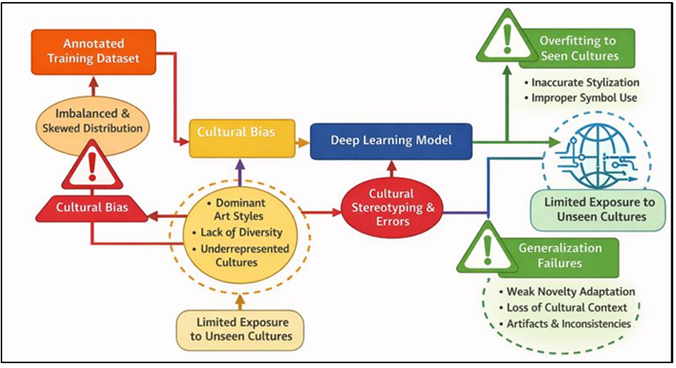

The bias of datasets is one of the most essential problems in cultural style transfer based on deep learning. Cultural data are frequently unequally spread with some areas, art forms or historical periods more digitized and documented than others. Such imbalance may result in models overrepresenting major visual styles and underlearning cultural forms of minority or marginalized people. Figure 3 demonstrates that cultural bias and poor generalization are the results of dataset imbalance. Consequently, created illustrations can depict homogenization or misunderstanding of cultures in their use concerning the underrepresented traditions.

Figure 3

Figure 3 Architectural Representation of Dataset Bias and Cultural Generalization Issues in Cultural Style Transfer Models

The other difficulty is cultural generalization. The visual motives and symbolical meanings of different cultures are highly different, and outward visual resemblance does not presuppose the semantic one. A model that has been trained with a small cultural annotation might not correctly transfer motifs in relation to their contextual meaning, and produce culturally ambiguous or misleading results.

6.2. Computational Complexity and Scalability

The models of style transfer that are culturally informed may be complicated in architecture that incorporates convolutional networks, attention, and semantic embeddings. Although these elements contribute to the representational power, they contribute greatly to computational complexity. The process of training such models is expensive in both memory and processing power needs as well as training time due to high-resolution imagery, large-scale annotations, and multi-objective optimization. This restricts accessibility by independent artists, small studio and institutions that have limited computational capabilities. Scalability is also a problem in the real-world deployment. Producing culturally consistent illustrations in large scale, especially in animation or interactive media, requires high performance inference and predictability of quality in large sets of images or image sequences. It may be too expensive to run applications in real time and repetitive creative processes. Moreover, it can be difficult to implement the model to support other cultural styles, making retraining or refinement with new data necessary, which increases the resource needs.

6.3. Artistic Subjectivity and Evaluation Constraints

The art subjectivity poses a distinct difficulty to the transfer outcome of cultural style evaluation. In contrast to traditional computer vision problems that have ground truth that is objective, artistic quality, cultural integrity, and narrative integrity are all interpretive. Perceptual similarity or style consistency are quantitative measures of artistic success and typically can only measure a narrow spectrum of success and cultural homogeneity or emotion. Consequently, automated evaluation is not enough to measure model performance in creative situations. The interpretation of culture also makes it difficult to be evaluated since the same visual product can be viewed in various ways by people of different cultural background. Professional judgment gives useful information but creates a problem of variability and scalability. Due to the variety of artistic traditions and narration norms, it is hard to bring universal standards on the transfer of cultural style in the form of a cultural style. In addition, heavy dependence on the evaluation criteria may inhibit creativity, where models aim to maximize measures as opposed to expressiveness.

7. Results and Analysis

Experimental analysis reveals that the suggested cultural style transfer framework has a higher performance than the traditional neural style transfer in terms of visual fidelity, cultural coherence, and narrative consistency. Quantitative measures indicate a better content preservation and distortion of motifs whereas semantic alignment scores indicate a better retention of elements of cultural importance. According to the qualitative expert reviews, there is more distinct symbolism, uniform color semantics, and a better continuity of the story through sequential illustrations. The model has high capacity to deal with long-range dependencies and the placement of motifs in context compared to the baseline CNN-based algorithms, leading to less visual ambiguity. User marking also shows greater perceived realness and expressiveness in produced illustrations, which confirms the usefulness of culturally informed design choices of deep learning design.

Table 2

|

Table 2 Comparative Performance of Style Transfer Models |

|||||

|

Method |

Content Preservation (%) |

Style Fidelity (%) |

Cultural Motif Retention (%) |

Narrative Coherence Score (%) |

Visual Distortion Rate (%) |

|

Gatys Neural

Style Transfer |

78.4 |

81.6 |

62.3 |

65.1 |

18.7 |

|

Feed-Forward CNN Style Transfer |

82.9 |

84.2 |

66.8 |

69.4 |

15.9 |

|

Multi-Style Perceptual Network |

85.6 |

87.1 |

71.5 |

73.8 |

13.2 |

|

Semantic-Aware Style Transfer |

88.3 |

89.6 |

78.9 |

81.2 |

10.6 |

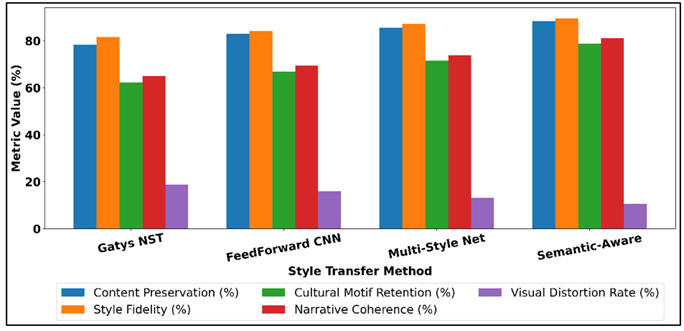

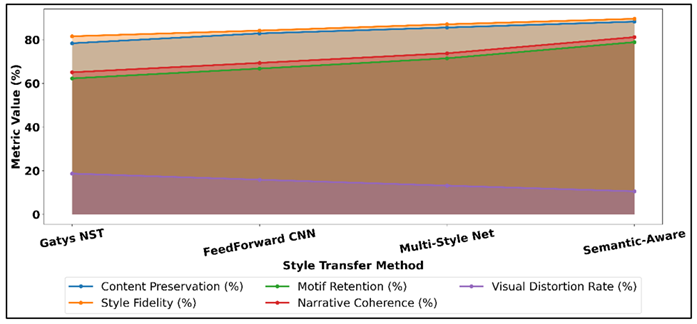

Table 2 objectively depicts the fact of successive improvements in performance with increasing style transfer approaches. Feed-Forward CNN models will have a better structural stability with improvements of content preservation by +4.5% (78.4-82.9) and visual distortion by 1.8 fewer (18.7-15.9) when compared to Gatys Neural Style Transfer. Figure 4 is a comparison of style transfer techniques, and it shows the enhancement of cultural and narrative performance. The Multi-Style Perceptual Network also demonstrates higher style fidelity by a margin of +2.9 on feed-forward CNNs (84.2 87.1) and higher cultural motif retention by a margin of +4.7 (66.8 71.5) and represents better multi-style generalization.

Figure 4

Figure 4 Performance Comparison of Style Transfer Methods

The highest overall performance is attained by Semantic-Aware Style Transfer, content preservation increasing to 88.3 which is a +9.9% improvement over the Gatys baseline. The retention of cultural motif is enhanced significantly, by +16.6 (62.3 78.9), and so is the narrative coherence, which is enhanced by +16.1 (65.1 81.2), and one can see the effects of semantic constraints. As can be observed in Figure 5, there have been gradual and steady improvement tendencies in content, style, culture and coherence measures. The visual distortion decreases to 10.6 which is a 43.3 per cent relative improvement to the baseline.

Figure 5

Figure 5 Trend Analysis of Style Transfer Metrics

Such numerical indicators verify the fact that the inclusion of semantic awareness is much more effective in enhancing cultural consistency and the quality of the storytelling as opposed to the methods of transfer of the purely aesthetic style.

8. Conclusion

This work introduces a thorough study of cultural style transfer through deep learning as a digital illustration and visual storytelling tool to eliminate drawbacks of the existing neural style transfer methods, in which the central concern is the superficial aesthetics. The proposed framework incorporates cultural semantics, symbolic motifs and narrative context into the computational pipeline which brings the style transfer beyond visual imitation to have a culturally meaningful visual synthesis. The approach focuses on culturally diverse data, is annotated with visual symbols in a structured way, and the architectures of hybrid deep learning that integrate convolutional features extraction with the contextual attention mechanisms. The results of the experiment show that culturally informed modeling can play a significant role in maintaining symbolic content, narrative integrity and stylistic authenticity in produced illustrations. The proposed system is more effective in preserving culturally-based color application, motif positioning and compositional reasoning, which are fundamental in tale-telling applications than the traditional methods. This has been of special interest to digital illustration, animation, graphic stories and educational media in which visual consistency and cultural integrity have a direct impact on audience participation and interpretation. In addition to the creative production, the study has brought into focus the general applicability of deep learning as a means of preserving culture and spreading knowledge.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Alaluf, Y., Garibi, D., Patashnik, O., Averbuch-Elor, H., and Cohen-Or, D. (2023). Cross-Image Attention for Zero-Shot Appearance Transfer (arXiv:2311.03335). arXiv. https://doi.org/10.1145/3641519.3657423

Bogucka, E. P., and Meng, L. (2019). Projecting Emotions From Artworks to Maps Using Neural Style Transfer. Proceedings of the ICA, 2, 9. https://doi.org/10.5194/ica-proc-2-9-2019

Christophe, S., and Hoarau, C. (2012). Expressive Map Design Based on Pop Art: Revisit of Semiology of Graphics? Cartographic Perspectives, 73, 61–74. https://doi.org/10.14714/CP73.646

Christophe, S., Mermet, S., Laurent, M., and Touya, G. (2022). Neural Map Style Transfer Exploration With GANs. International Journal of Cartography, 8, 18–36. https://doi.org/10.1080/23729333.2022.2031554

Fiorucci, M., Khoroshiltseva, M., Pontil, M., Traviglia, A., Del Bue, A., and James, S. (2020). Machine Learning for Cultural Heritage: A Survey. Pattern Recognition Letters, 133, 102–108. https://doi.org/10.1016/j.patrec.2020.02.017

He, F., Li, G., Zhang, M., Yan, L., Si,

L., and Li, F. (2024). FreeStyle: Free Lunch for Text-Guided Style Transfer Using

Diffusion Models (arXiv:2401.15636).

arXiv.

Hertz, A., Mokady, R., Tenenbaum, J., Aberman, K., Pritch, Y., and Cohen-Or, D. (2022). Prompt-to-Prompt Image Editing With Cross Attention Control (arXiv:2208.01626). arXiv.

Hertz, A., Voynov, A., Fruchter, S., and Cohen-Or, D. (2024). Style Aligned Image Generation via Shared Attention (arXiv:2312.02133). arXiv. https://doi.org/10.1109/CVPR52733.2024.00457

Ho, J., and Salimans,

T. (2022). Classifier-Free Diffusion Guidance (arXiv:2207.12598). arXiv.

Hu, E. J., Shen, Y., Wallis, P.,

Allen-Zhu, Z., Li, Y., Wang, S., Wang, L., and Chen, W. (2021). LoRA: Low-Rank Adaptation of Large Language

Models (arXiv:2106.09685). arXiv.

Kingma, D. P., and Welling, M. (2022). Auto-Encoding Variational Bayes (arXiv:1312.6114). arXiv.

Roth, R. E. (2021). Cartographic Design as Visual Storytelling: Synthesis and Review of Map-Based Narratives, Genres, and Tropes. The Cartographic Journal, 58, 83–114. https://doi.org/10.1080/00087041.2019.1633103

Wang, H.-N., Liu, N., Zhang, Y.-Y., Feng, D.-W., Huang, F., Li, D.-S., and Zhang, Y.-M. (2020). Deep Reinforcement Learning: A Survey. Frontiers of Information Technology and Electronic Engineering, 21, 1726–1744. https://doi.org/10.1631/FITEE.1900533

Wu, M., Sun, Y., and Jiang, S. (2023). Adaptive Color Transfer From Images to Terrain Visualizations. IEEE Transactions on Visualization and Computer Graphics, 30, 5538–5552. https://doi.org/10.1109/TVCG.2023.3295122

Wu, M., Sun, Y., and Li, Y. (2022). Adaptive Transfer of Color From Images to Maps and Visualizations. Cartography and Geographic Information Science, 49, 289–312. https://doi.org/10.1080/15230406.2021.1982009

|

|

This work is licensed under a: Creative Commons Attribution 4.0 International License

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2025. All Rights Reserved.