ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


Evaluating Originality in AI-Generated Contemporary Works

Gurpreet Kaur 1, Manikandan Jagarajan 2, Dr. Jyoti Saini 3, Deepak Bhanot 4, Darshana Prajapati 5, Bhupesh Suresh Shukla 6

1 Associate Professor, School of Business Management, Noida International University, India

2 Assistant Professor, Department of Computer Science and Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission’s Research Foundation (DU), Tamil Nadu, India

3 Associate Professor, ISDI - School of Design and Innovation, ATLAS Skill Tech University, Mumbai, Maharashtra, India

4 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

5 Assistant Professor, Department of Interior Design, Parul Institute of Design, Parul University, Vadodara, Gujarat, India

6 Department of Engineering, Science and Humanities, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

ABSTRACT

The rapid development of generative artificial intelligence has considerably altered contemporary artistic practice, raising essential questions about the originality, authorship, and artistic value of AI-generated works. Although AI systems can produce visually attractive and stylistically varied results, a more pressing problem is how to judge whether these outputs are original creations or merely recombinations of learned information. This study proposes a holistic analysis of originality in contemporary AI art that combines computational evaluation with human analysis. It frames originality as a multidimensional phenomenon encompassing novelty, divergence from tradition, creative intent, and contextual relevance within cultural and historical reference spaces. The proposed framework draws on theories of computational creativity and human–AI co-creativity, and it also considers shared authorship models in which originality emerges from the interaction among artists, datasets, algorithms, and curatorial choices. An AI-based originality assessment model is presented that combines visual, semantic, stylistic, and contextual feature extraction with embedding-based similarity and divergence analysis. Quantitative measures, namely novelty scores, stylistic distance measures, and entropy-based diversity measures, capture the structural and statistical aspects of originality. These computational measures are complemented by qualitative assessments from art experts and curators and by audience perception studies, which address the subjective and interpretive aspects that most automated methods fail to capture. A comparative study of AI-based evaluation and traditional originality assessment methods shows the advantages and limitations of the former.


Received 18 June 2025; Accepted 02 October 2025; Published 28 December 2025

Corresponding Author: Gurpreet Kaur, gurpreet.kaur@niu.edu.in

DOI: 10.29121/shodhkosh.v6.i5s.2025.6925

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: AI-Generated Art, Originality Evaluation, Computational Creativity, Human–AI Co-Creativity, Novelty Metrics, Contemporary Art

1. INTRODUCTION

The advent of artificial intelligence as a creative agent has significantly changed contemporary creative practice. Generative models, including generative adversarial networks (GANs), diffusion models, and transformer-based models, allow machines to create paintings, photographs, installations, music, and multimedia works that mimic, recreate, or even exceed some of the formal qualities of human-made artwork. As AI-generated art becomes increasingly visible in galleries, auctions, online platforms, and educational settings, the debate on the nature of creativity, authorship, and value has ceased to be hypothetical and has become urgent. Among these questions, originality is one of the most debated and difficult ideas in the analysis of contemporary AI-generated works. Traditional concepts of originality in art have been linked to human intentionality, individuality, and the breaking of established styles or norms, because art-historical models tend to define originality through innovation, contextual disruption, or the creation of new aesthetic discourses Abbas et al. (2024). AI systems put pressure on these assumptions because they operate through statistical learning from large collections of existing artworks, cultural images, and stylistic patterns. This has prompted serious debate over whether AI-made outputs can be considered genuinely original or are merely sophisticated recombinations of learned material. The challenge concerns not only the technical operation of AI models but also the philosophical and cultural understanding of creativity itself. Current discourse on AI originality tends to be polarized. At one end, AI-generated art is dismissed as derivative, lacking intentional creation and subjective experience Alexander et al. (2023).

At the other end, it is hailed as a new form of creativity that stretches art beyond the limits of human ability. Both positions reveal the inadequacy of traditional originality standards when applied directly to AI-based creative systems. Consequently, there is a growing demand for structured, transparent, and interdisciplinary frameworks that can assess originality in AI-generated works without relying on purely human-centered or purely computational assumptions. Assessing originality in AI-generated contemporary art requires redefining originality as a multidimensional phenomenon Davar et al. (2025). Rather than a single quality, originality should be understood through interacting dimensions, including novelty, contrast with previously learned styles, situational appropriateness, cultural allusion, and the contribution of human–AI collaboration. In much current practice, artists curate datasets, craft prompts, fine-tune models, and select outputs, which makes originality an emergent property of co-creative systems rather than an act of individual authorship. This shift requires evaluators to move beyond the binary of original versus copied and to make more nuanced judgments that draw on both computational and human-perceived evaluation Al-Zahrani (2024). A further issue is the gap between algorithmic measures of similarity and human perceptions of originality. Although AI systems can measure distances in embedding spaces or detect stylistic overlap with training data, these metrics do not capture the full picture of how audiences, critics, and curators perceive originality.

2. Related Work and Literature Review

2.1. Studies on AI-Generated Art and Creative Systems

Research on AI-generated art has grown rapidly as generative adversarial networks, variational autoencoders, diffusion models, and large transformer-based models capable of producing high-quality visual and multimedia art have become available. Early work focused on technical feasibility, with machines generating images, paintings, and designs that imitated existing artistic styles. Later research shifted toward viewing AI as a creative system rather than a tool, exploring the role of algorithms in ideation, variation, and aesthetic exploration Haenlein and Kaplan (2019). Scholars have examined whether the artist acts as creator, system designer, or collaborator in AI-generated artworks displayed in auction houses and galleries. Studies of creative systems distinguish several modes of human–AI interaction, ranging from fully autonomous generation to mixed-initiative co-creation and human-led, prompt-based workflows Zhao et al. (2024). These researchers argue that creativity emerges from the interplay of datasets, algorithms, and human decision-making rather than from the AI model alone. Nonetheless, many authors acknowledge the limitations of current systems, including their reliance on training data and their lack of semantic meaning or purpose. Consequently, originality is usually discussed only indirectly, in terms of the stylistic novelty or variety of outputs rather than deeper conceptual inventiveness Yeo (2023). Although these works establish AI-generated art as a valid field of creativity, they also reveal a lack of standardized frameworks for assessing originality beyond surface-level novelty and technical skill.

2.2. Computational Creativity and Originality Metrics in Prior Research

Computational creativity research has long sought to formalize creativity through standardized criteria, often treating originality as a measurable quantity grounded in novelty, surprise, and value. Existing literature proposes quantitative measures including statistical novelty scores, distance measures in feature or embedding space, entropy-based measures of diversity, and rarity-based measures relative to training data. In visual art, originality is commonly measured as stylistic distance using convolutional neural network features, latent-space interpolation, or clustering techniques that detect deviations from learned patterns Weber-Wulff et al. (2023). These approaches are attractive because they are scalable and repeatable, which makes them useful for assessing large bodies of AI-generated work. Nonetheless, current metrics have significant limitations. Novelty measures can reward random or incoherent output, and similarity measures can penalize meaningful cultural and stylistic reference. In addition, most computational methods presuppose a fixed reference set and ignore the dynamic character of artistic traditions and the redefinition of context. Some researchers address this problem with semantic embeddings or cross-modal representations that connect visual features with textual descriptions or concept labels Ibrahim (2023). Others propose hybrid assessment systems that combine novelty with aesthetic or coherence ratings.
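A rarity-based measure of the kind mentioned above can be sketched as follows. This is a minimal illustration, not a method taken from the cited studies: it assumes artworks are already represented as fixed-length embedding vectors, uses the mean Euclidean distance to the k nearest neighbours as an isolation score, and reports the candidate's percentile among the reference works' own leave-one-out scores. The function names, the random stand-in embeddings, and k = 5 are hypothetical.

```python
import numpy as np

def knn_distance(query, reference, k=5, exclude_self=False):
    """Mean Euclidean distance from `query` to its k nearest rows of `reference`."""
    d = np.sort(np.linalg.norm(reference - query, axis=1))
    if exclude_self:
        d = d[1:]  # query is itself a reference row: drop its zero self-distance
    return float(d[:k].mean())

def rarity_percentile(candidate, reference, k=5):
    """Fraction (0..1) of reference works that are less isolated than the candidate."""
    baseline = np.array(
        [knn_distance(r, reference, k, exclude_self=True) for r in reference]
    )
    return float((baseline < knn_distance(candidate, reference, k)).mean())

# Hypothetical usage with random stand-in embeddings
rng = np.random.default_rng(0)
reference = rng.normal(size=(200, 16))
near_copy = reference[0] + 0.01 * rng.normal(size=16)  # almost a training item
outlier = np.full(16, 5.0)                              # far from every reference work
print(rarity_percentile(near_copy, reference), rarity_percentile(outlier, reference))
```

A near-copy of a training item lands low in the distribution and a distant point lands at the top. As the paragraph notes, a high rarity value alone cannot tell meaningful novelty apart from incoherent output.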

2.3. Human Perception Studies on AI Originality

Research on human perception offers critical insight into how audiences, artists, and experts perceive originality in AI-generated art. Empirical surveys, controlled experiments, and exhibition-based evaluations indicate that contextual details strongly shape perceptions of originality, including viewers' awareness that a work was produced by AI, the extent of human contribution, and the circumstances of its creation. Researchers have found that audiences tend to find AI-generated content visually attractive but question its originality, especially when told about its relationship to training data Gaumann and Veale (2024). Expert-centered studies of curators and art critics reveal more nuanced judgments. Rather than judging originality by form alone, professionals focus on conceptual framing, cultural relevance, and the artist's intentional use of AI as a medium. Works produced through intentional human–AI collaboration in co-creative settings are perceived as more innovative than entirely human creations. Cross-cultural research further shows that originality ratings depend on cultural background, reflecting differing attitudes toward tradition, imitation, and technological authorship Perkins (2023). Table 1 summarizes approaches to assessing the originality of AI-generated contemporary artworks. These findings reveal a lack of correlation between algorithmic originality measures and human aesthetic preference. Human judgments combine emotional response, symbolic significance, and socio-historical positioning, dimensions that are hard to define computationally.

Table 1

Table 1 Related Work on Evaluating Originality in AI-Generated Contemporary Works

| AI Technique Used | Art Domain | Originality Definition | Evaluation Method | Metrics Used | Limitations |
|---|---|---|---|---|---|
| Evolutionary Algorithms | Generative Art | Novelty + Value | Computational + Expert Review | Novelty Score | Limited to rule-based systems |
| Creative AI Systems Waltzer et al. (2024) | Visual Art | Surprise and Value | Rule-based Evaluation | Surprise Index | Weak human perception linkage |
| GAN (AICAN) | Fine Art | Stylistic Innovation | Style Space Analysis | Style Distance | Context ignored |
| ML-based Generative Models | Digital Art | Conceptual Novelty | Critical Analysis | Qualitative Review | No numeric metrics |
| Conceptual AI Models | Computational Creativity | Psychological Novelty | Theoretical Framework | Conceptual Metrics | Lacks operationalization |
| CNN + Clustering | Visual Design | Visual Novelty | Embedding Similarity | Cosine Distance | Style bias present |
| Co-Creative AI Tools | Interactive Art | Emergent Originality | User Studies | Engagement Scores | Subjective outcomes |
| VAE + GAN Ardito (2025) | Abstract Art | Statistical Novelty | Distribution Analysis | Entropy | Weak semantic meaning |
| AI Media Systems | New Media Art | Cultural Originality | Media Analysis | Contextual Indicators | Non-computational |
| Multimodal Transformers Birks and Clare (2023) | Visual–Text Art | Semantic Originality | Cross-modal Evaluation | Embedding Alignment | Dataset dependent |
| Diffusion Models | Generative Painting | Divergence from Style | Style Manifold Analysis | KL Divergence | High compute cost |
| Human–AI Co-Creation | Contemporary Art | Process-Based Originality | Expert + Audience Review | Creativity Index | Limited scale |
| Vision–Language Models Sukhera (2022) | AI Art Systems | Contextual Novelty | Hybrid Evaluation | Novelty + Perception | Interpretability challenges |

3. Conceptual Framework for Originality Evaluation

3.1. Dimensions of Originality: Novelty, Divergence, Intent, and Context

The originality of contemporary AI-generated works cannot be measured by a single criterion; it arises from the combination of several dimensions. Novelty is the extent to which a work introduces forms, patterns, or ideas that are statistically or perceptually new relative to existing work. Computationally, novelty is frequently quantified in feature or embedding space. Novelty alone is insufficient, however, since arbitrary variation can appear new without being creatively significant. Divergence complements novelty by evaluating the extent to which a work departs from conventional styles, genres, or learned distributions while retaining internal consistency Das Deep et al. (2025). Divergence highlights intentional deviation rather than random difference. Intent introduces a crucial human dimension. In AI-generated art, intent is not necessarily embedded in the algorithm but can reside in the decisions of artists, designers, or curators, such as dataset selection, prompt engineering, model configuration, or output curation. Intent should therefore be assessed by interpreting how AI is applied as a tool to achieve conceptual or expressive objectives. Context situates originality within its cultural, social, and historical setting. The originality of an artwork is shaped by its connection to contemporary discourse, previous artistic movements, and the conditions under which it is presented. Contextual originality acknowledges that reusing or reinterpreting existing forms can be original when they are placed in a new conceptual or cultural context. Together, these dimensions form a richer picture that goes beyond reductive measures of novelty and frames originality within AI-based creative practice.

3.2. Human–AI Co-Creativity and Shared Authorship Models

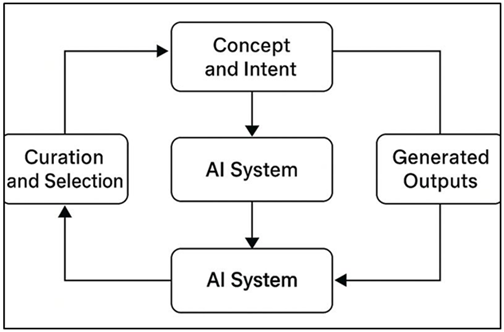

Human–AI co-creativity has become a paradigm of contemporary AI-generated art that challenges the tradition of individual authorship. Co-creativity treats AI neither as an autonomous creator nor as a passive tool; instead, artistic production is viewed as an interactive process in which human and algorithmic participants play separate but mutually dependent roles. Artists define conceptual intent, curate datasets, design prompts, and select outputs, whereas AI systems generate variations, explore latent spaces, and surface unexpected possibilities. Figure 1 shows the human–AI co-creative workflow supported by the shared authorship model. In this model, originality arises from the dynamics of interaction rather than from either agent alone.

Figure 1

Figure 1 Human–AI Co-Creative Workflow and Shared Authorship Model

Shared authorship models acknowledge that creative authority is distributed among more than two actors, including programmers, dataset contributors, artists, and even institutions that shape the technological and cultural landscape. This dispersion complicates the evaluation of originality, because creative choices can no longer be traced to a single source. Some frameworks suggest assessing originality at the system level, treating the human–AI workflow as the creative unit. Others stress that the artist's deliberate framing of AI processes is the main locus of originality. Notably, co-creative models also raise ethical and legal questions of credit, ownership, and accountability. For the purpose of assessing originality, shared authorship allows the combination of human intent and algorithmic capability to be judged together. This perspective associates originality with process, interaction, and creative strategy, not only with the characteristics of the final output.

3.3. Cultural, Stylistic, and Historical Reference Spaces

Contemporary AI-generated works are necessarily situated within complex cultural, stylistic, and historical reference spaces derived from training data and artistic traditions. These reference spaces determine both the opportunities for and the limits of originality. Stylistic reference spaces comprise the visual languages, genres, and formal conventions that AI models acquire by learning from existing artworks. In this sense, originality means moving within, combining, or transforming these stylistic coordinates rather than operating entirely outside them. Excessive similarity can indicate imitation, whereas meaningful transformation can indicate creative reinterpretation. Cultural reference spaces involve the symbolic meanings, values, and narratives that affect the perception of originality. Training AI systems on international datasets introduces a variety of cultural elements, raising questions of appropriation, authenticity, and contextual interpretation. Determining originality therefore requires sensitivity to cultural specificity and to the ethics of cross-cultural synthesis. Historical reference spaces place AI-generated works within a broader art-historical trajectory. What is new in one period or society may be traditional in another.
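The observation that what is new in one period may be traditional in another can be made concrete by computing novelty against a period-restricted reference set. The sketch below is illustrative only: it assumes each reference work has an embedding and a year, measures novelty as the mean cosine distance to the k nearest works, and the helper `period_novelty` and the toy vectors are hypothetical.

```python
import numpy as np

def period_novelty(candidate, embeddings, years, before=None, k=3):
    """Mean cosine distance from `candidate` to its k nearest reference works.

    If `before` is given, only works dated earlier than that year are used,
    so novelty is judged against a particular historical reference space.
    """
    mask = np.ones(len(years), dtype=bool) if before is None else years < before
    ref = embeddings[mask]
    ref = ref / np.linalg.norm(ref, axis=1, keepdims=True)
    c = candidate / np.linalg.norm(candidate)
    dist = 1.0 - ref @ c
    return float(np.sort(dist)[:k].mean())

# Toy reference space: early works point one way, later works another
embeddings = np.array([
    [1.0, 0.1, 0.0], [1.0, 0.0, 0.1], [1.0, 0.05, 0.05],  # early works
    [0.1, 1.0, 0.0], [0.0, 1.0, 0.1], [0.05, 1.0, 0.05],  # later works
])
years = np.array([1900, 1910, 1920, 1990, 2000, 2010])
candidate = np.array([0.0, 1.0, 0.0])

print(period_novelty(candidate, embeddings, years, before=1950))  # against early works only
print(period_novelty(candidate, embeddings, years))               # against the full corpus
```

The same candidate scores as highly novel against the early reference space and as conventional against the full corpus, which is the relativity the paragraph describes.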

4. Proposed AI-Based Originality Assessment Model

4.1. System Architecture and Evaluation Pipeline

The proposed AI-based originality evaluation model is a modular, multi-stage framework that combines computational assessment with human-centered assessment. Its input layer ingests AI-generated artworks together with related metadata, such as prompts, model parameters, dataset references, and the context of creation. These inputs are preprocessed to standardize formats and enable cross-modal analysis. The core assessment pipeline consists of parallel analytical modules that address the visual, semantic, stylistic, and contextual aspects of novelty. A feature extraction layer applies modality-specific deep learning models to each artwork and produces structured representations suitable for comparison and interpretation. These representations are passed to similarity and divergence analysis modules that compute quantitative originality measures against reference datasets and stylistic corpora. An aggregation layer then combines these indicators into composite originality scores while preserving transparency by retaining interpretable sub-metrics.
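The pipeline described above can be sketched as a set of independent scoring modules whose outputs are kept side by side and only then aggregated. This is a minimal sketch under stated assumptions: the module functions, the sub-metric names, and the equal weights are hypothetical placeholders, because the aggregation formula is not specified here; real modules would wrap the feature extraction and similarity/divergence steps.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Module = Callable[[dict], float]  # takes a preprocessed artwork record, returns a score in [0, 1]

@dataclass
class OriginalityReport:
    sub_metrics: Dict[str, float]  # kept for transparency, one entry per module
    weights: Dict[str, float]      # hypothetical aggregation weights

    @property
    def composite(self) -> float:
        """Weighted mean of the sub-metrics."""
        total = sum(self.weights.values())
        return sum(self.sub_metrics[name] * w for name, w in self.weights.items()) / total

def preprocess(raw: dict) -> dict:
    """Standardize metadata keys (e.g. 'Prompt' -> 'prompt') before analysis."""
    return {key.strip().lower(): value for key, value in raw.items()}

def run_pipeline(raw: dict, modules: Dict[str, Module], weights: Dict[str, float]) -> OriginalityReport:
    artwork = preprocess(raw)
    # Each module scores one aspect independently of the others.
    scores = {name: module(artwork) for name, module in modules.items()}
    return OriginalityReport(sub_metrics=scores, weights=weights)

# Hypothetical modules that simply read precomputed scores from the record
aspects = ("visual", "semantic", "stylistic", "contextual")
modules = {a: (lambda art, a=a: art[a]) for a in aspects}
weights = {a: 1.0 for a in aspects}
report = run_pipeline({"Visual": 0.8, "Semantic": 0.6, "Stylistic": 0.7, "Contextual": 0.5}, modules, weights)
print(report.sub_metrics, round(report.composite, 3))
```

Keeping `sub_metrics` on the report, rather than returning only the composite, is what lets a reviewer see which aspect drives a given score.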

4.2. Feature Extraction: Visual, Semantic, Stylistic, and Contextual Features

The originality assessment model relies on feature extraction to capture the various dimensions of AI-generated artworks. Visual features capture the formal properties of each work and support low- and mid-level analysis of form and aesthetic arrangement. Semantic features go beyond physical appearance by connecting artworks to concepts, symbols, or narratives. They are obtained with multimodal models that align images with textual descriptions, prompts, or artist statements, producing shared embedding representations. Stylistic features concern artistic styles, genres, and influences. Style encoders analyze properties such as brushstroke, tonal rhythm, or geometric arrangement, and the model uses these to place each artwork within a stylistic manifold. Rather than treating style as a categorical label, the system treats it as a continuous space, which allows stylistic proximity and transformation to be analyzed at a fine-grained level. Contextual features include external information such as cultural references, historical periods, exhibition settings, and the creation workflow. This contextual information is encoded as metadata, represented as a knowledge graph, and integrated into the evaluation process.
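The contextual encoding step can be illustrated by turning creation metadata into subject-predicate-object triples, the basic unit of a knowledge graph, and comparing two works by the context they share. This is a hedged sketch: the metadata keys and values are hypothetical, and Jaccard overlap is one simple choice of contextual similarity, not the model's specified method.

```python
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

def metadata_to_triples(artwork_id: str, metadata: Dict[str, object]) -> List[Triple]:
    """Encode each metadata field as one triple per value."""
    triples = []
    for predicate, value in metadata.items():
        values = value if isinstance(value, (list, tuple)) else [value]
        for obj in values:
            triples.append((artwork_id, predicate, str(obj)))
    return triples

def context_jaccard(a: List[Triple], b: List[Triple]) -> float:
    """Jaccard overlap of the (predicate, object) pairs attached to two works."""
    pa: Set[Tuple[str, str]] = {(p, o) for _, p, o in a}
    pb: Set[Tuple[str, str]] = {(p, o) for _, p, o in b}
    union = pa | pb
    return len(pa & pb) / len(union) if union else 0.0

# Hypothetical metadata for two works
work_a = metadata_to_triples(
    "work_a", {"period": "Bauhaus", "setting": "gallery", "workflow": ["prompt", "curation"]}
)
work_b = metadata_to_triples(
    "work_b", {"period": "Bauhaus", "setting": "online", "workflow": ["prompt"]}
)
print(len(work_a), len(work_b), context_jaccard(work_a, work_b))
```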

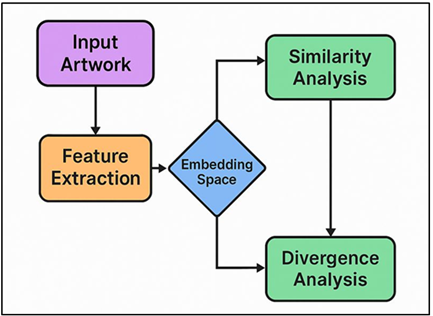

4.3. Similarity and Divergence Analysis Using Embedding Spaces

Similarity and divergence analysis is central to quantifying originality in the proposed model. Extracted features are mapped into a high-dimensional embedding space in which the relationships between AI-generated artworks and reference corpora can be studied systematically. Similarity analysis determines how close an artwork is to existing works using distance measures such as cosine similarity or Euclidean distance. High similarity can indicate stylistic imitation or strong influence, whereas moderate similarity can indicate a purposeful reference to, or dialogue with, existing traditions. Figure 2 shows the embedding-based framework for analyzing similarity and divergence in originality evaluation. Divergence analysis complements similarity by measuring the extent to which an artwork deviates from the prevailing clusters or distributions of the embedding space.
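The two distance measures named above can behave differently, which matters when choosing between them. In this minimal sketch (toy 2-D vectors stand in for real embeddings; `nearest_reference` is a hypothetical helper), a candidate that is a scaled copy of a reference vector has cosine similarity 1 with it, while its Euclidean distance still reflects the difference in magnitude.

```python
import numpy as np

def nearest_reference(candidate, references):
    """Find the most similar reference by cosine similarity.

    Returns (index, cosine similarity, Euclidean distance) for that reference.
    """
    refs = np.asarray(references, dtype=float)
    c = np.asarray(candidate, dtype=float)
    cosine = refs @ c / (np.linalg.norm(refs, axis=1) * np.linalg.norm(c))
    euclidean = np.linalg.norm(refs - c, axis=1)
    i = int(np.argmax(cosine))
    return i, float(cosine[i]), float(euclidean[i])

references = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
print(nearest_reference([2.0, 0.0], references))
```

Cosine similarity ignores vector length and compares direction only, so it is a common default when an embedding model encodes meaning mainly in direction; Euclidean distance is preferable when magnitude itself carries information. Which applies depends on the embedding model in use.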

Figure 2

Figure 2 Embedding-Based Similarity and Divergence Analysis Framework for Originality Evaluation

Cluster dispersion, density estimation, and outlier detection are used to evaluate whether deviations represent meaningful exploration or merely incoherent variation. Temporal embeddings also enable comparison with historical datasets, so that originality can be considered relative to particular periods or movements rather than a fixed corpus. To avoid oversimplification, similarity and divergence measures are calculated separately in the visual, semantic, stylistic, and contextual spaces and then interpreted in combination. Such multi-space analysis helps distinguish superficial novelty from conceptual originality. Visualization tools, including embedding maps and trajectory plots, support interpretability and expert review. By grounding originality assessment in embedding-based relational analysis, the model offers a principled yet flexible way to understand how AI-generated works are positioned within the wider context of contemporary art.
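Density-based outlier detection, computed separately per space, can be sketched as below. This is an assumption-laden illustration rather than the model's specified procedure: it fits a Gaussian (mean and ridge-regularized covariance) to the reference embeddings in each space, measures the candidate's Mahalanobis distance, and flags it as an outlier when that distance exceeds the 95th percentile of the reference works' own distances. The space names, the ridge term, and the percentile are hypothetical choices.

```python
import numpy as np

def divergence_profile(candidate_by_space, reference_by_space, ridge=1e-3, q=95):
    """Per-space Mahalanobis distance of a candidate and an outlier flag."""
    profile = {}
    for space, ref in reference_by_space.items():
        ref = np.asarray(ref, dtype=float)
        mu = ref.mean(axis=0)
        # Ridge term keeps the covariance invertible for small reference sets.
        cov = np.cov(ref, rowvar=False) + ridge * np.eye(ref.shape[1])
        inv = np.linalg.inv(cov)

        def mahalanobis(x):
            d = np.asarray(x, dtype=float) - mu
            return float(np.sqrt(d @ inv @ d))

        # Threshold taken from the reference works' own distances in this space.
        threshold = float(np.percentile([mahalanobis(r) for r in ref], q))
        dist = mahalanobis(candidate_by_space[space])
        profile[space] = {"distance": dist, "outlier": dist > threshold}
    return profile

rng = np.random.default_rng(1)
reference = {"visual": rng.normal(size=(100, 8)), "semantic": rng.normal(size=(100, 8))}
candidate = {"visual": np.zeros(8), "semantic": np.full(8, 6.0)}
for space, result in divergence_profile(candidate, reference).items():
    print(space, round(result["distance"], 2), result["outlier"])
```

Here the candidate is typical visually but deviates strongly in the semantic space, the kind of per-space contrast the paragraph uses to separate superficial from conceptual novelty. A flag marks a deviation only; whether it is meaningful exploration still depends on coherence checks and expert review.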

5. Evaluation Metrics and Methodology

5.1. Quantitative Metrics: Novelty Score, Stylistic Distance, Entropy Measures

Quantitative analysis of novelty in contemporary AI-generated works relies on measures of statistical deviation, diversity, and structural originality. The novelty score compares an artwork with a reference dataset by calculating its distance in the visual and semantic embedding spaces. Higher novelty scores indicate greater deviation from familiar patterns, suggesting that new forms or concepts have been introduced. Nonetheless, novelty is interpreted alongside measures of coherence so that haphazard or aesthetically incompetent outputs are not rewarded. Stylistic distance measures how far an artwork lies from existing artistic styles or the prevailing clusters in a stylistic embedding space. By modeling style as a continuous manifold, this measure helps determine whether an artwork is a valuable transformation of existing styles or simply an imitation. A moderate stylistic distance can signal creative reinterpretation, whereas an extreme distance can signal either innovation or a loss of stylistic reference. Entropy-based measures capture the unpredictability and internal heterogeneity of generated outputs. High entropy indicates greater diversity of visual patterns, semantic themes, or compositional structures, suggesting exploratory creativity. Entropy is also used at the system level to measure the diversity of outputs across repeated runs of a given model.
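The three quantitative measures can be sketched as follows, under stated assumptions: embeddings are precomputed NumPy arrays; the novelty score is taken as the mean cosine distance to the k most similar reference works; stylistic distance is the Euclidean distance to the nearest style-cluster centroid; and entropy is the Shannon entropy of cluster assignments, normalized by the logarithm of the number of clusters. These formulas are illustrative choices rather than the model's exact definitions, and the coherence check mentioned above is not included.

```python
import numpy as np

def novelty_score(candidate, reference, k=5):
    """Mean cosine distance from the candidate to its k most similar reference works."""
    ref = reference / np.linalg.norm(reference, axis=1, keepdims=True)
    c = candidate / np.linalg.norm(candidate)
    return float(np.sort(1.0 - ref @ c)[:k].mean())

def stylistic_distance(style_vec, centroids):
    """Euclidean distance to the nearest style centroid, and that centroid's index."""
    d = np.linalg.norm(centroids - style_vec, axis=1)
    i = int(np.argmin(d))
    return float(d[i]), i

def normalized_entropy(labels, n_clusters):
    """Shannon entropy of cluster labels, scaled to [0, 1] by log(n_clusters)."""
    counts = np.bincount(labels, minlength=n_clusters).astype(float)
    p = counts[counts > 0] / counts.sum()
    # sum of p * log(1/p) equals -sum of p * log(p), written to avoid a -0.0 result
    return float((p * np.log(1.0 / p)).sum() / np.log(n_clusters))

reference = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
centroids = np.array([[0.0, 0.0], [3.0, 4.0]])
print(novelty_score(np.array([1.0, 0.0]), reference, k=1))  # identical to a reference work
print(stylistic_distance(np.array([3.0, 0.0]), centroids))
print(normalized_entropy(np.array([0, 1, 2, 3]), 4), normalized_entropy(np.array([0, 0, 0, 0]), 4))
```

Applied at the system level, `normalized_entropy` would take the cluster labels of many outputs from repeated runs of one model: outputs spread evenly over the clusters give a value near 1, and outputs that all fall in one cluster give 0.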

5.2. Qualitative Metrics: Expert Judgment, Curator Evaluation, Audience Perception

Qualitative assessment complements the quantitative measures by capturing subjective and contextual elements of originality that automated techniques cannot represent well. Expert judgment involves evaluations by artists, art historians, and critics, who assess works in terms of conceptual substance, novelty, and fit with current discourse. Rather than attending only to formal novelty, experts also tend to consider the deliberate application of AI, the originality of ideas, and the work's contribution to ongoing artistic discourse. Curator evaluation focuses on contextual originality, assessing AI-generated works within an exhibition narrative, thematic framework, or institutional context. Curators judge whether a work introduces new perspectives, challenges convention, or meaningfully addresses issues of cultural and social significance. Their judgments draw on audience engagement, spatial display, and curatorial purpose, which makes them especially applicable to gallery and museum settings. Audience perception research shows how non-expert viewers perceive originality. Perceptions of novelty, emotional impact, and authenticity are gathered through surveys, interviews, and behavioral analysis. Audience reactions frequently differ from expert opinions, which is why a plurality of perspectives must be considered. In particular, knowing that AI was involved can strongly affect perceived originality. The evaluation methodology systematically incorporates expert, curatorial, and audience feedback, so that originality assessment draws on a variety of interpretive frameworks and computational analysis is brought into the same framework as human aesthetic experience.
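One way to integrate expert, curatorial, and audience feedback without collapsing the plurality of views is to summarize each group separately and report how far the group means diverge. The sketch below assumes ratings on a common numeric scale; the group names and ratings are invented for illustration and are not data from this study.

```python
from statistics import mean, pstdev
from typing import Dict, List

def summarize_ratings(ratings: Dict[str, List[float]]) -> dict:
    """Per-group mean, spread and size, plus the gap between group means."""
    groups = {
        name: {"mean": mean(scores), "sd": pstdev(scores), "n": len(scores)}
        for name, scores in ratings.items()
    }
    means = [g["mean"] for g in groups.values()]
    # A large gap signals disagreement between interpretive communities.
    return {"groups": groups, "gap": max(means) - min(means)}

# Hypothetical 1-7 originality ratings for a single work
ratings = {
    "expert": [5.0, 6.0, 5.0],
    "curator": [6.0, 6.0, 7.0],
    "audience": [4.0, 3.0, 5.0, 4.0],
}
summary = summarize_ratings(ratings)
print({k: round(v["mean"], 2) for k, v in summary["groups"].items()}, round(summary["gap"], 2))
```

The same function can examine the effect of disclosing AI involvement by treating audiences who were and were not told about it as separate groups.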

5.3. Comparative Analysis with Traditional Originality Assessment Methods

Comparative analysis situates AI-based originality assessment alongside the conventional methods of art criticism and art history. Traditional methods rely on qualitative interpretation, historical comparison, and critical discourse, focusing primarily on innovation, influence, and conceptual rupture. These techniques are very effective at preserving cultural and contextual meaning but are difficult to scale and replicate across large datasets or rapidly changing bodies of AI-generated work. The proposed methodology compares computational metrics with traditional expert-based scoring to identify where the two converge and where they diverge. When high novelty scores align with expert ratings of innovation, the computational scores reinforce established evaluations. Conversely, inconsistencies expose the weaknesses of quantitative methods, especially with respect to conceptual intent and symbolism. By comparing AI-generated artworks with human-created works assessed according to conventional standards, the analysis shows how AI challenges the very concept of originality. The comparison also suggests that hybrid evaluation systems can combine the strengths and generalizability of computational analysis with the interpretive richness of traditional approaches. This comparative method is not intended to replace conventional art-critical practice but to supplement it, providing a more comprehensive and transparent system for evaluating originality in the changing landscape of AI-generated contemporary art.
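Convergence and divergence between computational scores and expert ratings can be quantified with a rank correlation. Spearman's coefficient is used here as one reasonable choice, since it compares orderings rather than raw scales. This is a minimal, dependency-free sketch; the score lists are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # 0-based positions i..j share one 1-based rank
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when all values are equal")
    return cov / (sx * sy)

novelty = [0.62, 0.71, 0.77, 0.84]  # hypothetical computational scores
expert = [3.0, 4.0, 4.0, 6.0]       # hypothetical expert ratings (with a tie)
print(round(spearman(novelty, expert), 3))
```

A coefficient near 1 indicates that the metric and the experts order works alike. Individual works whose computational and expert ranks differ sharply are the inconsistencies worth examining for intent or symbolism that the metric misses.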

6. Results and Analysis

The results indicate that originality in contemporary AI-generated artworks is best assessed through a hybrid evaluation framework. The quantitative data clearly distinguish stylistic imitation from intentional deviation: works with moderate novelty scores and balanced stylistic distance were consistently rated higher by specialists. High-entropy generation increased diversity but was not always associated with perceived originality, which points to the weakness of purely statistical measures. Qualitative evaluations showed that works produced through contextual framing and human–AI collaboration were rated higher in originality than works generated autonomously without human involvement. The results confirm that originality arises from relational, contextual, and interpretive aspects and not only from computational novelty.

Table 2

Table 2 Quantitative Originality Metrics for AI-Generated Contemporary Works

| Artwork Category | Novelty Score (%) | Stylistic Distance (%) | Semantic Divergence (%) | Entropy Index | Composite Originality Score (%) |
|---|---|---|---|---|---|
| Fully Autonomous AI Output | 71.4 | 64.2 | 58.6 | 0.82 | 66.1 |
| Prompt-Guided AI Output | 76.8 | 69.5 | 63.9 | 0.79 | 71.7 |
| Human–AI Co-Created Work | 83.6 | 74.8 | 72.4 | 0.76 | 78.9 |
| Style-Constrained AI Work | 62.9 | 51.7 | 49.3 | 0.68 | 58.4 |

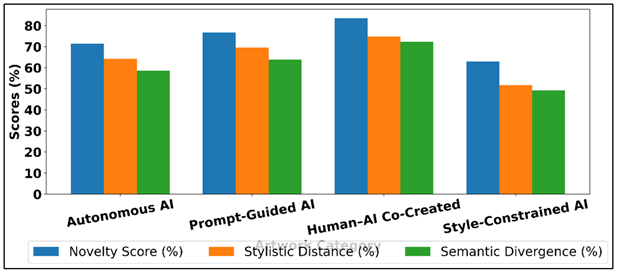

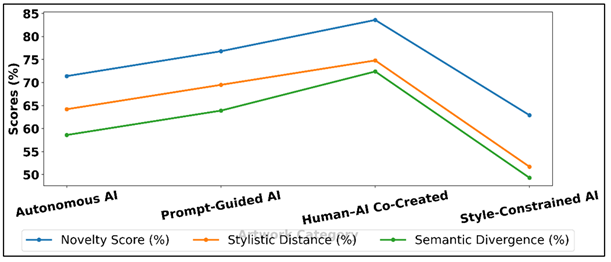

Table 2 compares originality across AI-based artistic workflows and shows clear performance differences between artwork categories. Human-AI co-created works achieve the highest composite originality score (78.9%), supported by the highest novelty (83.6%), stylistic distance (74.8%), and semantic divergence (72.4%). This suggests that intentional human intervention encourages creative exploration of the AI's potential without compromising conceptual coherence. Figure 3 compares novelty, stylistic distance, and semantic divergence across artwork categories.
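The comparison in Table 2 can be reproduced with a few lines of code. The sketch below only transcribes the published values and ranks the four categories by any metric. The dictionary and function names are hypothetical, and no aggregation formula for the composite score is assumed, because this section does not state one.

```python
# Values transcribed from Table 2 (percentages, except the entropy index).
TABLE_2 = {
    "Fully Autonomous AI Output": {"novelty": 71.4, "stylistic_distance": 64.2,
                                   "semantic_divergence": 58.6, "entropy": 0.82, "composite": 66.1},
    "Prompt-Guided AI Output": {"novelty": 76.8, "stylistic_distance": 69.5,
                                "semantic_divergence": 63.9, "entropy": 0.79, "composite": 71.7},
    "Human-AI Co-Created Work": {"novelty": 83.6, "stylistic_distance": 74.8,
                                 "semantic_divergence": 72.4, "entropy": 0.76, "composite": 78.9},
    "Style-Constrained AI Work": {"novelty": 62.9, "stylistic_distance": 51.7,
                                  "semantic_divergence": 49.3, "entropy": 0.68, "composite": 58.4},
}


def rank_by(metric):
    """Category names ordered from highest to lowest value of metric."""
    return sorted(TABLE_2, key=lambda name: TABLE_2[name][metric], reverse=True)


def gaps_from_leader(metric):
    """How far each category trails the top-scoring category on metric (1 decimal)."""
    ordered = rank_by(metric)
    top = TABLE_2[ordered[0]][metric]
    return {name: round(top - TABLE_2[name][metric], 1) for name in ordered}


for metric in ("novelty", "stylistic_distance", "semantic_divergence", "entropy", "composite"):
    print(f"{metric}: leader = {rank_by(metric)[0]}")
print(gaps_from_leader("composite"))
```

Co-created work leads on every column except the entropy index, where fully autonomous output is highest. On the composite score, the other categories trail it by 7.2, 12.8 and 20.5 points.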

Figure 3 Comparison of Novelty, Stylistic Distance, and Semantic Divergence Across Artwork Categories

Prompt-guided AI outputs also perform well, with a composite score of 71.7%, which indicates that structured prompts steer generative models toward more original results than unguided generation. Figure 4 compares creativity metrics across AI and human-AI artwork categories. Fully autonomous AI outputs reach only a moderate composite score (66.1%): their novelty is reasonable (71.4%), but their semantic divergence is lower (58.6%), indicating that they depend on recombining learned patterns without strong conceptual direction.

Figure 4 Creativity Metrics Across AI and Human–AI Artwork Categories

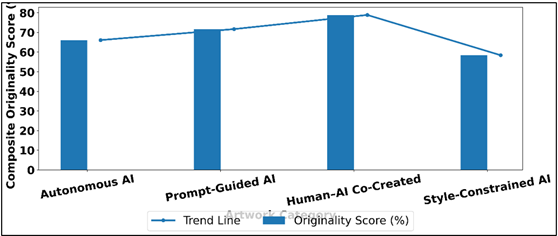

By contrast, style-constrained AI works record the lowest composite originality score (58.4%). Their low stylistic distance (51.7%) and semantic divergence (49.3%) show how adherence to a fixed style restricts creativity. The entropy values add further context to these trends: style-constrained works also have the lowest entropy index (0.68). Figure 5 presents a bar-line comparison of composite originality scores across artwork categories.

Figure 5 Composite Originality Score: Bar–Line Comparison Across Artwork Categories

Although fully autonomous outputs have the highest entropy index (0.82), their composite originality score (66.1%) trails those of prompt-guided (71.7%) and co-created (78.9%) works. Diversity therefore does not translate directly into perceived originality, which suggests that randomness alone is not enough. Overall, the findings indicate that originality in AI-generated contemporary art is highest when algorithmic generation is guided by human intent, rather than constrained by a fixed style or left fully automated.
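The claim that diversity does not translate directly into perceived originality can be checked against Table 2 with a rank correlation. The sketch below is an illustration, not an analysis reported by the authors. It computes Spearman's rho between the entropy index and the composite score, and between novelty and the composite score, across the four categories. With only four data points this is a descriptive check, not a significance test, and the helper name is hypothetical.

```python
def spearman_no_ties(x, y):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n * (n^2 - 1)).

    This closed form is exact only when neither sequence contains tied values.
    """
    n = len(x)
    if len(set(x)) != n or len(set(y)) != n:
        raise ValueError("tied values present; use an average-rank method instead")
    rank_x = {v: r for r, v in enumerate(sorted(x), start=1)}
    rank_y = {v: r for r, v in enumerate(sorted(y), start=1)}
    d_squared = sum((rank_x[a] - rank_y[b]) ** 2 for a, b in zip(x, y))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Table 2 columns, in row order: autonomous, prompt-guided, co-created, style-constrained.
entropy = [0.82, 0.79, 0.76, 0.68]
novelty = [71.4, 76.8, 83.6, 62.9]
composite = [66.1, 71.7, 78.9, 58.4]

print(round(spearman_no_ties(entropy, composite), 2))  # 0.2
print(round(spearman_no_ties(novelty, composite), 2))  # 1.0
```

The entropy ranking agrees only weakly with the composite ranking (rho of about 0.2). Novelty orders the four categories exactly as the composite score does, although this agreement is partly expected if the composite draws on novelty, which this section does not specify.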

7. Conclusion

This paper proposes and supports a multidimensional framework for assessing originality in AI-generated contemporary art that combines computational and human-oriented evaluation. As artificial intelligence systems increasingly shape artists' creative work, traditional conceptions of originality grounded in human authorship and personal style become inadequate. The findings show that originality in AI-generated works is not a single, absolute property but emerges from the interplay of novelty, deviation, intent, context, and human-AI interaction. The proposed AI-driven originality model advances current methods by going beyond surface-level novelty and situating originality within a reference space. Quantitative measures such as novelty scores, stylistic distance, and entropy provide scalable, reproducible indicators of structural difference and diversity. However, the study also confirms that such measures do not always capture conceptual depth, cultural meaning, or artistic intent. Qualitative assessments by experts, curators, and audiences remain essential for interpreting originality, especially in co-creative settings where human judgment and curatorial context strongly shape the outcome. Notably, the results highlight the importance of shared-authorship models in contemporary AI art practice. Works created through deliberate human-AI collaboration were consistently perceived as more original than fully automated outputs, indicating that originality stems from the creative approach and process rather than from algorithmic generation alone. The comparison with conventional approaches to originality evaluation further shows that AI-based evaluation should complement existing art-critical models rather than replace them.
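The idea of situating originality within a reference space can be illustrated with a nearest-neighbour novelty score. The sketch below assumes, hypothetically, that each artwork is represented by a feature embedding (for example, from an image encoder). It defines novelty as one minus the highest cosine similarity to any reference work, expressed as a percentage. It is not the paper's formula. The function names, the clipping of negative similarities to zero, and the toy vectors are illustrative assumptions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def novelty_score(candidate, reference_set):
    """100 * (1 - max cosine similarity to the reference set).

    Negative similarities are clipped to 0 so the score stays within [0, 100].
    """
    best = max(cosine_similarity(candidate, ref) for ref in reference_set)
    return 100.0 * (1.0 - max(0.0, best))


# Toy 3-dimensional embeddings standing in for a reference corpus.
reference = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]

print(round(novelty_score((1.0, 0.0, 0.0), reference), 1))  # duplicate of a reference work
print(round(novelty_score((1.0, 1.0, 0.0), reference), 1))  # blend of two reference works
print(round(novelty_score((0.0, 0.0, 1.0), reference), 1))  # orthogonal to the corpus
```

This prints 0.0, 29.3 and 100.0. A blend of two reference works scores only moderately, which mirrors the concern about recombining learned patterns. As the conclusion stresses, such a score still says nothing about intent or cultural meaning.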

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Abbas, M., Jam, F. A., and Khan, T. I. (2024). Is it Harmful or Helpful? Examining the Causes and Consequences of Generative AI usage Among University Students. International Journal of Educational Technology in Higher Education, 21, Article 10. https://doi.org/10.1186/s41239-024-00444-7

Alexander, K., Savvidou, C., and Alexander, C. (2023). Who Wrote this Essay? Detecting AI-Generated Writing in Second Language Education in Higher Education. Teaching English with Technology, 23(3), 25–43. https://doi.org/10.56297/BUKA4060/XHLD5365

Al-Zahrani, A. M. (2024). The Impact of Generative AI Tools on Researchers and Research: Implications for Academia in Higher Education. Innovations in Education and Teaching International, 61(5), 1029–1043. https://doi.org/10.1080/14703297.2023.2271445

Ardito, C. G. (2025). Generative AI Detection in Higher Education Assessments. New Directions for Teaching and Learning, 2025(181), 11–28. https://doi.org/10.1002/tl.20624

Birks, D., and Clare, J. (2023). Linking Artificial Intelligence Facilitated Academic Misconduct to Existing Prevention Frameworks. International Journal for Educational Integrity, 19, Article 20. https://doi.org/10.1007/s40979-023-00142-3

Das Deep, P., Martirosyan, N., Ghosh, N., and Rahaman, M. S. (2025). ChatGPT in ESL Higher Education: Enhancing Writing, Engagement, and Learning Outcomes. Information, 16(4), Article 316. https://doi.org/10.3390/info16040316

Davar, N. F., Dewan, M. A. A., and Zhang, X. (2025). AI Chatbots in Education: Challenges and Opportunities. Information, 16(3), Article 235. https://doi.org/10.3390/info16030235

Gaumann, N., and Veale, M. (2024). AI Providers as Criminal Essay Mills? Large Language Models Meet Contract Cheating Law. Information and Communications Technology Law, 33(3), 276–309. https://doi.org/10.1080/13600834.2024.2352692

Haenlein, M., and Kaplan, A. (2019). A Brief History of Artificial Intelligence: On the Past, Present, and Future of Artificial Intelligence. California Management Review, 61(4), 5–14. https://doi.org/10.1177/0008125619864925

Ibrahim, K. (2023). Using AI-Based Detectors to Control AI-Assisted Plagiarism in ESL Writing: “The Terminator Versus the Machines”. Language Testing in Asia, 13, Article 46. https://doi.org/10.1186/s40468-023-00260-2

Perkins, M. (2023). Academic Integrity Considerations of AI Large Language Models in the Post-Pandemic Era: ChatGPT and Beyond. Journal of University Teaching and Learning Practice, 20(2), 1–24. https://doi.org/10.53761/1.20.02.07

Sukhera, J. (2022). Narrative Reviews: Flexible, Rigorous, and Practical. Journal of Graduate Medical Education, 14(4), 414–417. https://doi.org/10.4300/JGME-D-22-00480.1

Waltzer, T., Pilegard, C., and Heyman, G. D. (2024). Can you Spot the Bot? Identifying AI-Generated Writing in College Essays. International Journal for Educational Integrity, 20, Article 11. https://doi.org/10.1007/s40979-024-00158-3

Weber-Wulff, D., Anohina-Naumeca, A., Bjelobaba, S., Foltýnek, T., Guerrero-Dib, J., Popoola, O., Šigut, P., and Waddington, L. (2023). Testing of Detection Tools for AI-Generated Text. International Journal for Educational Integrity, 19, Article 26. https://doi.org/10.1007/s40979-023-00146-z

Yeo, M. A. (2023). Academic Integrity in the Age of Artificial Intelligence (AI) Authoring Apps. TESOL Journal, 14(3), Article e716. https://doi.org/10.1002/tesj.716

Zhao, Y., Borelli, A., Martinez, F., Xue, H., and Weiss, G. M. (2024). Admissions in the Age of AI: Detecting AI-Generated Application Materials in Higher Education. Scientific Reports, 14, Article 26411. https://doi.org/10.1038/s41598-024-77847-z

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2025. All Rights Reserved.