ShodhKosh: Journal of Visual and Performing Arts ISSN (Online): 2582-7472

GAN-Based Reconstruction of Vintage Prints

Mary Praveena J 1, Dr. Vandana Gupta 2, Dr. Smita Rath 3, Tanveer Ahmad Wani 4, Sahil Suri 5, Vishal Ambhore 6

1 Assistant Professor, Department of Computer Science and Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission’s Research Foundation (DU), Tamil Nadu, India

2 Assistant Professor, Department of Fashion Design, Parul Institute of Design, Parul University, Vadodara, Gujarat, India

3 Associate Professor, Department of Computer Science and Information Technology, Siksha 'O' Anusandhan (Deemed to be University), Bhubaneswar, Odisha, India

4 School of Sciences, Noida International University, Greater Noida-203201, India

5 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

6 Department of E and TC Engineering, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037 India

ABSTRACT

Vintage prints are crucial to preserving cultural, historical, and artistic heritage, yet physical deterioration, including fading, stains, tears, and noise, is a major obstacle to their preservation. Manual conservation and classical digital inpainting can be time-consuming and subjective, and often fail to reproduce fine texture and stylistic fidelity. This paper presents a GAN-based model for high-quality reconstruction of damaged vintage prints that combines deep generative learning with style-aware constraints. The method uses an adversarial learning paradigm in which a generator network restores missing structures, textures, and tonal continuity, while a discriminator network assesses realism, stylistic consistency, and historical plausibility. A dataset is curated from museums, libraries, and private collections and covers a range of degradation patterns and printing styles. Preprocessing steps such as noise normalization, contrast enhancement, and degradation-aware annotation support robust training. To retain artistic integrity, the model combines content-preserving, perceptual, and style-consistency loss functions. Extensive experiments indicate that the framework significantly outperforms standard restoration methods and baseline deep learning models in structural quality, perceptual quality, and visual authenticity. Qualitative evaluations by art historians and painting experts further confirm that the reconstructed outputs preserve the original aesthetic character. The findings suggest that GAN-based reconstruction is a scalable, adaptable, and culturally aware approach to digital conservation that supports long-term preservation, access to archival material, and scholarly study of fragile vintage prints.

Received 04 May 2025

Accepted 08 August 2025

Published 28 December 2025

Corresponding Author: Mary Praveena J, marypraveena.avcs092@avit.ac.in

DOI: 10.29121/shodhkosh.v6.i5s.2025.6913

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: GAN-Based Image Restoration, Vintage Print Reconstruction, Digital Art Conservation, Image Degradation Recovery, Adversarial Learning, Cultural Heritage Preservation

1. INTRODUCTION

Vintage prints include historical photographs, lithographs, etchings, manuscripts, and early printed artifacts that form invaluable cultural and artistic records of social history, aesthetics, and technological change. Over time, such artifacts suffer physical and chemical damage from aging materials, environmental exposure, and errors in storage and handling. Typical forms of degradation are fading of inks and pigments, surface stains, yellowing of the paper, cracks, tears, missing areas, and noise introduced during scanning or duplication. This degradation not only reduces visual detail but also threatens the legibility and authenticity of the works, which makes their conservation a sensitive issue for museums, archives, and libraries and complicates their interpretation by researchers. Conventional restoration depends heavily on skilled human conservators, who either repair physical damage by hand or digitally edit scanned images Lepcha et al. (2023). Although expert restoration can produce excellent results, it is slow and expensive and does not scale to large archival collections. Manual intervention can also introduce subjective bias that alters the original creative intent or historical character of the print. Traditional digital image processing, including interpolation, diffusion-based inpainting, and filtering, offers partial automation but usually struggles with complex textures, large missing areas, and stylistic fidelity Liu et al. (2024). These limitations point to the need for intelligent, data-driven restoration methods that balance accuracy, efficiency, and authenticity.
Recent advances in deep learning have transformed image restoration, since models can now learn complex visual patterns directly from data. Convolutional neural networks have been successful in tasks such as denoising, super-resolution, and inpainting. Nevertheless, CNN-based methods tend to over-smooth their outputs and cannot synthesize the fine textures that are vital to artistic and historical prints. To mitigate these issues, Generative Adversarial Networks (GANs) introduce an adversarial learning scheme in which a generator produces restored images while a discriminator judges their authenticity Ye et al. (2023). This competition encourages visually plausible images with sharper details and richer textures, which makes GANs well suited to reconstructing deteriorated vintage prints. In cultural heritage preservation, GAN-based reconstruction brings both opportunities and challenges. Unlike natural images, vintage prints span a variety of artistic styles, printing methods, and material characteristics, all of which must be respected during restoration. A reconstruction approach should therefore go beyond visual enhancement to guarantee stylistic consistency, structural integrity, and historical authenticity Moser et al. (2023). Combining GANs with content-preserving, perceptual, and style-aware constraints allows a network to recover lost or damaged areas without compromising the original aesthetic and contextual information of the artwork, which makes GAN-based methods attractive for digital conservation and archival restoration.

2. Literature Review

2.1. Traditional restoration and digital inpainting techniques

Traditional restoration of old prints relies on physical and chemical treatments applied by skilled conservators to stabilize and mend damaged artifacts. Paper mending, deacidification, stain removal, and pigment retouching are guided by conservation ethics that favor reversibility and minimal intervention. Although these techniques maintain material authenticity, they are labor-intensive and expensive and do not scale to large archival collections. Physical restoration also carries a risk of permanent alteration if it is not carried out with high precision Li et al. (2024). As cultural heritage was digitized, digital inpainting and image enhancement emerged as complementary solutions. Classical digital methods include interpolation-based filling, diffusion models, exemplar-based inpainting, and partial differential equation (PDE) methods. These techniques propagate surrounding pixel data into the damaged areas and work well for small cracks, scratches, and noise Wang et al. (2023). Texture synthesis extended inpainting by copying similar patches from intact parts of the image. Nonetheless, these techniques are sensitive to parameter choices and tend to break down on large missing regions, intricate textures, or non-homogeneous degradation patterns. The biggest weakness of conventional digital restoration is its inability to interpret semantic content or artistic context. Consequently, reconstructed areas can be aesthetically incongruent, repetitive, or structurally flawed Cao et al. (2023). Moreover, the techniques do not adapt to different print styles and degradation types, so each object requires manual tuning.
In summary, traditional restoration and classical digital inpainting remain valuable, but their scalability, consistency, and contextual awareness are limited, which motivates the exploration of learning-based restoration methods.

2.2. Deep Learning Approaches for Image Restoration

Deep learning has greatly improved image restoration by enabling data-driven learning of complex visual representations. Convolutional neural networks (CNNs) have been applied to denoising, deblurring, super-resolution, and image inpainting, among other tasks. Encoder-decoder architectures allow a model to capture both global and local features, which improves restoration accuracy over traditional methods Hassanin et al. (2024). Supervised models trained on paired degraded and clean images have shown excellent results in recovering missing or corrupted information. Autoencoders and U-Net-based architectures further improved restoration quality by preserving spatial context through skip connections. These models are especially effective at preserving structural coherence and are therefore applicable to document and artwork restoration. More recently, attention mechanisms and transformer-based models have been introduced to learn long-range dependencies, improving the reconstruction of complex patterns and global consistency Yang et al. (2021). Nevertheless, many deep learning methods optimize pixel-wise similarity measures such as mean squared error or peak signal-to-noise ratio, which can lead to overly smooth results. For vintage prints, over-smoothing is undesirable because it erases fine textures, brush strokes, and print artifacts that contribute to the vintage look.

2.3. GAN Architectures in Art and Document Reconstruction

GANs have become powerful tools for high-fidelity image synthesis and restoration where perceptual realism is needed. A GAN consists of a generator, which tries to reconstruct or create images, and a discriminator, which judges whether those images look real. This adversarial training scheme promotes sharp details, natural textures, and coherent structures, overcoming the blur of earlier deep learning methods Vo and Bui (2023). In art and document restoration, GANs have been applied to historical photo colorization, painting restoration, manuscript inpainting, and damage removal. Conditional GANs condition the restoration on the damaged input, and multi-scale discriminators improve both global layout and local texture. Style-based GAN variants also help preserve artistic attributes by disentangling content and style representations. These capabilities are especially relevant to vintage prints, where the original aesthetic identity is important. Combining adversarial loss with perceptual and content-preserving losses has been shown to increase structural accuracy and stylistic consistency. Domain knowledge is sometimes incorporated through edge-aware losses or style embeddings learned from reference artworks Lee et al. (2024). Despite their strengths, GANs have weaknesses, including training instability, mode collapse, and sensitivity to dataset bias. However, ongoing advances in architecture design and training strategies continue to improve their reliability. Table 1 summarizes previous studies on image restoration and GAN-based reconstruction. Overall, GAN-based methods are a major step forward for art and document reconstruction, balancing realism, authenticity, and automation.

Table 1

Table 1 Related Work on Image Restoration and GAN-Based Reconstruction

| Dataset Type | Core Method | Key Architecture | Strengths | Limitations |
|---|---|---|---|---|
| Synthetic Images | PDE-Based | Diffusion Model | Effective for small cracks | Fails on large missing areas |
| Natural Images | Exemplar-Based | Patch Matching | Texture continuity | Repetitive artifacts |
| Natural Images Wan et al. (2023) | Deep Learning | SRCNN | Fast convergence | Over-smoothing |
| Scene Images | GAN | Context Encoder | Semantic filling | Blurry textures |
| Faces, Objects | GAN | DCGAN | Realistic synthesis | Optimization instability |
| Real Images | Global–Local GAN | Dual Discriminator | Global consistency | Style inconsistency |
| Natural Images | GAN | DeblurGAN | Sharp outputs | Limited texture modeling |
| Places2 Chen et al. (2022) | GAN | EdgeConnect | Edge-aware recovery | Edge prediction errors |
| Historical Docs | CNN + GAN | U-Net GAN | Text preservation | Weak artistic modeling |
| Paintings | GAN | StyleGAN Variant | Style consistency | High data demand |
| Archival Photos Zhang et al. (2022) | GAN | Multi-Scale GAN | Robust degradation handling | Limited authenticity control |
| Museum Prints Chu et al. (2024) | GAN + Perceptual Loss | Attention GAN | Fine texture recovery | Training complexity |
| Archives and Museums | Style-Aware GAN | Conditional Multi-Scale GAN | High realism and style fidelity | Computationally intensive |

3. Problem Definition and Dataset Description

3.1. Types of degradation in vintage prints (noise, fading, tears, stains)

Vintage prints are subject to a wide range of degradation processes caused by material aging, environmental exposure, and mechanical stress. Noise is one of the most common types; it appears as graininess, speckling, or scan lines introduced during digitization or by the chemical decay of inks and paper fibres. Noise obscures detail and makes textures and line structures less clear. Fading is another serious problem, caused by extended exposure to light, humidity, and oxidation. It leads to loss of contrast, loss of pigment saturation, and uneven tonal shifts that considerably change the original visual appearance Gao and Dang (2024). Figure 1 shows a flowchart of the degradation types found in vintage prints. Tears, cracks, and missing areas pose more complicated problems for digital restoration.

Figure 1

Figure 1 Flowchart of Degradation Types in Vintage Prints

Unlike noise or fading, tears require the missing information to be inferred from context rather than merely enhanced. Water damage, mould growth, ink bleeding, and handling residues leave stains and blotches whose discolouration and texture variations disrupt both local and global image patterns. These degradation types often co-occur, producing a highly diverse damage profile within a single print Chen and Shao (2024). The problem addressed in this study is the automated reconstruction of such multiply damaged vintage prints while preserving structural integrity, fine detail, and stylistic authenticity. The difficulty lies in modeling diverse degradation patterns and reconstructing lost or damaged areas without introducing artifacts or stylistic distortion.

3.2. Dataset Sources: Archives, Museums, and Private Collections

The dataset for GAN-based reconstruction of vintage prints is curated from diverse and authoritative sources to ensure stylistic diversity and historical relevance. The main sources are institutional archives and museums, which hold digitized collections of historical photographs, prints, engravings, manuscripts, and early printed artifacts. These institutions often preserve high-resolution scans with metadata, including period, technique, material, and provenance, which supports contextual analysis and style-aware restoration. In addition, libraries and research repositories provide rare documents and printed works that show the effects of natural aging. Such collections contain newspapers, books, and illustrative prints, often with typical wear patterns such as yellowing and ink bleed. To further increase diversity, private collections contribute unique prints that are hard to find in institutional datasets. These materials reflect different storage environments and handling practices, which produce realistic and complex degradation profiles. Ethical and legal requirements, such as usage rights, reproduction rights, and anonymization where required, are observed during data acquisition. The data is heterogeneous in artistic style, printing process, and era, allowing the model to generalize across very diverse visual features. By integrating institutional and non-institutional sources, the dataset balances curated quality with real-world variability in degradation. This is necessary to train a robust GAN framework that can reconstruct old prints across varying cultural, artistic, and material settings.

3.3. Data Preprocessing and Annotation Strategy

Careful preprocessing and annotation are essential to train a dependable GAN-based reconstruction model. The preprocessing pipeline starts with resolution normalization, which standardizes input dimensions without altering aspect ratios or fine details. Color correction and contrast normalization are applied selectively to reduce scanning anomalies without removing genuine signs of aging. Noise estimation is used to distinguish artifacts caused by degradation from intentional textures, such as grain or patterned prints. To support supervised and semi-supervised learning, the dataset is divided into paired and unpaired samples. Clean reference images come from well-preserved prints or high-quality reproductions, whereas damaged images are either collected as naturally degraded samples or created synthetically to represent specific types of damage, including fading, stains, and tears. Synthetic degradation modeling enables controlled training over a wide range of damage conditions with realistic visual appearance. Annotation combines automated and expert-assisted processes. Region-level masks mark damaged areas, missing areas, and stain boundaries to support targeted reconstruction learning. Metadata annotations record style, period, printing method, and degradation type, which are used for style-aware conditioning during training. Annotation accuracy and cultural sensitivity are ensured through expert validation by conservators and art historians.
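The annotation strategy can be pictured as one record per scanned print. The sketch below is illustrative only: the class name `PrintAnnotation`, its field names, and the helper `split_paired_unpaired` are hypothetical and are not taken from the paper, which does not specify a storage format.

```python
from dataclasses import dataclass, field
import numpy as np


@dataclass
class PrintAnnotation:
    """Hypothetical per-image annotation record (field names are illustrative)."""
    damage_mask: np.ndarray   # boolean array, True where the print is damaged or missing
    style: str                # metadata used for style-aware conditioning
    period: str
    technique: str
    degradation_types: list = field(default_factory=list)
    paired: bool = False      # True if a clean reference image is available

    def damaged_fraction(self) -> float:
        """Share of pixels marked as damaged by the region-level mask."""
        return float(self.damage_mask.mean())


def split_paired_unpaired(annotations):
    """Divide records into paired (supervised) and unpaired (semi-supervised) sets."""
    paired = [a for a in annotations if a.paired]
    unpaired = [a for a in annotations if not a.paired]
    return paired, unpaired
```

Keeping the mask and the metadata in one record makes it straightforward to feed both to a conditional model, but other layouts (for example, separate mask files plus a metadata table) would serve equally well.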

4. Proposed GAN-Based Reconstruction Framework

4.1. Overall system architecture

The proposed GAN-based reconstruction framework is a modular, end-to-end system that restores damaged vintage prints while preserving structural integrity and stylistic fidelity. The architecture follows conditional adversarial learning under content and style constraints: degraded images are the input and reconstructed images are the output. The system has four main components: a preprocessing module, a generator network, a discriminator network, and a post-reconstruction validation module. The preprocessing module normalizes input resolution and intensity distributions and derives additional feature maps such as edges and degradation masks. These maps are passed as conditional inputs that steer the generator toward damage-aware reconstruction. The generator learns a mapping from degraded inputs to visually plausible reconstructed outputs. To ensure faithful restoration, the discriminator compares generated images with real, well-preserved references and assesses global realism and local texture consistency. Multi-scale processing handles degradation of different sizes, so the framework can deal with both tiny cracks and large holes.
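A minimal sketch of how the preprocessing module might assemble the generator's conditional input is given below. It assumes a single-channel image, min-max intensity normalization, and a simple gradient-magnitude edge map; the paper does not state which normalization or edge detector is used, and the function names are hypothetical.

```python
import numpy as np


def edge_map(gray):
    """Gradient-magnitude edge map scaled to [0, 1] (stand-in for any edge detector)."""
    gy, gx = np.gradient(gray.astype(np.float64))
    mag = np.hypot(gx, gy)
    peak = mag.max()
    return mag / peak if peak > 0 else mag


def build_conditional_input(gray, damage_mask):
    """Stack normalized image, edge map, and degradation mask as channels (3, H, W)."""
    img = gray.astype(np.float64)
    lo, hi = img.min(), img.max()
    # Min-max normalization; a constant image maps to all zeros.
    norm = (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)
    return np.stack([norm, edge_map(norm), damage_mask.astype(np.float64)], axis=0)
```

The resulting array can be passed to a generator as a multi-channel input, so that the network sees where the damage is and where the surviving line structure lies.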

4.2. Generator Network Design for Texture and Structure Recovery

The generator network is the core component responsible for restoring missing and damaged parts of vintage prints while maintaining fine textures and structural consistency. It uses an encoder-decoder architecture with residual blocks and skip connections to capture both global and local information. The encoder progressively extracts hierarchical features from the degraded input, such as edges, contours, and textures, while compressing spatial information into a latent representation. To handle complex degradation patterns, multi-scale feature extraction layers let the generator learn coarse structural layouts and fine-grained textures at the same time. Skip connections between matching encoder and decoder layers ensure that important spatial information is not lost. Residual learning stabilizes training and lets the network concentrate on restoring degraded sections rather than re-synthesizing intact content. Attention mechanisms place more emphasis on heavily damaged areas such as tears and stains, so the network devotes more representational capacity to those areas. Style modulation layers are conditioned on style representations, so the generator stays consistent with the original artistic features. To ensure tonal continuity between reconstructed and intact regions, the output layer uses adaptive normalization.
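The idea that residual learning and attention let the network focus on damaged regions can be illustrated with a simple output composition. This is a sketch under stated assumptions, not the paper's layer design: it assumes the network predicts a correction (residual) and an attention map in [0, 1], and that the two are combined additively. The function name is hypothetical.

```python
import numpy as np


def composite_residual(degraded, predicted_residual, attention):
    """Return degraded + attention * residual, clipped to the valid range [0, 1].

    Where attention is 0 the intact content passes through unchanged;
    where it is 1 the full predicted correction is applied.
    """
    attention = np.clip(attention, 0.0, 1.0)
    return np.clip(degraded + attention * predicted_residual, 0.0, 1.0)
```

With this formulation the generator does not need to re-synthesize intact areas, which is one common way to keep undamaged regions pixel-faithful to the scan.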

4.3. Discriminator Design for Authenticity and Style Validation

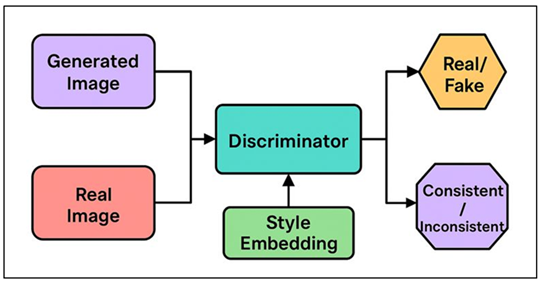

The discriminator network enforces realism, authenticity, and stylistic fidelity in the reconstructed vintage prints. It is a multi-scale convolutional classifier that considers both global image coherence and local texture realism. Operating at several resolutions lets it assess high-level structural consistency as well as low-level features such as paper texture, ink micro-grain, and line precision. To be more sensitive to artistic and historical features, the discriminator is extended with style-sensitive branches conditioned on metadata embeddings of print period, technique, or artistic genre. As illustrated in Figure 2, the style-aware discriminator validates authenticity in the reconstruction of vintage prints. This allows the discriminator to distinguish not only real from generated images but also stylistically consistent from inconsistent reconstructions.

Figure 2

Figure 2 Style-Aware Discriminator Architecture for Authenticity Validation in Vintage Print Reconstruction

Patch-based discrimination targets localized authenticity, so that reconstructed areas blend seamlessly with undamaged areas. To stabilize adversarial training and reduce mode collapse, feature matching is applied during training.
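Two of the ingredients named in this section, feature matching and multi-scale inputs, can be sketched as follows. The code assumes that discriminator features are already available as arrays of shape (batch, features) per layer; how those features are produced is outside the sketch. The mean L1 form of the feature matching loss is a common choice and is an assumption here, as the paper does not give its exact formula.

```python
import numpy as np


def feature_matching_loss(real_feats, fake_feats):
    """Mean L1 distance between batch-averaged features, averaged over layers.

    real_feats, fake_feats: lists of arrays with shape (batch, features),
    one entry per discriminator layer.
    """
    per_layer = [
        np.abs(r.mean(axis=0) - f.mean(axis=0)).mean()
        for r, f in zip(real_feats, fake_feats)
    ]
    return float(np.mean(per_layer))


def downsample2x(img):
    """2x2 average pooling, used to build the next level of a multi-scale pyramid."""
    h, w = img.shape
    img = img[: h // 2 * 2, : w // 2 * 2]  # crop odd rows/columns
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))
```

Applying `downsample2x` repeatedly yields progressively coarser copies of an image, each of which could be scored by a discriminator working at that scale.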

5. Training Methodology

5.1. Training pipeline and optimization strategy

The proposed GAN-based reconstruction framework is trained with a structured pipeline designed for stability, convergence, and high-quality restoration across a variety of degradation patterns. Training begins with the preparation of paired and unpaired datasets, in which degraded vintage prints are matched with clean or reference images where possible. Where precise ground truth is lacking, weakly supervised and adversarial learning methods are used. The generator and the discriminator are initialized with He or Xavier initialization to support stable gradient flow. Training proceeds as an alternating optimization sequence: the discriminator is updated to better distinguish real reference prints from generated reconstructions, and the generator is then updated to minimize both adversarial and reconstruction losses. Gradient penalty and spectral normalization are used to promote adversarial stability. Patch-based training is used to handle large high-resolution prints, which makes efficient use of memory and captures local detail. Periodic validation with structural and perceptual metrics is used to detect overfitting and to guide early stopping. Model checkpoints are saved according to validation performance so that the best configuration is retained. This systematic pipeline supports reliable convergence and lets the framework scale to large archives while preserving restoration quality and consistency.
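The validation-driven early stopping and checkpoint selection described above might look like the following sketch. The class name, the `patience` and `min_delta` parameters, and the choice of a higher-is-better score (for example SSIM on a validation set) are assumptions for illustration; the paper does not report these settings.

```python
class EarlyStopping:
    """Track the best validation score and signal when training should stop."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # minimum gain that counts as improvement
        self.best_score = None
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_score):
        """Record a validation score (higher is better); return True to stop."""
        if self.best_score is None or val_score > self.best_score + self.min_delta:
            self.best_score = val_score
            self.best_epoch = epoch   # a model checkpoint would be saved here
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In a training loop, `step` would be called after each periodic validation pass, and the checkpoint from `best_epoch` would be the one retained.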

5.2. Style-Aware and Content-Preserving Constraints

A central principle of responsible digital restoration is preserving the original artistic style and semantic content of vintage prints. To this end, the training procedure adds several style-aware and content-preserving constraints to the generator objective. Content preservation is enforced by pixel-level reconstruction losses, e.g. L1 loss or smooth L1 loss, which promote accurate recovery of structural features without excessive smoothing. In addition, perceptual loss functions computed with pretrained feature extractors enforce semantic consistency by comparing high-level representations rather than pixel values. Style-aware constraints preserve texture, tonal features, and print-specific characteristics. Style losses based on Gram matrix comparisons capture correlations between feature maps and allow the generator to recreate authentic artistic textures. Metadata-conditioned embeddings keep the model sensitive to the stylistic attributes of a particular print type or period. Edge-consistency and gradient-based losses maintain line integrity, typography, and the fine contours characteristic of vintage prints. These constraints are complemented by the adversarial loss, which encourages outputs that are indistinguishable from real, well-preserved prints. By jointly optimizing content, style, and adversarial objectives, the framework reaches a restoration balance that avoids both structural distortion and stylistic deviation. This multi-objective approach keeps reconstructed images faithful to the original artistic intent while improving visual clarity and completeness.
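The content, style, and edge terms described above can be written compactly. The sketch below assumes feature maps of shape (C, H, W) that would come from a pretrained extractor (not included here), a standard normalized Gram matrix, and a weighted sum as the combined objective. The weights and function names are hypothetical; the paper does not report its loss weights.

```python
import numpy as np


def l1_loss(pred, target):
    """Pixel-level content loss."""
    return float(np.abs(pred - target).mean())


def gram_matrix(feats):
    """Normalized Gram matrix of a (C, H, W) feature map: channel correlations."""
    c, h, w = feats.shape
    flat = feats.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)


def style_loss(pred_feats, target_feats):
    """Mean squared difference between Gram matrices."""
    diff = gram_matrix(pred_feats) - gram_matrix(target_feats)
    return float((diff ** 2).mean())


def edge_loss(pred, target):
    """L1 distance between image gradients, to keep lines and contours intact."""
    pgy, pgx = np.gradient(pred)
    tgy, tgx = np.gradient(target)
    return float(np.abs(pgx - tgx).mean() + np.abs(pgy - tgy).mean())


def generator_objective(terms, weights):
    """Weighted sum of loss terms; the weights are tuning assumptions."""
    return sum(weights[name] * value for name, value in terms.items())
```

A perceptual loss would follow the same pattern as `l1_loss`, applied to extracted features instead of pixels, and the adversarial term would be added to `terms` alongside the others.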

5.3. Data Augmentation for Robustness to Degradation Patterns

Data augmentation is essential for improving the robustness and generalization of the proposed reconstruction framework. Because clean reference images are scarce and real-world degradation varies widely, augmentation strategies are designed to model realistic damage scenarios found in old prints. To increase training diversity, synthetic degradation is applied to clean and partially restored images, including Gaussian and Poisson noise, color fading, contrast loss, blur, and uneven illumination. Structural damage is simulated with random masking, scratch generation, tear-like occlusions, and stain overlays with irregular shapes and textures. These augmentations expose the generator to difficult restoration cases, so that it learns to recover missing and corrupted areas effectively. The augmentation parameters are varied throughout training rather than held constant, which prevents the model from overfitting to particular degradation patterns. Besides degradation-based augmentation, geometric transformations, including flipping, are used to improve invariance to scanning inconsistencies and orientation. Color-space augmentations such as hue and saturation changes are tightly constrained to avoid unrealistic distortions. Domain randomization further improves adaptability to prints from different sources and digitization conditions.
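A small sketch of synthetic degradation with per-sample randomized parameters is shown below. It covers only three of the effects listed (contrast fading, Gaussian noise, and a rectangular occlusion standing in for a tear); the parameter ranges, the rectangular hole shape, and the function names are illustrative assumptions rather than the paper's settings.

```python
import numpy as np


def sample_params(rng):
    """Draw fresh degradation parameters for each sample (ranges are illustrative)."""
    return {
        "noise_sigma": float(rng.uniform(0.01, 0.08)),
        "fade": float(rng.uniform(0.4, 0.9)),
        "hole_frac": float(rng.uniform(0.1, 0.3)),
    }


def degrade(clean, rng, noise_sigma=0.05, fade=0.6, hole_frac=0.25):
    """Degrade a float image in [0, 1] of shape (H, W); return (degraded, damage_mask)."""
    h, w = clean.shape
    # Fading: compress contrast toward mid-grey.
    out = 0.5 + fade * (clean - 0.5)
    # Additive Gaussian noise, e.g. scanner or grain noise.
    out = out + rng.normal(0.0, noise_sigma, size=clean.shape)
    out = np.clip(out, 0.0, 1.0)
    # Tear-like occlusion: a randomly placed rectangle of missing content.
    mh, mw = max(1, int(h * hole_frac)), max(1, int(w * hole_frac))
    top = int(rng.integers(0, h - mh + 1))
    left = int(rng.integers(0, w - mw + 1))
    mask = np.zeros((h, w), dtype=bool)
    mask[top:top + mh, left:left + mw] = True
    out[mask] = 1.0  # missing area rendered as blank
    return out, mask
```

Calling `degrade(clean, rng, **sample_params(rng))` for every training sample yields a different damage pattern each time, and the returned mask doubles as a region-level annotation for the synthetic pair.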

6. Results and Discussion

Experiments show that the proposed GAN-based reconstruction framework substantially improves the quality and realism of degraded vintage prints. Quantitatively, structural similarity, perceptual quality, and texture preservation improve consistently over conventional inpainting and CNN-based restoration. The framework reconstructs large missing areas while preserving edge continuity and tonality. Qualitative evaluation by domain specialists confirms that restored outputs retain stylistic integrity and historical character without appearing over-smoothed or artificial. The findings also show high robustness across degradation types, which supports combining adversarial learning with style-aware and content-preserving constraints for digital restoration tasks.

Table 2

Table 2 Quantitative Performance Comparison of Restoration Methods

| Model / Method | SSIM | PSNR (dB) | LPIPS | Texture Fidelity (%) |
|---|---|---|---|---|
| Traditional Inpainting | 0.71 | 22.8 | 0.312 | 68.4 |
| PDE-Based Restoration | 0.74 | 24.1 | 0.284 | 71.6 |
| CNN Autoencoder | 0.81 | 26.9 | 0.198 | 79.3 |
| U-Net Restoration | 0.84 | 27.8 | 0.176 | 82.5 |
| GAN (Baseline) | 0.88 | 29.6 | 0.142 | 87.9 |

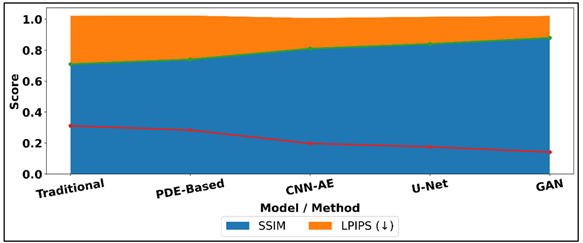

Table 2 reports quantitative results for the restoration approaches and shows a steady improvement from classical methods to GAN-based models. Figure 3 compares SSIM and LPIPS across the restoration methods. Traditional inpainting has the lowest SSIM (0.71) and PSNR (22.8 dB), reflecting poor noise handling and weak structural preservation, and its high LPIPS (0.312) indicates a large perceptual difference between the original prints and the inpainted results.

Figure 3 Comparison of SSIM and LPIPS Across Image Restoration Methods

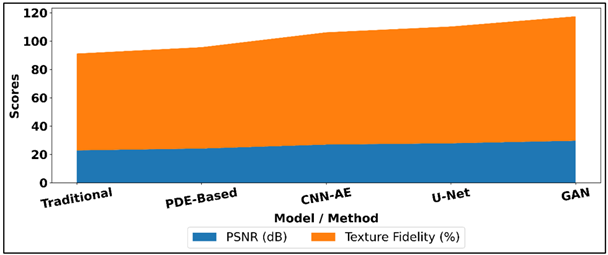
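For readers unfamiliar with the PSNR column in Table 2, the sketch below shows the standard definition, PSNR = 10 log10(MAX² / MSE), in NumPy. It is illustrative only: the paper does not state how its metrics were computed, and the function name `psnr` and the assumption that images are scaled to [0, 1] are choices made for this example.

```python
import numpy as np


def psnr(reference, restored, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images.

    max_value is the largest possible pixel value (1.0 for images in [0, 1],
    255 for 8-bit images). Identical images yield infinity.
    """
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(restored, dtype=np.float64)
    mse = np.mean((ref - out) ** 2)
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


# Example: a uniform error of 0.1 on a [0, 1] image.
reference = np.zeros((8, 8))
restored = np.full((8, 8), 0.1)
score = psnr(reference, restored)
```

In this example the mean squared error is 0.01, so the score is 20 dB. Because the scale is logarithmic, the gap between two methods in Table 2 is best read as a difference in dB, not as a percentage.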

PDE-based restoration improves modestly on traditional inpainting in structural similarity (SSIM 0.74) and texture fidelity (71.6%), but it remains seriously limited in reconstructing complex artistic motifs. Deep learning techniques perform much better than the classical ones. The CNN autoencoder reaches an SSIM of 0.81 and a PSNR of 26.9 dB thanks to more effective feature learning, although its moderate LPIPS (0.198) suggests perceptual over-smoothing. Figure 4 visualizes PSNR and texture fidelity across the restoration methods. The U-Net model further improves continuity and texture preservation through its skip connections, with an SSIM of 0.84 and a texture fidelity of 82.5%.

Figure 4 Visualization of PSNR and Texture Fidelity Across Restoration Methods

The baseline GAN performs best on every metric, with an SSIM of 0.88, a PSNR of 29.6 dB, the lowest LPIPS (0.142), and the highest texture fidelity (87.9%). These findings support the claim that GANs can reconstruct fine textures with perceptual realism, which makes them better suited to restoring visually and historically nuanced old prints.
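To make the size of these gains concrete, the following short calculation compares the baseline GAN with traditional inpainting using only the values reported in Table 2. The dictionary layout and the helper `relative_change` are conventions of this sketch. PSNR and texture fidelity are reported as absolute differences, since PSNR is already logarithmic and texture fidelity is already a percentage.

```python
# Values copied from Table 2: (SSIM, PSNR in dB, LPIPS, texture fidelity in %).
TABLE_2 = {
    "Traditional Inpainting": (0.71, 22.8, 0.312, 68.4),
    "PDE-Based Restoration": (0.74, 24.1, 0.284, 71.6),
    "CNN Autoencoder": (0.81, 26.9, 0.198, 79.3),
    "U-Net Restoration": (0.84, 27.8, 0.176, 82.5),
    "GAN (Baseline)": (0.88, 29.6, 0.142, 87.9),
}


def relative_change(old, new):
    """Percentage change from old to new (negative means a decrease)."""
    return 100.0 * (new - old) / old


old_ssim, old_psnr, old_lpips, old_tex = TABLE_2["Traditional Inpainting"]
new_ssim, new_psnr, new_lpips, new_tex = TABLE_2["GAN (Baseline)"]

ssim_gain_pct = relative_change(old_ssim, new_ssim)      # higher is better
lpips_change_pct = relative_change(old_lpips, new_lpips)  # lower is better
psnr_gain_db = new_psnr - old_psnr
texture_gain_points = new_tex - old_tex

print(f"SSIM: {ssim_gain_pct:+.1f}%")
print(f"LPIPS: {lpips_change_pct:+.1f}%")
print(f"PSNR: {psnr_gain_db:+.1f} dB")
print(f"Texture fidelity: {texture_gain_points:+.1f} points")
```

Relative to traditional inpainting, the baseline GAN raises SSIM by about 23.9%, lowers LPIPS by about 54.5%, and gains 6.8 dB in PSNR and 19.5 percentage points in texture fidelity.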

7. Conclusion

This paper introduced a GAN-based architecture for reconstructing damaged vintage prints, addressing key challenges in digital art preservation and cultural heritage protection. By combining adversarial learning with content-preserving and style-aware constraints, the proposed method produces a balanced restoration that improves visual clarity while preserving the authenticity of historical pieces. Compared with traditional restoration and conventional deep learning approaches, the framework better restores fine textures, structural continuity, and stylistic consistency across degradation patterns that include noise, fading, stains, and physical damage. The curated dataset, drawn from archives, museums, and personal collections, allowed the model to learn real degradation properties and adapt to different print styles. A training methodology built on strong data augmentation and multi-objective optimization helped stabilize convergence and achieve good generalization to real-world restoration cases. Both the quantitative measures and the qualitative assessment by experts indicate that the system produces visually plausible and culturally sensitive reconstructions. Beyond its technical performance, the study shows that GAN-based restoration systems have broader potential for supporting large-scale digitization, reducing conservator workload, and enabling education, research, and public engagement with fragile objects. The framework is intended as a decision-support tool, not a replacement for human expertise, so that conservators can explore restoration options while upholding ethical standards.

Future research can focus on incorporating multimodal data, including textual annotations and material metadata, and on extending the framework to other types of cultural artifacts, such as manuscripts, paintings, and textiles.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Cao, J., Hu, X., Cui, H., Liang, Y., and Chen, Z. (2023). A Generative Adversarial Network Model Fused with a Self-Attention Mechanism for the Super-Resolution Reconstruction of Ancient Murals. IET Image Processing, 17(9), 2336–2349. https://doi.org/10.1049/ipr2.12795

Chen, Q., and Shao, Q. (2024). Single Image Super-Resolution Based on Trainable Feature Matching Attention Network. Pattern Recognition, 149, 110289. https://doi.org/10.1016/j.patcog.2024.110289

Chen, Z., Zhang, Y., Gu, J., Zhang, Y., Kong, L., and Yuan, X. (2022). Cross Aggregation Transformer for Image Restoration (arXiv:2211.13654). arXiv. https://doi.org/10.1109/ICCV51070.2023.01131

Chu, S.-C., Dou, Z.-C., Pan, J.-S., Weng, S., and Li, J. (2024). HMANet: Hybrid Multi-Axis Aggregation Network for Image Super-Resolution (arXiv:2405.05001). arXiv. https://doi.org/10.1109/CVPRW63382.2024.00629

Gao, H., and Dang, D. (2024). Learning Accurate and Enriched Features for Stereo Image Super-Resolution (arXiv:2406.16001). arXiv. https://doi.org/10.1016/j.patcog.2024.111170

Hassanin, M., Anwar, S., Radwan, I., Khan, F. S., and Mian, A. (2024). Visual Attention Methods in Deep Learning: An In-Depth Survey. Information Fusion, 108, 102417. https://doi.org/10.1016/j.inffus.2024.102417

Lee, D., Yun, S., and Ro, Y. (2024). Partial Large Kernel CNNs for Efficient Super-Resolution (arXiv:2404.11848). arXiv.

Lepcha, D. C., Goyal, B., Dogra, A., and Goyal, V. (2023). Image Super-Resolution: A Comprehensive Review, Recent Trends, Challenges and Applications. Information Fusion, 91, 230–260. https://doi.org/10.1016/j.inffus.2022.10.007

Li, J., Pei, Z., Li, W., Gao, G., Wang, L., Wang, Y., and Zeng, T. (2024). A Systematic Survey of Deep Learning-Based Single-Image Super-Resolution. ACM Computing Surveys, 56(11), Article 249. https://doi.org/10.1145/3659100

Liu, H., Li, Z., Shang, F., Liu, Y., Wan, L., Feng, W., and Timofte, R. (2024). Arbitrary-Scale Super-Resolution Via Deep Learning: A Comprehensive Survey. Information Fusion, 102, 102015. https://doi.org/10.1016/j.inffus.2023.102015

Moser, B. B., Raue, F., Frolov, S., Palacio, S., Hees, J., and Dengel, A. (2023). Hitchhiker’s Guide to Super-Resolution: Introduction and Recent Advances. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(8), 9862–9882. https://doi.org/10.1109/TPAMI.2023.3243794

Vo, K. D., and Bui, L. T. (2023). StarSRGAN: Improving Real-World Blind Super-Resolution (arXiv:2307.16169). arXiv.

Wan, C., Yu, H., Li, Z., Chen, Y., Zou, Y., Liu, Y., Yin, X., and Zuo, K. (2023). Swift Parameter-Free Attention Network for Efficient Super-Resolution (arXiv:2311.11277). arXiv. https://doi.org/10.1109/CVPRW63382.2024.00628

Wang, X., Sun, L., Chehri, A., and Song, Y. (2023). A Review of GAN-Based Super-Resolution Reconstruction for Optical Remote Sensing Images. Remote Sensing, 15(20), 5062. https://doi.org/10.3390/rs15205062

Yang, L., Zhang, R.-Y., Li, L., and Xie, X. (2021). SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021) (11863–11874). PMLR.

Ye, S., Zhao, S., Hu, Y., and Xie, C. (2023). Single-Image Super-Resolution Challenges: A Brief Review. Electronics, 12(13), 2975. https://doi.org/10.3390/electronics12132975

Zhang, D., Huang, F., Liu, S., Wang, X., and Jin, Z. (2022). SwinFIR: Revisiting the SwinIR with Fast Fourier Convolution and Improved Training for Image Super-Resolution (arXiv:2208.11247). arXiv. https://arxiv.org/abs/2208.11247

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2025. All Rights Reserved.