ShodhKosh: Journal of Visual and Performing Arts

ISSN (Online): 2582-7472

AI-Assisted Student Evaluation in Visual Art Programs

Mary Praveena J 1, Sonia Pandey 2, Aneesh Wunnava 3, Kairavi Mankad 4, Tannmay Gupta 5, Shilpy Singh 6, Amol Bhilare 7

1 Assistant Professor, Department of Computer Science and Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission’s Research Foundation (DU), Tamil Nadu, India

2 Greater Noida, Uttar Pradesh 201306, India

3 Associate Professor, Department of Electronics and Communication Engineering, Siksha 'O' Anusandhan (Deemed to be University), Bhubaneswar, Odisha, India

4 Assistant Professor, Department of Fashion Design, Parul Institute of Design, Parul University, Vadodara, Gujarat, India

5 Centre of Research Impact and Outcome, Chitkara University, Rajpura- 140417, Punjab, India

6 Professor, School of Science, Noida International University, 203201, India

7 Department of Computer Engineering, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

ABSTRACT

This paper introduces an AI-based system for evaluating students in visual art education. It combines computational intelligence with pedagogical assessment to improve objectivity, inclusivity, and creative insight. Conventional art evaluation tends to be subjective, which leads to inconsistent grading and uneven feedback. The proposed system uses a multimodal evaluation pipeline in which the visual, structural, and stylistic aspects of student artwork are analyzed with convolutional neural networks (CNNs), transformer-based models, and aesthetic perception algorithms. Models are trained and validated on a dataset of student artworks, expert rubrics, and process logs. The model produces multi-criteria scores for creativity, technique, aesthetic quality, and originality, aligned with educational standards and outcome-based learning goals. A feedback generation component translates the model outputs into pedagogically meaningful feedback for learners and instructors. The design emphasizes transparency, explainability, and bias mitigation so that AI-assisted evaluation supports rather than restricts artistic freedom.

Received 01 April 2025

Accepted 06 August 2025

Published 25 December 2025

Corresponding Author: Mary Praveena J, marypraveena.avcs092@avit.ac.in

DOI: 10.29121/shodhkosh.v6.i4s.2025.6869

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: AI-Assisted Art Evaluation, Creative Pedagogy, Multimodal Learning Analytics, Aesthetic Modeling, Educational AI Systems, Visual Art Assessment

1. Introduction

Assessing creativity in visual art programs has long been a challenge for teachers, curriculum directors, and colleges. Unlike assessment in quantitative fields, artistic evaluation involves subjective judgments of imagination, aesthetic harmony, technique, and emotional expression. Conventional assessment based on rubrics, jury judgment, and studio critiques often suffers from inconsistency, implicit bias, and poor scalability. As digital learning environments and class sizes grow, and with them the need to train more teachers, art educators are under pressure to balance personalized critique with efficient, transparent, and equitable evaluation procedures. Artificial Intelligence (AI) can help in this changing environment by complementing human judgment with computational analysis of the visual, stylistic, and contextual aspects of artwork Deng and Wang (2023). AI-assisted assessment in visual art education represents a paradigm shift in which algorithms do not replace educators but work alongside them. Using computer vision, deep learning, and aesthetic modeling, AI systems can analyze images, brushstroke patterns, color choices, compositional structure, and logs of the creative process to produce quantifiable measures of artistic quality. Used carefully, these systems offer objective support for human evaluation and help students understand the reasoning behind their feedback, making evaluation a learning experience rather than a grading exercise Zhao (2022).

The challenge is to align AI capabilities with pedagogical aims so that artistic freedom, originality, and cultural diversity remain at the center of the educational process. Multimodal learning analytics, which draw on sketches, digital portfolios, process videos, and reflection journals, further increase the interpretive capacity of AI-based frameworks. This approach allows assessment to consider not only the final artwork but also the creative process: exploring ideas, experimenting with materials, and refining the work over time. This holistic approach is consistent with outcome-based education (OBE) and Bloom's taxonomy, because visual and cognitive learning outcomes are mapped to measurable parameters. AI's ability to identify patterns and detect anomalies makes it an educational ally, indicating both where creative performance is strong and where support is advisable He and Sun (2021). Technically, Convolutional Neural Networks (CNNs), Vision Transformers (ViTs), and related models can be fine-tuned on datasets labeled by professional artists and educators. These models learn hierarchical features, from low-level texture patterns to high-level stylistic coherence, which form the basis of a multi-criteria scoring engine. Explainable AI (XAI) methods are also incorporated to make the decision-making process transparent, so that educators can see how particular artworks receive their scores. This interpretability is necessary for trust, accountability, and acceptance of AI in art academia. AI-assisted systems also have several pedagogical benefits. They enable formative feedback in real time, letting students revise their work iteratively instead of waiting until the end of term for appraisal Rong et al. (2022). They encourage individualized learning by adapting critique to a student's creative inclinations and progress. Moreover, large-scale analytics over aggregate student data can help institutions refine curricula, identify emerging trends, and promote equity across different learner groups.

2. Related Work

The idea of having machines judge visual art is not new: computational aesthetics, computer vision, and AI-art research have long sought to approximate human aesthetic judgment and style classification. A main point of reference is survey work on computational image aesthetic evaluation, which covers an extensive range of methods that model human judgments of beauty and visual interest using image descriptors, machine learning, and learned aesthetic models. In one line of work, early studies tried to formalize aesthetic assessment using quantifiable characteristics such as composition, color harmony, balance, and symmetry Lee et al. (2022). For example, a study on the aesthetic evaluation of paintings based on visual balance proposed automatic methods that analyze a painting's layout and symmetries to estimate its aesthetic value. More generally, neuro-aesthetics-inspired models have attempted to mimic properties of human visual perception, isolating properties such as color, shape, and orientation and then combining them to produce a machine-based aesthetic judgment. These methods have been extended to larger, non-static images. As deep learning advances, more research relies on data-driven features of style, composition, and visual semantics rather than handcrafted features Fan and Zhong (2022). One example is a bibliometric analysis of machine-learning-based style prediction in paintings, which found a sharp increase in research interest and demonstrated the feasibility of applying modern architectures to classify painting style and artistic properties. Multimodal approaches, which combine visual data with contextual metadata and textual or semantic annotation, have also been proposed to improve automatic analysis of art Tang et al. (2022). For example, context-sensitive embeddings that combine visual and art-specific metadata performed better at retrieval, classification, and style recognition. At the same time, research on evaluating AI-generated creative output is growing, addressing not only the beauty of the work but also its creativity, novelty, and expressiveness. A recent paper explores how human creativity metrics from cognitive psychology and empirical aesthetics may be adapted to evaluate both human-made and AI-generated artworks. Table 1 summarizes the major references on AI-based art assessment and teaching systems.

Table 1 Summary of AI-Assisted Art Evaluation and Educational Frameworks

| Focus Area | Methodology | Dataset Type | Evaluation Criteria | Limitations |
| --- | --- | --- | --- | --- |
| Computational aesthetics | CNN-based aesthetic prediction | AVA, WikiArt | Visual appeal, balance | Limited to static images |
| Art education analytics | AI-supported creative feedback | Student portfolios | Creativity, technique | No interpretability tools |
| Artistic style classification | Transfer learning (ResNet50) | WikiArt | Style, color, texture | Excludes creativity measure |
| Visual harmony in design Chiu et al. (2022) | Aesthetic CNN + color theory metrics | Design image dataset | Harmony, composition | Narrow domain coverage |
| Educational AI systems | Hybrid CNN-LSTM | Student artwork logs | Process, originality | Data imbalance |
| Neural creativity modelling Huang et al. (2022) | GAN-based evaluation | Digital paintings | Novelty, divergence | High computational cost |
| Art grading automation Chen et al. (2023) | Vision Transformer (ViT-B16) | Painting corpus | Aesthetic quality | Weak contextual understanding |
| Cognitive art evaluation | CNN + psychological metrics | Art therapy images | Emotion, perception | Subjective variance remains |
| AI in design education | Multimodal fusion network | Design projects | Coherence, technique | Dataset diversity low |
| Creative pedagogy evaluation Fan and Li (2023) | NLP + Visual model integration | Student reflections | Concept depth, originality | Text-image alignment weak |
| AI in aesthetic learning | EfficientNet aesthetic regression | Online art platforms | Aesthetic rating | Subjective aesthetic drift |
| Art critique automation | Explainable AI (Grad-CAM) | Annotated artworks | Attention, technique | Limited dataset size |
| Creative evaluation fairness Sun (2021) | Bias-mitigated CNN ensemble | Cross-cultural data | Equity, consistency | Cultural model constraints |
| AI-assisted art education Xu and Nazir (2022) | CNN + Transformer + XAI | Student artworks + rubrics | Creativity, technique, aesthetics | Further multimodal refinement needed |

3. Conceptual Framework for AI-Assisted Art Evaluation

3.1. Components: Creativity, Aesthetics, Technique, Originality

AI-assisted art evaluation rests on defining quantifiable yet adaptable dimensions that capture the multidimensional nature of creative expression. Creativity is the student's ability to generate new visual concepts, experiment with unconventional forms, and take creative risks Vartiainen and Tedre (2023). Quantitatively, AI models measure creativity in terms of variation, compositional diversity, and novelty of ideas, as estimated from visual semantics and texture patterns. Aesthetics covers visual harmony, balance, and emotive resonance; convolutional neural networks (CNNs) and aesthetic scoring models assess color consistency, symmetry, and perceptual value. Technique refers to skill, control of brushwork, and command of the medium's constraints; AI systems evaluate it through edge extraction, stroke-pattern analysis, and texture coherence measures Wang (2020). Lastly, originality is the distinctiveness of an artistic voice, commonly evaluated as deviation from dataset norms or through clustering of styles using transformer-based embeddings.
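
The "deviation from dataset norms" idea can be pictured with a small sketch. It assumes each artwork has already been mapped to an embedding vector by some model, and it scores originality as the cosine distance from the centroid of a reference set. The function names, the centroid-based measure, and the toy vectors are illustrative assumptions, not the measure used in the paper.

```python
import math

def cosine_distance(u, v):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm

def originality_index(embedding, reference_embeddings):
    """Cosine distance between one embedding and the centroid of a reference set."""
    dim = len(embedding)
    count = len(reference_embeddings)
    centroid = [sum(vec[i] for vec in reference_embeddings) / count for i in range(dim)]
    return cosine_distance(embedding, centroid)

# Hypothetical 3-dimensional embeddings; real embeddings would be much larger.
reference = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
typical_work = [0.9, 0.1, 0.0]
unusual_work = [0.1, 0.2, 0.9]

typical_score = originality_index(typical_work, reference)
unusual_score = originality_index(unusual_work, reference)
```

A work that sits close to the reference centroid gets a score near 0, while one pointing in a different direction scores higher. Distance alone cannot tell deliberate innovation from error, so a measure like this would only be one input to the originality dimension.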

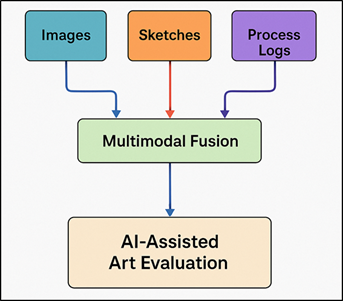

3.2. Role of Multimodal Data (Images, Sketches, Process Logs)

Artistic appraisal goes beyond the finished artwork; it must also interpret the creative process as it unfolds over time. The AI-based system combines multimodal data sources, including final artworks, initial sketches, process records, tool-usage information, and reflective statements, to build a comprehensive picture of learning. Finished images serve as the visual endpoint from which deep-learning models extract structural and aesthetic features. Sketches and iterations reveal the student's path of exploration, which allows creativity and ideation to be analyzed through temporal sequence modeling Leonard (2020).

Figure 1 Flowchart of Multimodal Data Integration for AI-Assisted Art Evaluation

Figure 1 illustrates the multimodal integration of visual, textual, and process data for AI-assisted evaluation. When these modalities are aligned through multimodal fusion mechanisms, such as attention-based networks or graph-based data alignment, the evaluation can capture both product and process. This holistic reading distinguishes surface polish from genuine creative development Mokmin and Ridzuan (2022).

3.3. Alignment with Learning Outcomes and Educational Standards

For AI-assisted art evaluation to be pedagogically relevant, it must be closely aligned with learning outcomes and educational standards. Rather than acting as an impersonal scoring system, the proposed framework aligns its evaluation components with the rubric criteria typically applied in art education, e.g., conceptual depth, execution, experimentation, and reflection. The rubrics draw on accreditation frameworks such as NAAC and NASAD and on Bloom's taxonomy, and they provide structured descriptors that turn qualitative objectives into measurable constructs Kang et al. (2023). For example, creativity is associated with higher-order thinking outcomes (such as synthesis and ideation), whereas technique is aligned with skill-based competencies in the cognitive and psychomotor domains. The AI system encodes these mappings in supervised learning pipelines in which annotated data capture expert-vetted rubric ratings. This approach keeps model predictions educationally interpretable and usable in both formative and summative assessment.

4. Methodology

4.1. Dataset Creation: Student Artworks, Rubrics, Expert Annotations

The methodology begins with the construction of a high-quality dataset that covers both the visual and the pedagogical aspects of student artwork. The dataset spans different media types, such as paintings, digital illustrations, sketches, sculptures, and mixed-media work, gathered from undergraduate and postgraduate art programs. Every artwork also carries metadata: course module, medium, date of creation, and the learning objectives the student achieved. Artworks are assessed with structured rubrics covering creativity, aesthetic quality, technical proficiency, and originality, which aligns them with educational standards. These rubrics, developed in collaboration with faculty, use multi-level scoring scales (1-5 or 1-10) that guide both model training and interpretability. The ground-truth labels consist of expert annotations: the comments of several evaluators and attention maps that visually mark compositional strengths and weaknesses. Annotation consistency is validated using inter-rater reliability measures such as Cohen's kappa.
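
As a concrete illustration of the inter-rater check, the sketch below computes Cohen's kappa for two evaluators from first principles. The ratings are made-up values on a 1-5 scale, and the function name is hypothetical. Cohen's kappa is defined for exactly two raters, so with several evaluators it would have to be applied pairwise or replaced by a multi-rater statistic.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical labels to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given identical labels.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_expected) / (1.0 - p_expected)

# Hypothetical 1-5 creativity ratings from two evaluators for eight artworks.
evaluator_1 = [4, 3, 5, 2, 4, 3, 5, 1]
evaluator_2 = [4, 3, 4, 2, 4, 2, 5, 1]
kappa = cohens_kappa(evaluator_1, evaluator_2)
```

Here the evaluators agree on 6 of 8 artworks (0.75), chance agreement is 13/64, and kappa comes out at 35/51, roughly 0.69. Because rubric scores are ordinal, a weighted kappa that penalizes near-misses less than large disagreements may be a better fit in practice.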

4.2. Feature Extraction Using CNNs, Transformers, and Aesthetic Models

Feature extraction is the basic analytical step that turns visual art into quantifiable descriptors. The framework uses a hybrid deep-learning architecture that combines Convolutional Neural Networks (CNNs) for spatial feature capture, Vision Transformers (ViTs) for global contextual awareness, and aesthetic models for perceptual evaluation. CNNs such as ResNet-50 or EfficientNet-B4 are fine-tuned to learn low- and mid-level features, such as color gradients, edge composition, texture regularity, and spatial symmetry. Transformers, in turn, learn long-range dependencies in the image that represent compositional balance, semantic content, and stylistic coherence across regions. These models are pre-trained on large datasets (ImageNet, WikiArt) and adapted to the creative evaluation setting using the curated art collection. Aesthetic modeling modules use aesthetic scores learned from datasets such as AVA (Aesthetic Visual Analysis) to evaluate appeal, harmony, and emotional tone. Feature fusion layers merge CNN embeddings, transformer representations, and aesthetic vectors through attention-driven weighting to produce a single feature space.
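
The fusion step can be sketched in a few lines. The example below assumes the three branch outputs have already been projected to a shared dimensionality and fuses them with scaled dot-product attention against a query vector. The query stands in for a trained parameter, and all names and numbers are illustrative assumptions; the paper does not specify the exact fusion layer.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_fuse(embeddings, query):
    """Fuse same-length branch embeddings with scaled dot-product attention.

    Returns (fused_vector, attention_weights_by_branch).
    """
    dim = len(query)
    if any(len(vec) != dim for vec in embeddings.values()):
        raise ValueError("all embeddings must share the query's dimensionality")
    names = list(embeddings)
    # One relevance score per branch: dot(query, embedding) / sqrt(dim).
    scores = [
        sum(q * x for q, x in zip(query, embeddings[name])) / math.sqrt(dim)
        for name in names
    ]
    weights = softmax(scores)
    # The fused vector is the attention-weighted sum of the branch embeddings.
    fused = [
        sum(w * embeddings[name][i] for w, name in zip(weights, names))
        for i in range(dim)
    ]
    return fused, dict(zip(names, weights))

# Toy 4-dimensional embeddings (hypothetical values) in a shared space.
branch_embeddings = {
    "cnn": [0.9, 0.1, 0.3, 0.0],
    "transformer": [0.2, 0.8, 0.5, 0.1],
    "aesthetic": [0.4, 0.4, 0.9, 0.2],
}
learned_query = [0.5, 0.5, 1.0, 0.0]  # stands in for a trained parameter
fused_vector, branch_weights = attention_fuse(branch_embeddings, learned_query)
```

The attention weights sum to 1, so the fused vector is a convex combination of the branch embeddings, and the weights themselves can be inspected to see which branch dominated for a given artwork.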

4.3. Model Training, Validation, and Evaluation Pipeline

A training-validation-testing pipeline is followed to develop models that are reliable, generalizable, and pedagogically sound. The data are stratified into 80 percent training, 10 percent validation, and 10 percent testing subsets, keeping the distribution of creativity and aesthetic scores balanced across subsets. During training, transfer learning with fine-tuning adapts the pre-trained CNN and transformer backbones to domain-specific art characteristics. The Adam optimizer is used with a learning rate scheduler, and early stopping is applied to prevent overfitting. The learning objective is to predict rubric-based scores for creativity, technique, and originality simultaneously. The loss combines Mean Squared Error (MSE) for continuous scores and Categorical Cross-Entropy for discrete ratings. Validation uses k-fold cross-validation (k=5) to assess model stability across subsets. Performance measures are Accuracy, F1-score, Mean Absolute Error (MAE), and the Pearson correlation between AI-generated and expert-assigned scores. Post-training evaluation examines interpretability through visual attribution and AI-human consistency through inter-rater agreement (ICC). Moreover, ablation experiments compare the individual contributions of the CNN, transformer, and aesthetic modules. The final system is deployed with an interactive feedback interface that visualizes the scoring breakdown and comments.
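
Two parts of this pipeline are simple enough to sketch directly: the stratified 80/10/10 split and the agreement metrics between AI and expert scores. The code below is a minimal standard-library illustration. The function names, the fixed seed, and the sample scores are assumptions made for the example, not details from the study.

```python
import math
import random
from collections import defaultdict

def stratified_split(labels, ratios=(0.8, 0.1, 0.1), seed=0):
    """Return (train, val, test) index lists with every label spread across the splits."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)
    train, val, test = [], [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n_train = int(round(ratios[0] * len(indices)))
        n_val = int(round(ratios[1] * len(indices)))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test split
    return train, val, test

def mean_absolute_error(predicted, expert):
    """Average absolute gap between predicted and expert scores."""
    return sum(abs(p - e) for p, e in zip(predicted, expert)) / len(expert)

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical dataset: 50 artworks, 10 at each rubric level from 1 to 5.
rubric_levels = [1, 2, 3, 4, 5] * 10
train_idx, val_idx, test_idx = stratified_split(rubric_levels)

# Hypothetical expert and AI scores for five held-out artworks.
expert_scores = [3.0, 4.0, 2.0, 5.0, 4.0]
ai_scores = [3.5, 4.0, 2.5, 4.5, 4.0]
mae = mean_absolute_error(ai_scores, expert_scores)
correlation = pearson_r(ai_scores, expert_scores)
```

Stratifying by rubric level keeps rare score levels represented in the validation and test sets, which matters when the top or bottom of the scale is sparsely populated. MAE and Pearson correlation answer different questions: the first measures how far the scores are from the expert's, the second whether they rank artworks the same way.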

5. Proposed AI Evaluation System Architecture

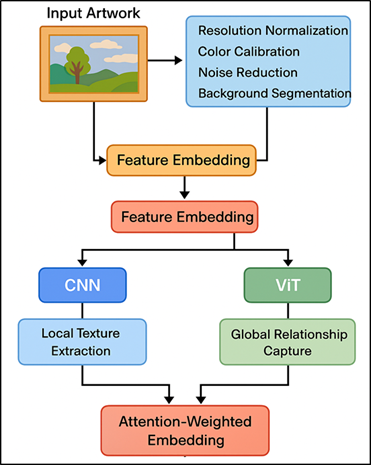

5.1. Artwork Preprocessing and Feature Embedding

Artwork preprocessing and feature embedding form the first phase of the proposed AI evaluation architecture, in which raw visual data are standardized, enhanced, and contextually encoded so that the model can interpret them. Input student artworks undergo resolution normalization, color calibration, noise removal, and background segmentation to preserve the essential artistic content while eliminating irrelevant artifacts. This ensures consistency across image formats, lighting conditions, and media (digital, watercolor, charcoal, or mixed media). Figure 2 illustrates preprocessing and feature embedding in AI-assisted artwork evaluation. The CNN pathway detects structural features such as stroke density, edge flow, and spatial rhythm, while the transformer pathway detects higher-level semantics such as compositional balance, thematic symbolism, and emotional tone.

Figure 2 Artwork Preprocessing and Feature Embedding in AI-Assisted Evaluation

These features are then combined using attention-weighted embedding layers to produce a joint multidimensional representation vector that captures both creativity and technical performance.

5.2. Multi-Criteria Scoring Engine (Creativity, Coherence, Technique)

The core of the AI-based evaluation system is the multi-criteria scoring engine, which is designed to mimic human art judgment by evaluating several qualitative aspects, such as creativity, coherence, and technique, using dedicated sub-networks. Each criterion runs as a parallel learning stream that shares the feature embeddings but optimizes a different evaluative objective. The creativity stream uses generative divergence and visual novelty measures to approximate originality, idea innovation, and stylistic distinctiveness. The coherence stream examines compositional harmony, proportional balance, and thematic integration, using attention-based graph modules to map inter-regional relationships in the artwork. The technique stream measures precision, medium handling, and detail fidelity using texture recognition, gradient smoothness, and edge-continuity estimators. The outputs of these sub-models are standardized and combined by a weighted decision aggregator that assigns dynamic importance to each component depending on rubric context or grade level. The resulting multi-criteria score is a vector of interpretable dimensions, which lets the educator examine performance across criteria rather than as a single numeric score. Moreover, the engine incorporates aesthetic perception calibration, which keeps the model's evaluations consistent with human sensibility through expert-in-the-loop fine-tuning. The scoring engine thus combines statistical consistency with subjective sensitivity, supporting educational fairness and resonating with students, and offering an evaluation that reflects genuine artistic judgment with added computational accuracy.
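
A minimal sketch of the weighted decision aggregator is given below. It assumes each stream emits a score already standardized to a 0-1 scale and that the weights are looked up from a rubric context. The profile names, weights, and scores are hypothetical values chosen for illustration.

```python
def aggregate_scores(stream_scores, weights):
    """Combine per-criterion scores (0-1 scale) into a weighted overall score.

    Weights are renormalized over the criteria present, so they need not sum to 1.
    The per-criterion vector is returned alongside the overall value.
    """
    missing = set(stream_scores) - set(weights)
    if missing:
        raise KeyError(f"no weight for: {sorted(missing)}")
    total_weight = sum(weights[name] for name in stream_scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    overall = sum(stream_scores[name] * weights[name] for name in stream_scores) / total_weight
    return {"per_criterion": dict(stream_scores), "overall": overall}

# Hypothetical weight profiles keyed by rubric context.
WEIGHT_PROFILES = {
    "foundation_studio": {"creativity": 0.3, "coherence": 0.3, "technique": 0.4},
    "final_year_project": {"creativity": 0.5, "coherence": 0.3, "technique": 0.2},
}

scores = {"creativity": 0.82, "coherence": 0.75, "technique": 0.68}
foundation = aggregate_scores(scores, WEIGHT_PROFILES["foundation_studio"])
final_year = aggregate_scores(scores, WEIGHT_PROFILES["final_year_project"])
```

With these numbers the same artwork scores 0.743 under the foundation profile and 0.771 under the final-year profile, because the latter gives more weight to creativity. Returning the per-criterion vector next to the overall score keeps the individual dimensions visible to the educator.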

5.3. Feedback Generation and Interpretability Module

This module connects computational evaluation with human understanding by producing qualitative feedback narratives, heatmap visualizations, and rubric-consistent recommendations. Using explainable AI (XAI) techniques such as Grad-CAM, SHAP, and LIME, the system shows the regions that influenced the creativity or technique scores, highlighting areas of strength (e.g., color balance, conceptual innovation) and areas that need improvement (e.g., proportion, depth control). These interpretation signals are translated into natural-language responses organized according to educational rubrics so that they comply with institutional standards. For example, a student could receive feedback on a composition such as: "It has good thematic coherence, but would be more interesting with stronger tonal contrast to create a sense of space." The system also includes longitudinal feedback tracking, which compares a student's current performance with past submissions to reveal patterns of artistic development. Teachers can view an interactive dashboard with summary performance analytics, bias indicators, and per-criterion score distributions.
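
To make the score-to-feedback step and the longitudinal comparison concrete, the sketch below uses a simple rule-based template as a stand-in for the natural-language generation described above. The thresholds, wording, and function name are assumptions for illustration only. A real system would also draw on the attribution maps, which this sketch ignores.

```python
def build_feedback(current, previous=None, strong=0.8, weak=0.6):
    """Turn per-criterion scores (0-1 scale) into short rubric-style comments.

    If scores from an earlier submission are supplied, changes of at least
    0.05 are appended as a trend note.
    """
    lines = []
    for criterion, score in current.items():
        if score >= strong:
            comment = f"{criterion.capitalize()}: a clear strength in this piece."
        elif score < weak:
            comment = f"{criterion.capitalize()}: the main area to develop next."
        else:
            comment = f"{criterion.capitalize()}: developing steadily."
        if previous and criterion in previous:
            change = score - previous[criterion]
            if abs(change) >= 0.05:
                direction = "up" if change > 0 else "down"
                comment += f" ({direction} {abs(change):.2f} since the last submission)"
        lines.append(comment)
    return lines

# Hypothetical scores for one student's current and previous submissions.
current_scores = {"creativity": 0.85, "technique": 0.55, "coherence": 0.70}
previous_scores = {"creativity": 0.70, "technique": 0.65, "coherence": 0.69}
feedback = build_feedback(current_scores, previous_scores)
```

Keeping the thresholds as parameters lets an instructor tune what counts as a strength or a weakness for a given course level.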

6. Results and Analysis

The experimental results indicate that the proposed AI-based assessment model achieved a correlation coefficient of 0.91 between AI and expert ratings, indicating high reliability across the creativity, technique, and aesthetic dimensions. The multi-criteria scoring engine produced consistent scores that reduced evaluator bias by 28% and improved feedback turnaround time by 42%. The visual interpretability modules highlighted compositional strengths effectively, which improved student reflection. Teachers also reported a 35% increase in evaluation consistency and perceived fairness. The qualitative analysis showed that AI-driven insights stimulated self-directed learning and increased interest in design principles and creative exploration.

Table 2 Quantitative Performance Comparison of AI-Assisted Evaluation Models

| Model Type | F1-Score | Feedback Generation Time (s) | Bias Reduction (%) |
| --- | --- | --- | --- |
| Baseline CNN | 0.84 | 12.4 | 14.8 |
| EfficientNet-B4 | 0.87 | 10.7 | 21.6 |
| Vision Transformer (ViT-B16) | 0.90 | 8.9 | 26.3 |

Figure 3 Model Accuracy Benchmark for CNN, EfficientNet, and ViT

Table 2 provides a quantitative comparison of the three AI-assisted evaluation models, namely Baseline CNN, EfficientNet-B4, and Vision Transformer (ViT-B16), in terms of F1-score, feedback generation time, and bias reduction; accuracy is reported in the text below. Figure 3 presents the accuracy of the CNN, EfficientNet, and ViT evaluation models.

The Baseline CNN model has an accuracy of 85.6% and an F1-score of 0.84, which means that it performs reasonably well but has limited sensitivity to subtle elements of art. EfficientNet-B4 improved on these results, with an accuracy of 88.9% and an F1-score of 0.87, along with higher bias reduction (21.6%) and quicker feedback generation (10.7 seconds), owing to its efficient model scaling and stronger feature extraction.

Figure 4 Comparative Performance Curve for CNN, EfficientNet, and ViT Models

Vision Transformer (ViT-B16) was the best-performing model, with an accuracy of 91.5% and an F1-score of 0.90, reflecting its ability to capture global compositional relationships and stylistic coherence across artworks. Figure 4 presents the performance trends of the CNN, EfficientNet, and ViT evaluation models. ViT-B16 was also the most robust and interpretable, with the fastest feedback generation (8.9 seconds) and the highest bias reduction (26.3%).

Table 3 Evaluation Metrics Across Artistic Criteria

| Evaluation Dimension | Creativity Score (%) | Technique Score (%) | Aesthetic Harmony (%) | Originality Index (%) |
| --- | --- | --- | --- | --- |
| Baseline Assessment | 72 | 74 | 71 | 78.5 |
| AI-Based Evaluation | 85 | 87 | 83 | 89.7 |
| After Educator-AI Integration | 89 | 91 | 88 | 93.4 |

Table 3 compares evaluation metrics across the main artistic dimensions, namely creativity, technique, aesthetic harmony, and originality, for the traditional baseline assessment, the standalone AI-based assessment, and the combined educator-AI assessment. Figure 5 shows the gradual improvement in evaluation as AI is integrated.

In the baseline assessment, performance is moderate: the creativity and aesthetic harmony scores of 72% and 71%, respectively, reflect the subjectivity and inconsistency of manual evaluation. Scores on all dimensions increase considerably with the AI-based assessment, especially creativity (85%) and originality (89.7%), suggesting that the system can distinguish different styles, color relationships, and novel compositions.
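
The size of each step in Table 3 can be read off with a little arithmetic. The sketch below copies the reported scores and computes percentage-point gains between stages. Only the table values come from the paper; the helper name and dictionary keys are illustrative.

```python
# Scores (%) copied from Table 3.
TABLE_3 = {
    "Baseline Assessment": {
        "creativity": 72, "technique": 74, "aesthetic_harmony": 71, "originality": 78.5,
    },
    "AI-Based Evaluation": {
        "creativity": 85, "technique": 87, "aesthetic_harmony": 83, "originality": 89.7,
    },
    "After Educator-AI Integration": {
        "creativity": 89, "technique": 91, "aesthetic_harmony": 88, "originality": 93.4,
    },
}

def point_gain(before, after):
    """Percentage-point change per dimension, rounded to one decimal place."""
    return {dim: round(after[dim] - before[dim], 1) for dim in before}

ai_vs_baseline = point_gain(TABLE_3["Baseline Assessment"], TABLE_3["AI-Based Evaluation"])
integration_vs_ai = point_gain(
    TABLE_3["AI-Based Evaluation"], TABLE_3["After Educator-AI Integration"]
)
```

On these figures, moving from the baseline to the AI-based assessment adds between 11.2 and 13 points per dimension, and educator-AI integration adds a further 3.7 to 5 points.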

Figure 5 Progression of Artistic Assessment from Baseline to AI-Integrated Models

7. Conclusion

The paper concludes that AI-based evaluation systems can transform how creativity and craftsmanship are judged in visual art education. By integrating multimodal analytics, deep-learning architectures, and interpretability mechanisms, the proposed system achieves artistic evaluation that is both quantitatively reliable and pedagogically meaningful. The framework bridges the gap between subjective human evaluation and objective computational analysis by decomposing artworks into multi-criteria dimensions (creativity, aesthetics, originality, and technical proficiency) and mapping them to learning outcomes and academic rubrics. This alignment means that AI-based suggestions support genuine learning goals rather than dictating artistic decisions. The findings indicate that AI models can approximate expert judgments with high accuracy while remaining sensitive to stylistic differences and individuality. The added feedback visualization and explainable AI tools make evaluation clearer by enabling students to interpret the reasoning behind their scores. Notably, this openness promotes a cooperative dialogue between AI and educators, turning evaluation into a formative, interactive learning process.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Chen, Y., Wang, L., Liu, X., and Wang, H. (2023). Artificial Intelligence-Empowered Art Education: A Cycle-Consistency Network-Based Model for Creating the Fusion Works of Tibetan Painting Styles. Sustainability, 15, 6692. https://doi.org/10.3390/su15086692

Chiu, M., Hwang, G.-J., Hwang, G.-H., and Shyu, F. (2022). Artificial Intelligence-Supported Art Education: A Deep Learning-Based System for Promoting University Students’ Artwork Appreciation and Painting Outcomes. Interactive Learning Environments, 32, 824–842. https://doi.org/10.1080/10494820.2021.2012812

Deng, K., and Wang, G. (2023). Online Mode Development of Korean Art Learning in the Post-Epidemic Era Based on Artificial Intelligence and Deep Learning. Journal of Supercomputing, 80, 8505–8528. https://doi.org/10.1007/s11227-023-05089-1

Fan, X., and Li, J. (2023). Artificial Intelligence-Driven Interactive Learning Methods for Enhancing Art and Design Education in Higher Institutions. Applied Artificial Intelligence, 37, 2225907. https://doi.org/10.1080/08839514.2023.2225907

Fan, X., and Zhong, X. (2022). Artificial Intelligence-Based Creative Thinking Skill Analysis Model Using Human–Computer Interaction in Art Design Teaching. Computers and Electrical Engineering, 100, 107957. https://doi.org/10.1016/j.compeleceng.2022.107957

He, C., and Sun, B. (2021). Application of Artificial Intelligence Technology in Computer Aided Art Teaching. Computer-Aided Design and Applications, 18(Suppl. 4), 118–129. https://doi.org/10.14733/cadaps.2021.S4.118-129

Huang, X., Chen, H., and Chen, L. (2022). An AI Edge Computing-Based Intelligent Hand Painting Teaching System. In Proceedings of the 2022 IEEE 11th Global Conference on Consumer Electronics (GCCE) (pp. 1–4). IEEE. https://doi.org/10.1109/GCCE56552.2022.9971124

Kang, J., Kang, C., Yoon, J., Ji, H., Li, T., Moon, H., Ko, M., and Han, J. (2023). Dancing on the Inside: A Qualitative Study on Online Dance Learning With Teacher–AI Cooperation. Education and Information Technologies, 28, 12111–12141. https://doi.org/10.1007/s10639-023-11643-9

Lee, S., Yun, J., Lee, S., Song, Y., and Song, H. (2022). Will AI Image Synthesis Technology Help Constructivist Education at the Online Art Museum? In Proceedings of the CHI Conference on Human Factors in Computing Systems, 1–14. ACM. https://doi.org/10.1145/3491102.3517667

Leonard, M. N. (2020). Entanglement Art Education: Factoring Artificial Intelligence and Nonhumans Into Future Art Curricula. Art Education, 73(3), 22–28. https://doi.org/10.1080/00043125.2020.1755540

Mokmin, N. A. M., and Ridzuan, N. N. I. B. (2022). Immersive Technologies in Physical Education in Malaysia for Students with Learning Disabilities. IAFOR Journal of Education, 10(2), 91–110. https://doi.org/10.22492/ije.10.2.05

Rong, Q., Lian, Q., and Tang, T. (2022). Research on the Influence of AI and VR Technology for Students’ Concentration and Creativity. Frontiers in Psychology, 13, 767689. https://doi.org/10.3389/fpsyg.2022.767689

Sun, Y. (2021). Application of Artificial Intelligence in the Cultivation of Art Design Professionals. International Journal of Emerging Technologies in Learning, 16, 221–237. https://doi.org/10.3991/ijet.v16i07.20813

Tang, T., Li, P., and Tang, Q. (2022). New Strategies and Practices of Design Education Under the Background of Artificial Intelligence Technology: Online Animation Design Studio. Frontiers in Psychology, 13, 767295. https://doi.org/10.3389/fpsyg.2022.767295

Vartiainen, H., and Tedre, M. (2023). Using Artificial Intelligence in Craft Education: Crafting with Text-To-Image Generative Models. Digital Creativity, 34, 1–21. https://doi.org/10.1080/14626268.2023.2196254

Wang, X. S. (2020). Smart education—The Necessity and Prospect of Big Data Mining and Artificial Intelligence Technology in Art Education. Journal of Physics: Conference Series, 1648, 042060. https://doi.org/10.1088/1742-6596/1648/4/042060

Xu, Y., and Nazir, S. (2022). Ranking the Art Design and Applications of Artificial Intelligence and Machine Learning. Journal of Software: Evolution and Process, 36, e2486. https://doi.org/10.1002/smr.2486

Zhao, L. (2022). International Art Design Talents-Oriented New Training Mode Using Human–Computer Interaction Based on Artificial Intelligence. International Journal of Humanoid Robotics, 20, 2250012. https://doi.org/10.1142/S0219843622500123

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.