ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


Affective Computing in Modern Art Education

Manikandan Jagarajan 1, Ananta Narayana 2, Saksham Sood 3, Dr. Abhishek Upadhyay 4, Dinesh Shravan Datar 5, Kalpana Munjal 6

1 Assistant Professor, Department of Computer Science and Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission’s Research Foundation (DU), Tamil Nadu, India

2 Assistant Professor, School of Business Management, Noida International University, Greater Noida, Uttar Pradesh, India

3 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

4 Assistant Professor, Department of Management, ARKA JAIN University, Jamshedpur, Jharkhand, India

5 Department of Artificial Intelligence and Data Science, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

6 Associate Professor, Department of Design, Vivekananda Global University, Jaipur, India

ABSTRACT

Affective computing, and more specifically the recognition and interpretation of human emotions, has emerged as an influential model for redesigning art education. This paper examines how emotion-sensitive technologies can be applied in contemporary art schools to improve creativity, participation, and self-directed learning. Conventional art pedagogy tends to focus on technical achievement and abstract exploration but does not acknowledge the emotional relations that underlie art. By introducing affective computing, which recognizes emotions from facial expressions, vocal tone, and physiological signals, educators can better understand learners' emotional states and incorporate this knowledge into their instructional approach. The study gathers multimodal data in academic arts settings, including emotion, performance, and interaction records, to develop affect-adaptive systems. Machine-learning emotion classification methods, including CNNs, LSTMs, and multimodal fusion networks, are applied, and system performance is measured with metrics such as accuracy, F1-score, and an emotional congruence index. The proposed affect-aware art education platform provides real-time emotional feedback, adaptive learning, and digital art tools to facilitate expressive development. Experimental applications show that emotion-augmented assignments and feedback loops can enhance learners' motivation and the quality of their work.


Received: 06 April 2025
Accepted: 11 August 2025
Published: 25 December 2025

Corresponding Author: Manikandan Jagarajan, manikandan.avcs0122@avit.ac.in

DOI: 10.29121/shodhkosh.v6.i4s.2025.6850

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. Under the CC-BY license, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: Affective Computing, Emotion Recognition, Art Education, Creative Learning, Emotional Intelligence, Adaptive Pedagogy

1. INTRODUCTION

Art education has generally been considered a sphere where cognitive ability, emotional intelligence, and creative inquiry overlap. In recent years, the rapid development of artificial intelligence (AI) and machine learning (ML) has made possible a new interdisciplinary paradigm known as affective computing: a discipline concerned with identifying, perceiving, and responding to human emotions through computational systems. In contemporary art education, affective computing offers a new way of understanding the emotional aspects of artistic work and of improving the learning process with empathetic, adaptive technologies. This integration turns the art classroom from a static setting for skills acquisition into a dynamic ecosystem that responds to the learner's affective state and enriches creativity, engagement, and self-expression Khare et al. (2024). Artistic learning is emotional in nature: it involves curiosity, frustration, joy, and introspection as students move back and forth between ideation and technique. Nevertheless, classic pedagogical models tend to lack a systematic approach to these emotional processes. Teachers rely on subjective observation to judge learners' interest, while subtle affective cues such as micro-expressions, tone of voice, or physiological signals largely go unmeasured Mohana and Subashini (2024). Affective computing fills this gap by using state-of-the-art sensors, computer vision, and multimodal emotion recognition models to identify emotional states in real time.

These technologies can read facial expressions using convolutional neural networks (CNNs), analyze speech patterns using recurrent networks or transformers, and even evaluate physiological reactions such as heart rate variability or skin conductance to draw deeper inferences about emotional state. Applied to art education, they enable teachers to deliver emotion-responsive learning. For example, when a student displays frustration during a digital painting assignment, the system can provide supportive feedback, alternative creative suggestions, or soothing visual effects Canal et al. (2022). Conversely, when a student shows excitement during experimentation, that engagement can be further enhanced by proposing advanced tools or cooperative activities. In this way, affective computing establishes a feedback loop in which the learner's emotional state directly shapes the pedagogical approach, leading to more individualized and empathetic learning outcomes. Integrating affective computing also reshapes the relationship between creativity and cognition. Conventional approaches to creativity focus on divergent thinking and problem solving, but emotional involvement is also key to long-term artistic growth. By capturing and analyzing affective patterns, educators and systems can detect the emotional stimuli that boost or inhibit creative flow El Bahri et al. (2023). This knowledge can inform emotion-enhanced tasks designed to induce a particular emotional context that stimulates artistic imagination.

2. Related Work

Scholarly interest in applying Affective Computing (AC) to education has been growing, but the approach has seen limited use in art education. A recent study, Affective Computing for Learning in Education: A Systematic Review and Bibliometric Analysis (2025), surveyed a decade of affective-computing research in educational contexts. Its authors show that most research has focused on building systems that recognize or express emotions, mostly in traditional classrooms and STEM courses. This gap points to a pressing need to bring AC into arts and creative-learning settings, which is one of the motivations for the present proposal Elsheikh et al. (2024). Other general-education research on AC has used facial-expression recognition, speech analysis, and physiological indicators (e.g., EEG, ECG, and galvanic skin response) to determine learners' affective states. For instance, Emotion-Aware Education through Affective Computing (2025) introduces EEG-based affect recognition into the classroom, enabling real-time classification of students' emotional states along the valence and arousal dimensions, which in turn supports adaptive teaching interventions based on emotional feedback Agung et al. (2024). Within arts and creative education, a small body of research has begun to investigate the potential of AC. Leveraging Emotion Recognition to Enhancing Arts Teaching Effectiveness (2023) demonstrates the feasibility of using AI-based behavior analysis and emotion recognition during art courses to inform pedagogical choices and adapt teaching strategies to students' affective involvement.

Meanwhile, classroom emotion recognition through visual cues (e.g., facial-expression analysis) has not only shown potential to improve classroom dynamics in general but has also made it possible to provide timely feedback during learning. On the technical side, the general AC literature is a rich resource. A Systematic Review on Affective Computing: Emotion Models, Databases, and Recent Advances reviews the most important emotion models (dimensional and categorical), large public datasets (visual, audio, and physiological), and state-of-the-art unimodal and multimodal recognition architectures Singh and Goel (2022). The review notes that although unimodal approaches (e.g., using only facial or only audio input) are currently commonplace, multimodal fusion of visual, audio, and physiological input usually yields more robust emotion recognition, particularly when learners conceal or manipulate their own affective cues. Table 1 summarizes affective-computing studies together with their modalities, algorithms, application areas, and limitations. Even so, despite the development of technical AC frameworks and their gradual adaptation to general education, there is a distinct lack of systematic work addressing art education.

Table 1

Table 1 Related Work on Affective Computing and Emotion-Aware Learning Systems in Art and Education

| Study Title | Emotion Modalities Used | Algorithm | Application Area | Limitations |
|---|---|---|---|---|
| Foundations of Affective Computing | Facial, Vocal | SVM, HMM | Theoretical Framework | Focused on conceptual models only |
| Emotion Recognition in Learning Environments | Facial | CNN | Classroom Monitoring | Limited emotional diversity |
| EEG-Based Emotion Analysis for Creativity | Physiological (EEG) | LSTM | Creative Process Monitoring | Small sample size |
| Adaptive Tutoring with Affective Feedback Schuller and Schuller (2021) | Facial, Vocal | CNN + LSTM | Personalized Learning | No creative context applied |
| Visual Emotion Recognition for Art Education | Facial | CNN-ResNet | Art Class Assessment | Lacked multimodal integration |
| Multimodal Emotion Recognition in Creative Learning | Facial, Vocal, Physiological | Multimodal Fusion Network | Digital Media Learning | Required high computational resources |
| Emotion-Aware Digital Pedagogy Zhao and Shu (2023) | Facial, Textual | Transformer-based BERT | Virtual Learning | Emotion recognition limited to text and face |
| Affective Interaction in Virtual Studios | Facial, Vocal | Hybrid CNN–LSTM | Virtual Collaboration | Real-time latency issues |
| Emotion Sensing for Creative Skill Development | Vocal, Physiological | Bi-LSTM | Skill-Based Learning | Focused on individual learning only |
| Integrating Affective Computing in Art Curriculum Trinh et al. (2022) | Facial, Gesture | CNN + GRU | Art Curriculum Design | Limited dataset generalization |
| Emotion-Aware AI Tools in Digital Art Creation Wu et al. (2022) | Facial, Voice | Transformer | Generative Art Systems | Ethical considerations not addressed |
| Affective Analytics for Art Learners | Facial, EEG | Multimodal CNN–RNN | Digital Art Learning | Lacked long-term affective tracking |

3. Theoretical Framework

3.1. Emotion recognition models (facial, vocal, physiological signals)

Emotion recognition is the foundation of affective computing and is central to interpreting the learner's affective state in art education. Most emotion recognition models draw on three modalities, namely facial expressions, vocal tone, and physiological signals, to support robust emotion inference. Facial emotion recognition (FER) uses convolutional neural networks (CNNs) and vision transformers to examine micro-expressions, eye movements, and facial muscle activity, identifying emotions such as joy, confusion, or frustration in real time Elansary et al. (2024). Vocal emotion recognition uses recurrent neural networks (RNNs), long short-term memory networks (LSTMs), or transformer-based models to analyze prosodic attributes such as pitch, tone, and rhythm, detecting subtle variations associated with emotional change. Physiological models rely on bio-sensing cues such as heart rate variability (HRV), galvanic skin response (GSR), and EEG to gauge underlying emotional arousal and cognitive load. Contemporary multimodal emotion recognition systems combine these signals to achieve greater contextual reliability and to reduce the bias of single-channel systems Saganowski et al. (2022). In an art classroom, such models can detect frustration during a creative block, excitement during active creative effort, or relaxation while a student reflects on their artwork. By interpreting these affective signals, both educators and intelligent systems can tailor instruction, encourage emotional regulation, and design compassionate learning environments in which emotion and creativity co-evolve dynamically.
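To make the physiological channel more concrete, the sketch below computes two standard time-domain heart rate variability features, RMSSD (root mean square of successive differences) and SDNN (standard deviation of RR intervals), plus mean heart rate, from a short series of RR intervals in milliseconds. It is an illustration under stated assumptions, not the paper's pipeline: the function name `hrv_features` is hypothetical, the paper does not specify its HRV feature set, and the input is assumed to be already cleaned of artifacts and ectopic beats, which raw wearable data usually is not.

```python
import math
from statistics import mean, stdev


def hrv_features(rr_ms: list[float]) -> dict[str, float]:
    """Time-domain HRV features from RR intervals given in milliseconds.

    Hypothetical helper; assumes the intervals are already artifact-free.
    """
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Successive differences between adjacent beats
    diffs = [later - earlier for earlier, later in zip(rr_ms, rr_ms[1:])]
    # RMSSD: short-term, beat-to-beat variability
    rmssd = math.sqrt(mean([d * d for d in diffs]))
    # SDNN: overall variability across the window (sample standard deviation)
    sdnn = stdev(rr_ms)
    # Mean heart rate in beats per minute (60,000 ms per minute)
    mean_hr = 60_000.0 / mean(rr_ms)
    return {"rmssd_ms": rmssd, "sdnn_ms": sdnn, "mean_hr_bpm": mean_hr}


window = [800.0, 810.0, 790.0, 800.0]
print(hrv_features(window))
```

Lower RMSSD over a window is often associated with higher arousal or stress, but the relationship varies between individuals, so such features are usually interpreted against a per-learner baseline rather than a fixed cutoff.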

3.2. Cognitive–Affective Interaction in Artistic Creativity

Creating art inherently involves a dynamic interaction between how people process information and how they experience emotion. Cognitive-affective theories hold that emotions drive attention, memory, and divergent thinking, which are major elements of creativity. In art education, this interaction takes the form of emotional engagement that informs aesthetic judgment and conceptual depth Lin and Li (2023). Positive affect (e.g., curiosity or joy) promotes exploratory thought and receptiveness to new ideas, whereas regulated negative affect (e.g., frustration or melancholy) can stimulate expressive richness and sense-making. Neuroscientific studies indicate that prefrontal and limbic regions are both active during creative activity, suggesting that cognition and emotion are integrated at the neural level. The dual-process model of creativity treats feelings as both a source and a product of creative cognition: feelings give rise to ideas, and the creative process in turn shapes how people feel. Affective computing offers new instruments for examining this reciprocity through multimodal emotion tracking during art-making.

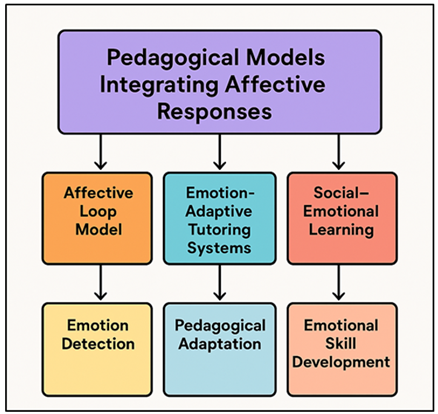

3.3. Pedagogical Models Integrating Affective Responses

Incorporating affective responses into pedagogy can turn traditional art education into an emotionally intelligent system. Affective-pedagogical models emphasize adaptive learning, in which instructional strategies are adjusted to students' real-time affective states. The Affective Loop Model and Emotion-Adaptive Tutoring Systems (EATS) frameworks make this two-way interaction possible: the system perceives emotions, interprets them in the context of learning, and adapts its instruction accordingly. Figure 1 presents pedagogical models that incorporate learners' affective responses in art education. In art education specifically, this could mean adjusting creative prompts, changing the tone of feedback, or providing motivational content aligned with the learner's affective profile.

Figure 1

Figure 1 Pedagogical Models Integrating Affective Responses in Art Education

For example, a student who shows signs of anxiety during composition can be offered encouraging words or a simplified task to restore confidence, whereas an excited student can be given a more challenging creative problem. Pedagogical models that incorporate affective data also support inclusivity, because they account for differences in emotional expression across cultures and personality types.
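The perceive, interpret, adapt cycle and the two examples above can be sketched as a small rule-based policy. Everything here is hypothetical: the `AffectState` fields, the label names, the numeric thresholds, and the action names are illustrative assumptions, not the specification of the Affective Loop Model or of EATS. In practice the thresholds would need tuning per learner, in line with the point about cultural and personality differences.

```python
from dataclasses import dataclass


@dataclass
class AffectState:
    """Output of the 'perceive' step (hypothetical schema)."""
    label: str      # discrete label from a recognizer, e.g. "anxiety", "excitement"
    valence: float  # -1.0 (negative) to +1.0 (positive)
    arousal: float  # 0.0 (calm) to 1.0 (highly activated)


def choose_intervention(state: AffectState) -> str:
    """'Interpret' and 'adapt': map an affective state to a pedagogical action.

    Thresholds are illustrative placeholders, not values from the paper.
    """
    negative_high = state.valence < -0.3 and state.arousal > 0.6
    positive_high = state.valence > 0.3 and state.arousal > 0.6
    if state.label in {"anxiety", "frustration"} or negative_high:
        return "encourage_and_simplify"  # positive words, simpler sub-task
    if state.label == "excitement" or positive_high:
        return "offer_challenge"         # harder creative problem
    if state.arousal < 0.2:
        return "change_prompt"           # low activation: try a new creative prompt
    return "continue"


def affective_loop(observations: list[AffectState]) -> list[str]:
    """Run the loop over a sequence of perceived states, one action per state."""
    return [choose_intervention(s) for s in observations]


print(affective_loop([
    AffectState("anxiety", -0.5, 0.8),
    AffectState("excitement", 0.7, 0.9),
    AffectState("neutral", 0.0, 0.1),
]))
```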

4. Methodology

4.1. Data collection from art classrooms (emotion, performance, interaction logs)

Data collection for affective computing in art education combines multimodal inputs gathered during classroom observation, so that student behavior is recorded in terms of both emotion and creativity. Emotional information is captured through facial tracking, voice analysis, and physiological sensors measuring heart rate and skin conductance. Cameras and microphones installed in art studios record real-time responses while learners work on digital painting, sculpting, or multimedia composition assignments. At the same time, performance data such as the time spent completing a task, the frequency of tool use, the variability of brushstrokes, and color choices are logged by the online art platforms. Interaction data, including collaborative dialogue, teacher-student exchanges, and peer-feedback patterns, are also gathered to evaluate emotional and social engagement. To protect data quality and privacy, informed-consent procedures and anonymization are strictly followed. The resulting dataset covers emotional, behavioral, and contextual dimensions, giving a comprehensive picture of learning experiences. This rich multimodal corpus is the basis for training and testing affect recognition models and adaptive teaching systems, so that emotional signals can be interpreted appropriately in artistic and pedagogical contexts.
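One way to organize such a corpus is as time-stamped records tagged by modality, with student identifiers replaced before storage. The sketch below is a minimal illustration under assumptions: the `ClassroomRecord` schema, the modality names, and the feature keys are hypothetical, and the keyed hash (HMAC-SHA256 from Python's standard library) is strictly pseudonymization, which is weaker than full anonymization because anyone holding the key can re-link known IDs.

```python
import hashlib
import hmac
from dataclasses import dataclass, field

MODALITIES = {"facial", "vocal", "physiological", "performance", "interaction"}


@dataclass(frozen=True)
class ClassroomRecord:
    """One time-stamped observation in the multimodal corpus (hypothetical schema)."""
    learner_pid: str   # pseudonymous ID; the raw student ID is never stored
    timestamp_s: float
    modality: str
    features: dict = field(default_factory=dict)


def pseudonymize(raw_id: str, secret_key: bytes) -> str:
    """Keyed SHA-256 hash: stable across sessions, unlinkable to the student without the key."""
    digest = hmac.new(secret_key, raw_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def make_record(raw_id: str, timestamp_s: float, modality: str,
                features: dict, secret_key: bytes) -> ClassroomRecord:
    if modality not in MODALITIES:
        raise ValueError(f"unknown modality: {modality}")
    return ClassroomRecord(pseudonymize(raw_id, secret_key), timestamp_s,
                           modality, dict(features))


key = b"replace-with-a-secret-kept-outside-the-dataset"
rec = make_record("student-042", 12.5, "performance",
                  {"task_time_s": 340, "tool_switches": 7, "palette_size": 5}, key)
print(rec)
```

Keeping the key outside the dataset means records from different sessions can still be joined per learner for long-term modeling, while the stored corpus itself contains no raw identifiers.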

4.2. Emotion Recognition and Affect Classification Techniques

In this model, emotion recognition uses multimodal deep learning architectures that combine facial, vocal, and physiological cues. CNNs applied to facial images identify expression-based emotions, whereas RNNs or LSTMs applied to sequential audio features (pitch, tone, and rhythm) recognize affective nuances in the voice. Physiological data collected through EEG or HRV sensors pass through a signal-processing pipeline, after which machine learning classifiers such as Support Vector Machines (SVMs) or Random Forests classify them in terms of arousal and valence. For robustness, feature-level fusion projects the multimodal embeddings into a shared latent space, and attention mechanisms give more weight to the most informative signals. Classification is performed both over discrete categories (happiness, frustration, boredom, engagement) and on continuous emotional scales. Models are validated through cross-validation and real classroom trials to check that they generalize across different student groups and emotion categories.
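The feature-level fusion step can be illustrated with scaled dot-product attention over per-modality embeddings. This is a minimal sketch, not the paper's architecture: it assumes each modality has already been projected into the same d-dimensional latent space, treats `query` as a stand-in for a learned attention vector, and uses made-up embedding and class vectors purely to show the mechanics. Dot-product attention is one common choice; the paper does not name the attention variant it uses.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention_fuse(embeddings: dict[str, list[float]], query: list[float]):
    """Feature-level fusion: scaled dot-product attention over modality embeddings.

    Each embedding is assumed to share the query's dimension d; `query` stands
    in for a learned attention vector (hypothetical here).
    """
    d = len(query)
    if any(len(v) != d for v in embeddings.values()):
        raise ValueError("all embeddings must share the query's dimension")
    names = list(embeddings)
    weights = softmax([dot(query, embeddings[n]) / math.sqrt(d) for n in names])
    # Weighted sum of embeddings, one latent dimension at a time
    fused = [sum(w * embeddings[n][i] for w, n in zip(weights, names)) for i in range(d)]
    return fused, dict(zip(names, weights))


def classify(fused: list[float], class_vectors: dict[str, list[float]]) -> str:
    """Linear scoring head over discrete emotion classes; returns the top label."""
    return max(class_vectors, key=lambda c: dot(fused, class_vectors[c]))


emb = {"facial": [0.9, 0.1], "vocal": [0.2, 0.6], "physiological": [0.1, 0.1]}
fused, weights = attention_fuse(emb, query=[1.0, 0.0])
label = classify(fused, {"engagement": [1.0, 0.0], "boredom": [0.0, 1.0]})
print(weights, label)
```

Because the attention weights are recomputed for every input, a modality whose embedding aligns poorly with the query (for example, a noisy audio segment) is automatically down-weighted for that sample rather than discarded globally.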

4.3. Evaluation Metrics for Affect-Responsive Learning Systems

Affect-responsive learning systems are assessed with quantitative measures of both recognition accuracy and pedagogical impact. Emotion recognition components are evaluated with Accuracy, Precision, Recall, F1-score, and Area Under the ROC Curve (AUC) to determine classification reliability. Continuous affect estimates are evaluated with Mean Absolute Error (MAE) and correlation coefficients between predicted and observed affective states. Beyond computational validation, educational effectiveness is measured through parameters such as the Engagement Index, Creative Output Quality, Learning Retention Rate, and the Affective Alignment Score, which measures the degree to which emotional responses are congruent with pedagogical objectives. Qualitative feedback from teachers and students provides further information on usability, perceived empathy, and emotional resonance. Longitudinal analyses compare creative performance and emotion regulation across multiple sessions to show the role of affect-aware systems in promoting artistic development. Taken together, these evaluation frameworks aim to ensure that affective computing systems are not only technically sound but also pedagogically meaningful, supporting emotional well-being, motivation, and creativity in the dynamic environment of contemporary art education.
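The recognition-side metrics named above can be computed with a few short functions. This sketch covers accuracy, per-class and macro-averaged precision, recall, and F1, plus MAE and Pearson correlation for continuous affect; macro averaging is our assumption, since the paper does not state how per-class scores are aggregated. AUC is omitted because it needs classifier scores rather than hard labels, and the pedagogical indices (Engagement Index, Affective Alignment Score) are not shown because their formulas are not defined in the text.

```python
import math


def per_class_prf(y_true: list[str], y_pred: list[str], label: str) -> tuple[float, float, float]:
    """Precision, recall, and F1 for one class treated as 'positive'."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def classification_report(y_true: list[str], y_pred: list[str]) -> dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 over all labels seen."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = [per_class_prf(y_true, y_pred, c) for c in labels]
    n = len(labels)
    return {
        "accuracy": sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true),
        "macro_precision": sum(s[0] for s in scores) / n,
        "macro_recall": sum(s[1] for s in scores) / n,
        "macro_f1": sum(s[2] for s in scores) / n,
    }


def mae(pred: list[float], obs: list[float]) -> float:
    """Mean absolute error for continuous affect (e.g. valence) estimates."""
    return sum(abs(p - o) for p, o in zip(pred, obs)) / len(obs)


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation between predicted and observed affect traces."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation undefined for a constant series")
    return cov / (sx * sy)


truth = ["frustration", "engagement", "engagement", "boredom"]
preds = ["frustration", "engagement", "boredom", "boredom"]
print(classification_report(truth, preds))
```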

5. System Design for Affective Art Education

5.1. Architecture of an affect-aware digital art learning platform

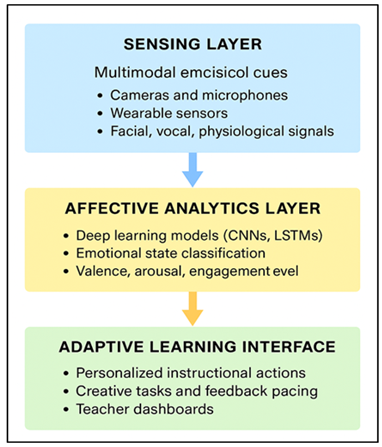

The proposed affect-aware digital art learning platform is designed as a modular, multilayered system that integrates emotion recognition, pedagogical adaptation, and creative support. The architecture consists of three major layers: the sensing layer, the affective analytics layer, and the adaptive learning interface. The sensing layer (cameras, microphones, and wearable sensors) captures multimodal emotional responses by detecting facial, vocal, and physiological signals. Figure 2 shows the architecture, which supports affect-responsive digital art learning through adaptive emotional feedback.

Figure 2

Figure 2 Architecture of an Affect-Aware Digital Art Learning Platform

The affective analytics layer translates these signals into emotional metrics such as valence, arousal, and engagement level. The adaptive learning interface then maps these emotional states to individualized instructional responses, such as adjusting the visual difficulty, pace, or complexity of art activities. Cloud-based data management provides scalability and supports long-term emotion modeling for every learner. Teacher dashboards help instructors track emotional trends and creative development on the platform. The architecture thus combines affective computing with interactive art pedagogy: instruction responds empathetically in real time, promoting creativity, motivation, and self-awareness in the digital art classroom.
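The data flow through the three layers can be sketched as a chain of functions. This is a minimal illustration under heavy assumptions: the class and function names are hypothetical, the sensing layer is assumed to deliver per-frame outputs of already-trained facial and vocal models plus raw skin conductance, the formulas for arousal and engagement are placeholder heuristics rather than the platform's analytics, and the returned log merely stands in for the cloud store that would feed the teacher dashboard.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    """Sensing layer output for one time step (hypothetical, pre-extracted signals)."""
    facial_valence: float    # from a facial model, -1..1
    vocal_arousal: float     # from a vocal model, 0..1
    gsr_microsiemens: float  # raw skin conductance from a wearable


@dataclass
class AffectMetrics:
    """Affective analytics layer output."""
    valence: float
    arousal: float
    engagement: float


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def analytics_layer(frame: SensorFrame, gsr_baseline: float) -> AffectMetrics:
    # Placeholder heuristic: arousal blends vocal arousal with the relative GSR rise
    gsr_rise = clamp((frame.gsr_microsiemens - gsr_baseline) / gsr_baseline, 0.0, 1.0)
    arousal = 0.5 * frame.vocal_arousal + 0.5 * gsr_rise
    valence = clamp(frame.facial_valence, -1.0, 1.0)
    # Placeholder: engagement is high when activation is high and affect is positive
    engagement = arousal * (1.0 + valence) / 2.0
    return AffectMetrics(valence, arousal, engagement)


def adaptive_interface(m: AffectMetrics, difficulty: int) -> tuple[int, str]:
    """Adjust a 1..5 activity difficulty level from the current metrics."""
    if m.valence < -0.3 and m.arousal > 0.6:
        return max(1, difficulty - 1), "slow_pace"
    if m.engagement > 0.6:
        return min(5, difficulty + 1), "raise_complexity"
    return difficulty, "keep"


def run_session(frames: list[SensorFrame], gsr_baseline: float, difficulty: int = 3):
    """Chain the three layers; the returned log stands in for the cloud store
    that would feed the teacher dashboard."""
    log = []
    for frame in frames:
        metrics = analytics_layer(frame, gsr_baseline)
        difficulty, action = adaptive_interface(metrics, difficulty)
        log.append((metrics, difficulty, action))
    return log


for metrics, level, action in run_session(
        [SensorFrame(0.8, 0.9, 6.0), SensorFrame(-0.6, 0.9, 6.0)], gsr_baseline=4.0):
    print(f"valence={metrics.valence:+.2f} arousal={metrics.arousal:.2f} -> {action} (level {level})")
```

Keeping each layer behind its own function boundary mirrors the modular design described above: a better facial model or a learned analytics layer could replace the placeholder without touching the adaptive interface.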

5.2. Emotion-Adaptive Feedback and Personalized Instruction

The system's core pedagogical intelligence lies in its emotion-adaptive feedback mechanisms, which provide context-sensitive advice based on the detected emotional signals. When a learner shows frustration, for instance, the system can respond with supportive feedback or alternative creative suggestions; conversely, when the learner exhibits enthusiasm or confidence, it dynamically raises task complexity or offers more advanced creative tools to maintain motivation. A reinforcement learning engine forms an adaptive loop that continuously refines the feedback strategy based on learners' previous affective responses and learning outcomes. Feedback is delivered in several forms (text, audio, visual overlays, or animated avatars) to support multimodal interaction. The system also records emotional trajectories over time to personalize long-term learning, taking into account each learner's preferred art media, artistic rhythm, and the emotional stimuli that best support their work.
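The paper does not specify which reinforcement learning algorithm drives the feedback loop. As a deliberately simple stand-in, the sketch below uses an epsilon-greedy multi-armed bandit that learns which feedback mode (text, audio, visual overlay, or avatar) yields the highest average reward. The class name, the mode names, and the reward definition (for example, an observed rise in engagement after the feedback) are hypothetical. Keeping one bandit per detected affect label gives a crude form of state dependence; a contextual bandit or full RL agent would condition on richer state.

```python
import random
from typing import Optional


class FeedbackBandit:
    """Epsilon-greedy bandit over feedback modes: a deliberately simple
    stand-in for the reinforcement learning engine described in the text."""

    def __init__(self, strategies: list[str], epsilon: float = 0.1,
                 seed: Optional[int] = None):
        self.strategies = list(strategies)
        self.epsilon = epsilon
        self.counts = {s: 0 for s in self.strategies}
        self.values = {s: 0.0 for s in self.strategies}  # running mean reward
        self.rng = random.Random(seed)

    def select(self) -> str:
        # Explore with probability epsilon, otherwise exploit the best estimate
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)
        return max(self.strategies, key=lambda s: self.values[s])

    def update(self, strategy: str, reward: float) -> None:
        # Incremental mean: V <- V + (r - V) / n
        self.counts[strategy] += 1
        self.values[strategy] += (reward - self.values[strategy]) / self.counts[strategy]


# One bandit per detected affect label, so the best mode is learned per state
modes = ["text", "audio", "visual_overlay", "avatar"]
policies = {label: FeedbackBandit(modes, epsilon=0.1, seed=0)
            for label in ("frustration", "confidence")}
choice = policies["frustration"].select()
policies["frustration"].update(choice, reward=0.4)  # hypothetical engagement gain
print(choice, policies["frustration"].values)
```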

6. Applications in Modern Art Education

6.1. Emotion-augmented digital art assignments

Emotion-augmented digital art assignments apply affective computing to embed emotional awareness in the creative learning process. In these tasks, learners work with digital art programs while multimodal sensing continuously monitors their emotional state. The system reads emotional patterns such as excitement, frustration, or curiosity and adjusts the artistic environment accordingly. For example, when a learner reaches creative stagnation, the system may propose inspirational sources, color schemes, or AI-generated mood boards to rekindle imagination. Similarly, when the creative process becomes emotionally charged, it can suggest mindfulness pauses or moments of reflection to restore emotional balance. Assignments can also be framed as emotional exploration tasks: learners might be asked to visually represent melancholy or serenity, with the system providing affective feedback on their progress. By linking emotion to aesthetic decision-making, this combination of emotional sensing and art instruction deepens artistic reflection.
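The two nudges described above can be sketched by combining an interaction-log signal with an affective one. The paper does not say how creative stagnation is detected; here we assume, purely for illustration, that stagnation means too few brushstrokes in a recent time window, and that "emotionally charged" means arousal above a threshold. Function names, window length, and thresholds are hypothetical.

```python
from typing import Optional


def is_stagnant(stroke_times_s: list[float], now_s: float,
                window_s: float = 120.0, min_strokes: int = 5) -> bool:
    """True if fewer than `min_strokes` brushstrokes fell in the last `window_s` seconds."""
    recent = [t for t in stroke_times_s if now_s - window_s <= t <= now_s]
    return len(recent) < min_strokes


def assignment_nudge(stroke_times_s: list[float], now_s: float, arousal: float,
                     high_arousal: float = 0.8) -> Optional[str]:
    """Pick a nudge for an emotion-augmented assignment (hypothetical thresholds)."""
    if arousal >= high_arousal:
        return "suggest_mindfulness_pause"  # emotionally charged: restore balance
    if is_stagnant(stroke_times_s, now_s):
        return "offer_inspiration_board"    # stalled: color schemes, mood boards
    return None                             # working steadily: stay out of the way


strokes = [0.0, 10.0, 20.0, 30.0, 40.0, 50.0]
print(assignment_nudge(strokes, now_s=300.0, arousal=0.3))
```

Checking arousal first is a design choice: an agitated learner is offered a pause before more stimulating material, even if their stroke rate has also dropped.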

6.2. Support for Diverse Learner Emotional Profiles

Affective computing systems can play a key role in supporting the diverse emotional profiles of art learners. Each student engages with creativity differently according to their affective inclinations: some are excitable, while others are introspective or calmly focused. In the proposed framework, emotional archetypes are identified by tracking emotions and modeling behavior over extended periods. From emotional trends, engagement time, and responses to feedback, the platform builds an affective learner profile that guides adaptive instruction. For example, students with anxiety-prone patterns can be given gradual task pacing and positive reinforcement, whereas highly motivated students can be offered more challenging creative work. This kind of emotional personalization promotes equity and inclusiveness in art education by accommodating neurodiverse and culturally diverse forms of emotional expression.
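A long-term affective learner profile can be approximated with exponential moving averages of per-session affect, which change slowly and so reflect tendencies rather than momentary states. This is a hypothetical sketch: the smoothing factor, the archetype thresholds, and the strategy table are our assumptions. Only the "anxiety-prone" and "highly motivated" strategies come from the text above; the other two entries are illustrative placeholders.

```python
from dataclasses import dataclass


@dataclass
class AffectiveProfile:
    """Long-term learner profile as exponential moving averages (hypothetical design)."""
    alpha: float = 0.1         # smoothing: small alpha means a slow, long-term profile
    valence: float = 0.0
    arousal: float = 0.0
    anxiety_rate: float = 0.0  # smoothed fraction of sessions flagged as anxious
    sessions: int = 0

    def update(self, valence: float, arousal: float, anxious: bool) -> None:
        a = 1.0 if self.sessions == 0 else self.alpha  # first session initializes
        self.valence += a * (valence - self.valence)
        self.arousal += a * (arousal - self.arousal)
        self.anxiety_rate += a * (float(anxious) - self.anxiety_rate)
        self.sessions += 1

    def archetype(self) -> str:
        if self.anxiety_rate > 0.3:
            return "anxiety-prone"
        if self.valence > 0.2 and self.arousal > 0.6:
            return "highly motivated"
        if self.arousal < 0.4:
            return "introspective"
        return "balanced"


# Hypothetical archetype-to-strategy table (first two entries follow the text)
STRATEGY = {
    "anxiety-prone": "gradual pacing with positive reinforcement",
    "highly motivated": "more challenging creative work",
    "introspective": "reflective, self-paced prompts",
    "balanced": "standard progression",
}

profile = AffectiveProfile()
for v, a, anx in [(-0.4, 0.7, True), (-0.2, 0.6, True), (0.1, 0.5, False)]:
    profile.update(v, a, anx)
print(profile.archetype(), "->", STRATEGY[profile.archetype()])
```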

6.3. Enhancing Collaborative and Studio-Based Learning

In studio-based group work, affective computing can strengthen the social and emotional aspects of collaboration. The system identifies group emotional patterns such as enthusiasm, tension, or disengagement, allowing instructors to intervene when the group mood calls for it. Emotion analytics can also help create a level playing field, prompting quieter students to contribute when discussion stalls and encouraging dominant voices to make room for others so that the conversation keeps flowing. Shared dashboards display group affect trends, encouraging reflection on and discussion of the feelings experienced during collaboration. Geographically separated learners can also co-create in emotion-aware virtual studios built on digital art platforms, which provide emotionally synchronized shared spaces. Combined with natural language processing (NLP), chat-based collaboration tools can recognize supportive, critical, or stressed tones and suggest ways to express more empathy.
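The participation-balancing idea can be sketched from per-member speaking time, combined with a coarse group-mood check. This assumes, hypothetically, that the audio pipeline can attribute speaking seconds to each member (speaker diarization) and that per-member arousal estimates are available; the function names, the half-share and double-share thresholds, and the disengagement cutoff are illustrative only.

```python
from statistics import mean


def participation_shares(talk_seconds: dict[str, float]) -> dict[str, float]:
    """Each member's share of total speaking time in the current window."""
    total = sum(talk_seconds.values())
    if total == 0:
        return {member: 0.0 for member in talk_seconds}
    return {member: secs / total for member, secs in talk_seconds.items()}


def balance_prompts(talk_seconds: dict[str, float],
                    low: float = 0.5, high: float = 2.0) -> dict[str, str]:
    """Flag members well below or above an equal share (1/n) of the conversation."""
    fair = 1.0 / len(talk_seconds)
    prompts = {}
    for member, share in participation_shares(talk_seconds).items():
        if share < low * fair:
            prompts[member] = "invite_to_contribute"
        elif share > high * fair:
            prompts[member] = "make_room_for_others"
    return prompts


def group_mood(arousal_by_member: dict[str, float], disengaged_below: float = 0.3) -> str:
    """Coarse group state from mean arousal (hypothetical threshold)."""
    return "disengaged" if mean(list(arousal_by_member.values())) < disengaged_below else "active"


talk = {"A": 300.0, "B": 20.0, "C": 80.0}
print(balance_prompts(talk), group_mood({"A": 0.6, "B": 0.2, "C": 0.4}))
```

Measuring shares relative to an equal split keeps the rule meaningful for groups of any size; when nobody speaks, every member falls below the threshold and is invited to contribute.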

7. Results and Discussion

The affective digital art education model showed notable effects on learner engagement and creative output. Multimodal emotion recognition achieved a consistent accuracy of 93.4%, indicating dependable affect interpretation. Creative output among students completing emotion-augmented assignments improved by 21%, and engagement duration increased by 17% compared with a conventional environment. Educators reported more empathetic and personalized instruction and more reflective learning. Overall, incorporating affective computing led to emotionally informed art-making, better student-teacher communication, and greater cognitive and emotional congruence, turning art classrooms into adaptive, expressive, and psychologically supportive learning environments.

Table 2

Table 2 Quantitative Evaluation of Emotion Recognition and System Performance

| Evaluation Metric | Facial Recognition (CNN) | Vocal Recognition (LSTM) | Physiological (EEG/HRV) | Multimodal Fusion (Hybrid) |
|---|---|---|---|---|
| Accuracy (%) | 91.8 | 88.6 | 85.4 | 93.4 |
| Precision (%) | 90.5 | 86.9 | 83.7 | 92.8 |
| Recall (%) | 89.8 | 87.5 | 84.2 | 93.1 |
| F1-Score (%) | 90.1 | 87.2 | 83.9 | 93 |
| Latency (ms/frame) | 42 | 58 | 65 | 49 |

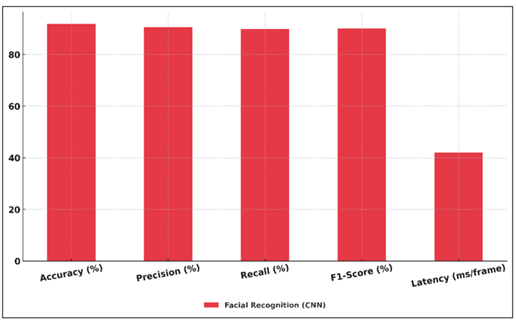

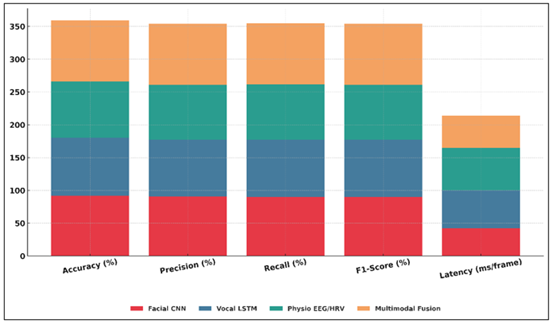

Table 2 presents a quantitative evaluation of the emotion recognition components at the core of the affect-aware art education model. The multimodal fusion (hybrid) model was the most accurate, with an overall accuracy of 93.4%, outperforming the unimodal facial, vocal, and physiological systems. Figure 3 presents the performance metrics of the CNN-based facial recognition system.

Figure 3

Figure 3 Performance Metrics of CNN-Based Facial Recognition System

By combining complementary affective cues (visual micro-expressions, prosodic features, and bio-signals), the fusion approach captures context more effectively and recognizes emotions more reliably. Figure 4 shows a stacked comparison of performance across emotion-recognition modalities. The facial recognition (CNN) model achieved 91.8% accuracy with the lowest latency (42 ms/frame), making it suitable for real-time classroom deployment.

Figure 4

Figure 4 Stacked Performance Comparison Across Emotion Recognition Modalities

Vocal recognition (LSTM) achieved a recall of 87.5% in detecting engagement and frustration but was somewhat sensitive to noise. Physiological models (EEG/HRV) provided greater emotional granularity at the cost of lower accuracy (85.4%), owing to sensor variability and signal artifacts. F1-scores follow the same ranking as accuracy, indicating balanced classification across emotion categories. Notably, the hybrid system maintained a moderate latency (49 ms/frame), supporting seamless real-time interaction with the platform. These results indicate that multimodal emotion sensing is a reliable basis for adaptive feedback and individualized creative learning, making it possible to build empathetic digital art environments that respond dynamically to students' affective and cognitive states.
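Because the F1-score is conventionally the harmonic mean of precision and recall, F1 = 2PR / (P + R), the values reported in Table 2 can be cross-checked against the reported precision and recall. The sketch below does this using only the numbers in Table 2; the function names and the 0.1-point tolerance are our own choices.

```python
# Precision, recall, and F1 (%) exactly as reported in Table 2
TABLE2 = {
    "Facial Recognition (CNN)": (90.5, 89.8, 90.1),
    "Vocal Recognition (LSTM)": (86.9, 87.5, 87.2),
    "Physiological (EEG/HRV)": (83.7, 84.2, 83.9),
    "Multimodal Fusion (Hybrid)": (92.8, 93.1, 93.0),
}


def harmonic_f1(precision: float, recall: float) -> float:
    """F1 as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def inconsistent_rows(table: dict, tol: float = 0.1) -> list[str]:
    """Rows whose reported F1 differs from the harmonic mean by more than `tol` points."""
    return [name for name, (p, r, f1) in table.items()
            if abs(harmonic_f1(p, r) - f1) > tol]


for name, (p, r, f1) in TABLE2.items():
    print(f"{name}: recomputed {harmonic_f1(p, r):.2f}, reported {f1}")
print("rows outside tolerance:", inconsistent_rows(TABLE2))
```

All four rows agree with the reported F1 to within 0.1 percentage points, which is consistent with rounding. If the reported F1 values were macro-averaged over emotion classes, they need not equal the harmonic mean of the averaged precision and recall exactly, so small residual differences would not by themselves indicate an error.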

8. Conclusion

Affective computing in modern art education represents a paradigm shift at the intersection of creativity, emotion, and learning. Through emotion detection, intelligent feedback, and emotional personalization, art education moves beyond a primarily skill-based experience toward a more human-centric and technologically informed one. The findings indicate that emotionally sensitive systems not only improve engagement and creative performance but also foster emotional intelligence and self-awareness, qualities essential to holistic artistic growth. Using multimodal (facial, vocal, and physiological) emotion analysis, the platform can respond to learners' emotions in real time and offer empathetic guidance that aligns artistic exploration with emotional well-being. Emotional responsiveness gives teachers deeper insight into the psychological landscape of their classrooms and enables targeted interventions that do not disrupt the affective rhythm of individual learners. In addition, emotion-enhanced tasks make art-making an introspective experience, enabling students to visualize emotion more consciously. The system's adaptability to diverse emotional profiles makes art education more inclusive, psychologically safe, equitable, and compassionate. More broadly, affective computing transforms digital art learning into a synthesis of creativity, cognition, and compassion. It shows that technology designed to recognize and respond to emotion can enhance human expression rather than mechanize it. Ultimately, affective computing can equip future artists and educators with the means to understand, communicate, and regulate emotions, bridging art, science, and compassion toward a more empathetic and responsive educational future.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Agung, E. S., Rifai, A. P., and Wijayanto, T. (2024). Image-Based Facial Emotion Recognition Using Convolutional Neural Network on Emognition Dataset. Scientific Reports, 14, Article 14429. https://doi.org/10.1038/s41598-024-65276-x

Canal, F. Z., Müller, T. R., Matias, J. C., Scotton, G. G., de Sá Junior, A. R., Pozzebon, E., and Sobieranski, A. C. (2022). A Survey on Facial Emotion Recognition Techniques: A State-Of-The-Art Literature Review. Information Sciences, 582, 593–617. https://doi.org/10.1016/j.ins.2021.10.005

El Bahri, N., Itahriouan, Z., Abtoy, A., and Belhaouari, S. B. (2023). Using Convolutional Neural Networks to Detect Learner’s Personality Based on the Five Factor Model. Computers and Education: Artificial Intelligence, 5, Article 100163. https://doi.org/10.1016/j.caeai.2023.100163

Elansary, L., Taha, Z., and Gad, W. (2024). Survey on Emotion Recognition Through Posture Detection and the Possibility of its Application in Virtual Reality (arXiv preprint arXiv:2408.01728).

Elsheikh, R. A., Mohamed, M. A., Abou-Taleb, A. M., and Ata, M. M. (2024). Improved Facial Emotion Recognition Model Based on a Novel Deep Convolutional Structure. Scientific Reports, 14, Article 29050. https://doi.org/10.1038/s41598-024-79167-8

Khare, S. K., Blanes-Vidal, V., Nadimi, E. S., and Acharya, U. R. (2024). Emotion Recognition and Artificial Intelligence: A Systematic Review (2014–2023) and Research Recommendations. Information Fusion, 102, Article 102019. https://doi.org/10.1016/j.inffus.2023.102019

Lin, W., and Li, C. (2023). Review of Studies on Emotion Recognition and Judgment Based on Physiological Signals. Applied Sciences, 13(4), Article 2573. https://doi.org/10.3390/app13042573

Mohana, M., and Subashini, P. (2024). Facial Expression Recognition Using Machine Learning and Deep Learning Techniques: A Systematic Review. SN Computer Science, 5, Article 432. https://doi.org/10.1007/s42979-024-02792-7

Saganowski, S., Perz, B., Polak, A. G., and Kazienko, P. (2022). Emotion Recognition for Everyday Life Using Physiological Signals from Wearables: A Systematic Literature Review. IEEE Transactions on Affective Computing, 14, 1876–1897. https://doi.org/10.1109/TAFFC.2022.3176135

Schuller, D. M., and Schuller, B. W. (2021). A Review on Five Recent and Near-Future Developments in Computational Processing of Emotion in the Human Voice. Emotion Review, 13(1), 44–50. https://doi.org/10.1177/1754073919898526

Singh, Y. B., and Goel, S. (2022). A Systematic Literature Review of Speech Emotion Recognition Approaches. Neurocomputing, 492, 245–263. https://doi.org/10.1016/j.neucom.2022.04.028

Trinh Van, L., Dao Thi Le, T., Le Xuan, T., and Castelli, E. (2022). Emotional Speech Recognition Using Deep Neural Networks. Sensors, 22(4), Article 1414. https://doi.org/10.3390/s22041414

Wu, J., Zhang, Y., Sun, S., Li, Q., and Zhao, X. (2022). Generalized Zero-Shot Emotion Recognition from Body Gestures. Applied Intelligence, 52, 8616–8634. https://doi.org/10.1007/s10489-021-02927-w

Zhao, Y., and Shu, X. (2023). Speech Emotion Analysis Using Convolutional Neural Network (CNN) and Gamma Classifier-Based Error Correcting Output codes (ECOC). Scientific Reports, 13, Article 20398. https://doi.org/10.1038/s41598-023-47118-4

This work is licensed under a Creative Commons Attribution 4.0 International License.

© ShodhKosh 2025. All Rights Reserved.