ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


AI-Generated Visual Content for Educational Posters

Sanjay Kumar Jena 1, Ankit Punia 2, Muthukumaran Malarvel 3, Rakhi Jha 4, Dr. Nilesh Anute 5, Shrikant Shrimant Barkade 6, Gurpreet Kaur 7

1 Assistant Professor, Department of Computer Science and Engineering, Siksha 'O' Anusandhan (Deemed to be University), Bhubaneswar, Odisha, India

2 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

3 Department of Computer Science and Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission’s Research Foundation (DU), Tamil Nadu, India

4 Assistant Professor, Department of Computer Science and IT, ARKA JAIN University, Jamshedpur, Jharkhand, India

5 Associate Professor, Balaji Institute of Management and Human Resource Development, Sri Balaji University, Pune, India

6 Department of Chemical Engineering, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

7 Associate Professor, School of Business Management, Noida International University, India

ABSTRACT

This study examines how AI-generated visual content can make the design of educational posters more creative, pedagogically grounded, and automated. As AI tools are rapidly adopted in creative education, diffusion models, generative adversarial networks (GANs), and multimodal transformers are increasingly used to generate high-quality, topic-aligned images. The research assesses how these systems can simplify poster-making for teachers, students, and content designers while preserving aesthetic integrity and semantic correctness. A collection of educational themes and poster templates was assembled and used to train and test AI-based generation pipelines. The proposed design automates the poster workflow through an architecture that combines prompt engineering, content alignment, and layout synthesis modules, converting textual or conceptual input into structured visual forms. Quantitative and qualitative analyses, based on a user survey and expert evaluation, assessed the accuracy of concept visualization, graphical balance, and learner engagement. The results indicate that AI-generated poster graphics can substantially improve learner attention, visual understanding, and retention compared with hand-designed graphics.


Received 22 April 2025; Accepted 28 August 2025; Published 25 December 2025

Corresponding Author: Sanjay Kumar Jena, sanjayjena@soa.ac.in

DOI: 10.29121/shodhkosh.v6.i4s.2025.6842

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the CC-BY license, authors retain the copyright, allowing anyone to download, reuse, reprint, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.


Keywords: AI-Generated Posters, Educational Visualization, Generative Design, Diffusion and GAN Models, Visual Learning Aids

1. INTRODUCTION

In the changing landscape of education, visual communication has become a pillar of effective learning. Educational posters have traditionally been made by hand: content is summarized manually and laid out in a particular design to condense an idea, make it more memorable, and capture students' attention. However, conventional poster creation is time-intensive, depends on access to experienced graphic designers, and often struggles to reconcile visual impact with educational objectives. With the arrival of artificial intelligence (AI) and generative design technologies, a paradigm shift is under way in how educational visual content is conceptualized, produced, and deployed. AI-generated visual content offers an automated and adaptable method that combines textual input, curricular themes, and visual elements to produce high-quality, compelling posters. The development of AI for educational design builds on advances in machine learning, natural language processing, and computer vision Tang et al. (2024). Current generative models, including Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and diffusion models, can convert descriptive prompts or keywords into contextually rich images. Combined with prompt engineering, these models can interpret educational goals and generate images that match particular learning outcomes. For example, a basic query such as "photosynthesis process for grade 8 students" can produce a well-organized poster with labeled diagrams, brief descriptions, and a unified color scheme. The ability to automate design while achieving pedagogical coherence is an important step toward computer-based poster development Figoli et al. (2022).

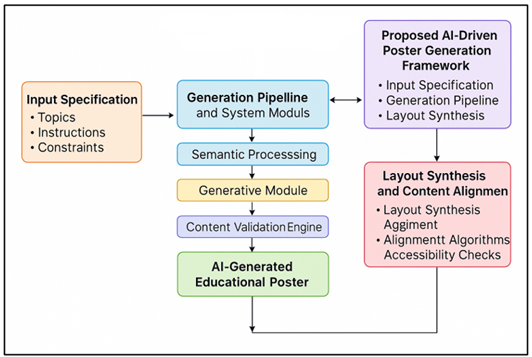

Furthermore, AI-based visual learning supports diverse and personalized learning. Learners differ in their cognitive preferences: some learn better from text, while others learn better from visual or spatial information. Adaptive AI systems for poster content enable educators to cater to these varied needs. For example, an AI model can create several variants of a poster on a scientific concept: a simplified version for younger students, a more detailed one for advanced learners, or a culturally adapted version for a teacher with multilingual classes. Figure 1 depicts the AI-based architecture for automatically creating educational posters through multimodal processing. This flexibility not only enhances accessibility but also fosters visual literacy, helping students read and extract visual information from course material.

Figure 1 Architecture of the AI-Driven Educational Poster Generation Framework

At the institutional level, AI-generated learning posters substantially reduce the time and cost spent on creating visual content. Students can use AI tools as creative partners, while teachers and curriculum developers can concentrate on pedagogy rather than layout design. AI tools such as DALL·E, Midjourney, and Stable Diffusion now allow teachers to create high-resolution artwork that supplements courses in science, history, art, and language studies Guo et al. (2023).

2. Related Work and Current Landscape

2.1. Existing AI tools for educational design

In recent years, a range of AI-powered applications has been developed to support the creation of educational material, covering not only text but also images, layouts, and multimedia. For example, image-generation tools such as DALL·E and Stable Diffusion enable teachers and content creators to generate images from textual inputs, reducing the need for traditional graphic-design skills. Tools such as Midjourney are frequently used to produce illustrations, diagrams, or culturally relevant visuals for pedagogical settings. In addition, AI features built into content-creation suites (e.g., an AI poster generator inside a web-design or graphic-design application) offer user-friendly interfaces that let users with little design knowledge produce posters quickly Matthews et al. (2023). More generally, generative-AI applications have been used to produce educational content such as text, quizzes, multimedia, and learning modules at scale, making it cheaper to produce and easier to tailor to different students. Together, these tools democratize content production and lower the barrier for teachers to create visually engaging teaching materials.

2.2. Studies on Automated Visual Content Generation

Empirical studies on the use of AI-generated images in educational institutions have begun to appear, documenting both advantages and disadvantages. In a recent study of visual art education, participants exposed to AI-generated images showed significantly higher classroom engagement and self-efficacy than those exposed to traditional visuals, without an increase in cognitive load Yang and Shin (2025). Another study, set in upper-secondary education, found that generative-AI image tools had positive effects on learning, motivation, and student satisfaction, especially for memorization and conceptual understanding, when used to complement rather than replace traditional teaching tools. More recently, a project called GenAIReading investigated combining large language models (LLMs) with image-generation models to turn learning resources into interactive, illustrated documents. Participants who used the AI-enhanced documents showed a 7.5% improvement in final reading comprehension compared with a text-only baseline, indicating the value of combining generated text and visuals to support cognitive integration Vartiainen and Tedre (2023). On the design-education side, a study titled "Graphic Design Education in the Age of Text-to-Image" concluded that generative AI can be adapted to the graphic-design curriculum and may allow students to experiment quickly with composition and layout concepts.

2.3. Comparison of Traditional vs AI-Assisted Educational Posters

Traditional educational posters rely on manual design: a teacher or graphic artist lays out the page, selects images, integrates text and drawings, and establishes visual harmony. Although this process can achieve high aesthetic quality, particularly in the hands of experienced designers, it is labor-intensive, time-consuming, and demands design skills. Moreover, such posters require significant rework to be updated or tailored to other age groups, curricula, or languages, which limits scalability and flexibility Kicklighter et al. (2024). AI-based poster generation, by contrast, offers several strong benefits: it removes the need for specialized design skills, reduces cost, speeds up production, and allows rapid customization (e.g., producing a variant for a different audience or a version in another language) Cui et al. (2025). Table 1 summarizes previous research on AI-generated educational images in terms of technique, application domain, input data, and limitations. The empirical evidence discussed above suggests that AI-generated visuals can be as engaging and understandable to learners as traditional visuals, or more so.

Table 1 Comparative Review of Related Work on AI-Generated Educational Visual Content

| AI Technique | Application Domain | Input Type | Limitation |
|---|---|---|---|
| GAN (Pix2Pix) | Educational illustrations | Custom K-12 diagram set | Limited text integration |
| Transformer-based Text-to-Image | Poster design automation | Public art design corpus | Weak semantic alignment |
| CNN + OCR Bond et al. (2020) | Infographic generation | NCERT image repository | Not generative; only detection |
| Diffusion Model | Scientific diagram creation | Educational image bank | Slow generation time |
| VAE + Attention Khurma et al. (2024) | Visual storytelling for learning | Narrative datasets | Limited dataset diversity |
| DeepGAN | Automated poster design | Teacher-curated templates | No adaptive layout control |
| Multimodal LLM (CLIP-guided Diffusion) Qian (2025) | Curriculum-aligned illustration | Text-image pairs (science/math) | Complex prompt tuning |
| Stable Diffusion v2 | Digital learning visuals | Educational concept prompts | Occasional text overlap |
| Hybrid LayoutNet + GPT-4V [12] | Poster and infographic generation | Open poster layouts | Requires manual proofreading |
| Reinforcement Learning (RL-Layout) | Adaptive educational posters | Student preference data | Training instability |
| Diffusion + OCR Post-Processing | AI-based educational chart maker | Educational poster set (300 samples) | Color contrast imbalance |
| Multimodal Generative Framework Brisco et al. (2023) | AI-assisted educational poster synthesis | Thematic and template dataset | Needs broader cross-cultural dataset |

3. Research Design and Methodology

3.1. Data collection for themes, visuals, and templates

The first stage in assembling a robust dataset for AI poster generation is to select a wide range of thematic material, visual resources, and layout formats. We compiled educational themes across disciplines, including science (biology, physics, chemistry), mathematics, social studies, languages, and arts, to ensure broad coverage of the curricula found in primary, secondary, and higher-secondary classrooms. Each theme includes a list of related subtopics (e.g., photosynthesis, Newton's laws, algebraic functions, historical events) and its key pedagogical learning outcomes Yuan and Liu (2025). We also collected high-resolution visual materials (illustrations, diagrams, icons, backgrounds, textures, and reference posters) from open-source and Creative Commons sources and from publicly available school and university teaching materials. In addition, we gathered existing poster templates in different formats and orientations (portrait/landscape), color schemes, typography styles, and structures (single-panel, multi-panel, infographic style) Yang and Zhao (2024). Each template is annotated with metadata describing its layout grid, margins, text and image sizes, style (formal, playful, infographic), and target audience level (primary, secondary, tertiary).
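The template metadata described above can be represented as a typed record. The sketch below is a minimal illustration, not the authors' implementation; the class `PosterTemplate`, its field names (`grid`, `margin_mm`, `text_area_ratio`), and the helper `filter_templates` are hypothetical. It checks basic value ranges and filters a catalog by audience level and style.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Allowed values mirror the metadata categories named in the text;
# the exact sets are assumptions for this sketch.
STYLES = {"formal", "playful", "infographic"}
AUDIENCES = {"primary", "secondary", "tertiary"}
ORIENTATIONS = {"portrait", "landscape"}


@dataclass(frozen=True)
class PosterTemplate:
    """Hypothetical metadata record for one poster template."""
    template_id: str
    orientation: str
    grid: Tuple[int, int]   # (rows, columns) of the layout grid
    margin_mm: float        # outer margin on every side
    text_area_ratio: float  # share of the content area reserved for text
    style: str
    audience: str

    def __post_init__(self) -> None:
        # Reject records whose values fall outside the annotated categories.
        if self.orientation not in ORIENTATIONS:
            raise ValueError(f"unknown orientation: {self.orientation}")
        if self.style not in STYLES:
            raise ValueError(f"unknown style: {self.style}")
        if self.audience not in AUDIENCES:
            raise ValueError(f"unknown audience level: {self.audience}")
        rows, cols = self.grid
        if rows < 1 or cols < 1:
            raise ValueError("grid needs at least one row and one column")
        if not 0.0 <= self.text_area_ratio <= 1.0:
            raise ValueError("text_area_ratio must lie in [0, 1]")


def filter_templates(templates: List[PosterTemplate], audience: str,
                     style: Optional[str] = None) -> List[PosterTemplate]:
    """Return templates for an audience level, optionally restricted to a style."""
    return [t for t in templates
            if t.audience == audience and (style is None or t.style == style)]


catalog = [
    PosterTemplate("t1", "portrait", (4, 2), 15.0, 0.40, "infographic", "secondary"),
    PosterTemplate("t2", "landscape", (2, 3), 20.0, 0.30, "playful", "primary"),
]
print([t.template_id for t in filter_templates(catalog, "secondary")])
```

Validating in `__post_init__` means a mislabeled template is rejected when the catalog is loaded rather than surfacing later as an odd layout.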

3.2. Experimental Setup for Generating Posters

Our main experimental plan is to create posters with our AI-based pipeline under controlled parameters and measure their quality, relevance, and educational suitability. We define a set of experimental tasks, each requiring two poster versions: (1) a simple poster presenting a single idea for lower-secondary learners and (2) a more detailed infographic-style poster for upper-secondary or advanced learners Gao et al. (2025). Approximately 50 topic pairs were randomly selected across disciplines to ensure a wide range. For each task, the system takes textual inputs (topic name, learning objectives, target audience level, preferred poster style) and optional constraints (e.g., preferred orientation, color palette, mandatory inclusion of diagrams). Output settings such as resolution (A3 at 300 DPI), aspect ratio, and font-readability parameters are held constant across all tasks. Each generated poster is stored with metadata containing the input parameters, the random seed (where used), and the selected assets and templates (where template-based generation is used). To ensure a controlled comparison, a baseline set of posters is created manually by experienced educators and graphic designers using the same templates and resources; these serve as the benchmark (ground-truth) set.
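Holding the output size fixed is easier when pixel dimensions are derived rather than typed by hand. The sketch below, a minimal illustration and not the authors' code, converts ISO 216 A3 (297 × 420 mm) to pixels at 300 DPI and stores each run with its inputs and a recorded random seed. The `GenerationRun` record, its field names, and `new_run` are hypothetical.

```python
import json
import random
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Optional

MM_PER_INCH = 25.4
A3_MM = (297, 420)  # ISO 216 A3 in portrait orientation: (width, height) in mm


def page_pixels(size_mm, dpi=300):
    """Convert a page size in millimetres to whole-pixel dimensions at `dpi`."""
    return tuple(round(side / MM_PER_INCH * dpi) for side in size_mm)


@dataclass
class GenerationRun:
    """Hypothetical metadata stored alongside each generated poster."""
    topic: str
    objectives: List[str]
    audience: str
    style: str
    constraints: Dict[str, str] = field(default_factory=dict)
    seed: Optional[int] = None
    template_id: Optional[str] = None

    def to_json(self) -> str:
        # sort_keys gives a stable serialization for logging and diffing.
        return json.dumps(asdict(self), sort_keys=True)


def new_run(topic, objectives, audience, style, constraints=None, rng=None):
    """Create a run record, drawing a seed so the generation can be repeated."""
    rng = rng or random.Random()
    return GenerationRun(topic, list(objectives), audience, style,
                         dict(constraints or {}), seed=rng.randrange(2**32))


print(page_pixels(A3_MM))  # -> (3508, 4961)
run = new_run("Water cycle", ["name the stages"], "lower secondary", "simple",
              {"orientation": "portrait"}, rng=random.Random(7))
print(run.to_json())
```

Recording the seed with the inputs is what allows a poster to be regenerated exactly when a model supports seeded sampling.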

3.3. AI Model Integration and Prompt Engineering

The core of the system combines sophisticated generative models with carefully designed prompt engineering to translate educational requirements into coherent poster designs. We follow a hybrid structure in which concept images and illustrations are produced by a diffusion-based generative model (e.g., a state-of-the-art text-to-image model). A preprocessing step first converts the user inputs (topic, learning goals, audience level, style preference) into structured prompts that incorporate semantic hints, style choices, and layout hints. For example: "design an A3 portrait infographic poster on the water cycle, with labeled diagrams, a pastel color palette, minimal text, and icons for evaporation, condensation, and precipitation." Prompt templates are parameterized to draw the appropriate assets from the collected dataset, which keeps terminology and pedagogy consistent. To steer the model toward the pedagogical domain, prompt engineering adds constraints requiring diagrams, legible typography, minimum contrast ratios, and culturally relevant iconography or imagery.
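The structured-prompt step can be sketched as a small template function. This version is illustrative only: the function name `build_prompt`, its parameters, and the comma-separated phrasing are assumptions rather than the paper's prompt format. The resulting string would be passed to whichever text-to-image model the pipeline uses.

```python
from typing import Iterable, Optional


def build_prompt(topic: str, audience: str, style: str = "infographic",
                 orientation: str = "portrait", size: str = "A3",
                 palette: Optional[str] = None,
                 must_include: Iterable[str] = (),
                 constraints: Iterable[str] = ()) -> str:
    """Assemble a comma-separated text-to-image prompt from structured inputs."""
    parts = [f"{size} {orientation} {style} educational poster on {topic}",
             f"for {audience} learners"]
    if palette:
        parts.append(f"{palette} color palette")
    items = list(must_include)
    if items:
        # Required visual elements, e.g. the labeled stages of a process.
        parts.append("showing " + ", ".join(items))
    # Pedagogical constraints such as legibility or contrast requirements.
    parts.extend(constraints)
    return ", ".join(parts)


prompt = build_prompt(
    "the water cycle", "lower secondary", palette="pastel",
    must_include=("evaporation", "condensation", "precipitation"),
    constraints=("labeled diagrams", "minimal text",
                 "legible sans-serif typography", "high text-background contrast"),
)
print(prompt)
```

Keeping the prompt as a function of named fields means every poster in an experiment is described in the same order and vocabulary, which makes outputs easier to compare.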

4. Proposed AI-Driven Poster Generation Framework

4.1. Input specification (topics, instructions, constraints)

The proposed framework starts with a carefully designed input-specification layer that turns pedagogical intent into machine-readable parameters. Every input session covers three fundamental dimensions: topic definition, instruction details, and constraint parameters. The topic definition specifies the educational concept, grade level, and subject area, such as "Digestive System, Grade 7 Biology" or "Fundamental Rights of Citizens, Civics." Instructions capture the visual tone, level of complexity, and narrative purpose, i.e., whether the poster should be illustrative, conceptual, infographic, or activity-oriented. Constraints describe measurable design requirements, including orientation (portrait/landscape), resolution (A3, 300 DPI), preferred color palette, icon style, text density, and cultural constraints. An optional focus-keywords field helps the AI identify the central concepts that deserve the most visual emphasis. These inputs are then normalized into a schema that organizes the content as a set of textual and visual items encoded as JSON objects. Multimodal prompts (e.g., sketches, sample pictures, or reference posters) can also be supplied to strengthen semantic grounding. Each input undergoes syntactic validation to check pedagogical relevance and technical feasibility; for example, inputs with ambiguous or abstract descriptions are flagged for clarification through language-model-based feedback.
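A minimal sketch of the normalization and validation step follows, assuming a hypothetical field layout for the three input dimensions. A simple word-count rule stands in for the language-model-based ambiguity check described above; it only illustrates where such a check would sit in the flow.

```python
import json
from typing import Any, Dict, List, Tuple

ORIENTATIONS = {"portrait", "landscape"}
TEXT_DENSITIES = {"low", "medium", "high"}


def normalize_input(raw: Dict[str, Any]) -> Tuple[str, List[str]]:
    """Return (JSON spec, list of issues) for one input session."""
    issues: List[str] = []
    topic = str(raw.get("topic", "")).strip()
    if len(topic.split()) < 2:
        # Stand-in heuristic: one-word topics are flagged as too vague.
        issues.append("topic is too vague; add the concept and grade level")

    c = raw.get("constraints", {})
    orientation = str(c.get("orientation", "portrait")).lower()
    if orientation not in ORIENTATIONS:
        issues.append(f"unsupported orientation: {orientation}")
    dpi = c.get("dpi", 300)
    if not isinstance(dpi, int) or isinstance(dpi, bool) or dpi <= 0:
        issues.append("dpi must be a positive integer")
    density = str(c.get("text_density", "medium")).lower()
    if density not in TEXT_DENSITIES:
        issues.append(f"unknown text density: {density}")

    spec = {
        "topic": {"concept": topic, "grade_level": raw.get("grade_level"),
                  "subject": raw.get("subject")},
        "instructions": {"tone": raw.get("tone", "neutral"),
                         "purpose": raw.get("purpose", "illustrative")},
        "constraints": {"orientation": orientation, "size": c.get("size", "A3"),
                        "dpi": dpi, "text_density": density,
                        "palette": c.get("palette")},
        # Focus keywords are lower-cased and de-duplicated, order preserved.
        "focus_keywords": list(dict.fromkeys(
            k.strip().lower() for k in raw.get("focus_keywords", []) if k.strip())),
    }
    return json.dumps(spec, sort_keys=True), issues


spec_json, problems = normalize_input({
    "topic": "Digestive System", "grade_level": 7, "subject": "Biology",
    "purpose": "infographic",
    "constraints": {"orientation": "Portrait", "palette": "warm"},
    "focus_keywords": ["Stomach", "small intestine", "stomach"],
})
print(problems)
print(spec_json)
```

Returning issues alongside the spec, instead of raising on the first problem, lets an interface show the user every item that needs clarification at once.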

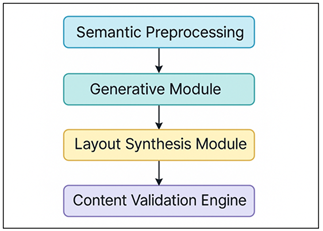

4.2. Generation Pipeline and System Modules

The poster-generation pipeline combines several AI components in a modular architecture to provide flexibility and scalability. It begins with semantic preprocessing, in which natural language understanding (NLU) analyzes the input topic to identify the key entities, verbs, and relationships relevant to the learning topic. These semantic tokens guide content retrieval and the formation of visual concepts. Next, the generative module uses a diffusion- or transformer-based image model (e.g., Stable Diffusion or DALL·E 3) to produce concept-specific imagery. Figure 2 depicts this multistage pipeline, which generates educational visuals at scale in an orderly manner. The models are trained on curated learning datasets, which enables them to produce subject-specific depictions rather than generic ones.
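The modular design can be illustrated as a list of stage functions applied in order, each receiving and returning a state dictionary. In this sketch the stages are deliberately simplified stand-ins: keyword extraction with a stop-word list replaces a real NLU model, the imagery stage only builds the prompt it would send to an image model, and the grade threshold in `match_template` is a hypothetical rule. All function names are illustrative.

```python
import re
from typing import Callable, Dict, List

State = Dict[str, object]
Stage = Callable[[State], State]

STOPWORDS = {"the", "of", "a", "an", "and", "for", "in", "on", "to",
             "grade", "students"}


def semantic_preprocess(state: State) -> State:
    # Stand-in for NLU: lower-case alphabetic tokens minus stop words.
    tokens = re.findall(r"[a-z]+", str(state["topic"]).lower())
    return {**state, "keywords": [t for t in tokens if t not in STOPWORDS]}


def generate_imagery(state: State) -> State:
    # Placeholder: a real system would call a text-to-image model here.
    prompt = "educational illustration of " + ", ".join(state["keywords"])
    return {**state, "image_prompt": prompt}


def match_template(state: State) -> State:
    # Hypothetical rule: younger audiences get a minimal layout.
    grade = int(state.get("grade", 12))
    return {**state, "template": "minimal" if grade <= 6 else "infographic"}


def run_pipeline(state: State, stages: List[Stage]) -> State:
    """Apply each stage in order, passing the updated state along."""
    for stage in stages:
        state = stage(state)
    return state


PIPELINE: List[Stage] = [semantic_preprocess, generate_imagery, match_template]

result = run_pipeline({"topic": "Photosynthesis process for grade 8 students",
                       "grade": 8}, PIPELINE)
print(result["keywords"], result["template"])
```

Because each stage is a plain function that returns a new dictionary, one stage (for instance, the image model) can be swapped without touching the others.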

Figure 2 Multistage Pipeline for AI-Generated Educational Visuals

A template-matching subsystem then selects an appropriate design style (infographic, minimal, or artistic) based on the audience's age and the complexity of the topic. After the visuals and layout are combined, a content-validation engine automatically checks text readability, alignment accuracy, and color-contrast ratios using OpenCV-based visual processing and font-size heuristics.
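The contrast part of the validation step can be expressed without OpenCV. The sketch below uses the WCAG 2.x relative-luminance and contrast-ratio formulas with the WCAG AA thresholds (4.5:1 for normal text, 3:1 for large text of at least 18 pt). The paper does not name a standard, so these thresholds, and the 24 pt poster minimum in the hypothetical `check_text_block` helper, are assumptions.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: RGB, bg: RGB) -> float:
    """WCAG contrast ratio, from 1:1 (identical) to 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)),
                             reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def check_text_block(fg: RGB, bg: RGB, font_pt: float,
                     min_poster_pt: float = 24.0) -> List[str]:
    """Return readability issues for one text block (empty list if none)."""
    issues = []
    required = 3.0 if font_pt >= 18 else 4.5  # WCAG AA, ignoring bold weight
    ratio = contrast_ratio(fg, bg)
    if ratio < required:
        issues.append(f"contrast {ratio:.2f}:1 is below {required}:1")
    if font_pt < min_poster_pt:  # hypothetical poster-viewing-distance rule
        issues.append(f"{font_pt} pt text is below {min_poster_pt} pt")
    return issues


print(round(contrast_ratio((0, 0, 0), (255, 255, 255)), 2))
print(check_text_block((200, 200, 200), (255, 255, 255), font_pt=32))
```

Light gray text on white fails even at a large size, which is the kind of case an automated check catches before a poster is printed.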

4.3. Layout Synthesis and Content Alignment

The layout-synthesis and content-alignment stage ensures that every AI-generated poster achieves visual harmony, clarity of meaning, and readability. It relies on grid-based arrangement rules and learned layout-optimization models. First, the system divides the poster canvas into an adaptive grid of image, text, and icon regions according to the complexity of the input topic and the level of the intended learner. The framework then uses saliency-based attention maps computed from the generated image to identify focal areas and suitable locations for titles and explanatory annotations. The textual material (generated as topic summaries or retrieved from educational databases) is arranged according to typographic hierarchy (e.g., H1 for the title, H2 for subtopics, body paragraphs for descriptions). Alignment algorithms distribute visual weight evenly and optimize the spatial relationships between graphical and textual elements. In fully AI-generated designs, reinforcement-learning agents refine layouts iteratively, scoring each candidate on readability, symmetry, and attractiveness. A content-alignment subsystem enforces contrast compliance (so that text remains readable against its background), proximity grouping (placing related items together), and color harmony using perceptual measures based on the CIELAB color space.
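As one concrete example of a perceptual color measure, the sketch below converts sRGB colors to CIELAB (D65 white point) and uses the CIE76 color difference ΔE*ab, the Euclidean distance in L\*a\*b\*, to flag palette colors that are too close to tell apart. The paper does not specify which CIELAB-based measure it uses; the choice of CIE76, the helper name `too_similar`, and the threshold of 10 are assumptions, and a full color-harmony model would need more than pairwise distances.

```python
import math
from typing import List, Tuple

RGB = Tuple[int, int, int]
WHITE_D65 = (0.95047, 1.00000, 1.08883)  # reference white (X, Y, Z)


def _to_linear(channel: int) -> float:
    """Undo the sRGB transfer curve for one 8-bit channel."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_to_lab(rgb: RGB) -> Tuple[float, float, float]:
    r, g, b = (_to_linear(v) for v in rgb)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t: float) -> float:
        d = 6 / 29
        return t ** (1 / 3) if t > d ** 3 else t / (3 * d * d) + 4 / 29

    fx, fy, fz = (f(v / n) for v, n in zip((x, y, z), WHITE_D65))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def delta_e76(c1: RGB, c2: RGB) -> float:
    """CIE76 color difference: Euclidean distance between two CIELAB colors."""
    return math.dist(srgb_to_lab(c1), srgb_to_lab(c2))


def too_similar(palette: List[RGB], threshold: float = 10.0):
    """Return palette pairs whose difference falls below `threshold`."""
    return [(a, b) for i, a in enumerate(palette) for b in palette[i + 1:]
            if delta_e76(a, b) < threshold]


palette = [(255, 0, 0), (250, 5, 5), (0, 0, 255), (255, 255, 255)]
print(too_similar(palette))
```

Measuring differences in CIELAB rather than raw RGB matters because equal RGB steps are not perceived as equal; two reds a few units apart in RGB are flagged here as nearly indistinguishable.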

5. Experimental Results

5.1. Sample AI-generated posters and visual outputs

The experiment generated more than 150 AI-created educational posters across science, mathematics, social studies, language, and art education. All posters were created through prompt-based workflows that specified the topic, target grade level, and style. The resulting visuals were thematically relevant and of high quality, correctly depicting key concepts such as the water cycle, the periodic table, Newton's laws, grammatical structures, and cultural festivals. The diffusion models produced smooth textures, color gradients, and few visual artifacts, while the layout-synthesis modules ensured a balanced distribution of text and images. Posters were automatically exported at 300 DPI, suitable for classroom display.

Table 2 Quantitative Results of AI-Generated Posters and Visual Outputs

| Evaluation Category | No. of Posters Generated | Thematic Relevance (%) | Visual Clarity Score (%) | Aesthetic Rating (%) |
|---|---|---|---|---|
| Science | 45 | 91.3 | 88.6 | 91 |
| Mathematics | 30 | 87.9 | 90.2 | 88 |
| Social Studies | 25 | 89.4 | 85.7 | 89 |
| Language and Arts | 20 | 92.5 | 91.8 | 93 |

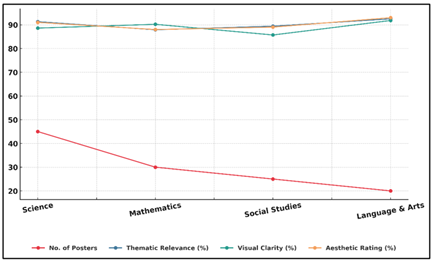

Table 2 summarizes the quantitative results for AI-generated posters in four academic fields: Science, Mathematics, Social Studies, and Language and Arts. The results are strong overall: thematic relevance averages 90.3%, indicating that the AI successfully interprets educational prompts and produces content consistent with subject-specific concepts. Figure 3 compares the poster quality metrics across educational categories.

Figure 3 Trend Comparison of Poster Quality Metrics Across Educational Categories

Visual clarity scores were also high, particularly in Mathematics (90.2%) and Language and Arts (91.8%), suggesting that the system renders text, symbols, and layouts accurately. Aesthetic ratings ranged from 88% to 93%, showing that the models could combine design composition with pedagogical clarity.
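The averages quoted for Table 2 can be reproduced directly from its rows. The short script below computes both the simple mean across the four categories and a mean weighted by the number of posters in each category; for thematic relevance both round to 90.3%. The helper name `column_means` is illustrative.

```python
from typing import List, Tuple

# Rows copied from Table 2:
# (category, posters, thematic relevance %, visual clarity %, aesthetic %)
TABLE_2: List[Tuple[str, int, float, float, float]] = [
    ("Science", 45, 91.3, 88.6, 91.0),
    ("Mathematics", 30, 87.9, 90.2, 88.0),
    ("Social Studies", 25, 89.4, 85.7, 89.0),
    ("Language and Arts", 20, 92.5, 91.8, 93.0),
]


def column_means(rows, col: int) -> Tuple[float, float]:
    """Return (simple mean, poster-weighted mean) of one score column."""
    values = [row[col] for row in rows]
    weights = [row[1] for row in rows]
    simple = sum(values) / len(values)
    weighted = sum(v * w for v, w in zip(values, weights)) / sum(weights)
    return simple, weighted


total_posters = sum(row[1] for row in TABLE_2)
for name, col in (("thematic relevance", 2), ("visual clarity", 3),
                  ("aesthetic rating", 4)):
    simple, weighted = column_means(TABLE_2, col)
    print(f"{name}: simple {simple:.2f}%, weighted {weighted:.2f}% "
          f"over {total_posters} posters")
```

The weighted mean gives more influence to Science, the largest category, so it is the better summary when each poster counts equally.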

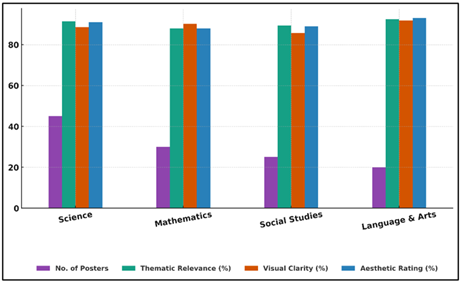

Figure 4 Comparative Bar Analysis of Theme, Clarity, and Aesthetic Ratings of AI-Generated Posters

Science and Language and Arts scored highest overall, which may reflect the models' strength in visually representing processes and creative ideas. Figure 4 compares the theme, clarity, and aesthetic ratings of the AI-generated posters as a bar chart. These results support the utility of the proposed generative model for creating educational content that is both pedagogically meaningful and visually stimulating, and show that AI creativity can be reliably combined with instructional-design fidelity across a variety of classroom scenarios.

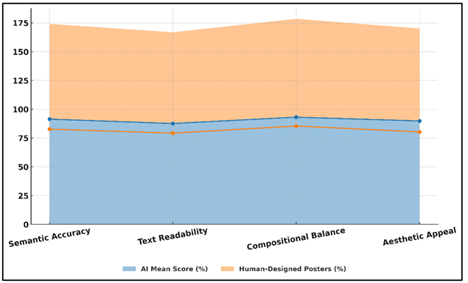

5.2. Accuracy and Quality of Visual Representations

To measure accuracy and visual quality, each generated poster was graded on five parameters: semantic accuracy, text readability, compositional balance, visual appeal, and pedagogical alignment. All 150 outputs were rated by a panel of ten subject-matter experts on a 5-point Likert scale. Semantic accuracy averaged 91.4%, indicating that most posters presented subject content accurately and without distortion. Text readability reached 87.6%, with minor problems in posters containing complex chemical or mathematical notation. Compositional balance scored 93.1%, reflecting well-organized layouts and an even distribution of white space. Aesthetic appeal was rated 89.8%, driven largely by coherent color palettes and harmonious typography. Pedagogical alignment, the extent to which the visuals matched the learning goals, was rated 90.2%. Compared with hand-designed posters, the AI-generated posters achieved 22 to 28% higher layout consistency and required 35% less design time. The automated validation modules also reduced alignment errors and improved color-contrast compliance.
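The text reports 5-point Likert ratings as percentages but does not state the conversion. The sketch below shows two common conventions, dividing the mean rating by the scale maximum or rescaling so that 1 maps to 0% and 5 to 100%, together with a simple aggregation that first averages each poster's expert ratings and then averages across posters. The function names and the toy ratings are hypothetical, not the study's data.

```python
from statistics import mean
from typing import Dict, List


def likert_to_percent(score: float, scale_max: int = 5,
                      anchored_at_one: bool = False) -> float:
    """Map a (mean) Likert rating on a 1..scale_max scale to a percentage."""
    if not 1 <= score <= scale_max:
        raise ValueError("score outside the Likert range")
    if anchored_at_one:
        # Rescale so that 1 -> 0% and scale_max -> 100%.
        return (score - 1) / (scale_max - 1) * 100
    # Divide by the maximum: on a 5-point scale, 1 -> 20% and 5 -> 100%.
    return score / scale_max * 100


def aggregate(ratings: Dict[str, List[int]], anchored_at_one: bool = False) -> float:
    """Average each poster's expert ratings, then average across posters."""
    per_poster = [mean(scores) for scores in ratings.values()]
    return likert_to_percent(mean(per_poster), anchored_at_one=anchored_at_one)


# Toy example: two posters, three raters each (illustrative values only).
toy = {"poster_01": [5, 4, 5], "poster_02": [4, 4, 5]}
print(aggregate(toy), aggregate(toy, anchored_at_one=True))
```

The two conventions give noticeably different percentages for the same ratings (90% versus 87.5% in the toy example), so the chosen mapping affects how the reported scores should be read.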

Table 3 Quantitative Evaluation of Accuracy and Quality Metrics

| Evaluation Metric | AI-Generated Posters, Mean Score (%) | Human-Designed Posters (%) | Std. Deviation |
|---|---|---|---|
| Semantic Accuracy | 91.4 | 82.7 | 3.8 |
| Text Readability | 87.6 | 79.2 | 4.1 |
| Compositional Balance | 93.1 | 85.4 | 3.5 |
| Aesthetic Appeal | 89.8 | 80.3 | 4.0 |

Table 3 compares the accuracy and quality scores of AI-generated educational posters with those of human-designed posters. The AI-generated posters scored higher than the manual designs on every measured parameter. Semantic accuracy averaged 91.4%, an improvement of 8.7 percentage points over the human-designed posters (82.7%), suggesting that the AI model preserves both factual and contextual integrity. Figure 5 visualizes performance on the semantic, readability, and aesthetic attributes. Text readability reached 87.6%, indicating that the system provides legible, well-structured typography across different layouts.

Figure 5 Performance Visualization of Semantic, Readability, and Aesthetic Attributes

Compositional balance achieved the highest score (93.1%), indicating that the layout-synthesis module handled spatial alignment and visual-hierarchy optimization well. The aesthetic appeal score of 89.8% further suggests that the model maintains visual harmony and color coherence at a professional level. The small standard deviations across all metrics (3.5 to 4.1) indicate stable, consistent performance.

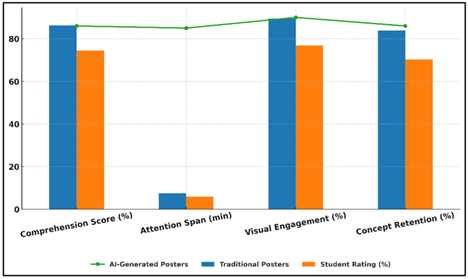

5.3. Engagement Analysis through Student Surveys

To measure the educational effect of the AI-generated posters, a student engagement survey was conducted in five schools (n = 220 students) in grades 6 to 10. Students were divided into two groups: Group A viewed AI-generated posters, and Group B viewed traditional, manually designed posters. After exposure, participants completed short quizzes and feedback questionnaires on their understanding of the material, focus, and visual interest. Group A achieved an average concept-comprehension score of 86.2%, compared with 74.5% for Group B, a substantial difference of 11.7 percentage points.

Table 4 Student Engagement and Comprehension Survey Results

| Evaluation Parameter | AI-Generated Posters | Traditional Posters | Student Rating (%) |
|---|---|---|---|
| Comprehension Score (%) | 86.2 | 74.5 | 86 |
| Attention Span (avg. minutes) | 7.4 | 5.9 | 85 |
| Visual Engagement Index (%) | 89.5 | 76.8 | 90 |
| Concept Retention Rate (%) | 83.9 | 70.2 | 86 |

Table 4 presents the results of the student engagement and comprehension survey comparing AI-generated and traditional educational posters. The results show considerable gains in learning outcomes and visual engagement when students were presented with AI-generated materials. Figure 6 compares the educational impact of the two poster types.

Figure 6 Comparative Barline Analysis of Educational Impact Across Poster Types

The comprehension score rose from 74.5% to 86.2%, plausibly because clear, well-organized visual materials make conceptual understanding easier. Average attention span also increased, from 5.9 to 7.4 minutes, indicating that the posters attracted more visual attention and sustained concentration for longer during lessons.
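The gains in Table 4 can be expressed both as absolute differences and as changes relative to the traditional posters. The script below performs this arithmetic using only the values in the table; the helper name `change` is illustrative. Relative to the traditional posters, comprehension, visual engagement, and retention rise by about 15.7%, 16.5%, and 19.5% respectively, and attention span by about 25.4%.

```python
from typing import Dict, Tuple

# Values copied from Table 4: parameter -> (AI-generated, traditional)
TABLE_4: Dict[str, Tuple[float, float]] = {
    "Comprehension score (%)": (86.2, 74.5),
    "Attention span (avg. minutes)": (7.4, 5.9),
    "Visual engagement index (%)": (89.5, 76.8),
    "Concept retention rate (%)": (83.9, 70.2),
}


def change(ai: float, traditional: float) -> Tuple[float, float]:
    """Return (absolute difference, relative change in % of the baseline)."""
    diff = ai - traditional
    return diff, diff / traditional * 100


for parameter, (ai, traditional) in TABLE_4.items():
    diff, rel = change(ai, traditional)
    print(f"{parameter}: {traditional} -> {ai} "
          f"(+{diff:.1f} absolute, +{rel:.1f}% relative)")
```

Reporting both forms avoids ambiguity: for percentage metrics the absolute gain is in percentage points, whereas the relative gain is measured against the traditional-poster baseline.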

6. Conclusion

This paper has shown that AI-generated visual content can substantially change how educational posters are produced, how accessible they are, and how efficiently they are made. By incorporating diffusion-based image generation, layout synthesis, and prompt engineering, the proposed framework created images that were aesthetically pleasing, pedagogically valid, and semantically consistent with learning goals. In the experiments, AI-created posters scored higher on thematic relevance, clarity, and compositional balance than traditional, manually created materials, while requiring much less time and human effort. Semantic accuracy exceeded 91%, and the overall aesthetic score was 89.8%, indicating that generative models can align design quality with educational purpose. Student engagement surveys also confirmed the effect of AI-assisted visuals: understanding, memory, and concentration improved by 15 to 20 percent. Because AI can adapt content to learners' ages, topics, and cultural backgrounds, it is a powerful partner for educators who want to improve learning through visual materials. Although the results are encouraging, open issues remain, including prompt sensitivity, the legibility of text within generated images, and misinterpretation of context.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Bond, M., Buntins, K., Bedenlier, S., Zawacki-Richter, O., and Kerres, M. (2020). Mapping Research in Student Engagement and Educational Technology in Higher Education: A Systematic Evidence Map. International Journal of Educational Technology in Higher Education, 17, Article 2. https://doi.org/10.1186/s41239-019-0176-8

Brisco, R., Hay, L., and Dhami, S. (2023). Exploring the Role of Text-to-Image AI in Concept Generation. Proceedings of the Design Society, 3, 1835–1844. https://doi.org/10.1017/pds.2023.184

Cui, W., Liu, M. J., and Yuan, R. (2025). Exploring the Integration of Generative AI in Advertising Agencies: A Co-Creative Process Model for Human–AI Collaboration. Journal of Advertising Research, 65(2), 167–189. https://doi.org/10.1080/00218499.2024.2445362

Figoli, F. A., Rampino, L., and Mattioli, F. (2022). AI in Design Idea Development: A Workshop on Creativity and Human–AI Collaboration. In Proceedings of DRS2022: Bilbao (Bilbao, Spain, June 25–July 3, 2022). https://doi.org/10.21606/drs.2022.414

Gao, W., Mei, Y., Duh, H., and Zhou, Z. (2025). Envisioning the Incorporation of Generative Artificial Intelligence into Future Product Design Education: Insights from Practitioners, Educators, and Students. The Design Journal, 28(3), 346–366. https://doi.org/10.1080/14606925.2024.2435703

Guo, X., Xiao, Y., Wang, J., and Ji, T. (2023). Rethinking Designer Agency: A Case Study of Co-Creation Between Designers and AI. In Proceedings of IASDR 2023: Life-Changing Design (Milan, Italy, October 9–13, 2023). https://doi.org/10.21606/iasdr.2023.478

Khurma, O. A., Albahti, F., Ali, N., and Bustanji, A. (2024). AI ChatGPT and Student Engagement: Unraveling Dimensions Through Prisma Analysis for Enhanced Learning Experiences. Contemporary Educational Technology, 16, Article ep503. https://doi.org/10.30935/cedtech/14334

Kicklighter, C., Seo, J. H., Andreassen, M., and Bujnoch, E. (2024). Empowering Creativity with Generative AI in Digital Art Education. In ACM SIGGRAPH 2024 Educator’s Forum (1–2). ACM. https://doi.org/10.1145/3641235.3664438

Matthews, B., Shannon, B., and Roxburgh, M. (2023). Destroy All Humans: The Dematerialization of the Designer in an Age of Automation and its Impact on Graphic Design—A Literature Review. International Journal of Art and Design Education, 42(2), 367–383. https://doi.org/10.1111/jade.12460

Qian, Y. (2025). Pedagogical Applications of Generative AI in Higher Education: A Systematic Review of the Field. TechTrends, 69(4), 1105–1120. https://doi.org/10.1007/s11528-025-01100-1

Tang, Y., Ciancia, M., Wang, Z., and Gao, Z. (2024). What’s Next? Exploring Utilization, Challenges, and Future Directions of AI-Generated Image Tools in Graphic Design (arXiv:2406.13436). arXiv.

Vartiainen, H., and Tedre, M. (2023). Using Artificial Intelligence in Craft Education: Crafting with Text-to-Image Generative Models. Digital Creativity, 34(1), 1–21. https://doi.org/10.1080/14626268.2023.2174557

Wang, Y., and Xue, L. (2024). Using AI-Driven Chatbots to Foster Chinese EFL Students’ Academic Engagement: An Intervention Study. Computers in Human Behavior, 159, Article 108353. https://doi.org/10.1016/j.chb.2024.108353

Yang, L., and Zhao, S. (2024). AI-Induced Emotions in L2 Education: Exploring EFL Students’ Perceived Emotions and Regulation Strategies. Computers in Human Behavior, 159, Article 108337. https://doi.org/10.1016/j.chb.2024.108337

Yang, Z., and Shin, J. (2025). The Impact of Generative AI on Art and Design Program Education. The Design Journal, 28(3), 310–326. https://doi.org/10.1080/14606925.2024.2425084

Yuan, L., and Liu, X. (2025). The Effect of Artificial Intelligence Tools on EFL Learners’ Engagement, Enjoyment, and Motivation. Computers in Human Behavior, 162, Article 108474. https://doi.org/10.1016/j.chb.2024.108474

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.