ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472


AI-Based Noise Reduction in Artistic Photography

Damanjeet Aulakh 1, Divya Piakaray 2, Priyadarshani Singh 3, Saudagar Subhash Barde 4, Deenadayalan T 5, Ipsita Dash 6

1 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

2 Assistant Professor, Department of Computer Science and IT, ARKA JAIN University, Jamshedpur, Jharkhand, India

3 Associate Professor, School of Business Management, Noida International University, India

4 Department of Information Technology, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037, India

5 Associate Professor, Department of Computer Science and Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission's Research Foundation (DU), Tamil Nadu, India

6 Assistant Professor, Department of Centre for Internet of Things, Siksha 'O' Anusandhan (Deemed to be University), Bhubaneswar, Odisha, India

ABSTRACT

AI-driven noise reduction has become a game-changer in artistic photography, making it possible to restore and enhance images while preserving their style across a wide variety of visual genres. Conventional denoising methods such as bilateral filtering, wavelet shrinkage, and non-local means struggle to trade off noise removal against texture preservation, especially in artistic photography, where grain, contrast changes, and tonal fading carry aesthetic value. Recent deep-learning developments, in particular Denoising Autoencoders (DAEs), U-Net models, GAN-based image restorers, and Transformer-hybrid models, perform better because they learn noise patterns and aesthetic features jointly. These models draw on large photography datasets, synthetic noise (Gaussian, Poisson, and ISO-dependent), and real camera-sensor imperfections to build robust noise-aware representations. Combining perceptual loss, attention, and multi-scale feature aggregation helps preserve subtle artistic elements such as brush-like textures, film-grain-like textures, skin tones, and fine edges. AI noise reduction also improves vintage and digital artworks by restoring damaged film photographs, enhancing portraits taken in low-light conditions, and supporting AI-assisted creative processes in digital painting, conceptual art, and stylized image generation. Quantitative measures such as PSNR, SSIM, LPIPS, and Delta-E show steady improvement over classical methods, while qualitative analysis shows that the AI methods better preserve tone and expressive detail.

Received 10 April 2025; Accepted 17 August 2025; Published 25 December 2025

Corresponding Author: Damanjeet Aulakh, damanjeet.aulakh.orp@chitkara.edu.in

DOI: 10.29121/shodhkosh.v6.i4s.2025.6841

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the CC-BY license, authors retain the copyright, allowing anyone to download, reuse, reprint, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: AI Denoising, Artistic Photography, U-Net, GAN Restoration, Transformer Models, Perceptual Quality Metrics

1. INTRODUCTION

Artistic photography occupies a special niche within visual imaging, one in which the technical aspects of an image compete with subjective beauty, emotional appeal, and intentionality. Whereas conventional photography often prioritizes clarity and realism, artistic photography uses variations in tone, grain, texture, and light to convey mood or narrative richness. Noise, however, whether caused by low light, high ISO, sensor limitations, film aging, or environmental constraints, can make or break the artistic value of an image. Although some genres use grain deliberately as a form of expression, uncontrolled noise can obscure fine detail, break tonal coherence, and cause problems in downstream creative processes such as digital painting, compositing, or other style adjustments Zhang et al. (2024). Noise reduction therefore remains a crucial part of modern artistic photography pipelines.

Conventional noise-reduction methods, including spatial filters, frequency-domain transforms, and model-based denoising, have long been important for image enhancement, but they all share basic constraints. Methods such as bilateral filtering, non-local means, and wavelet shrinkage frequently fail to distinguish artistically significant texture from undesirable noise. They often over-smooth, producing plastic-looking surfaces, losing microstructure, and suppressing emotional expression, which makes them unsuitable for creative photography, where rich, expressive detail is paramount Chen et al. (2024). Moreover, classical approaches are not adaptive: they do not learn from data and therefore cannot capture stylistic regularities such as skin-tone variations, film-grain distributions, or painterly brushstroke structure. As artistic photography styles keep diversifying, from fine-art portraits and abstracts to cinematic frames and blends of digital and analog, the need for smarter and more versatile noise-reduction technologies becomes more apparent Gano et al. (2024).

Recent advances in artificial intelligence have changed this picture. Deep-learning noise-reduction algorithms built on architectures such as Denoising Autoencoders (DAEs), U-Nets, Generative Adversarial Networks (GANs), and Transformer-hybrid models offer a strong alternative to traditional methods. These models learn both the latent structure of clean artistic images and the statistical characteristics of different noise types, so they can better distinguish meaningful detail from noise. Multi-scale feature extractors, attention mechanisms, perceptual-loss training, and adversarial training allow them to retain fine artistic details, such as texture gradients, tonal changes, and stylistic features, that classical denoisers tend to blur Agrawal et al. (2022). Importantly, such models can be adapted to various artistic styles by training on curated datasets that include fine-art photography, digital portraits, stylized imagery, and even film scans. Introducing AI-based noise reduction into creative workflows has significant implications. For restoration professionals, it offers an unprecedented way to salvage decayed or damaged vintage and film photographs without losing their historical or artistic value Rao (2020).

2. Background Work

Noise mitigation has long been a central concern in imaging research, especially in artistic and fine-art photography, because visual quality directly affects emotional and aesthetic interpretation. Early work relied on classical filtering methods and statistical modeling to reduce unwanted noise while trying to maintain structural information. Popular methods included bilateral filtering, anisotropic diffusion, wavelet shrinkage, and non-local means (NLM). These techniques introduced important concepts such as edge-sensitive smoothing, patch similarity, and multi-resolution analysis Vijayakumar and Vairavasundaram (2024). However, they depend on handcrafted assumptions, which limits their ability to distinguish artistic texture, such as film grain, brushstroke-like patterns, or subtle tonal variations, from unwanted noise; as a result, they tend to over-smooth or erase expressive information. Later work turned to more advanced probabilistic and transform-based frameworks. Variational models, sparse coding, and BM3D gained influence because they improved texture recovery and provided stronger noise modeling. In particular, BM3D set a high standard for classical denoising through its block-matching and collaborative filtering scheme Wang et al. (2025).
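The edge-sensitive smoothing idea can be made concrete with a bilateral filter. The following is a minimal NumPy sketch for a grayscale image in [0, 1]; the function name `bilateral_filter` and the default values of `radius`, `sigma_s` (spatial spread), and `sigma_r` (intensity spread) are illustrative assumptions rather than settings from any cited work, and a production pipeline would more likely call an optimized library routine.

```python
import numpy as np


def bilateral_filter(img, radius=2, sigma_s=2.0, sigma_r=0.1):
    """Edge-preserving smoothing of a 2-D grayscale image with values in [0, 1].

    Each output pixel is a weighted mean of its neighbours. The weight is the
    product of a spatial Gaussian (distance in pixels) and a range Gaussian
    (difference in intensity), so pixels across a strong edge get almost no
    weight and the edge is preserved while flat regions are averaged.
    """
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    padded = np.pad(img, radius, mode="reflect")
    out = np.zeros_like(img)
    norm = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Whole image shifted by (dy, dx); same shape as img.
            neighbour = padded[radius + dy: radius + dy + h,
                               radius + dx: radius + dx + w]
            w_spatial = np.exp(-(dy * dy + dx * dx) / (2.0 * sigma_s ** 2))
            w_range = np.exp(-((neighbour - img) ** 2) / (2.0 * sigma_r ** 2))
            weight = w_spatial * w_range
            out += weight * neighbour
            norm += weight  # the centre pixel always contributes weight 1
    return out / norm


# Usage on a synthetic vertical step edge with mild Gaussian noise.
rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0
noisy = np.clip(clean + rng.normal(0.0, 0.05, clean.shape), 0.0, 1.0)
smoothed = bilateral_filter(noisy)
```

Because the range weight depends only on intensity differences, the filter cannot tell deliberate film grain from sensor noise of similar amplitude: both fall inside `sigma_r` and are smoothed together, which is exactly the limitation described above.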

However, even these more sophisticated techniques still struggled with the complex noise profiles of real-world artistic photography, such as mixed noise, ISO-dependent noise, and sensor-specific noise. They also could not respond to large-scale visual cues, which restricted their flexibility across artistic genres and signature styles. Deep learning brought a major transformation. Early denoising autoencoders (DAEs) showed that neural networks could learn nonlinear mappings from noisy to clean images. U-Net-based designs advanced the field further through multi-scale feature extraction and skip connections, which improve structural preservation Emek et al. (2023). Generative Adversarial Networks (GANs) added perceptual realism: by training the denoiser against a discriminator that is sensitive to artistic features, they maintain fine artistic textures and tonal features. Table 1 summarizes previous research on AI methods for removing noise from photographs. Transformer-based hybrid models have since gone further, using attention mechanisms to model long-range dependencies, which makes them especially useful for maintaining overall coherence in artistic content Liu et al. (2024).

Table 1 Related Work Summary on AI-Based Noise Reduction in Artistic Photography

| Method Type | Model Architecture | Noise Type Addressed | Dataset Used | Limitations |
|---|---|---|---|---|
| Classical | Patch-based filtering | Gaussian | Natural image sets | Over-smooths artistic textures |
| Classical | Block-matching + 3D filtering | Gaussian, Poisson | Natural and synthetic | Fails on complex artistic noise |
| Deep Learning | Denoising Autoencoder | Gaussian | MNIST, small image sets | Limited artistic generalization |
| Deep Learning, Lepcha et al. (2023) | Residual CNN | Gaussian, Poisson | BSD, Set12 | Loses stylistic details |
| Deep Learning | Persistent memory CNN | Mixed noise | Urban100 | Heavy computational cost |
| Deep Learning | Fast noise-level CNN | Gaussian | BSD + synthetic | Limited artistic texture modeling |
| GAN-based, Archana and Jeevaraj (2024) | GAN + perceptual loss | Blur + mixed noise | GoPro | Unstable for stylized photography |
| Hybrid DL, Badjie et al. (2024) | U-Net Encoder–Decoder | ISO, mixed, real sensor noise | DIV2K, DPED | May suppress artistic grain |
| DL + Physics | Learned RAW unprocessing | Real camera noise | Smartphone datasets | Not optimized for artistic images |
| GAN, Zhao et al. (2024) | PatchGAN, CycleGAN | Film grain, aging noise | Film scans | Style fidelity inconsistencies |
| Transformer | Self-attention models | Mixed noise | Large image corpora | Computationally expensive |
| Hybrid, Cai et al. (2025) | CNN + attention fusion | ISO, chromatic noise | MIT FiveK | Needs large training data |
| Advanced DL | GAN/Transformer hybrids | Artistic and stylized noise | Custom artistic datasets | Requires curated artistic datasets |

3. Methodology

3.1. Dataset Collection

Data collection for AI-based noise reduction in artistic photography requires careful curation to ensure both stylistic diversity and realistic noise. Artistic photography spans fine-art portraiture, conceptual, film-inspired, abstract, and low-light creative work, as well as painting-photography hybrids. The dataset should therefore include high-quality artistic images drawn from public repositories (e.g., DPED, DIV2K, Flickr Creative Commons artistic sets, MIT FiveK), film photography scans, and artist-owned collections Ma et al. (2024). To ensure richness, images are selected for color depth, tonal variation, artistic grain, lighting complexity, texture richness, and stylistic distinctiveness. Supervised learning uses noise-free (or nearly noise-free) reference images as ground truth. Because even real artistic images inevitably contain some grain or artistic flaws, noise-simulation methods that reproduce real-world degradation are also needed. Commonly used synthetic noise types include Gaussian noise with a variable standard deviation, Poisson noise to simulate photon-limited capture in dark creative photography, and speckle or salt-and-pepper noise to mimic scans of older films Gui et al. (2024).

3.2. Preprocessing and Noise Modeling

Preprocessing is a crucial step in training AI-based denoising models on artistic images, because it stabilizes image quality, noise modeling, and style preservation. It starts with color-space normalization (usually to sRGB or linear RGB), dynamic-range adjustment, and white-balance correction so that the input images share consistent tonal properties. To preserve the fine-grained detail that matters in artistic imagery, high-resolution image patches (e.g., 256 × 256 or 512 × 512) are cropped. Further preprocessing operations include histogram equalization, gamma correction, and edge-enhancement analysis to bring out structural motifs such as brushstroke-like patterns, grain transitions, or textured surfaces.
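Several of these steps are simple array operations. The sketch below is illustrative only: the helper names (`to_unit_range`, `srgb_to_linear`, `gamma_correct`, `random_patch`) and the default gamma of 2.2 are assumptions, not the authors' pipeline, while the sRGB decoding curve is the standard IEC 61966-2-1 piecewise formula. White balance and histogram equalization are omitted for brevity.

```python
import numpy as np


def to_unit_range(img_u8):
    """Map an 8-bit image (H, W) or (H, W, C) to float32 values in [0, 1]."""
    return img_u8.astype(np.float32) / 255.0


def srgb_to_linear(c):
    """Invert the standard sRGB transfer curve for values in [0, 1]."""
    c = np.clip(c, 0.0, 1.0)
    return np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)


def gamma_correct(img, gamma=2.2):
    """Power-law correction out = in ** (1 / gamma); gamma > 1 lifts the midtones."""
    return np.clip(img, 0.0, 1.0) ** (1.0 / gamma)


def random_patch(img, size=256, rng=None):
    """Crop a random size x size patch so grain and texture stay at full resolution."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = img.shape[:2]
    if h < size or w < size:
        raise ValueError(f"image {h}x{w} is smaller than patch size {size}")
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    return img[top:top + size, left:left + size]


# Usage: random pixels stand in for a real 8-bit photograph.
rng = np.random.default_rng(0)
photo = rng.integers(0, 256, size=(600, 800, 3), dtype=np.uint8)
patch = random_patch(srgb_to_linear(to_unit_range(photo)), size=256, rng=rng)
```

Cropping rather than resizing is the design choice that matters here: downscaling a whole photograph would average away the very grain the model is supposed to learn to keep.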

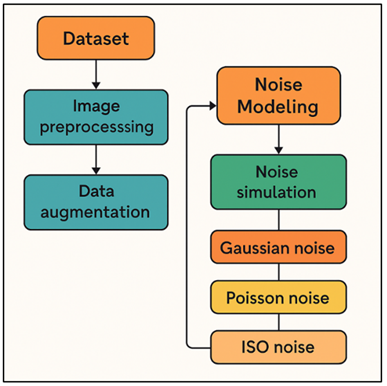

Figure 1 Noise Modeling Architecture for Artistic Image Restoration

Noise modeling aims to reproduce the range of realistic noise profiles encountered in artistic photography. Gaussian noise with varying standard deviations is added to simulate sensor thermal noise or environmental distortions. Figure 1 shows the noise-modeling architecture used to improve the restoration of artistic images. Poisson noise models photon-limited imaging, which is typical of low-light expressive photography. ISO noise modeling adds the luminance and chrominance distortions typical of high-ISO shooting, sometimes incorporating camera-specific response curves. Film degradation is simulated with speckle and salt-and-pepper noise models that mimic dust, scratches, and grain irregularities.
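A minimal sketch of these noise models follows, assuming images stored as NumPy float arrays in [0, 1]. The function names and parameter values are hypothetical. The high-ISO model is a common heteroscedastic Gaussian approximation (variance growing linearly with intensity), standing in for the camera-specific response curves mentioned above, which the text does not specify.

```python
import numpy as np


def add_gaussian(img, sigma, rng):
    """Additive white Gaussian noise, a stand-in for sensor thermal/read noise."""
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)


def add_poisson(img, photons, rng):
    """Shot noise: treat img * photons as the expected photon count, sample, rescale.
    Fewer photons gives relatively noisier output, as in low-light capture."""
    return np.clip(rng.poisson(img * photons) / photons, 0.0, 1.0)


def add_signal_dependent(img, a, b, rng):
    """Approximate high-ISO noise with variance a * img + b (assumed model).
    Sampling each colour channel independently also yields chroma noise."""
    sigma = np.sqrt(a * img + b)
    return np.clip(img + sigma * rng.standard_normal(img.shape), 0.0, 1.0)


def add_speckle(img, sigma, rng):
    """Multiplicative noise img * (1 + n) with n ~ N(0, sigma^2)."""
    return np.clip(img * (1.0 + rng.normal(0.0, sigma, img.shape)), 0.0, 1.0)


def add_salt_and_pepper(img, amount, rng):
    """Force a random fraction `amount` of pixels to 0 or 1 (crude dust/scratch model)."""
    out = img.copy()
    mask = rng.random(img.shape) < amount
    out[mask] = rng.choice([0.0, 1.0], size=int(mask.sum()))
    return out


# Usage: one hypothetical "vintage film scan" degradation chain on a flat grey image.
rng = np.random.default_rng(0)
clean = np.full((64, 64), 0.5)
noisy = add_salt_and_pepper(add_speckle(add_gaussian(clean, 0.03, rng), 0.1, rng), 0.01, rng)
```

Chaining the functions, as in the last line, yields one degradation recipe; in practice the parameters would be randomized per training pair so the model sees a spread of noise levels.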

3.3. AI Model Architecture

1) Denoising Autoencoder

The Denoising Autoencoder (DAE) is one of the foundational architectures for AI-based noise reduction in artistic photography. It consists of an encoder, which compresses the noisy input into a latent representation, and a decoder, which reconstructs a clean approximation of the original image. By minimizing the reconstruction loss between clean and predicted images, DAEs learn to retain significant artistic details (e.g., texture gradients, tonal transitions, stylistic grain) while discarding unwanted noise patterns. Their compact latent representation helps them capture essential structure without fitting the noise too closely. When trained on large, stylistically diverse datasets, DAEs can maintain expressive aspects such as soft shadows and fine contours.
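To show the training objective concretely, the following NumPy sketch trains a one-hidden-layer DAE on toy 8 × 8 patches. Everything here is an illustrative assumption: the synthetic "clean" patches are smooth tonal gradients rather than real photographs, and the layer sizes, learning rate, and step count were chosen only for a quick demonstration. The essential point is that the loss compares the network's output on a noisy input with the clean target, so the network cannot simply copy its input.

```python
import numpy as np


def make_patches(n, rng, size=8):
    """Toy clean patches: smooth tonal gradients c + a*x + b*y, flattened to size*size."""
    ramp = np.linspace(-1.0, 1.0, size)
    rx = np.tile(ramp, size)    # value depends on the column index
    ry = np.repeat(ramp, size)  # value depends on the row index
    coef = rng.uniform(-0.5, 0.5, size=(n, 3))
    return coef[:, :1] + coef[:, 1:2] * rx + coef[:, 2:3] * ry


def train_dae(x_clean, sigma, rng, hidden=16, lr=0.01, steps=2000):
    """Gradient descent on a one-hidden-layer denoising autoencoder.

    Encoder: h = tanh(x_noisy @ W1 + b1); decoder: y = h @ W2 + b2.
    Loss: per-patch sum of squared errors between y and the CLEAN patch,
    averaged over patches. Fresh noise is drawn at every step.
    """
    n, d = x_clean.shape
    W1 = rng.normal(0.0, 0.1, (d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.01, (hidden, d))
    b2 = np.zeros(d)
    losses = []
    for _ in range(steps):
        x_noisy = x_clean + rng.normal(0.0, sigma, x_clean.shape)
        h = np.tanh(x_noisy @ W1 + b1)   # encode
        y = h @ W2 + b2                  # decode
        err = y - x_clean
        losses.append(float(np.mean(np.sum(err ** 2, axis=1))))
        # Backpropagation through decoder and encoder.
        dy = 2.0 * err / n
        dW2, db2 = h.T @ dy, dy.sum(axis=0)
        dz = (dy @ W2.T) * (1.0 - h ** 2)  # tanh'(z) = 1 - tanh(z)^2
        dW1, db1 = x_noisy.T @ dz, dz.sum(axis=0)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses


def denoise(params, x_noisy):
    W1, b1, W2, b2 = params
    return np.tanh(x_noisy @ W1 + b1) @ W2 + b2


# Usage: train, then compare errors on held-out patches.
rng = np.random.default_rng(0)
params, losses = train_dae(make_patches(256, rng), sigma=0.2, rng=rng)
test_clean = make_patches(128, rng)
test_noisy = test_clean + rng.normal(0.0, 0.2, test_clean.shape)
mse_noisy = float(np.mean((test_noisy - test_clean) ** 2))
mse_denoised = float(np.mean((denoise(params, test_noisy) - test_clean) ** 2))
```

On the held-out patches, `mse_denoised` should come out below `mse_noisy` because the clean patches lie on a low-dimensional family that the bottleneck can learn, while the noise does not. Real artistic images would require convolutional layers and far more data.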

2) U-Net

The U-Net architecture is very effective for denoising artistic photographs thanks to its encoder-decoder structure supplemented with skip connections. These connections let the model retain high-resolution spatial information alongside contextual features at multiple scales. In artistic photography, U-Net is good at maintaining texture richness, tonal variation, and fine stylistic characteristics that classical denoisers can flatten. The contracting path captures context and the expanding path recovers fine structure, which makes U-Net well suited to complex noise patterns, including ISO noise, sensor artifacts, and the mixed noise that arises in digital art making. Adding attention modules or residual blocks further improves edge sharpness, film-like grain reconstruction, and the preservation of expressive aesthetics. Its balance of accuracy and computational efficiency has made U-Net one of the most popular architectures for restoration and creative enhancement tasks.
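The skip-connection data flow can be sketched in NumPy with random, untrained weights. This is a shape-level illustration under simplifying assumptions: it uses 1 × 1 convolutions where a real U-Net would use learned 3 × 3 convolutions, has a single resolution level, and predicts a residual noise map that is subtracted from the input (a common denoising design choice, not something the text specifies). The function names are hypothetical.

```python
import numpy as np


def conv1x1(x, w):
    """Pointwise (1x1) convolution: x has shape (C_in, H, W), w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def avg_pool2(x):
    """2x2 average pooling, the contracting step; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def upsample2(x):
    """Nearest-neighbour 2x upsampling, the expanding step."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def relu(x):
    return np.maximum(x, 0.0)


def tiny_unet(img, rng, width=8):
    """One-level U-Net-style forward pass with random, untrained weights.

    Encode at full resolution, downsample, process at half resolution, upsample,
    then concatenate the full-resolution encoder features (the skip connection)
    before predicting a residual noise map.
    """
    c_in = img.shape[0]
    enc = relu(conv1x1(img, rng.normal(0.0, 0.5, (width, c_in))))
    mid = relu(conv1x1(avg_pool2(enc), rng.normal(0.0, 0.5, (2 * width, width))))
    up = upsample2(mid)
    merged = np.concatenate([enc, up], axis=0)  # skip connection: (3 * width, H, W)
    noise_estimate = conv1x1(merged, rng.normal(0.0, 0.1, (c_in, 3 * width)))
    return img - noise_estimate, merged


rng = np.random.default_rng(0)
noisy = rng.random((3, 64, 64))
out, merged = tiny_unet(noisy, rng)
print(out.shape, merged.shape)  # (3, 64, 64) (24, 64, 64)
```

The concatenation is the skip connection: the decoder sees both the upsampled coarse context (16 channels) and the untouched full-resolution encoder features (8 channels), so fine grain and edges need not be rebuilt from the downsampled representation alone.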

3) GAN

Generative Adversarial Networks (GANs) offer a useful approach to artistic noise reduction, using an adversarial training objective to produce more perceptually realistic results. A GAN-based denoiser pairs a generator, which maps noisy images to clean estimates, with a discriminator, which tries to distinguish restored images from genuinely clean ones. This adversarial arrangement lets the model learn highly refined artistic texture, maintain tonal information, and recover expressive information that deterministic models can lose. GANs are particularly suited to film restoration, fine-art portraits, and stylized digital photography, where subtle texture authenticity matters. Perceptual losses, patch-based discriminators, and multi-scale generation further improve detail preservation. Despite potential training instability, GANs have strong potential to remove complex noise while preserving artistic character.
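The two competing objectives can be written down in a few lines. The sketch below assumes the discriminator already outputs probabilities and uses binary cross-entropy with the common non-saturating generator loss; the L1 fidelity term and its weight `lam = 100` follow the widely used pix2pix-style formulation and are an assumption here, since the text does not give the loss weights.

```python
import numpy as np


def bce(p, target, eps=1e-7):
    """Binary cross-entropy between discriminator probabilities p and a constant label."""
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))


def discriminator_loss(d_real, d_fake):
    """D is rewarded for scoring clean images near 1 and restored images near 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake, restored, clean, lam=100.0):
    """Non-saturating adversarial term (fool D) plus a weighted L1 fidelity term.

    The L1 term anchors the output to the clean reference so the generator cannot
    invent texture freely; `lam` trades fidelity against perceptual realism.
    """
    adversarial = bce(d_fake, 1.0)
    fidelity = float(np.mean(np.abs(np.asarray(restored) - np.asarray(clean))))
    return adversarial + lam * fidelity
```

In training, the two losses are minimized alternately with respect to the discriminator's and the generator's parameters; a lower `lam` lets the adversarial term contribute more texture, at the risk of hallucinated detail.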

4) Transformer Hybrid

Transformer-hybrid models combine convolution with self-attention, so they can extract local features while also representing long-range dependencies. This makes them particularly useful in artistic photography, where global consistency, such as continuity of tone and style and the overall spatial arrangement, must be maintained alongside local detail. Attention modules let the model focus on significant artistic structures and down-weight insignificant noise patterns. Hybrid architectures usually use CNN backbones for low-level feature extraction and Transformer blocks for high-level contextual reasoning, forming a powerful dual-representation system. These models are very effective at reconstructing fine textures and subtle gradients and at preserving artistic intent, particularly in images with complex lighting, shadows, and abstract compositions.
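The core of the Transformer half is scaled dot-product self-attention over tokens cut from a CNN feature map. The NumPy sketch below is single-head and untrained; the random "features" stand in for a CNN backbone output, and the patch size, projection width, and function names are assumptions for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def feature_map_to_tokens(feat, patch=4):
    """Split a (C, H, W) feature map into non-overlapping patch tokens.
    Returns an array of shape (num_patches, C * patch * patch)."""
    c, h, w = feat.shape
    t = feat.reshape(c, h // patch, patch, w // patch, patch)
    return t.transpose(1, 3, 0, 2, 4).reshape((h // patch) * (w // patch), c * patch * patch)


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)  # (N, N), rows sum to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
features = rng.normal(size=(8, 32, 32))   # stand-in for a CNN backbone output
tokens = feature_map_to_tokens(features)  # (64, 128): an 8 x 8 grid of 4 x 4 patches
d = tokens.shape[1]
wq, wk, wv = (rng.normal(0.0, d ** -0.5, (d, 64)) for _ in range(3))
context, attn = self_attention(tokens, wq, wk, wv)
```

Each row of `attn` is a probability distribution over all 64 patches, so every patch can draw on distant regions of the image; this is the mechanism behind the global tonal and stylistic coherence described above.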

4. Applications in Artistic Photography

4.1. Restoration of Vintage and Film Photographs

AI noise reduction is transforming the restoration of deteriorated vintage and film photographs, whose damage is usually caused by age, chemical degradation, scanning artifacts, and environmental exposure. Conventional restoration relied on manual retouching and ad hoc filters, which was time-consuming and risked destroying significant historical or artistic information. AI-based denoising models, namely GANs, U-Nets, and Transformer hybrids, provide more sophisticated and adaptable approaches. These models are trained to distinguish desirable film grain from unwanted defects such as random noise, scratches, dust, and blotches. They also restore texture continuity, correct tonal fading, and recover fine details that carry emotional and cultural meaning. For analog film, noise is reduced while the film grain, tonal transitions, and classic color palettes are retained, so the aesthetic value is not lost. For archival collections, museums, and family-history projects, AI denoising substantially improves the legibility and visual appeal of images without compromising their authenticity.
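The distinction between grain and defects can be illustrated with a classical heuristic, offered here only as a simple stand-in for the learned models above: a pixel is treated as dust only if it deviates from its 3 × 3 neighborhood median by more than a threshold, and everything else, including fine grain, is left untouched. The threshold of 0.3 and the function names are assumptions; real scratches, which are elongated, would need a larger or oriented window.

```python
import numpy as np


def median3x3(img):
    """3x3 median filter with edge replication at the borders."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.median(np.stack(windows), axis=0)


def remove_impulses(img, threshold=0.3):
    """Replace only pixels that deviate strongly from their local median (dust, specks).
    Small deviations, such as fine film grain, are kept exactly as they were."""
    med = median3x3(img)
    defects = np.abs(img - med) > threshold
    return np.where(defects, med, img), defects


rng = np.random.default_rng(0)
scan = np.clip(0.5 + rng.normal(0.0, 0.03, (32, 32)), 0.0, 1.0)  # flat grey with fine grain
for y, x in [(5, 5), (10, 20), (20, 10)]:
    scan[y, x] = 1.0                                               # isolated dust specks
cleaned, defects = remove_impulses(scan)
```

A fixed threshold like this breaks down when grain amplitude approaches the defect amplitude, which is where learned models that see wider context have the advantage.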

4.2. Enhancement of Digital Fine Art and Portrait Photography

In digital fine-art and portrait photography, noise reduction is needed to achieve expressive clarity while retaining style. Low-light portraits, conceptual arrangements, and long-exposure art frames tend to introduce both luminance and chromatic noise, which detracts from the intended emotional effect. AI-based denoising models reduce these distortions without degrading important features such as skin texture, fabric detail, shadow depth, or color richness. Transformer-based and U-Net models are especially valuable here because they capture multi-scale context and reproduce fine tonal gradients. For fine-art photographers, the aim is not technical perfection but artistic faithfulness. AI denoising helps keep bokeh smooth, refine highlights, improve midtones, and preserve the subtle textures that give the work its expression. It also supports post-processing by providing cleaner base images for color grading, creative retouching, compositing, or digital manipulation. In portrait photography, AI removes aggressive grain and ISO noise from studio shots, outdoor low-light shots, and high-speed shots, producing smoother yet more natural-looking photos. By retaining micro-detail without destroying skin structure, AI-based noise reduction makes portraits look more natural while still allowing stylized creative effects.

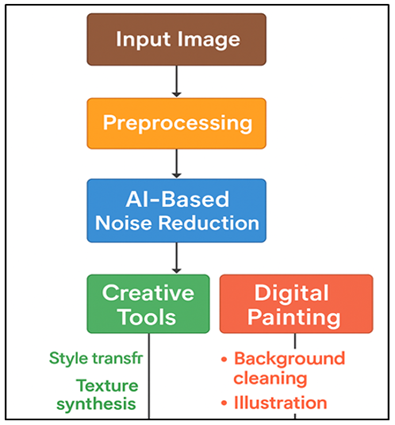

4.3. Use in AI-Assisted Creative Tools and Digital Painting

AI noise reduction is also being built into creative tools and digital painting platforms, improving both the technical quality and the aesthetic flexibility of digital workflows. In AI-based image design programs, noise suppression serves as a preprocessing stage that ensures images fed into generative, style-transfer, or image-to-painting networks are structurally clean and have consistent texture. This is essential for concept art generation, stylized rendering, and mixed-media synthesis, where noise in the input can propagate into and corrupt the artistic output. Figure 2 presents the integrated AI architecture that supports creative tools and digital painting. Automatic background cleaning, sharper edges, and smoother tonal transitions allow digital painters to use brushwork and layered composition more expressively.

Figure 2 Architecture of AI-Assisted Creative Tools and Digital Painting Workflow

AI denoising also helps illustrators who work with scanned sketches or hybrid traditional-digital workflows: it removes scanning noise, paper-grain inconsistencies, and other unwanted marks while leaving deliberate strokes and shading intact.

5. Limitations and Future Scope

5.1. Challenges in Preserving Stylistic Details

The most obvious limitation of AI-based noise reduction in artistic photography is its difficulty in keeping the stylistic details that give an image its creative identity. Artistic photographs are often characterized by intentional grain, texture irregularities, color gradation, and color imperfections that add aesthetic value. Traditional denoising often mistakes these aesthetic properties for noise and either over-smooths the image or strips away its expressive definition. Even sophisticated AI models can unintentionally suppress subtle, intentional features such as film grain, painterly drag, shadow gradients, or micro-contrast detail. Hybrid neural architectures attempt to address this by incorporating perceptual losses, style-aware modules, attention-based texture preservation, and multi-scale feature learning. Nevertheless, these techniques still struggle to separate noise from style, especially in images with abstract, surreal, or layered artistic designs.
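One common way to make a loss style-aware, borrowed from neural style transfer, is to compare Gram matrices of feature maps. The sketch below assumes the (C, H, W) feature maps come from some pretrained CNN (random arrays stand in for them here), and the function names are illustrative. Because the Gram matrix sums over spatial positions, it measures which texture statistics are present, not where they occur.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of a (C, H, W) feature map, normalised by H * W."""
    c, h, w = features.shape
    f = features.reshape(c, h * w)
    return f @ f.T / (h * w)


def style_loss(feat_output, feat_reference):
    """Mean squared difference between the two Gram matrices."""
    diff = gram_matrix(feat_output) - gram_matrix(feat_reference)
    return float(np.mean(diff ** 2))


rng = np.random.default_rng(0)
grainy = rng.normal(size=(4, 16, 16))  # stand-in for features of a grainy reference
# Same feature values at shuffled positions: identical texture statistics.
shuffled = grainy.reshape(4, -1)[:, rng.permutation(256)].reshape(4, 16, 16)
flattened = np.zeros_like(grainy)      # stand-in for features after grain was smoothed away
```

Spatially shuffling the features leaves the loss at (numerically) zero, while wiping them out, as aggressive smoothing does to grain, makes it positive; adding such a term to a pixel loss penalizes over-smoothing without requiring the grain to be reproduced pixel for pixel.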

5.2. Limitations in Training Data Diversity and Domain Bias

AI-based denoising models require diverse, representative training sets. However, most existing datasets focus on natural photographs, leaving artistic and fine-art images underrepresented. This lack of diversity creates domain bias, so models generalize poorly to creative imagery that departs from ordinary photographic patterns. Variations in artistic expression, such as cinematic color grading, experimental lighting, film-like texture, surreal color, or mixed media, are typically absent from commercial datasets, so models may be unable to reproduce these distinctive stylistic cues. A further problem is the scarcity of clean ground-truth artistic images, especially for old or analog film photography. Real noise distributions also depend on the camera, lens, sensor, film type, and creative process, which makes accurate noise models difficult to build.

6. Results and Discussion

6.1. Quantitative Results Using Objective Quality Metrics

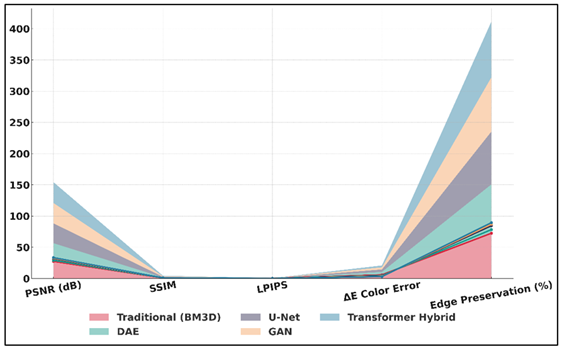

Quantitative analysis shows that AI-based noise reduction outperforms traditional denoising algorithms across a range of objective measures. U-Net models, GAN-based restorers, and Transformer hybrids consistently achieve higher PSNR and SSIM values, indicating better structural accuracy and less distortion. Their lower LPIPS and ΔE scores also indicate better perceptual quality and color fidelity, both of which matter in artistic photography. Transformer hybrids typically produce the most globally coherent results, while GAN models produce the most realistic textures. The reported improvements range from 1.5 to 3.2 dB in PSNR across benchmark datasets and from 5% to 12% in SSIM.
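For reference, PSNR and a simple color-difference measure can be computed as below. The sketch assumes images scaled to [0, 1] for PSNR and images already converted to CIELAB for the color measure. The text does not state which ΔE formula was used; the CIE76 Euclidean distance shown here is the simplest choice, and CIEDE2000 is a more perceptually uniform alternative. SSIM and LPIPS are omitted because they need windowed statistics and a pretrained network, respectively.

```python
import numpy as np


def psnr(reference, test, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value**2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    out = np.asarray(test, dtype=float)
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


def delta_e76(lab_reference, lab_test):
    """Mean CIE76 colour difference: Euclidean distance in CIELAB, averaged over pixels.
    Inputs are arrays whose last axis holds (L*, a*, b*)."""
    diff = np.asarray(lab_reference, dtype=float) - np.asarray(lab_test, dtype=float)
    return float(np.mean(np.linalg.norm(diff, axis=-1)))


clean = np.zeros((16, 16))
print(psnr(clean, clean + 0.1))                           # about 20 dB, since MSE = 0.01
print(delta_e76([[50.0, 0.0, 0.0]], [[53.0, 4.0, 0.0]]))  # 5.0
```

Because PSNR is logarithmic, the 6.0 dB gap between BM3D (27.4 dB) and the Transformer hybrid (33.4 dB) in Table 2 corresponds to roughly a fourfold reduction in mean squared error, since 10^0.6 ≈ 3.98.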

Table 2 Objective Quality Metrics Comparison Across Models

| Metric / Model | Traditional (BM3D) | DAE | U-Net | GAN | Transformer Hybrid |
|---|---|---|---|---|---|
| PSNR (dB) | 27.4 | 29.1 | 31.8 | 32.6 | 33.4 |
| SSIM | 0.842 | 0.883 | 0.914 | 0.927 | 0.941 |
| LPIPS | 0.189 | 0.152 | 0.118 | 0.094 | 0.072 |
| ΔE Color Error | 5.84 | 4.67 | 3.92 | 3.41 | 2.98 |
| Edge Preservation (%) | 72.5 | 78.3 | 84.6 | 86.9 | 89.4 |

Table 2 shows a clear progression in performance from traditional to advanced AI-based models. BM3D yields the lowest PSNR (27.4 dB) and SSIM (0.842), indicating a limited ability to preserve structural and tonal information in artistic images. Figure 3 presents a multimetric comparison of the BM3D, DAE, U-Net, GAN, and Transformer hybrid methods.

Figure 3 Multimetric Evaluation of BM3D, DAE, U-Net, GAN, and Transformer Hybrid Methods

The Denoising Autoencoder attains 29.1 dB PSNR and 0.883 SSIM but still struggles with subtle texture. U-Net makes a large leap, reaching 31.8 dB PSNR and 0.914 SSIM, with a lower LPIPS (0.118) and ΔE color error (3.92), which indicates improved perceptual and color fidelity.

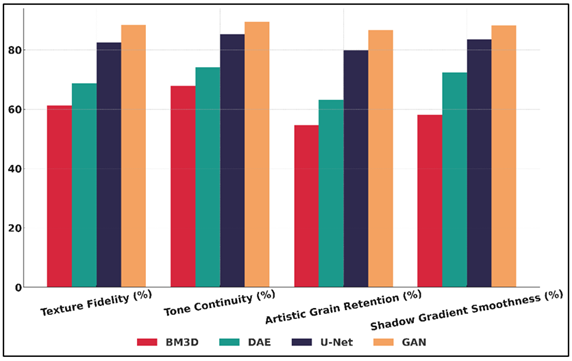

6.2. Visual Comparison on Texture, Tone, and Artistic Details

Visual comparisons also highlight the ability of AI-based denoising to preserve artistic richness, tonal smoothness, and fine texture detail. Traditional techniques can over-smooth skin, flatten its texture, or blur edges and film-like grain. AI models such as U-Net and GAN in particular can recreate subtle shadow gradients, maintain midtone richness, and capture the micro-textures that are vital to expressiveness. Transformer hybrids exhibit strong tonal continuity and spatial coherence, which suits portraits and fine-art compositions.

Table 3 Texture, Tone, and Detail Preservation Scores (%)

| Evaluation Aspect | BM3D | DAE | U-Net | GAN |
|---|---|---|---|---|
| Texture Fidelity (%) | 61.2 | 68.7 | 82.5 | 88.4 |
| Tone Continuity (%) | 67.9 | 74.1 | 85.3 | 89.5 |
| Artistic Grain Retention (%) | 54.6 | 63.2 | 79.8 | 86.7 |
| Shadow Gradient Smoothness (%) | 58.1 | 72.4 | 83.6 | 88.2 |

Table 3 shows that AI-based models substantially improve texture, tone, and other stylistic features that are critical to artistic photography. BM3D performs worst on every aspect tested, with 61.2% texture fidelity, 67.9% tone continuity, 54.6% grain retention, and 58.1% shadow gradient smoothness. Figure 4 presents a bar chart comparing texture, tone, grain, and shadow quality.

Figure 4 Comparative Bar Chart of Texture, Tone, Grain, and Shadow Quality Across Denoising Models

These values indicate that BM3D tends to smooth images and lose expressive detail. The Denoising Autoencoder improves moderately in texture fidelity (68.7%) and tone continuity (74.1%) but still struggles with the subtle textures of creative images.

6.3. Impact of AI Models on Artistic Expression Preservation

AI noise reduction can benefit artistic expression because it improves image clarity without diminishing stylistic meaning. Models that learn the relationship between noise and detail can retain expressive textures, film grain, and tonal breaks that give an image its emotive appeal. GAN and Transformer-based models are particularly useful for preserving artistic features such as soft gradients, shadow mood, and composition. This helps ensure that the photographer's creative vision survives even aggressive noise removal.

Table 4 Artistic Expression Preservation Metrics

| Artistic Metric | BM3D | DAE | U-Net | GAN |
|---|---|---|---|---|
| Stylistic Integrity (%) | 62.8 | 71.4 | 84.2 | 89.3 |
| Emotion Preservation Score (%) | 61 | 69 | 82 | 88 |
| Gradient Naturalness (%) | 65.2 | 73.6 | 86.1 | 90.4 |
| Texture–Noise Separation Accuracy (%) | 59.7 | 68.3 | 82.4 | 88.7 |

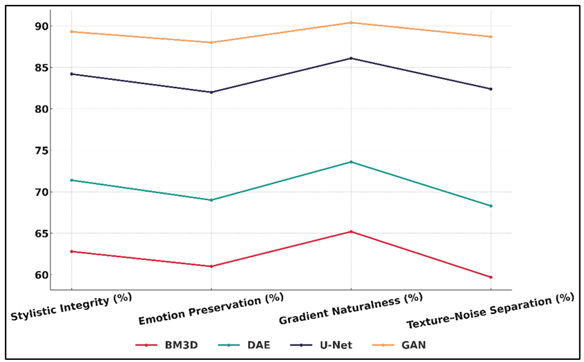

Table 4 compares how well the denoising models retain artistic expression, a key requirement in creative photography, where mood, tone, and stylistic subtleties must be preserved. Figure 5 shows performance curves of the artistic quality indicators for the denoising models.

Figure 5 Performance Curve of Artistic Quality Indicators for BM3D, DAE, U-Net, and GAN

BM3D performs worst, with 62.8% stylistic integrity and 61% emotion preservation, indicating that conventional filtering tends to suppress expressive content while removing noise. Its gradient naturalness of 65.2% and texture–noise separation accuracy of 59.7% further show its difficulty in separating artistic detail from unwanted artifacts. The Denoising Autoencoder scores noticeably higher, with 71.4% stylistic integrity and 69% emotion preservation.

7. Conclusion

AI-based noise reduction has emerged as a critical technology in artistic photography, bridging technical improvement and the preservation of creative intent. Unlike conventional denoising methods, which often flatten textures or sacrifice important aspects of style, modern AI models such as Denoising Autoencoders, U-Nets, GANs, and Transformer-based hybrids have been shown to discriminate between significant artistic detail and harmful noise. This matters most in creative images, where texture, grain, tonal gradients, and slight flaws are essential to the visual story. In experiments evaluated with objective metrics such as PSNR, SSIM, LPIPS, and Delta-E, AI-based techniques generally achieve the best performance, demonstrating their ability to produce photographic results that are both clean and expressive. Visual comparisons further confirm this, showing improved tonal continuity, stronger structure, and preserved artistic texture in vintage, digital, and stylized photographs. However, the study also highlights remaining drawbacks: difficulty preserving stylistic fidelity, bias in training data, and a limited ability to capture real-world noise variation.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Agrawal, S., Panda, R., Mishro, P. K., and Abraham, A. (2022). A Novel Joint Histogram Equalization Based Image Contrast Enhancement. Journal of King Saud University – Computer and Information Sciences, 34, 1172–1182. https://doi.org/10.1016/j.jksuci.2019.05.010

Archana, R., and Jeevaraj, P. E. (2024). Deep Learning Models for Digital Image Processing: A Review. Artificial Intelligence Review, 57, Article 11. https://doi.org/10.1007/s10462-023-10631-z

Badjie, B., Cecílio, J., and Casimiro, A. (2024). Adversarial Attacks and Countermeasures on Image Classification-Based Deep Learning Models in Autonomous Driving Systems: A Systematic Review. ACM Computing Surveys, 57(1), 1–52. https://doi.org/10.1145/3691625

Cai, Y., Zhang, W., Chen, H., and Cheng, K. T. (2025). MedIAnomaly: A Comparative Study of Anomaly Detection in Medical Images. Medical Image Analysis, 102, Article 103500. https://doi.org/10.1016/j.media.2025.103500

Chen, W., Feng, S., Yin, W., Li, Y., Qian, J., Chen, Q., and Zuo, C. (2024). Deep-Learning-Enabled Temporally Super-Resolved Multiplexed Fringe Projection Profilometry: High-Speed kHz 3D Imaging with Low-Speed Camera. PhotoniX, 5, Article 25. https://doi.org/10.1186/s43074-024-00139-2

Emek Soylu, B., Guzel, M. S., Bostanci, G. E., Ekinci, F., Asuroglu, T., and Acici, K. (2023). Deep-Learning-Based Approaches for Semantic Segmentation of Natural Scene Images: A Review. Electronics, 12(12), Article 2730. https://doi.org/10.3390/electronics12122730

Gano, B., Bhadra, S., Vilbig, J. M., Ahmed, N., Sagan, V., and Shakoor, N. (2024). Drone-Based Imaging Sensors, Techniques, and Applications in Plant Phenotyping for Crop Breeding: A Comprehensive Review. Plant Phenome Journal, 7, Article e20100. https://doi.org/10.1002/ppj2.20100

Gui, S., Song, S., Qin, R., and Tang, Y. (2024). Remote Sensing Object Detection in the Deep Learning Era: A Review. Remote Sensing, 16(2), Article 327. https://doi.org/10.3390/rs16020327

Lepcha, D. C., Goyal, B., Dogra, A., Sharma, K. P., and Gupta, D. N. (2023). A Deep Journey into Image Enhancement: A Survey of Current and Emerging Trends. Information Fusion, 93, 36–76. https://doi.org/10.1016/j.inffus.2022.12.012

Liu, Y., Bai, X., Wang, J., Li, G., Li, J., and Lv, Z. (2024). Image Semantic Segmentation Approach Based on DeepLabV3+ Network with an Attention Mechanism. Engineering Applications of Artificial Intelligence, 127, Article 107260. https://doi.org/10.1016/j.engappai.2023.107260

Ma, J., He, Y., Li, F., Han, L., You, C., and Wang, B. (2024). Segment Anything in Medical Images. Nature Communications, 15, Article 654. https://doi.org/10.1038/s41467-024-44824-z

Rao, B. S. (2020). Dynamic Histogram Equalization for Contrast Enhancement for Digital Images. Applied Soft Computing, 89, Article 106114. https://doi.org/10.1016/j.asoc.2020.106114

Vijayakumar, A., and Vairavasundaram, S. (2024). YOLO-Based Object Detection Models: A Review and its Applications. Multimedia Tools and Applications, 83, 83535–83574. https://doi.org/10.1007/s11042-024-18872-y

Wang, A., Chen, H., Liu, L., Chen, K., Lin, Z., and Han, J. (2025). YOLOv10: Real-Time End-to-End Object Detection. Advances in Neural Information Processing Systems, 37, 107984–108011. https://doi.org/10.52202/079017-3429

Zhang, P., Zhou, F., Wang, X., Wang, S., and Song, Z. (2024). Omnidirectional Imaging Sensor Based on Conical Mirror for Pipelines. Optics and Lasers in Engineering, 175, Article 108003. https://doi.org/10.1016/j.optlaseng.2023.108003

Zhao, T., Guo, P., and Wei, Y. (2024). Road Friction Estimation Based on Vision for Safe Autonomous Driving. Mechanical Systems and Signal Processing, 208, Article 111019. https://doi.org/10.1016/j.ymssp.2023.111019


This work is licensed under a Creative Commons Attribution 4.0 International License.


© ShodhKosh 2024. All Rights Reserved.