ShodhKosh: Journal of Visual and Performing Arts

ISSN (Online): 2582-7472


AI and Digital Painting: Reimagining Human–Machine Collaboration

P. Thara 1, Dr. Roopa Traisa 2, Swati Chaudhary 3, Priya Modi 4, Vivek Saraswat 5, Hitesh Kalra 6, Chandrashekhar Ramesh Ramtirthkar 7

1 Department of Computer Science and Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission’s Research Foundation (DU), Tamil Nadu, India

2 Associate Professor, Department of Management Studies, JAIN (Deemed-to-be University), Bengaluru, Karnataka, India

3 Assistant Professor, School of Business Management, Noida International University 203201, Greater Noida, Uttar Pradesh, India

4 Assistant Professor, Department of Development Studies, Vivekananda Global University, Jaipur, India

5 Centre of Research Impact and Outcome, Chitkara University, Rajpura- 140417, Punjab, India

6 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

7 Department of Mechanical Engineering, Vishwakarma Institute of Technology, Pune, Maharashtra, 411037 India

ABSTRACT

This paper examines how artificial intelligence (AI) is transforming digital painting, focusing on how the changing relationship between humans and machines is producing a new paradigm of artistic creation. It traces the historical heritage of machine-assisted art, studies the technological foundations of GANs, diffusion models, and reinforcement learning, and evaluates how these systems engage with artists through real-time feedback and adaptive learning. Case studies of major platforms (DALL·E 3, Midjourney, Runway ML, and Adobe Firefly) show that AI acts not as an independent agent but as a cognitive partner that expands human imagination and redefines authorship and aesthetic agency. Philosophical and ethical analyses reveal a shift in aesthetics toward posthuman, collective authorship and a need for transparency in the use and attribution of data. The paper concludes with a forward-looking projection of a hybrid creative future in which human emotion and artificial cognition come together to create a generative, ethical, and interactive art ecosystem.

Received 15 February 2025

Accepted 16 June 2025

Published 20 December 2025

Corresponding Author: P. Thara, thara.cse@avit.ac.in

DOI: 10.29121/shodhkosh.v6.i3s.2025.6753

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Digital Painting, Human–Machine Collaboration, Generative Models, Diffusion Models, Algorithmic Creativity, Hybrid Intelligence, Posthuman Aesthetics, Ethical Authorship

1. INTRODUCTION

The meeting point of artificial intelligence and digital painting marks a radical change in how artistic creation is conceived, produced, and experienced. Once regarded as a field of creative endeavor governed by human intuition and emotion, painting has entered a world in which computational intelligence plays a leading role in the creative process. The adoption of deep learning architectures, especially Generative Adversarial Networks (GANs) and diffusion models, has enabled algorithms to learn from large corpora of visual data and to generate novel works that resemble human artistic expression while pushing beyond its traditional boundaries. These systems do not replace the artist; instead, they expand creative agency by producing unexpected shapes, textures, and color combinations that in turn provoke the human imagination Goodfellow et al. (2025). This new form of dialogue between artist and algorithm reshapes the concepts of authorship, collaboration, and aesthetic intent in the digital age.

Art and technology have always developed together. From the invention of perspective in the Renaissance to the Impressionists' use of photographic references, artists have continually adapted to new technology. Today, AI systems act as dynamic partners that can learn an artist's style, predict brushstroke patterns, and even suggest new compositions Gatys et al. (2015). Examples of this trend include DALL·E 3, Midjourney, and Runway ML, in which neural networks translate textual prompts into highly detailed, lifelike images that rival other forms of digital painting. Artists refine machine-generated results through feedback mechanisms, combining human judgment with algorithmic spontaneity Elgammal et al. (2017). The result of this collaboration is a new aesthetic of co-creation, characterized not by domination but by compromise between intuition and computation.

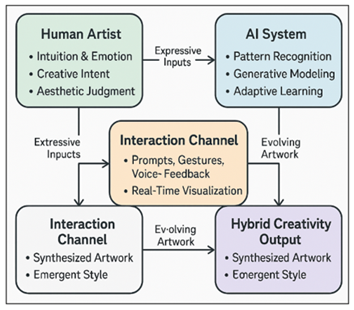

Figure 1 AI and Digital Painting: Human–Machine Co-Creation Flow

Human–machine cooperation in digital painting challenges existing ideas of creativity at the cognitive level. Artistic decision-making, which used to rely on unconscious feeling and aesthetic judgment, is now mediated by data-driven reasoning and probabilistic imagination. By studying millions of existing works of art, AI models learn structural balance, color contrast, and compositional rhythm. But the spark of creation, which the art philosopher R. G. Collingwood called the articulation of emotion, still originates in the human artist McCormack et al. (2019). AI is not phenomenologically engaged: it has no sense of beauty or purpose of its own. Rather, it is a cognitive magnifier that uncovers the hidden potential in data, as shown in Figure 1. The artist, as curator and interpreter, then shapes these possibilities into meaningful visual narratives.

The emergence of AI-based digital painting also has socio-ethical implications. Questions of authorship, originality, and authenticity unsettle established artistic models. Is an AI-generated painting authored by the person who trained the model, by the artists whose work informed it, or by the algorithm? These questions make clear why it is time to build algorithmic literacy among creative communities and to recognize that creativity in the AI era is distributed and participatory McCormack et al. (2019). As human imagination mixes with the logic of computers, digital painting becomes a reflection of hybrid intelligence, showing not only what machines can learn from humans but also what humans can learn from machines. The rest of this paper discusses this changing relationship and suggests that the future of art will be based on the co-evolution of human perception and artificial thinking Utz and DiPaola (2020).

2. Technological Frameworks in AI Digital Painting

Artificial intelligence has turned digital painting into a multi-layered technological ecosystem that blends data-informed modeling, visual cognition, and interactive feedback loops. Generative Adversarial Networks (GANs), diffusion models, and Reinforcement Learning (RL)-based adaptive systems are the foundations of this ecosystem, and each makes a distinct contribution to creative intelligence. These frameworks do more than automate image synthesis: they allow co-evolution, with the intuition of the human artist and the generative power of the algorithm entering a symbiotic relationship Déguernel and Sturm (2024).

GANs are one of the foundations of AI-based visual art. Introduced by Ian Goodfellow and colleagues in 2014, a GAN consists of two neural networks, a generator and a discriminator, engaged in a dynamic adversarial game. The generator tries to produce images whose distribution matches that of a training set, whereas the discriminator tries to judge whether each image is authentic Guo et al. (2022), Li et al. (2024). Through repeated cycles, the generator learns to produce increasingly realistic images. Applied to digital painting, GANs help produce original visual forms by training on intricate stylistic patterns such as brushwork density, color palette balance, and compositional rhythm. Models such as ArtGAN, StyleGAN, and CycleGAN have played a significant role in transferring artistic styles, replicating hand-painted texture, and creating synthetic artworks that are difficult to distinguish from human-created compositions.
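The adversarial game between generator and discriminator can be made concrete through the two loss terms the networks minimize. The sketch below is a minimal illustration in plain Python, not the training code of any model named in this paper. It assumes the standard binary cross-entropy formulation with the commonly used non-saturating generator loss; the function names and sample probabilities are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: probabilities D(x) assigned to real images (should approach 1)
    d_fake: probabilities D(G(z)) assigned to generated images (should approach 0)
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Early in training (assumed values): the discriminator spots fakes easily.
early = (discriminator_loss([0.9, 0.8], [0.1, 0.2]), generator_loss([0.1, 0.2]))

# At the theoretical equilibrium the discriminator outputs 0.5 everywhere.
balanced = (discriminator_loss([0.5, 0.5], [0.5, 0.5]), generator_loss([0.5, 0.5]))

print("early    (D loss, G loss):", early)
print("balanced (D loss, G loss):", balanced)
```

As the generator improves, its loss falls while the discriminator's loss rises toward the value it takes when it can no longer tell real from generated images.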

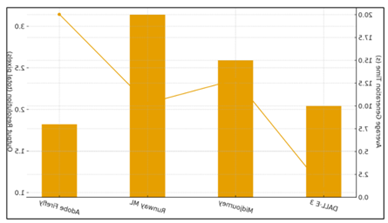

Figure 2 Framework of AI-Based Digital Painting

Diffusion models, a more recent and transformative technology, generate images through noise modeling and step-by-step refinement. During training, these models (including Stable Diffusion and DALL·E 3) corrupt images with random noise and learn to reverse the corruption, so that at generation time they can recover coherent visual representations from what appears to be pure noise. The outcome is an emergent ability to imagine forms from textual descriptions, transforming natural language into multifaceted pictorial output Tamm et al. (2022). Diffusion models tend to offer greater diversity and coherence than GANs and give artists more controls, including guidance scale, prompt weighting, and aesthetic conditioning, as shown in Figure 2. Because visual semantics can be manipulated so easily, diffusion models democratize the creative process: ideas expressed in text can be turned into visual art without artistic training, while professional artists gain new means of expression.

Reinforcement learning (RL) Wammes et al. (2018) and Human-in-the-Loop (HITL) Xie and Zhou (2024) systems deepen AI collaboration with artists by providing continuous feedback. In RL-based models, user interactions such as brush preference, color adjustment, and composition approval provide reward signals to the AI, and the results are optimized over repeated iterations. By learning the style of the individual artist, this dynamic model can develop into a personalized co-creator. Combined with diffusion or GAN models, RL improves creative autonomy and flexibility, yielding systems that develop alongside the aesthetic development of a human artist. Researchers term the result of such synergy Augmented Creativity Systems (ACS): hybrid environments in which cognition, computation, and emotion converge.
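The noising step and the guidance-scale control mentioned for diffusion models can be sketched in a few lines. This is a toy illustration under stated assumptions, not the implementation of Stable Diffusion or DALL·E 3: it assumes a linear noise schedule of the kind used in early denoising diffusion work, and it assumes that "guidance scale" refers to classifier-free guidance. All function names and the three-pixel "image" are hypothetical.

```python
import math
import random

def linear_alpha_bar(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors alpha_bar_t for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar_t, rng):
    """Forward process: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps, with eps ~ N(0, 1)."""
    signal = math.sqrt(alpha_bar_t)
    noise = math.sqrt(1.0 - alpha_bar_t)
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]

def guided_noise(eps_uncond, eps_cond, guidance_scale):
    """Classifier-free guidance: move the prediction toward the prompt-conditioned one."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

rng = random.Random(0)
alpha_bars = linear_alpha_bar(1000)
pixels = [0.2, 0.5, 0.9]  # toy "image" of three pixel intensities

slightly_noisy = add_noise(pixels, alpha_bars[10], rng)     # mostly signal
almost_pure_noise = add_noise(pixels, alpha_bars[-1], rng)  # mostly noise

print("signal kept after 11 steps:", round(alpha_bars[10], 4))
print("signal kept after 1000 steps:", alpha_bars[-1])
```

A guidance scale of 0 ignores the prompt, 1 uses the conditioned prediction as-is, and larger values push the result further toward the prompt at the cost of diversity.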

3. Cognitive Synergy: Human–AI Interaction Models

Artistic collaboration between the human mind and artificial intelligence turns digital painting into an interactive dialogue of perception, intention, and adaptation. Technology offers computational precision and predictability, while the artist supplies emotional appeal, interpretive understanding, and taste Cheng (2022). This dynamic relationship can be pictured as a cognitive synergy in which human and machine learn from each other and co-evolve.

Figure 3 Cognitive Synergy Model in Human–AI Digital Painting

Mutual adaptation is at the center of cognitive synergy. The artist perceives and edits the machine-generated outputs, and the AI model learns from the user's feedback to improve its creative parameters. In contrast to conventional design software, which only executes commands, AI-based painting software takes part in an iterative learning process. Systems trained with reinforcement learning or human-in-the-loop (HITL) methods treat user corrections as rewards, which lets them pick up nuances of style that match the user's artistic intent Oksanen (2023). In this way, the AI becomes a kind of creative apprentice that is taught the preferences, rhythm, and color sense of its human partner.

Cognitively, this partnership combines divergent and convergent thinking, as represented in Figure 3. The human artist contributes divergent cognition (intuition and experimentation), whereas the machine contributes convergent logic (pattern recognition, probabilistic reasoning, and data optimization). Together they constitute an emergent hybrid cognition that neither can attain individually. For example, a diffusion model can suggest abstract variations of a visual concept, and the artist can pick out the configurations that carry evocative narrative or symbolism, steering the AI toward emotionally coherent results Guo et al. (2023). Repeated over several iterations, this feedback can be described as a transformation of randomness into design.

The synergy also depends on multi-modal communication. Text prompts, gesture control, voice directions, and direct brush input are all expressive means by which the artist conveys intent. The AI interprets these cues with the help of attention mechanisms and semantic embeddings and converts them into changes in composition, lighting, and color grading. The outcome is an embodied dialogue, a dance between intuition and computation. Modern systems such as Adobe Firefly and Runway ML demonstrate how this integration can be achieved, since they let users refine the outputs of generative engines and watch the artwork change on screen in real time.
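The idea of treating user corrections as rewards can be illustrated with a very small learning loop. The sketch below is an assumption-laden toy, not the mechanism of any platform discussed here: it uses an epsilon-greedy bandit as a stand-in for reinforcement learning, and the style presets, acceptance rates, and simulated artist are all hypothetical.

```python
import random

def run_feedback_loop(styles, approve, rounds=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit that learns which style preset a user approves most.

    approve(style, rng) returns 1 if the (simulated) artist accepts the
    suggestion and 0 otherwise. Returns the running mean reward per style.
    """
    rng = random.Random(seed)
    counts = {s: 0 for s in styles}
    values = {s: 0.0 for s in styles}
    for _ in range(rounds):
        if rng.random() < epsilon:
            style = rng.choice(styles)                    # explore a random preset
        else:
            style = max(styles, key=lambda s: values[s])  # exploit the best so far
        reward = approve(style, rng)
        counts[style] += 1
        # Incremental update of the mean reward observed for this style.
        values[style] += (reward - values[style]) / counts[style]
    return values

# Hypothetical artist who accepts warm-palette suggestions far more often.
ACCEPT_RATE = {"warm palette": 0.9, "cool palette": 0.2, "monochrome": 0.1}

def simulated_artist(style, rng):
    return 1 if rng.random() < ACCEPT_RATE[style] else 0

learned = run_feedback_loop(list(ACCEPT_RATE), simulated_artist)
preferred = max(learned, key=learned.get)
print("learned approval estimates:", learned)
print("preset the system now favors:", preferred)
```

The occasional exploration step is a design choice: it keeps the system proposing alternatives, so a change in the artist's taste can still be detected.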

4. Case Studies in AI-Assisted Painting Tools

Real-world systems illustrate most clearly how AI has developed in practice as a tool for digital painting and as an example of collaborative creativity. Contemporary AI art engines, including DALL·E 3, Midjourney, Runway ML, and Adobe Firefly, embody distinct philosophies of human–machine co-creation Lou (2023). Each system strikes a different balance between generative autonomy and user control, creating varied ecosystems of interaction that together transform artistic production.

4.1. Case Study: DALL·E 3

DALL·E 3, developed by OpenAI, combines linguistic intelligence with visual imagination. By transforming textual prompts into coherent visual compositions, it lets artists explore conceptual abstraction and narrative composition. The model's main advantage is its semantic accuracy: it converts descriptive sentences into painterly images with exceptional fluency. Midjourney, by contrast, emphasizes aesthetic diversity and community creativity, combining community feedback with algorithmic development Tang et al. (2020). Its diffusion base produces highly stylized, film-like pictures with rich lighting and texture. Runway ML extends these capabilities to video and mixed-media art, using GANs and transformers to create moving artworks that combine painting and animation. Lastly, Adobe Firefly is the most professionally integrated AI painting environment, built around licensed data, ethical transparency, and seamless integration into design software. The technological foundations of these systems are outlined in Table 1, which highlights their architectural models, data sources, and output characteristics.

Table 1 Technical Comparison of AI Painting Tools

| Platform / Tool | Core AI Architecture | Training Dataset Source | Resolution Output (px) | Generation Speed (avg) | Customization Options |
|---|---|---|---|---|---|
| DALL·E 3 Oksanen (2023) | Diffusion model (Transformer backbone) | Licensed + public domain images | 1024 × 1024 | ~10 s / image | Text prompts, style tuning |
| Midjourney Guo et al. (2023) | Diffusion + proprietary style encoder | Curated community data | 1536 × 1536 | ~15 s / image | Prompt weighting, aspect ratio |
| Runway ML Lou (2023) | Hybrid GAN + Transformer + Autoencoder | Mixed open datasets | 1920 × 1080 (video) | ~20 s / frame | Visual GUI, style transfer layers |
| Adobe Firefly Tang et al. (2020) | Diffusion + RLHF | Adobe Stock (licensed) | Variable (print-ready) | ~8 s / image | Brush integration, palette control |

Although these platforms differ technologically, they also reflect different aesthetic inclinations and artistic outcomes. Their outputs range from photorealism to painterly abstraction and vary in stylistic control, unpredictability, and narrative expression. Table 2 summarizes these aesthetic and creative differences. Midjourney, for its part, is a community-oriented platform that focuses on aesthetic exploration and stylistic variety. By generating images from prompts almost instantly and gathering collective feedback from artists, Midjourney has developed an online atelier in which generative models are continually improved through interaction with users. The platform's diffusion architecture generates surreal, painterly results with a characteristic artistic signature that tends to emphasize atmosphere, lighting, and color balance. Here creativity goes beyond the individual and becomes a social intelligence network in which shared experimentation drives algorithmic growth.

4.2. Case Study: Runway ML

Runway ML brings the collaborative paradigm to video and mixed-media art. It combines several deep-learning models (GANs, autoencoders, and transformers) in a simple interface that allows users to combine image synthesis, motion tracking, and style transfer.

Table 2 Artistic Style and Output Characteristics

| Platform | Dominant Aesthetic Traits | Strengths in Artistic Output | Common Limitations |
|---|---|---|---|
| DALL·E 3 [18] | Realistic, concept-driven compositions | Precise semantic alignment between text and image | Occasionally rigid composition symmetry |
| Midjourney [19] | Painterly, cinematic, atmospheric | Rich color harmony and lighting depth | Style saturation; limited semantic control |
| Runway ML [20] | Experimental, motion-based, hybrid | Enables animated paintings and mixed media | High computational demand |
| Adobe Firefly | Professional, polished, editorial | Seamless integration with creative suites | Limited abstraction and unpredictability |

Runway ML exemplifies how AI can turn visual art into an interdisciplinary form of storytelling by combining static digital painting with the movement of a visual narrative. Artists use it to animate painted sequences, simulate the motion of a brushstroke, or mix live-action footage with generated imagery.

4.3. Case Study: Adobe Firefly

As AI-assisted painting expands, ethical design and dataset transparency emerge as important aspects of trust and sustainability. The legitimacy of AI-generated works depends on the governance of artistic data, in particular the provenance and licensing of training materials.

Table 3 User Experience and Collaboration Index (In %)

| Criterion | DALL·E 3 | Midjourney | Runway ML | Adobe Firefly |
|---|---|---|---|---|
| Ease of Use | 85% | 70% | 80% | 95% |
| Interactivity / Feedback Loop | 88% | 92% | 86% | 84% |
| Community Collaboration | 40% | 95% | 65% | 55% |
| Professional Workflow Integration | 65% | 60% | 78% | 96% |
| Learning Adaptability (AI Personalization) | 90% | 72% | 70% | 88% |

Table 4 summarizes the ethical performance of these tools, evaluating their mechanisms for dataset integrity and accountability. Adobe Firefly is aimed at professional creatives and adds AI assistance in painting to general digital art workflows. Built into Adobe Photoshop and Illustrator, Firefly uses content-aware generative fill and diffusion to refine compositions, extend backgrounds, or adjust styles to a particular color palette. It exemplifies co-creative assistant AI, that is, AI that collaborates with the artist unobtrusively, raising productivity without replacing intuition. Its ethical framework, which emphasizes clear data sources and content ownership information, also addresses the current controversy over authorship and copyright in AI-generated art.

Table 4 Ethical and Data Transparency Assessment

| Platform | Dataset Licensing | Attribution Mechanism | Bias Mitigation Strategy | Ethical Rating |
|---|---|---|---|---|
| DALL·E 3 | Partially licensed, filtered | Metadata tagging | Prompt filtering, bias detection | High |
| Midjourney | Proprietary community data | Limited transparency | Manual moderation | Medium |
| Runway ML | Mixed-source open data | Project-level attribution | Creative Commons compliance | High |
| Adobe Firefly | Fully licensed (Adobe Stock) | Embedded metadata | Transparent dataset disclosure | Very High |

Taken together, these comparative analyses show that AI-assisted painting tools are not interchangeable; instead, each contributes in its own way to the ecosystem of hybrid creativity. The linguistic specificity of DALL·E 3, the community expressivity of Midjourney, the multimedia reach of Runway ML, and the ethical emphasis of Adobe Firefly span a range of human–machine co-creation models. They present the future of art not as the replacement of human agency but as the redefinition of creativity as a collaboration between cognition and computation.

5. Discussion

Although they are competing technologies, DALL·E 3, Midjourney, Runway ML, and Adobe Firefly each occupy a niche in the ever-changing ecosystem of computational creativity. Together they show that digital painting is no longer simply the use of a set of tools but a dialogue between artist and algorithm. On the technical side, the relationship between processing efficiency and output resolution takes center stage. The performance data visualized in Figure 4 indicate that platform architecture directly shapes creative responsiveness and scalability.

Figure 4 Technical Performance of AI Painting Tools

Figure 4 indicates that Runway ML has the widest output frame (1920 × 1080, video-oriented) but is also the slowest in processing, which reflects its multimedia orientation. Conversely, Adobe Firefly provides the shortest generation time with professional output quality suited to design workflows. DALL·E 3 and Midjourney sit between these two, implying a trade-off between accuracy and artistic diversity. Aesthetic distinctions further explain the role each platform plays in the creation of art. Artistic results differ considerably depending on architecture and training data, as the stacked-bar representation of stylistic distribution in Figure 5 shows.
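One way to put resolution and generation time on a common scale is to convert the approximate figures in Table 1 into pixels produced per second. The short calculation below is an illustrative sketch only, not a metric used in this paper: it treats the "~" values in Table 1 as exact, and the dictionary layout and function name are hypothetical.

```python
# Resolution and average generation time copied from Table 1.
# Adobe Firefly is omitted because its resolution is listed as "Variable".
TABLE_1 = {
    "DALL·E 3": {"width": 1024, "height": 1024, "seconds": 10},
    "Midjourney": {"width": 1536, "height": 1536, "seconds": 15},
    "Runway ML": {"width": 1920, "height": 1080, "seconds": 20},
}

def pixel_stats(spec):
    """Return (total pixels, pixels generated per second) for one platform."""
    pixels = spec["width"] * spec["height"]
    return pixels, pixels / spec["seconds"]

stats = {name: pixel_stats(spec) for name, spec in TABLE_1.items()}
for name, (pixels, rate) in stats.items():
    print(f"{name}: {pixels / 1e6:.2f} MP, {rate / 1e3:.1f} kilopixels/s")
```

On these approximate figures, Runway ML has the widest frame, while Midjourney's square output contains slightly more pixels in total and the highest pixel throughput of the three.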

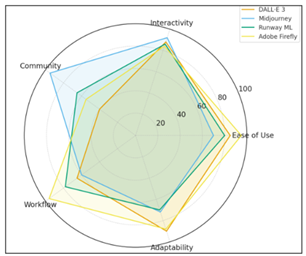

Figure 5 Artistic Style Profile of AI Painting Tools

In Figure 5, Midjourney is more likely to create painterly, cinematic imagery with a strong sense of atmosphere, whereas DALL·E 3 creates conceptually consistent, realistic imagery suited to ideation and visual narrative. Runway ML leans toward experimental abstraction, whereas Adobe Firefly is more professional and compositional. These stylistic contrasts show how each system encodes its own aesthetic signature, which supports the notion that AI systems introduce new artistic dialects rather than merely imitating what already exists. The users' experience of collaboration is also very important. The user experience and collaboration index shows that comfort, feedback responsiveness, and workflow compatibility differ across these tools.

Figure 6 User Experience and Collaboration Index (Radar Plot)

Figure 6 shows that Adobe Firefly provides the most balanced user experience, especially in ease of use (95%) and workflow integration (96%). With its active feedback system, Midjourney leads in interactivity (92%) and community collaboration (95%) and represents participatory art culture. DALL·E 3 is highly adaptable (90%), which corresponds to its iterative, prompt-based learning, and Runway ML performs consistently across all categories. Taken together, these findings suggest that meaningful co-creation is sustained by user interaction rather than by automation. Nevertheless, bringing AI into creative processes also requires ethical responsibility. As data sources and training transparency grow in importance, the ethical heatmap in Figure 7 illustrates how responsibly each platform handles dataset licensing, bias management, and attribution.
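The index values reported in Table 3 can be condensed into two simple numbers per platform: an average score and the spread between the best and worst criterion. The sketch below is one possible reading of the data, not the aggregation method used in this paper. It assumes an unweighted mean across the five criteria, and the variable and function names are hypothetical.

```python
from statistics import mean

# Scores (in %) copied from Table 3; column order matches PLATFORMS.
PLATFORMS = ["DALL·E 3", "Midjourney", "Runway ML", "Adobe Firefly"]
TABLE_3 = {
    "Ease of Use": [85, 70, 80, 95],
    "Interactivity / Feedback Loop": [88, 92, 86, 84],
    "Community Collaboration": [40, 95, 65, 55],
    "Professional Workflow Integration": [65, 60, 78, 96],
    "Learning Adaptability (AI Personalization)": [90, 72, 70, 88],
}

def summarize(table, platforms):
    """Return {platform: (mean score, max - min spread)} across all criteria."""
    summary = {}
    for i, name in enumerate(platforms):
        scores = [row[i] for row in table.values()]
        summary[name] = (mean(scores), max(scores) - min(scores))
    return summary

summary = summarize(TABLE_3, PLATFORMS)
for name, (avg, spread) in summary.items():
    print(f"{name}: mean {avg:.1f}%, spread {spread} points")
```

A small spread corresponds to the consistent performance noted for Runway ML, while a high mean with a larger spread marks a platform with strong but uneven results. Weighting the criteria differently would change the ordering, so the unweighted mean should be read as a convenience rather than a ranking.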

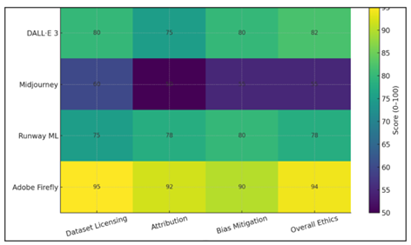

Figure 7 Ethical and Transparency Assessment Heatmap

As Figure 7 shows, Adobe Firefly leads in all of the ethical categories, owing to its fully licensed Adobe Stock datasets and transparent metadata tagging. DALL·E 3 and Runway ML follow, with moderate transparency and partial disclosure of their data. Although it pioneers an artistic approach to creativity, Midjourney scores lowest on dataset responsibility, which highlights the conflict between open creative experimentation and ethical regulation. These differences imply that AI-led art creation should be accompanied by oversight and ethical accountability. When performance, artistic quality, user experience, and ethics are evaluated together, clear tendencies emerge. The multi-metric line graph in Figure 8 synthesizes all dimensions to show the overall performance of the platforms.

Figure 8 Overall Multi-Dimensional Performance of AI Painting Tools

Figure 8 indicates that Adobe Firefly ranks at or near the top in each dimension, making it the most balanced environment for professional hybrid art. Midjourney is stronger in artistic expressiveness and weaker in ethical compliance, whereas DALL·E 3 is a well-rounded tool that does well in all categories. Runway ML remains competitive, straddling motion and still-image creation. These findings affirm that no single platform is best; rather, each works best in creative settings with different priorities, reflecting the pluralism that characterizes hybrid creativity.

Firefly supports structured, transparent co-creation; Midjourney encourages shared imagination; DALL·E 3 balances semantics and style; and Runway ML extends art into the time dimension. Collectively, they form a spectrum of digital creativity in which machine autonomy and human control are each incomplete on their own, and creativity emerges as the developing dialogue between cognition and computation.

6. Conclusion

The development of artificial intelligence in digital painting is one of the deepest changes in the history of visual art. What started as the mechanization of artistic methods has matured into a dialogic collaboration between human imagination and computational intelligence. Through generative adversarial networks, diffusion systems, and reinforcement-based adaptive frameworks, AI has become an active participant in the artistic process, able to make decisions, learn, and rethink aesthetic possibilities alongside human creators. This relationship challenges the conventional definitions of authorship and agency and extends creativity into a new cognitive realm. The paper shows that AI-assisted digital painting is not only a technological change but also a social and cultural transformation. The artist no longer holds the exclusive role of author but takes part in co-creation with intelligent systems that augment intuition with data-driven interpretation. This synergy turns painting not only into a living object but also into a process of continual learning and mutual influence, in which human emotional richness is combined with algorithmic accuracy. The tools analyzed here, namely DALL·E 3, Midjourney, Runway ML, and Adobe Firefly, represent a wide range of approaches to collaboration, from prompt-based text-to-image synthesis to the integration of ethically sourced datasets, and can be viewed as manifestations of an augmented artistic intelligence.

Ethical and philosophical reflection must continue as hybrid creativity develops. Any innovation should be based on transparency in model training, recognition of data sources, and respect for artistic work. The future of digital painting depends on building responsible ecosystems in which the human creative spirit is empowered rather than overwhelmed by computers. Education will be central to this new creative frontier: artists have to become algorithmically literate in order to navigate it consciously and with a sense of agency. Ultimately, the intersection of AI and art transforms what it means to create art.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Al-Khazraji, L. R., Abbas, A. R., Jamil, A. S., and Hussain, A. J. (2023). A Hybrid Artistic Model Using DeepDream Model and Multiple Convolutional Neural-Network Architectures. IEEE Access, 11, 101443–101459. https://doi.org/10.1109/ACCESS.2023.3309419

Cheng, M. (2022). The Creativity of Artificial Intelligence in Art. Proceedings, 81, 110. https://doi.org/10.3390/proceedings2022081110

Déguernel, K., and Sturm, B. L. T. (2024). Bias in Favour or Against Computational Creativity: A Survey and Reflection on the Importance of Socio-Cultural Context in its Evaluation. KTH DivA Portal.

Elgammal, A., Liu, B., Elhoseiny, M., and Mazzone, M. (2017). CAN: Creative Adversarial Networks, Generating “art” by Learning About Styles and Deviating from Style Norms. arXiv.

Gatys, L. A., Ecker, A. S., and Bethge, M. A. (2015). A Neural Algorithm of Artistic Style. arXiv. https://doi.org/10.1167/16.12.326

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. (2025). Generative Adversarial Networks. Communications of the ACM, 63, 139–144. https://doi.org/10.1145/3422622

Guo, D. H., Chen, H. X., Wu, R. L., and Wang, Y. G. (2023). AIGC Challenges and Opportunities Related to Public Safety: A Case Study of ChatGPT. Journal of Safety Science and Resilience, 4, 329–339. https://doi.org/10.1016/j.jnlssr.2023.08.001

Guo, Y., Lin, S., Acar, S., Jin, S., Xu, X., Feng, Y., and Zeng, Y. (2022). Divergent Thinking and Evaluative Skill: A Meta-Analysis. Journal of Creative Behavior, 56, 432–448. https://doi.org/10.1002/jocb.539

Jiang, Q. L., Zhang, Y. Z., Wei, W., and Gu, C. (2024). Evaluating Technological and Instructional Factors Influencing the Acceptance of Aigc-Assisted Design Courses. Computers and Education: Artificial Intelligence, 7, 100287. https://doi.org/10.1016/j.caeai.2024.100287

Lee, U., et al. (2024). LLaVA-docent: Instruction Tuning with Multimodal Large-Language Model to Support art Appreciation Education. Computers and Education: Artificial Intelligence, 7, 100297. https://doi.org/10.1016/j.caeai.2024.100297

Li, G., Chu, R., and Tang, T. (2024). Creativity Self-Assessments in Design Education: A Systematic Review. Thinking Skills and Creativity, 52, 101494. https://doi.org/10.1016/j.tsc.2024.101494

Lou, Y. Q. (2023). Human Creativity in the AIGC era. Journal of Design Economics and Innovation, 9, 541–552. https://doi.org/10.1016/j.sheji.2024.02.002

McCormack, J., Gifford, T., and Hutchings, P. (2019). Autonomy, Authenticity, Authorship and Intention in Computer-Generated art. In Proceedings of the EvoMUSART: International Conference on Computational Intelligence in Music, Sound, Art and Design (EvoStar) (pp. 35–50). Springer. https://doi.org/10.1007/978-3-030-16667-0_3

Oksanen, A., et al. (2023). Artificial Intelligence in Fine Arts: A Systematic Review of Empirical Research. Computers in Human Behavior: Artificial Humans, 1, 100004. https://doi.org/10.1016/j.chbah.2023.100004

Tamm, T., Hallikainen, P., and Tim, Y. (2022). Creative Analytics: Towards Data-Inspired Creative Decisions. Information Systems Journal, 32, 729–753. https://doi.org/10.1111/isj.12369

Tang, Z. C., Wang, D. L., Xia, D., and Li, X. T. (2020). “Artificial Intelligence + Design”: A New Exploration of Teaching Practice of Product Design Courses for Design Majors. Art and Design, 1, 120–123.

Utz, V., and DiPaola, S. (2020). Using an AI creativity System to Explore How Aesthetic Experiences are Processed Along the Brain’s Perceptual Neural Pathways. Cognitive Systems Research, 59, 63–72. https://doi.org/10.1016/j.cogsys.2019.09.012

Wammes, J. D., Roberts, B. R. T., and Fernandes, M. A. (2018). Task Preparation as a Mnemonic: The Benefits of Drawing (and Not Drawing). Psychonomic Bulletin and Review, 25, 2365–2372. https://doi.org/10.3758/s13423-018-1477-y

Xie, H., and Zhou, Z. (2024). Finger Versus Pencil: An Eye-Tracking Study of Learning by Drawing on Touchscreens. Journal of Computer Assisted Learning, 40, 49–64. https://doi.org/10.1111/jcal.12863

Zhang, Y. C., Li, Z. F., Feng, X. Y., Suo, X. C., and Hu, P. (2023). Visualization Research on the Status Quo of Virtual-Reality Education in China: Knowledge-Graph Analysis Based on Citespace. Modern Information Technology, 7, 135–141.

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.