ShodhKosh: Journal of Visual and Performing Arts

ISSN (Online): 2582-7472


Integrating AR and AI for Immersive Sculpture Exhibitions

Dr. J. Vijay 1, Romil Jain 2, Guntaj J 3, Dr. Senduru Srinivasulu 4, Vinod Chandrakant Todkari 5, Dr. Pooja Srishti 6

1 Assistant Professor Gr. II, Department of Electronics and Communication Engineering, Aarupadai Veedu Institute of Technology, Vinayaka Mission’s Research Foundation (DU), Tamil Nadu, India

2 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

3 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

4 Professor, Department of Computer Science and Engineering, Sathyabama Institute of Science and Technology, Chennai, Tamil Nadu, India

5 Department of Mechanical Engineering, Vidya Pratishthans Kamalnayan Bajaj Institute of Engineering and Technology, Baramati, Pune, India

6 Assistant Professor, School of Business Management, Noida International University 203201, India

ABSTRACT

This paper addresses how Augmented Reality (AR) and Artificial Intelligence (AI) can be combined to create richer, adaptive sculpture displays that change how audiences interact with art in the digital era. The proposed scheme outlines how AR technologies can add dynamic, interactive layers of information and sensory enhancement to physical sculptures, while AI personalizes these experiences according to users' current preferences and behavior. The system's visual augmentation, together with intelligent content adaptation, helps form deeper cognitive and emotional connections between visitors and artworks. The conceptual framework draws on theories of experiential learning, aesthetic perception, and human-computer interaction to ground hybrid AR-AI exhibition environments. The study uses a mixed-methods research design that combines qualitative interviews, observational analysis, and quantitative usability testing. The prototype system is built with tools including Unity 3D, ARCore, TensorFlow, and 3D scanning technologies. It comprises an AR interface for real-time sculpture visualization, AI algorithms that analyze user behavior, and a feedback-based data pipeline that optimizes the system over time. The evaluation metrics are immersion, engagement, and learning outcomes, assessed through user experience studies and comparison with traditional exhibit settings.

Received 28 January 2025

Accepted 19 April 2025

Published 16 December 2025

Corresponding Author: Dr. J. Vijay, vijay.ece@avit.ac.in

DOI: 10.29121/shodhkosh.v6.i2s.2025.6718

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Augmented Reality, Artificial Intelligence, Immersive Art, Sculpture Exhibitions, User Engagement, Personalization

1. Introduction

The dynamism of digital technologies has

changed the environment of the art, culture and the human experience with the

creative expressions. Generally, Augmented Reality (AR) and Artificial

Intelligence (AI) are the two most significant technologies in transforming

artistic experiences and the museum practice. The AR is an overlay of digital

content, e.g. a picture, animation, or background information onto the physical

environment which enables the audiences to interact with artworks, not only

through the visual perception. Instead, AI gives systems the ability to process

data, identify trends, as well as dynamically generate content depending on

user preferences and behaviors. The combination of these technologies creates a unique

possibility of developing immersive, intelligent, and personal art exhibitions

especially in the field of sculpture, where spatial and tactile experiences are

the main aspect of it. Conventional sculpture displays in most cases are based

on a passive experience of viewing artwork and they

lack interactive and contextual enhancement Chang (2021). The

traditional model restricts the richness of interaction and interpretive

possibility. Nonetheless, the AR can be incorporated so that sculptures act as

interactive screens, with digital overlay being used to demonstrate the

historical background, intention of the artist, or even to repackage the art in

a three-dimensional state. Simultaneously, AI systems can use the prospect to

personalize these expanded experiences to each visitor depending on their

background, learning style, or emotional response, and create adaptive and

inclusive art experiences. As an example, AI-driven recommendation engines can

suggest some viewing points, highlight information about the interests of the

user, or change the narration based on the real-time feedback Barath et al. (2023). It is not

only that the interaction between AR and AI augments the visual and cognitive

experiences but a solution to the dilemma concerning the interwoven world of

art and technology. It enables museums and galleries to stop existing as fixed

exhibitions, becoming hybrid interactive eco systems, where the visitor is not

a spectator but an actor. Such intelligent exhibition space can record the behavior of the user such as gazing, dwell time and user

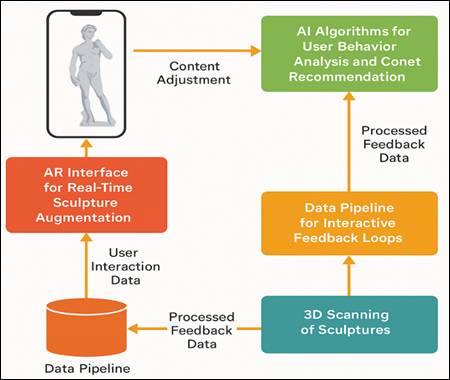

navigation to improve future displays Cheng et al. (2023). Figure 1 illustrates multilayer AR-AI system which

allows the exhibition of sculptures in an immersive way. This feedback

contributes to the iterative optimization of delivery of user experience and

content, which is done by the curators and system designers.

Figure 1

|

Figure 1 Multilayer System Architecture for AR–AI Integrated Immersive Sculpture Exhibition |

Furthermore, the combination of AR and AI is valuable for education and cultural dissemination. Students of art history, for example, can use sculptures to access multiple layers of information, such as how the object was restored, the techniques used to create the artwork, or the symbolic meaning it conveys, with the help of AI-generated narratives Wang (2022). Likewise, by converting visual data into multisensory or text-based formats, AR-guided tours can make sculpture exhibitions accessible to a wider range of people, including those with physical or sensory impairments.

2. Related Work

Multidisciplinary research on art, technology, and human interaction has paid increasing attention to how digital technologies can shape aesthetic experiences. A number of scholars have discussed the potential of Augmented Reality (AR) in museums and galleries to improve engagement and interpretation. AR-based museum applications have been shown to enhance visitor learning and satisfaction by overlaying contextual information on artifacts Matthews and Gadaloff (2022). Moreover, AR has created narrative spaces in which people actively engage with three-dimensional content, which leads to greater emotional and cognitive involvement with the art object. In parallel, the cultural and creative industries have seen substantial advances in Artificial Intelligence (AI) for personalization. AI-based curatorial systems and recommendation models can interpret visitor data to make predictions and create customized content. These developments show how AI can bridge the gap between curatorial intent and personal interaction Sovhyra (2022). New approaches in computer vision and natural language processing also make it possible to interpret user behaviour in real time, whether by tracking gaze, detecting sentiment, or generating adaptive narratives, giving each visitor a unique pattern of interaction.

AR and AI have each been widely discussed separately, but their combined application in sculpture exhibitions remains comparatively underdeveloped. Past attempts have generally focused on either visual augmentation or smart recommendation, but not on incorporating both technologies together Wang and Lin (2023). Table 1 summarizes studies that apply AR and AI to exhibitions. Combining AR visualization with AI-supported personalization is likely to produce synergistic effects and to deepen immersion and interpretation.

Table 1 Summary of Related Work on AR and AI for Immersive Sculpture Exhibitions

| Study | Technology Focus | Application Domain | Methodology | Limitation / Future Scope |
|---|---|---|---|---|
| “A Survey of Augmented Reality” | AR Frameworks | Mixed Reality Foundations | Literature Review | Early theoretical model, lacked AI integration |
| “Interaction in Augmented Reality” | AR Interaction Design | Human–Computer Interaction | Experimental Studies | No adaptive personalization |
| “Beyond Virtual Museums” Al‑Kfairy et al. (2024) | AR Visualization | Virtual Heritage | Case Study | Limited user analytics |
| “AR in Art Exhibitions” | Mobile AR Apps | Art Galleries | Prototype Testing | Lack of personalization mechanisms |
| “Personalized Museum Experiences” Alkhwaldi, A. F. (2024) | AI Recommendation | Cultural Heritage | User Study | Limited integration with AR visualization |
| “Immersive Technologies in Museums” | AR / VR Systems | Museum Interaction | Survey and Case Analysis | High technical setup cost |
| “Mixed Reality for Heritage Education” Gong et al. (2022) | AR + 3D Reconstruction | Education and Museums | Experimental Design | Focused on heritage, not sculpture art |
| “AI-Powered Museum Curation” | AI-driven Analytics | Exhibition Design | Machine Learning Implementation | Data privacy concerns |
| “Embodied Museography” | Immersive Interfaces | Interactive Art | Conceptual Framework | No quantitative validation |
| “Emotion-Aware AR Systems” | Affective AI + AR | Art Installations | Deep Learning + Vision Analysis | Still in prototype phase |
| “AI-Based Art Recommendation Systems” | Recommendation Algorithms | Digital Art Platforms | Neural Network Model | Lacked spatial immersion |
| “Hybrid AR–AI Museum Interfaces” Sylaiou et al. (2024) | AR + AI Hybrid | Museum Visitor Studies | Field Experiment | Requires cross-device optimization |
| “3D Scanning and AR Display for Sculptures” | 3D Reconstruction | Sculpture Exhibitions | Photogrammetry and AR Visualization | Limited personalization integration |

3. Conceptual Framework

3.1. THEORETICAL BASIS FOR IMMERSIVE ART EXPERIENCES

Immersive art experiences draw on the convergence of psychology, aesthetics, and human-computer interaction. Immersion is the extent to which a person is mentally and emotionally absorbed in an environment, which creates a sense of presence and involvement. Experiential learning theory (Kolb, 1984) supports this theoretically: knowledge develops when a person engages in activities and reflects on them. In art, immersion turns passive observation into participatory interpretation, through which audiences jointly create meaning during the interaction Galani and Vosinakis (2024). Moreover, Dewey's theory of aesthetic experience (1934) stresses the continuity of perception and action in art practice, which implies that technology-enhanced interaction may enrich aesthetic experience. Applied to sculpture displays, immersive structures bring together visual, auditory, and spatial cues to produce greater emotional appeal De Fino et al. (2023). In the digital context, presence theory emphasizes that AR can provide spatial realism, so that users perceive digital additions as authentic extensions of physical sculptures. AI, meanwhile, adds cognitive immersion: adaptive systems analyze user behavior and provide users with personalized narratives and contextual information Kovács and Keresztes (2024).

3.2. INTEGRATION MODEL FOR HYBRID AR–AI SYSTEMS

The hybrid AR-AI integration model is structured to combine sensory augmentation and intelligent interactivity into a continuous digital ecosystem for immersive sculpture exhibitions Newman et al. (2021). The model works on three fundamental layers: perceptual augmentation, cognitive adaptation, and feedback optimization. In the perceptual layer, AR technologies (ARCore, ARKit, Vuforia) provide real-time object recognition, spatial mapping, and overlay rendering. Sculptures are scanned with photogrammetry or LiDAR to create precise 3D models, so that users can explore dynamic visualizations of the sculptures, including re-creations of the artistic process or visual layers. The AI-driven cognitive layer processes behavioral data with machine learning and neural networks. It examines how users look, move, and spend time at a sculpture to estimate their level of engagement and dynamically adjust the exhibition content. For example, when a user lingers around a certain sculpture, the AI can offer deeper interpretive stories or suggest other pieces of art.
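The lingering example above can be sketched as a simple dwell-time rule. This is only an illustration under stated assumptions: the threshold values, the `DwellEvent` record, and the overlay names are hypothetical and do not come from the described system, which may well use a learned model instead of fixed thresholds.

```python
from dataclasses import dataclass

# Hypothetical thresholds in seconds; the paper does not specify values.
DEEP_STORY_AFTER_S = 30.0
RELATED_WORKS_AFTER_S = 60.0


@dataclass
class DwellEvent:
    """One observation of a visitor lingering near a sculpture (assumed schema)."""
    visitor_id: str
    sculpture_id: str
    dwell_seconds: float


def select_content(event: DwellEvent) -> list[str]:
    """Return the overlay types to show, escalating with dwell time."""
    content = ["basic_label"]  # always shown
    if event.dwell_seconds >= DEEP_STORY_AFTER_S:
        content.append("interpretive_story")
    if event.dwell_seconds >= RELATED_WORKS_AFTER_S:
        content.append("related_works")
    return content


if __name__ == "__main__":
    print(select_content(DwellEvent("v1", "s1", 45.0)))
```

A rule of this kind is easy to audit and could serve as a baseline against which an adaptive model is compared.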

4. Methodology

4.1. RESEARCH DESIGN AND DATA COLLECTION METHODS

The study follows a mixed-methods design that combines qualitative and quantitative approaches in order to analyse the integration of AR and AI in immersive sculpture exhibitions comprehensively. This allows both the technological efficiency and the practicality of the proposed system to be tested carefully. The qualitative component concerns users' perceptions, interests, and feelings. Semi-structured interviews and focus groups with artists, curators, and exhibition visitors provide information on user expectations, aesthetic satisfaction, and perceived interactivity. Observational studies document real-time interactions between users and augmented sculptures in prototype exhibition settings and identify behavioral patterns and usability issues. The quantitative component supplements these findings with structured surveys and behavioral analytics. The data collected include time spent per artwork, frequency of AR feature use, and the accuracy of responses to AI-enhanced recommendations. Eye-tracking and motion-sensing data can further inform the analysis of spatial attention and navigation behavior.
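As a rough illustration of the behavioral analytics described above, the sketch below aggregates an interaction log into time per artwork and AR feature usage counts. The event schema (`artwork`, `seconds`, `feature`) is an assumption made for this example and is not taken from the study's instruments.

```python
from collections import defaultdict


def summarize(events):
    """Aggregate interaction events.

    events: iterable of dicts with the assumed keys
      'artwork' (str), 'seconds' (float), 'feature' (str or None).
    Returns (total seconds per artwork, usage count per AR feature).
    """
    time_per_artwork = defaultdict(float)
    feature_counts = defaultdict(int)
    for event in events:
        time_per_artwork[event["artwork"]] += event["seconds"]
        if event.get("feature"):  # skip events with no AR feature used
            feature_counts[event["feature"]] += 1
    return dict(time_per_artwork), dict(feature_counts)


if __name__ == "__main__":
    log = [
        {"artwork": "A", "seconds": 10.0, "feature": "overlay"},
        {"artwork": "A", "seconds": 5.0, "feature": None},
        {"artwork": "B", "seconds": 7.5, "feature": "overlay"},
    ]
    print(summarize(log))
```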

4.2. TOOLS AND TECHNOLOGIES EMPLOYED

4.2.1. AR SDKs

AR SDKs form the foundation for developing immersive and interactive sculpture exhibitions. They allow the system to overlay digital objects on physical sculptures so that the virtual and the real world are seamlessly connected. Vuforia and Wikitude stand out for their high-quality image recognition and markerless tracking, which are essential for accurate sculpture augmentation inside the gallery. These tools enable developers to make the visual layers respond to the viewer's gaze and viewpoint. Furthermore, built-in light estimation and occlusion rendering simulate natural lighting so that augmented imagery appears natural. Together, they let the system offer high-fidelity, interactive, and context-aware AR experiences that transform otherwise static sculptures into interactive, multisensory artistic experiences.

4.2.2. AI models

Machine learning frameworks such as TensorFlow, PyTorch, and scikit-learn are used to process user interaction data, gaze tracking, navigation patterns, and dwell times. These streams are fed into supervised and unsupervised learning models that identify users' preferences, engagement levels, and interaction tendencies. Transformer-based Natural Language Processing (NLP) models, such as BERT or GPT, power conversational interfaces that let the visitor talk to an AI-driven virtual guide. In addition, reinforcement learning algorithms support adaptive content delivery: the system learns from user responses to improve content recommendations in real time. Computer vision is used to build emotion recognition networks that read facial expressions and facial movements to gauge emotional engagement. Together, these AI models create human-friendly interactions that make the exhibition experience more responsive and strengthen the visitors' emotional connection with the sculptures.
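To make the adaptive-delivery idea concrete, here is a minimal sketch using an epsilon-greedy multi-armed bandit, one of the simplest reinforcement learning schemes. It is a stand-in chosen for brevity, not the algorithm used in the prototype (which the paper does not specify); the class name, content identifiers, and reward definition are all hypothetical.

```python
import random


class EpsilonGreedyRecommender:
    """Epsilon-greedy bandit over a fixed set of content items (illustrative)."""

    def __init__(self, content_ids, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.counts = {c: 0 for c in content_ids}    # times each item was rated
        self.values = {c: 0.0 for c in content_ids}  # running mean reward
        self.rng = random.Random(seed)

    def recommend(self):
        """Explore a random item with probability epsilon, else exploit the best."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.counts))
        return max(self.values, key=self.values.get)

    def update(self, content_id, reward):
        """Fold one observed reward (e.g. 1.0 = visitor engaged) into the mean."""
        self.counts[content_id] += 1
        n = self.counts[content_id]
        self.values[content_id] += (reward - self.values[content_id]) / n


if __name__ == "__main__":
    rec = EpsilonGreedyRecommender(["story", "timeline", "technique"], seed=0)
    rec.update("story", 1.0)
    rec.update("timeline", 0.0)
    print(rec.recommend())
```

The incremental mean update keeps memory constant per item, which suits a system that receives feedback continuously during a visit.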

4.2.3. 3D scanning

3D scanning technologies are needed to digitize sculptures accurately and make AR augmentation realistic. High-resolution geometric and textural data of the physical works are captured with methods such as photogrammetry, structured light scanning, and LiDAR (Light Detection and Ranging). The AR overlay is built on the resulting 3D models, which makes it possible to add dynamic visual effects, historical reconstructions, or interpretive animations while ensuring that the overlay fits the original sculptures precisely. Moreover, 3D scanning provides accurate spatial calibration, which improves AR tracking within the exhibition space. This combination of high-precision digitization and real-time rendering allows immersive, interactive, and faithful representations of sculptural art in the hybrid AR-AI space.

4.3. PROTOTYPE DEVELOPMENT AND USER TESTING PROCEDURES

The prototype development stage puts the conceptual and architectural scheme into practice by combining AR visualization and AI personalization in a unified interaction system. It starts with 3D modeling and digitization of the selected sculptures using LiDAR or photogrammetry to ensure geometric fidelity. The models are imported into Unity 3D, and AR functionality is implemented with AR Foundation for cross-platform support (ARCore and ARKit devices). Dynamic overlays, comprising contextual commentary, artistic interpretations, and animation layers, are designed to enhance user interaction. On the AI side, machine learning algorithms are trained on behavioral data to predict user preferences and provide personalized content recommendations. The system has a feedback loop in which interaction data are gathered in real time, enabling the AI to adapt AR experiences to engagement patterns. Integration testing ensures seamless coordination among AR rendering, AI inference, and user interface elements.

5. System Architecture

5.1. AR INTERFACE FOR REAL-TIME SCULPTURE AUGMENTATION

The Augmented Reality (AR) interface is the user's main entry point to the digitally enhanced sculpture and to real-time interaction with it. The interface is built in Unity 3D with AR Foundation, which wraps the ARCore and ARKit frameworks for compatibility with Android and iOS platforms.

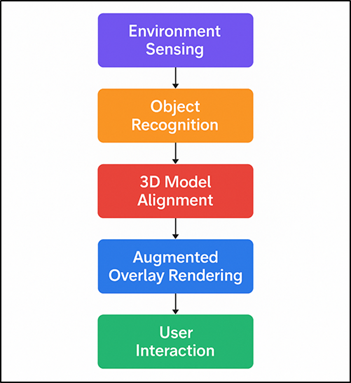

Figure 2 Flowchart of AR Interface for Real-Time Sculpture Augmentation

This component performs environmental mapping, surface detection, and spatial alignment, which allows digital overlays to be fitted seamlessly to real-life sculptures. The AR interface uses object recognition algorithms and markerless tracking features, including feature point detection and plane estimation, to keep the registration of virtual content with real-world objects accurate. The AR interface flow for real-time sculpture augmentation is presented in Figure 2. Sculptures can be viewed through handheld devices or AR glasses, with dynamic additions such as information overlays, time-lapses of the creation process, or historical reconstructions. Interactive features (gesture-based controls, voice, touch-based navigation, and others) enable users to view sculptures from various angles. Realistic lighting estimation, occlusion rendering, and shading make virtual imagery blend seamlessly into the physical environment.

5.2. AI ALGORITHMS FOR USER BEHAVIOR ANALYSIS AND CONTENT RECOMMENDATION

The AI component of the system architecture serves as the intelligent core that interprets user interaction and customizes the AR experience. It uses a mix of machine learning (ML), deep learning (DL), and natural language processing (NLP) models to process behavioral information gathered while the user interacts with the AR interface. Parameters such as gaze, movement, gesture frequency, and the time spent studying particular sculptures are processed by ML models built in TensorFlow and PyTorch. These models classify the level of engagement, identify user intent, and predict interests. Reinforcement learning algorithms drive adaptive content presentation: the system learns from each user's feedback and personalizes better over time. For conversational interactivity, NLP models such as BERT or GPT-based models drive a virtual art assistant that can answer visitors' questions or tell stories relevant to their current context.
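The engagement classification step can be pictured with a hand-weighted scoring rule that stands in for a trained classifier. Everything numeric here is an assumption for illustration: the normalization caps, the weights, and the cut-offs are hypothetical, and a TensorFlow or PyTorch model as described in the text would learn such a mapping from data rather than fix it by hand.

```python
def engagement_level(gaze_s, gestures, dwell_s, weights=(0.4, 0.2, 0.4)):
    """Map three behavioral signals to 'low', 'medium', or 'high'.

    gaze_s:   seconds of gaze on the sculpture (capped at 60, assumed)
    gestures: number of gestures performed (capped at 10, assumed)
    dwell_s:  seconds spent near the sculpture (capped at 120, assumed)
    """
    # Normalize each signal to [0, 1] using the hypothetical caps.
    gaze = min(gaze_s / 60.0, 1.0)
    gest = min(gestures / 10.0, 1.0)
    dwell = min(dwell_s / 120.0, 1.0)

    score = weights[0] * gaze + weights[1] * gest + weights[2] * dwell
    if score >= 0.66:
        return "high"
    if score >= 0.33:
        return "medium"
    return "low"


if __name__ == "__main__":
    print(engagement_level(gaze_s=30, gestures=5, dwell_s=60))
```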

5.3. DATA PIPELINE FOR INTERACTIVE FEEDBACK LOOPS

The data pipeline is the main connective infrastructure that enables communication between the AR interface, AI modules, and user interaction logs. It ensures real-time data capture, processing, and delivery to support an adaptive exhibition ecosystem. At the input level, the pipeline receives multimodal inputs, such as visual tracking data, interaction data, speech data, and emotional reactions, collected by sensors, cameras, and AR-capable devices. This raw information is sent to a cloud-based database or an on-premise edge server, depending on latency requirements. Data preprocessing modules filter, normalize, and anonymize the data to ensure accuracy and privacy. The middle of the pipeline incorporates stream processing systems such as Apache Kafka or Firebase for real-time analytics. AI engines then analyze these data streams to detect behavioral trends and engagement levels and to provide adaptive content recommendations. The resulting insights are fed back to the AR interface, where visualizations, storytelling styles, or contextual overlays are updated in real time.
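The preprocessing stage (filtering, normalization, anonymization) might look roughly like the sketch below. The record fields, the salt, and the dwell-time cap are hypothetical. Note also that a salted hash is strictly pseudonymization; a production system would need a fuller privacy design than this example shows.

```python
import hashlib

SALT = "exhibit-demo-salt"  # hypothetical; a real deployment would keep this secret


def anonymize(visitor_id: str) -> str:
    """Replace a raw visitor ID with a truncated salted SHA-256 digest."""
    digest = hashlib.sha256((SALT + visitor_id).encode("utf-8")).hexdigest()
    return digest[:16]


def preprocess(records, max_dwell_s=600.0):
    """Filter invalid records, scale dwell time to [0, 1], and hide visitor IDs.

    records: iterable of dicts with the assumed keys
      'visitor_id', 'sculpture_id', 'dwell_seconds'.
    """
    cleaned = []
    for record in records:
        dwell = record.get("dwell_seconds")
        # Filtering: drop missing, negative, or implausibly long dwell times.
        if dwell is None or dwell < 0 or dwell > max_dwell_s:
            continue
        cleaned.append({
            "visitor": anonymize(record["visitor_id"]),
            "sculpture": record["sculpture_id"],
            "dwell_norm": dwell / max_dwell_s,  # normalization
        })
    return cleaned


if __name__ == "__main__":
    raw = [
        {"visitor_id": "v1", "sculpture_id": "s1", "dwell_seconds": 300.0},
        {"visitor_id": "v2", "sculpture_id": "s1", "dwell_seconds": -5.0},
    ]
    print(preprocess(raw))
```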

6. Evaluation and Results

6.1. METRICS FOR ASSESSING IMMERSION, ENGAGEMENT, AND LEARNING OUTCOMES

The evaluation metrics measure the user immersion, engagement, and learning outcomes achieved with the AR-AI integrated exhibition. Immersion is measured with a presence questionnaire, a spatial awareness scale, and physiological measures such as gaze time and interaction frequency. Engagement metrics are the time spent on each artwork, the diversity of navigation, and emotional responses to the artwork inferred from facial analysis. Learning outcomes are assessed through pre- and post-experience quizzes, retention tests, and qualitative feedback.

Table 2 Metrics for Assessing Immersion, Engagement, and Learning Outcomes

| Evaluation Parameter | Mean Score (AR–AI System) | Mean Score (Traditional) | Improvement (%) |
|---|---|---|---|
| Presence Questionnaire (%) | 85.7 | 53.2 | 67.30 |
| Emotional Response Rate (%) | 82.5 | 49 | 68.40 |
| Knowledge Retention Test (%) | 86.3 | 61.7 | 39.90 |

Table 2 shows that the AR-AI integrated exhibition model is more effective than the traditional display of sculptures. The Presence Questionnaire score rose to 85.7% from 53.2%, which means that users felt a much stronger sense of immersion and spatial presence when interacting with augmented sculptures.

Figure 3 Comparison of AR–AI System vs Traditional Methods Across Evaluation Parameters

This improvement indicates AR's capacity to create lifelike interactive experiences that engage users both visually and cognitively. As Figure 3 illustrates, the AR-AI system performs better than the traditional techniques on the evaluation parameters. Similarly, the Emotional Response Rate increased from 49 percent to 82.5 percent, demonstrating that AI-based personalization and adaptive storytelling heightened emotional response.

Figure 4 Percentage Improvement in Learning and Engagement Metrics with AR–AI System

By reacting to users' behavior and preferences, the system fostered closer affective relationships between viewers and artworks. Figure 4 shows the percentage improvement in learning and engagement with the AR-AI system. Lastly, the Knowledge Retention Test score increased to 86.3% from 61.7%, which confirms that AR-AI experiences enhance learning by supplying contextual, interactive, and multisensory information.
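The improvement column in Table 2 appears to be a relative change with the traditional score as the baseline; the sketch below assumes that definition, which the paper does not state explicitly. Under it, the emotional response and knowledge retention rows reproduce the reported 68.4% and 39.9%, while the presence row comes out near 61.1% rather than the reported 67.30%, so that figure may rest on a different baseline or definition.

```python
def relative_improvement(new_score: float, baseline: float) -> float:
    """Percentage change of new_score relative to baseline (assumed definition)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new_score - baseline) / baseline * 100.0


if __name__ == "__main__":
    # (parameter, AR-AI mean, traditional mean) as reported in Table 2.
    rows = [
        ("Presence Questionnaire", 85.7, 53.2),
        ("Emotional Response Rate", 82.5, 49.0),
        ("Knowledge Retention Test", 86.3, 61.7),
    ]
    for name, ar_ai, traditional in rows:
        print(f"{name}: {relative_improvement(ar_ai, traditional):.1f}%")
```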

6.2. COMPARATIVE ANALYSIS WITH TRADITIONAL EXHIBITIONS

The AR–AI prototype was evaluated against traditional sculpture exhibitions. Participants in the hybrid environment showed much higher engagement, interacted for longer, and remembered more contextual information. Through interactive stories and personalization, the integrated system also created an emotional connection that static object displays did not. Qualitative comments indicated improved accessibility and a stronger sense of artistic connection. Traditional exhibits, although appreciated for their authenticity, were not dynamic in their interpretation and lacked personalized learning experiences.

Table 3 Comparative Analysis with Traditional Exhibitions

| Evaluation Criterion | Traditional Exhibition (Mean) | AR–AI Exhibition (Mean) | Difference (%) |
|---|---|---|---|
| Average Viewing Time (min) | 6.8 | 13.5 | 98.50 |
| Emotional Engagement (%) | 50.1 | 85.6 | 68.60 |
| Knowledge Retention (%) | 60.4 | 84.8 | 40.40 |
| Visitor Satisfaction (1–100 SUS) | 72.3 | 90.5 | 25.20 |

Table 3 presents the results and shows marked benefits of the AR-AI combined exhibition model over the traditional display of sculptures across many aspects of the experience. The Average Viewing Time nearly doubled, from 6.8 to 13.5 minutes, a 98.5 percent increase. This suggests that the interactive environment and dynamic features of the AR-AI system held visitors' attention and encouraged longer engagement with each sculpture. Figure 5 shows that AR-AI exhibitions outperform traditional ones on the major metrics.

Figure 5 Comparative Evaluation of AR–AI and Traditional Exhibitions Across Key Performance Metrics

Emotional engagement also rose markedly, from 50.1% to 85.6%, showing that AI personalization and immersive AR imagery strengthened visitors' emotional engagement with the art pieces. This heightened affective experience turns the exhibition from an observational experience into a participatory, more emotionally charged one. In addition, Knowledge Retention increased significantly, from 60.4% to 84.8%, which again confirms the educational value of contextual and adaptive content delivery. Lastly, Visitor Satisfaction (SUS score) improved from 72.3 to 90.5, a difference of 25.2 percent, indicating better usability and overall experience. Together, these results indicate that AR-AI hybrid exhibitions are more effective than traditional ones at sustaining engagement, emotional depth, and learning effectiveness.

7. Conclusion

Integrating Augmented Reality (AR) and Artificial Intelligence (AI) offers a new way of rethinking the sculpture exhibition by uniting the physical artwork with digital engagement. The paper shows that combining these technologies can yield immersive, adaptive, and educational experiences that go beyond conventional ways of looking at art. AR enriches perception by adding digital enhancements to the real sculpture through visualization, contextual narration, and active exploration. AI, meanwhile, tailors content intelligently: it analyzes user behavior, preferences, and emotional responses to adapt content dynamically and make each visitor's experience personal and meaningful. The proposed framework and system architecture balance AR interfaces, AI-driven recommendation systems, and data-driven feedback loops in a continuous learning cycle that develops as people interact with it. The prototype evaluation showed measurable increases in immersion, engagement, and knowledge retention compared with conventional exhibitions. Participants also showed stronger emotional attachment, greater interactivity, and a heightened sense of agency in the hybrid digital space. Such integration benefits not only viewers but also curators, educators, and digital artists, enabling them to make data-informed decisions and develop adaptive content. It also promotes inclusivity by accommodating multiple learning styles and accessibility requirements, and it broadens participation in the arts.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Al‑Kfairy, M., Alomari, A., Al‑Bashayreh, M., Alfandi, O., and Tubishat, M. (2024). Unveiling the Metaverse: A Survey of User Perceptions and the Impact of Usability, Social Influence and Interoperability. Heliyon, 10, e02352. https://doi.org/10.1016/j.heliyon.2024.e02352

Alkhwaldi, A. F. (2024). Investigating the Social Sustainability of Immersive Virtual Technologies in Higher Educational Institutions: Students’ Perceptions Toward Metaverse Technology. Sustainability, 16, 934.

Barath, C.‑V., Logeswaran, S., Nelson, A., Devaprasanth, M., and Radhika, P. (2023). AI in Art Restoration: A Comprehensive Review of Techniques, Case Studies, Challenges, and Future Directions. International Research Journal of Modern Engineering Technology and Science, 5, 16–21.

Chang, L. (2021). Review and Prospect of Temperature and Humidity Monitoring for Cultural Property Conservation Environments. Journal of Cultural Heritage Conservation, 55, 47–55.

Cheng, Y., Chen, J., Li, J., Li, L., Hou, G., and Xiao, X. (2023). Research on the Preference of Public Art Design in Urban Landscapes: Evidence from an Event‑Related Potential Study. Land, 12(10), 1883. https://doi.org/10.3390/land12101883

De Fino, M., Galantucci, R. A., and Fatiguso, F. (2023). Condition Assessment of Heritage Buildings Via Photogrammetry: A Scoping Review from the Perspective of Decision Makers. Heritage, 6, 7031–7066.

Galani, S., and Vosinakis, S. (2024). An Augmented Reality Approach for Communicating Intangible and Architectural Heritage Through Digital Characters and Scale Models. Personal and Ubiquitous Computing, 28, 471–490. https://doi.org/10.1007/s00779-023-01867-4

Gong, Z., Wang, R., and Xia, G. (2022). Augmented Reality (AR) as a Tool for Engaging Museum Experience: A Case Study on Chinese Art Pieces. Digital, 2, 33–45.

Kovács, I., and Keresztes, R. (2024). Digital Innovations in E‑Commerce: Augmented Reality Applications in Online Fashion Retail—A Qualitative Study Among Gen Z Consumers. Informatics, 11, 56. https://doi.org/10.3390/informatics11020056

Matthews, T., and Gadaloff, S. (2022). Public Art for Placemaking and Urban Renewal: Insights from Three Regional Australian Cities. Cities, 127, 103747. https://doi.org/10.1016/j.cities.2022.103747

Newman, M., Gatersleben, B., Wyles, K., and Ratcliffe, E. (2021). The Use of Virtual Reality in Environment Experiences and the Importance of Realism. Journal of Environmental Psychology, 79, 101733. https://doi.org/10.1016/j.jenvp.2021.101733

Sovhyra, T. (2022). AR‑Sculptures: Issues of Technological Creation, their Artistic Significance and Uniqueness. Journal of Urban Cultural Research, 25, 40–50.

Sylaiou, S., Dafiotis, P., Fidas, C., Vlachou, E., and Nomikou, V. (2024). Evaluating the Impact of XR on User Experience in the Tomato Industrial Museum “D. Nomikos”. Heritage, 7(3), 1754–1768. https://doi.org/10.3390/heritage7030082

Wang, Y. (2022). The Interaction between Public Environmental Art Sculpture and Environment Based on the Analysis of Spatial Environment Characteristics. Scientific Programming, 2022, Article 5168975. https://doi.org/10.1155/2022/5168975

Wang, Y., and Lin, Y.‑S. (2023). Public Participation in Urban Design with Augmented Reality Technology Based on Indicator Evaluation. Frontiers in Virtual Reality, 4, 1071355. https://doi.org/10.3389/frvir.2023.1071355


This work is licensed under a: Creative Commons Attribution 4.0 International License


© ShodhKosh 2024. All Rights Reserved.