ShodhKosh: Journal of Visual and Performing Arts

ISSN (Online): 2582-7472


EMOTIONAL COMPUTING IN ABSTRACT ART ANALYSIS

Dr. Varsha Kiran Bhosale 1, Dr. Malcolm Homavazir 2, Dr. Hitesh Singh 3, Pooja Goel 4, Madhur Grover 4, Tarang Bhatnagar 6

1 Associate Professor, Dynashree Institute of Engineering and Technology Sonavadi-Gajavadi, Satara, India

2 Associate Professor, ISME - School of Management and Entrepreneurship, ATLAS SkillTech University, Mumbai, Maharashtra, India

3 Associate Professor, Department of Computer Science and Engineering, Noida Institute of Engineering and Technology, Greater Noida, Uttar Pradesh, India

4 Associate Professor, School of Business Management, Noida International University, India

5 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, India

6 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

ABSTRACT

Combining emotional computing with abstract art offers a new perspective on how people feel when looking at non-representational images. This paper uses machine learning and deep learning to investigate computational methods for determining how abstract art affects viewers. It draws on computational aesthetics and theories of emotion in visual perception to develop a model of how colours, textures, and shapes affect people's feelings. Several emotion recognition methods, namely RBF-SVM, Random Forest, ResNet-50, and the Vision Transformer, are tested on a carefully selected set of abstract artworks to determine how well they classify emotions. Image processing and deep learning techniques are used for feature extraction and for visual-semantic mapping, which detects emotional cues in artworks. The method also examines the role of multimodal inputs (textual, visual, and contextual data) in improving emotion prediction. Physiological and psychological emotion data are examined to determine whether the computational conclusions agree with human responses. The proposed system design provides a pipeline for emotion analysis that runs from preprocessing through to model evaluation. Evaluation relies on measures such as accuracy, recall, F1-score, and correlation with human responses. The work seeks to connect cognitive psychology and computational modelling by examining how machines may simulate emotional understanding of abstract art and by analysing the disparities between algorithmic predictions and subjective human judgments.

Received 18 January 2025

Accepted 13 April 2025

Published 10 December 2025

Corresponding Author: Dr. Malcolm Homavazir, Malcolm.homavazir@atlasuniversity.edu

DOI: 10.29121/shodhkosh.v6.i1s.2025.6669

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Affective Computing, Emotion Recognition, Computational Aesthetics, Deep Learning Models, Multimodal Emotion Mapping

1. INTRODUCTION

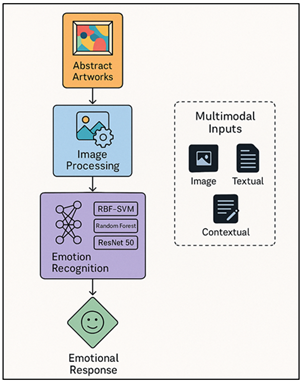

The relationship between art and emotion interests researchers across a wide range of disciplines, including psychology, neuroscience, computer science, and aesthetics. Abstract art offers a particularly useful window into this relationship because it does not depict clear stories or forms but still expresses feelings and ideas. Figurative art tends to evoke emotion by depicting familiar themes. Abstract art, by contrast, evokes feelings through non-referential features such as colour, texture, form, rhythm, and composition. Responses to these features are complex, highly personal, and dependent on context, which makes them difficult for computers to interpret. Emotional computing is a field that brings together affective science, cognitive psychology, and artificial intelligence (AI). Its aim is to build computers that can identify, learn, and emulate human emotions. In the case of abstract art, emotional computing can be used to explain and quantify these emotional responses. Recent advances in computer vision and machine learning allow systems to examine visual information in a way that goes beyond its objective properties and addresses viewers' subjective responses to it Prudviraj and Jamwal (2025). Emotion recognition algorithms can now use deep learning models such as convolutional neural networks (CNNs) and vision transformers to extract and interpret features associated with human affect. These models can identify subtle variations in colour schemes, texture, and spatial arrangement and associate them with emotional states such as calmness, excitement, sadness, or stress Chatterjee (2024). Figure 1 shows the step-by-step process of emotion analysis based on multimodal computational techniques.

Nevertheless, despite the potential of these technologies, existing emotional computing methods rely on datasets built from facial expressions or emotion-labelled photographs, which transfer poorly to abstract art. Because abstract art lacks explicit descriptive content, new computational models are required to bridge the gap between how a work looks and how it feels.

Figure 1 Flowchart of Emotional Computing Framework for Abstract Art Analysis

This paper addresses that gap by exploring how emotional computing techniques can be applied to the study of abstract art. It examines how machine learning and deep learning models, such as RBF-SVM, Random Forest, ResNet-50, and Vision Transformers, can classify the feelings that abstract art evokes in viewers Wu et al. (2023). The proposed model integrates research in computational aesthetics with research in emotional computing. It emphasises both the perceptual (colour, texture, and shape) and the cognitive (symbols and context) dimensions of emotion.

2. Literature Review

1) Theories of emotion in visual perception and art

Theories of visual perception attempt to explain how we convert what we see into what we feel through our senses and minds. Classical psychological theories such as James-Lange and Cannon-Bard emphasise the significance of bodily arousal and cognitive judgement in emotional experience. In art, these ideas were taken up by aesthetic psychology, in which scholars such as Rudolf Arnheim and Susanne Langer discussed how visual forms can communicate and express meaning Alzoubi et al. (2021). Gestalt psychology made a further contribution by positing that perception is holistic: individuals perceive a visual composition as a unified whole rather than as separate parts. These concepts are supported by neuroaesthetic studies, which have identified neural correlates of aesthetic feeling. For instance, such studies show that balance, colour, and contrast activate brain regions associated with pleasure, excitement, and empathy. Abstract art in particular triggers these emotional pathways through non-representational elements that do not require language to describe them Botchway (2022), Sundquist and Lubart (2022). Because there is no specific subject matter, viewers are more likely to engage in emotional projection and self-reflection, relating what they see to their own experience. Other models, such as Russell's circumplex model and Plutchik's wheel of emotions, show how visual characteristics can be linked to emotional dimensions such as arousal and valence.

2) Computational aesthetics and emotion modeling frameworks

Computational aesthetics is the algorithmic analysis of how beautiful, expressive, and emotionally evocative visual media are. Early computational models treated beauty as a collection of measurable patterns. They applied basic concepts such as symmetry, colour harmony, and texture uniformity to estimate viewer preference Deonna and Teroni (2025). Emotional computing is broader: it identifies the emotional states and meaning expressed by visual features, not only aesthetic appeal. Several frameworks integrate psychological emotion modelling with artistic computing, including affective image analysis, deep affective networks, and multimodal sentiment analysis. Deep learning and machine learning models, such as convolutional neural networks (CNNs), ResNet, and Vision Transformers, make it possible to detect features in a way that resembles human perception Yang et al. (2021). Table 1 summarises related studies that illustrate the progress made in emotional computing. Such models learn emotional associations from large datasets, for example that high colour saturation can convey excitement or that gentle patterns can convey peace. Affective image databases such as the Geneva Affective Picture Database (GAPED) and the International Affective Picture System (IAPS) are used to categorise emotions. Recent designs also combine semantic embeddings with physiological data, which improves emotion prediction.

Table 1 Related Work Summary in Emotional Computing and Abstract Art Analysis

| Study Focus | Methodology | Emotion Framework | Features Extracted |
|---|---|---|---|
| Affective image classification | SVM with low-level features | Ekman’s Basic Emotions | Color, texture, composition |
| Emotional annotation of abstract art | Linear SVM, visual descriptors | Valence–Arousal | Hue, saturation, edge orientation |
| Emotion-driven image retrieval Yang et al. (2021) | CNN (shallow) | Russell Circumplex | Deep visual embeddings |
| Visual emotion classification | Random Forest | Dimensional (V–A) | Texture, shape, lighting |
| Neural emotion mapping Xu et al. (2022) | CNN (AlexNet) | Plutchik’s Emotion Wheel | Color gradients, structure |
| Artistic style and emotion Yang et al. (2023) | Deep CNN (VGG-16) | Valence–Arousal | Deep aesthetic features |
| Deep affective representation learning | ResNet-34 | Dimensional (V–A) | High-level semantics |
| Artistic creativity analysis | Multi-SVM Ensemble | Ekman’s Basic Emotions | Color, texture, harmony |
| Cross-modal affective modelling Rombach et al. (2022) | Hybrid CNN–LSTM | Russell Circumplex | Visual + textual embeddings |
| ArtEmis dataset creation | Transformer-based model | Valence–Arousal | Caption + visual fusion |
| Human–AI emotion alignment Kakade et al. (2023) | Vision Transformer (ViT) | Dimensional | Color, form, contrast |
| Multimodal emotion fusion | CNN + Text BERT | Plutchik’s Model | Visual + textual + context |
| Emotion detection in abstract art Wu et al. (2025) | ResNet-50 | Russell Circumplex | Texture + composition |
| Emotional computing in abstract art | RBF-SVM, RF, ResNet-50, ViT | Valence–Arousal | Color, texture, shape, semantics |

3. Methodology

3.1. Selection of abstract artworks and datasets

The first step in ensuring that an emotional computing study is valid and varied is the selection of abstract artworks and datasets. Because abstract art is not grounded in real objects and is highly subjective, it requires a carefully chosen collection spanning diverse styles, colour schemes, and levels of emotional intensity. The artworks used in this research were drawn from open-source databases and digital art sources: WikiArt, Behance, and the DeviantArt Research Dataset. These sources provide high-resolution images together with metadata on the artist, the date of creation, and the style Brooks et al. (2023). Emotion labels contributed by annotators in affective image databases such as the International Affective Picture System (IAPS) and ArtEmis are also included. These databases relate visual attributes to people's emotional reactions to them. To ensure a good balance of styles, the collection covers works from major abstract movements such as Expressionism, Suprematism, Abstract Expressionism, and Minimalism. These movements span a wide range of emotions, from lively chaos to peaceful meditation. The selection criteria emphasise variety in visual properties, namely colour temperature, texture complexity, and rhythmic composition, all of which strongly affect viewers' feelings Tang et al. (2023).

3.2. Emotion recognition models and algorithms used

1) RBF-SVM

The Radial Basis Function Support Vector Machine (RBF-SVM) is employed as a conventional yet powerful method for classifying the emotions evoked by abstract art. It captures non-linear relationships between visual characteristics and emotion labels by using a Gaussian kernel to map the data implicitly into a higher-dimensional space. RBF-SVM suits small to medium-sized datasets that call for interpretability and precision. In this work, features such as colour variance, texture entropy, and shape descriptors are extracted and fed to the RBF-SVM model. Its margin-based classification allows it to separate subtle emotional variations. It also generalises well across a wide variety of abstract art styles with a low risk of overfitting.

· Step 1: Compute the RBF kernel

· Step 2: Formulate the SVM optimization objective

· Step 3: Apply the constraint for classification

· Step 4: Predict the emotion label
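For reference, the four steps above correspond to the standard soft-margin SVM with a Gaussian kernel. The notation below ($\gamma$ for the kernel width, $C$ for the penalty, $\xi_i$ for slack variables, $\alpha_i$ for dual coefficients, $\phi$ for the implicit feature map) is the textbook one and is assumed here rather than taken from the paper. The formulation is binary; a multi-class emotion classifier would typically wrap it in a one-vs-rest or one-vs-one scheme.

```latex
% Step 1: RBF (Gaussian) kernel between feature vectors x_i and x_j
K(\mathbf{x}_i, \mathbf{x}_j) = \exp\!\left(-\gamma \,\lVert \mathbf{x}_i - \mathbf{x}_j \rVert^{2}\right), \qquad \gamma > 0

% Step 2: soft-margin objective
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \;\; \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{n} \xi_i

% Step 3: classification constraints
y_i\left(\mathbf{w}^{\top}\phi(\mathbf{x}_i) + b\right) \ge 1 - \xi_i, \qquad \xi_i \ge 0, \quad i = 1, \dots, n

% Step 4: predicted label for a new artwork x
\hat{y}(\mathbf{x}) = \operatorname{sign}\!\left(\sum_{i=1}^{n} \alpha_i\, y_i\, K(\mathbf{x}_i, \mathbf{x}) + b\right)
```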

2) Random Forest

The Random Forest algorithm is an ensemble learning model that combines many decision trees to classify emotions more accurately. It draws random subsets of the data and of the features, which keeps the trees from being identical and reduces overfitting. Random Forests perform well when analysing the heterogeneous traits of abstract art, handling features such as hue histograms, contrast measures, and compositional balance. Each tree predicts an emotion class independently, and the final decision is made by majority vote. The method is easy to interpret, which helps determine which elements of an image influence emotion recognition the most. Because it is robust to noise and generalises well, it serves as a baseline against which more complex deep learning models are compared.

· Step 1: Create bootstrap samples from the training set

· Step 2: Train each decision tree using random feature subsets

· Step 3: Combine outputs through majority voting
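The sampling and voting parts of the three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the function names, the seed, the ten-row stand-in dataset, and the emotion labels are all hypothetical, and the tree-training part of Step 2 is deliberately left out.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Step 1: draw len(rows) training rows with replacement."""
    return [rng.choice(rows) for _ in rows]


def random_feature_subset(n_features, k, rng):
    """Step 2 (sampling part only): choose k distinct feature indices for one tree."""
    return sorted(rng.sample(range(n_features), k))


def majority_vote(tree_predictions):
    """Step 3: return the label predicted by the most trees."""
    return Counter(tree_predictions).most_common(1)[0][0]


rng = random.Random(0)   # fixed seed so the sketch is repeatable
rows = list(range(10))   # hypothetical stand-in for ten training examples
sample = bootstrap_sample(rows, rng)
features = random_feature_subset(n_features=6, k=2, rng=rng)
label = majority_vote(["calm", "excited", "calm", "sad", "calm"])
```

In a full forest, each tree would be fitted on its own `sample` restricted to its own `features`, and `majority_vote` would then be applied to the per-tree predictions for each artwork.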

3) ResNet-50

ResNet-50 is a deep convolutional neural network that uses residual connections to extract high-level emotional features from abstract art. Its 50-layer design mitigates the vanishing-gradient problem because it incorporates skip connections. This allows the model to learn low-level visual features and high-level emotional representations at the same time. ResNet-50 is pre-trained on large datasets such as ImageNet and then fine-tuned on the abstract art dataset so that the learnt filters become more sensitive to emotional qualities such as calm, chaos, and warmth. As a deep learning model, it identifies non-linear aesthetic patterns that go beyond simple descriptors. Its hierarchical feature learning makes complex emotions easier to classify, which makes it an important component for representing emotional states in abstract art.

· Step 1: Convolutional feature extraction

· Step 2: Introduce residual learning connection

· Step 3: Apply non-linear activation

· Step 4: Compute classification probability (Softmax)
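As a hedged reference for the four steps above, the standard residual-network operations can be written as follows. The symbols ($\mathbf{W}$, $\mathbf{b}$ for convolution weights and bias, $\mathcal{F}$ for the stacked layers inside a residual block, $z_c$ for the logit of emotion class $c$ out of $K$ classes) are the usual textbook notation and are assumptions here, not the paper's own.

```latex
% Step 1: convolutional feature extraction at layer l (* denotes convolution)
\mathbf{F}^{(l)} = \mathbf{W}^{(l)} * \mathbf{x}^{(l)} + \mathbf{b}^{(l)}

% Step 2: residual (skip) connection around a block of layers
\mathbf{y} = \mathcal{F}\!\left(\mathbf{x}, \{\mathbf{W}_i\}\right) + \mathbf{x}

% Step 3: non-linear activation (ReLU), applied element-wise
\mathbf{a} = \max(0, \mathbf{y})

% Step 4: softmax probability of emotion class c
P(c \mid \mathbf{x}) = \frac{\exp(z_c)}{\sum_{k=1}^{K} \exp(z_k)}
```

The identity term $+\,\mathbf{x}$ in Step 2 is what gives gradients a direct path through the network, which is how skip connections mitigate the vanishing-gradient problem.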

4) Vision Transformers

Vision Transformers (ViTs) are a recent state-of-the-art method for recognising emotional expression in images; they apply the transformer architecture originally developed for natural language processing. They split an artwork into image patches and treat the patches as tokens in a self-attention mechanism, which models how every part of the image relates to every other part. In contrast to CNNs, ViTs learn long-range relationships directly. This enables them to capture how colour zones and spatial patterns that evoke feeling interact across the composition. Fine-tuning pre-trained ViTs makes them more responsive to properties such as balance, rhythm, and contrast. Their interpretability and scalability make them well suited to examining how emotions manifest in complex abstract works.

· Step 1: Split image into patch embeddings

· Step 2: Compute scaled dot-product attention

· Step 3: Multi-head self-attention combination
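Steps 1 and 2 above can be illustrated with a small pure-Python sketch. It assumes the standard formulation Attention(Q, K, V) = softmax(QKᵀ / √d_k) V; the function names and the toy 4×4 "image" are hypothetical, and the learned linear projections, positional embeddings, and the multi-head combination of Step 3 are omitted for brevity.

```python
import math


def split_into_patches(image, p):
    """Step 1: cut an H x W grid into non-overlapping p x p patches, each flattened."""
    height, width = len(image), len(image[0])
    patches = []
    for r in range(0, height - p + 1, p):
        for c in range(0, width - p + 1, p):
            patches.append([image[r + i][c + j] for i in range(p) for j in range(p)])
    return patches


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Step 2: each query attends over all keys; output is a weighted sum of values."""
    d_k = len(K[0])
    output = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output


# Toy 4x4 single-channel image with pixel values 0..15
image = [[4 * r + c for c in range(4)] for r in range(4)]
patches = split_into_patches(image, 2)

# Two identical keys give uniform attention weights, so the output is the mean of V
attended = scaled_dot_product_attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[0.0, 2.0], [4.0, 6.0]])
```

Because every patch attends to every other patch in a single layer, distant colour zones can influence each other directly, which is the long-range behaviour the paragraph above describes.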

3.3. Computational techniques—image processing and deep learning

The computational procedures used to analyse the emotional content of abstract art combine basic image processing with more advanced deep learning techniques to locate and describe emotional features. To ensure consistency across files, preprocessing starts by normalising, resizing, and denoising the images. Conversions between the RGB, HSV, and LAB colour spaces isolate chromatic information that can be linked to emotional mood, whereas histogram equalisation increases contrast to reveal texture information. Edge detection and segmentation techniques identify the contours of a piece, which makes it possible to extract the shape and spatial patterns that influence how a viewer feels. Deep learning algorithms build on these basics, with convolutional and transformer-based models automating feature extraction. Convolutional Neural Networks (CNNs), such as ResNet-50, learn a hierarchy of features, from simple ones (changes in colour) to complex ones (overall aesthetic appeal). Vision Transformers (ViTs) add to this by modelling long-range relationships between visual regions, which captures global rhythm and order. The features produced by these models are projected into a multidimensional emotional space with dimensionality reduction techniques such as PCA or t-SNE for visualisation and clustering.
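Two of the preprocessing operations mentioned above, colour-space conversion and histogram equalisation, can be sketched with the Python standard library alone. This is an illustrative sketch, not the paper's pipeline: the function names and the sample pixel values are hypothetical, and a practical system would more likely use an image library on full-size arrays.

```python
import colorsys


def rgb_to_hsv_pixels(pixels):
    """Convert 8-bit (r, g, b) tuples to (h, s, v) tuples with components in [0, 1]."""
    return [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in pixels]


def equalize_histogram(gray, levels=256):
    """Spread grey levels using the cumulative histogram to increase contrast."""
    hist = [0] * levels
    for g in gray:
        hist[g] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)  # cumulative count at the darkest level present
    n = len(gray)
    if n == cdf_min:                         # constant image: nothing to stretch
        return list(gray)
    return [round((cdf[g] - cdf_min) / (n - cdf_min) * (levels - 1)) for g in gray]


hsv = rgb_to_hsv_pixels([(255, 0, 0), (0, 0, 255)])  # pure red, pure blue
equalized = equalize_histogram([50, 50, 100, 150])    # low-contrast grey values
```

After equalisation the darkest value present maps to 0 and the brightest to 255, so texture differences that were compressed into a narrow grey range become easier for later descriptors to pick up.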

4. Emotional Feature Extraction

1) Color, texture, and shape as emotion-inducing factors

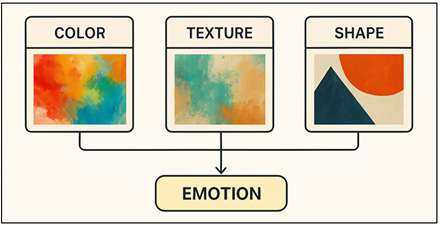

The basic visual elements of abstract art, namely colour, texture, and shape, have a significant impact on how viewers feel. Colour produces an immediate response. Warm colours such as red, orange, and yellow are associated with energy, excitement, and comfort, whereas cool colours such as blue and green are associated with peace, sadness, or calmness. The intensity of a feeling can change with colour saturation and brightness. This allows computational models to estimate valence and arousal from chromatic distributions. Texture, in turn, provides tactile cues that make viewers feel tense or calm by creating an illusion of depth and complexity.

Figure 2 Conceptual Diagram of Color, Texture, and Shape as Emotion-Inducing Factors in Abstract Art Analysis

Rough and disorganised patterns are perceived as exciting or distressing, whereas smooth, fine-grained textures make people feel calm. Form influences emotion through the way elements are organised and appear to move. Figure 2 illustrates how these visual elements can trigger emotions in abstract art. Sharp angles and irregular geometric shapes suggest motion and anger, whereas rounded and regular shapes suggest calmness and ease. Computational analysis extracts these characteristics using descriptors such as Gabor filters, Local Binary Patterns (LBP), and edge-based segmentation. By relating these visual descriptors to emotional dimensions, the models can approximate the way human beings perceive affect in abstract compositions.
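To make one of the named texture descriptors concrete, the following is a minimal sketch of the basic 8-neighbour Local Binary Pattern. The neighbour ordering (clockwise from the top-left pixel), the `>=` comparison, and the function names are assumptions chosen for illustration; LBP variants differ in these details.

```python
def lbp_code(img, r, c):
    """Return the 8-bit LBP code of the pixel at (r, c) in a 2-D list of grey values."""
    center = img[r][c]
    # Neighbour offsets, clockwise starting at the top-left pixel
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit  # set the bit when the neighbour is at least as bright
    return code


def lbp_histogram(img):
    """Histogram of LBP codes over all interior pixels; usable as a texture feature vector."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


flat = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]     # perfectly smooth patch
peak = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]     # isolated bright pixel
corner = [[9, 0, 0], [0, 5, 0], [0, 0, 0]]   # only the top-left neighbour is brighter
codes = (lbp_code(flat, 1, 1), lbp_code(peak, 1, 1), lbp_code(corner, 1, 1))
```

Smooth regions concentrate the histogram in a few codes, while rough or disorganised textures spread it across many, which is one way the smooth-versus-rough distinction described above becomes a numeric feature.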

2) Visual-semantic mapping and affective computing models

Another vital process is visual-semantic mapping, which links the visual details observed in an image to the emotions they evoke. In emotional computing, the mapping transforms visual properties such as colour schemes, spatial balance, or visual flow into subjective concepts in a conceptual space. Traditionally, models related features to emotions through hand-crafted rules, for example that red corresponds to arousal. Modern affective computing instead uses deep learning to learn data-driven mappings. The models are trained to recognise relations between a large feature space and emotion labels. This allows the system to infer complex emotional meaning from image content alone. Emotion models such as the Geneva Emotion Wheel and Russell's Circumplex Model support this translation by organising feelings along dimensions of valence and arousal. Neural networks combine these dimensions with learnt visual embeddings to form continuous emotion spaces. In these spaces, artworks are positioned according to the similarity of the feelings they evoke in people.
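A continuous valence-arousal space can be related back to named emotions with a simple quadrant rule. The sketch below assumes both dimensions are scaled to [-1, 1]; the four labels are borrowed from the emotional states named in the introduction, and the quadrant assignment and function names are illustrative assumptions rather than the paper's mapping.

```python
import math


def circumplex_label(valence, arousal):
    """Map a (valence, arousal) point in [-1, 1]^2 to a coarse quadrant label."""
    if arousal >= 0:
        return "excitement" if valence >= 0 else "stress"
    return "calmness" if valence >= 0 else "sadness"


def polar_form(valence, arousal):
    """Return (angle in degrees, intensity) of the point on the circumplex."""
    angle = math.degrees(math.atan2(arousal, valence)) % 360
    intensity = math.hypot(valence, arousal)
    return angle, intensity


labels = [
    circumplex_label(0.8, 0.6),    # pleasant, activated
    circumplex_label(-0.5, 0.7),   # unpleasant, activated
    circumplex_label(0.4, -0.6),   # pleasant, deactivated
    circumplex_label(-0.3, -0.2),  # unpleasant, deactivated
]
angle, intensity = polar_form(0.0, 1.0)
```

The polar form is convenient when comparing artworks: the angle says which kind of feeling a prediction points towards, and the radius says how strongly.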

3) Integration of physiological and psychological emotion data

Including physiological and psychological data in emotional computing models improves the modelling of perceived emotion in the analysis of abstract art. Objectively measurable physiological indicators of emotional arousal include heart rate variability (HRV), electrodermal activity (EDA), and pupil dilation, recorded while a person views art. When such physiological reactions are synchronised with image stimuli, they show the viewer's degree of emotional engagement in real time, linking computational inference to human emotional experience. Gaze patterns can also be derived from eye-tracking data, which helps determine exactly which parts of the composition have the strongest impact on how viewers feel. Psychological data are gathered through self-report questionnaires, mood ratings, and semantic differentials, and indicate how individuals actually feel, for example happiness, awe, or stress. Once these reports are related to the physiological readings, models can learn the intricate relations between sensory information and internal emotional states. Multimodal fusion techniques such as late fusion, attention-based weighting, or canonical correlation analysis are applied within the machine learning pipeline to combine these data sources. The combination brings emotional modelling closer to human emotional processing, which makes it more accurate and easier to interpret.
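Of the fusion techniques listed above, late fusion is the simplest to show: each modality produces its own class probabilities, and these are combined by a weighted average. The sketch below is a minimal illustration; the modality names, probabilities, and equal weights are hypothetical values, not results from the study.

```python
def late_fusion(modality_probs, weights):
    """Weighted average of per-modality class probabilities.

    modality_probs: {modality name: {label: probability}}
    weights:        {modality name: non-negative weight}
    """
    labels = list(next(iter(modality_probs.values())))
    total_weight = sum(weights[name] for name in modality_probs)
    return {
        label: sum(weights[name] * probs[label] for name, probs in modality_probs.items()) / total_weight
        for label in labels
    }


# Hypothetical outputs of a visual model and a physiological-signal model
predictions = {
    "visual": {"calm": 0.6, "stress": 0.4},
    "physiological": {"calm": 0.2, "stress": 0.8},
}
weights = {"visual": 1.0, "physiological": 1.0}

fused = late_fusion(predictions, weights)
top_label = max(fused, key=fused.get)
```

Here the visual model alone would predict "calm", but the physiological signal shifts the fused decision to "stress". Attention-based weighting can be seen as learning the `weights` per sample instead of fixing them in advance.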

5. Computational Framework

1) System architecture for emotional analysis

The proposed system design for analysing emotion in abstract art integrates data collection, feature extraction, model training, and emotion prediction into one computational pipeline. The design consists of three main layers: the visual processing layer, the emotion modelling layer, and the evaluation and interpretation layer. The visual processing layer handles image preparation tasks such as normalisation, colour-space conversion, and segmentation. This ensures that all artworks have consistent data quality. The extracted visual features, including colour histograms, texture descriptors, and geometric patterns, are passed to the emotion modelling layer. This layer relies on machine learning and deep learning models, including RBF-SVM, Random Forest, ResNet-50, and Vision Transformers. The emotion modelling layer maps visual representations to affective dimensions such as valence and arousal. Multimodal learning techniques are introduced at this level to integrate physiological and psychological records, which makes the system more emotionally sensitive.

2) Algorithm design and data flow

The algorithm design for emotional computing in abstract art follows a planned, data-driven path from data acquisition to emotional inference. The process begins by loading high-resolution images of artworks. The images are then preprocessed by resizing, normalising, and colour adjustment to ensure consistent input dimensions and illumination. Once cleaned, the images are forwarded to feature extraction modules. These modules use deep networks such as ResNet-50 and Vision Transformers to obtain high-level semantic features alongside low-level characteristics such as colour, texture, and edges. The feature vectors are then combined and normalised, and an emotion labelling step follows. Conventional machine learning methods, such as RBF-SVM and Random Forest, use hand-crafted features to assign works to simple categories. Deep networks, in contrast, capture broader emotional associations through learnt embeddings. Training uses emotional ground-truth data in the form of human annotations.

6. Results and Findings

According to the experiments, deep learning models, in particular Vision Transformers and ResNet-50, performed better at emotion recognition than classical algorithms such as RBF-SVM and Random Forest. Vision Transformers were particularly good at capturing global compositional relationships, which resulted in closer agreement with human emotion ratings. Using multimodal data improved the accuracy of emotion prediction further.

Table 2 Model Performance Comparison on Emotion Classification

| Model | Accuracy (%) | Precision | Recall | F1-Score |
|---|---|---|---|---|
| RBF-SVM | 78.4 | 0.76 | 0.74 | 0.75 |
| Random Forest | 81.2 | 0.79 | 0.78 | 0.78 |
| ResNet-50 | 89.7 | 0.88 | 0.87 | 0.87 |
| Vision Transformer (ViT) | 92.4 | 0.91 | 0.90 | 0.91 |
| Human Baseline (avg.) | 94.1 | 0.93 | 0.92 | 0.93 |

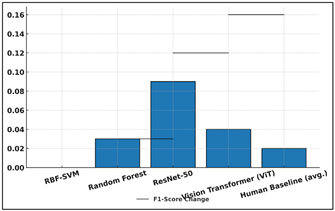

The results in Table 2 indicate that the accuracy of emotion classification improved as model capacity increased. Classical machine learning techniques such as RBF-SVM and Random Forest achieved only moderate accuracy (78.4% and 81.2%, respectively). Figure 3 compares the accuracy of the vision models on emotion detection.

Figure 3 Comparison of Model Accuracy Across Vision Architectures

The classical models described low-level visual features such as colour and texture well, but lacked the representational capacity to capture the complex emotional signals involved in abstract art. Figure 4 shows how the F1-score increases progressively across the vision models.

Figure 4 Incremental Improvement in F1-Score Across Vision Models

Deep learning models improved significantly on this. With its hierarchical feature learning and residual connections, ResNet-50 achieved 89.7% accuracy, which reflects its ability to discover complex patterns in aesthetics and composition. The Vision Transformer (ViT) achieved 92.4% and outperformed all the other models, which suggests that it models global relationships between image patches more effectively through self-attention.
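Because the F1-score is the harmonic mean of precision and recall, the F1 column of Table 2 can be cross-checked from the other two columns. The short sketch below does this for three rows; the function and variable names are illustrative, and the inputs are the two-decimal values printed in the table, so agreement is only expected up to rounding.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs as printed in Table 2
table_2 = {
    "RBF-SVM": (0.76, 0.74),
    "Random Forest": (0.79, 0.78),
    "ResNet-50": (0.88, 0.87),
}
recomputed = {model: round(f1_score(p, r), 2) for model, (p, r) in table_2.items()}
```

For these three rows the recomputed values match the tabulated F1-scores of 0.75, 0.78, and 0.87. The ViT and human-baseline rows recompute to roughly 0.905 and 0.925 from the rounded inputs, which is compatible with the tabulated 0.91 and 0.93 if the unrounded precision and recall were slightly higher.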

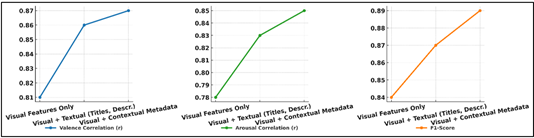

Table 3 Effect of Multimodal Inputs on Emotional Prediction

| Input Type | Valence Correlation (r) | Arousal Correlation (r) | F1-Score |
|---|---|---|---|
| Visual Features Only | 0.81 | 0.78 | 0.84 |
| Visual + Textual (Titles, Descr.) | 0.86 | 0.83 | 0.87 |
| Visual + Contextual Metadata | 0.87 | 0.85 | 0.89 |

Table 3 shows how integrating different kinds of information affects the accuracy of emotion prediction in the analysis of abstract art. The findings indicate that visual features alone yield strong performance: the valence and arousal correlations are 0.81 and 0.78, respectively, and the F1-score is 0.84. Figure 5 presents the effect of multimodal input on the emotion prediction metrics.

Figure 5 Multimodal Input Impact on Emotion Prediction Metrics

Perceptual elements such as colour, texture, and form remain the most significant carriers of emotion in visual art. Textual data, such as the title of the artwork and its description, add semantic depth to the system's analysis, raising the correlations to 0.86 for valence and 0.83 for arousal. The textual context gives the model cues about meaning and theme that help it infer the emotional tone the artist intended. Including contextual metadata, such as the period in which a piece was created, its artistic movement, or its history, improves affective prediction further and achieves the highest F1-score of 0.89.
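The valence and arousal correlations in Table 3 are reported as r values, which suggests a Pearson correlation between model predictions and human ratings; that reading is an assumption, since the exact correlation measure is not stated. Under that assumption, the metric can be sketched as follows, with a hypothetical function name and made-up toy ratings used only to exercise the formula.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# Toy data: predictions that track the human ratings perfectly, then inversely
human_valence = [1.0, 2.0, 3.0]
aligned = pearson_r(human_valence, [2.0, 4.0, 6.0])
inverted = pearson_r(human_valence, [3.0, 2.0, 1.0])
```

A value near 1 means the model ranks and scales artworks the way human raters do, so the rise from 0.81 to 0.87 for valence in Table 3 indicates closer agreement with human judgments as more context is added.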

7. Conclusion

This paper develops a complete framework for applying emotional computing to the analysis of abstract art. It does so by combining computational aesthetics, affective modelling, and deep learning to determine how non-representational art makes people feel. The research indicates that feelings can be inferred even when nothing recognisable is depicted. This is achieved by systematically combining the perceptual properties of colour, texture, and form with semantic and contextual cues. Compared with classical machine learning algorithms such as RBF-SVM and Random Forest, deep architectures such as ResNet-50 and Vision Transformers classified emotions more accurately and more interpretably. Merging physiological and psychological emotion data with other forms of information gave a fuller picture of how people respond, bridging the gap between human experience and machine perception. The evaluation metrics revealed a close relationship between the model predictions and human emotional judgments, which demonstrates the system's ability to approximate artistic sensibility. Moreover, feature analysis and attention visualisation helped show how visual and contextual factors influence how viewers feel. The outcomes contribute to the future of emotional computing by demonstrating that it can be applied to abstract art, an ambiguous and subjective field. Beyond the technical progress, this research highlights the psychological side of emotional computing, showing that computers can approximate human emotional understanding through algorithmic empathy. Future directions include real-time emotion-adaptive interfaces, larger multimodal datasets, and cross-cultural emotion modelling.

This paper brings together art, psychology, and computing to deepen the discussion of human creativity and artificial emotional intelligence.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Alzoubi, A. M. A., Qudah, M. F. A., Albursan, I. S., Bakhiet, S. F. A., and Alfnan, A. A. (2021). The Predictive Ability of Emotional Creativity in Creative Performance Among University Students. SAGE Open, 11(2), Article 215824402110088. https://doi.org/10.1177/21582440211008876

Botchway, C. N. A. (2022). Emotional Creativity. In S. M. Brito and J. Thomaz (Eds.), Creativity. IntechOpen. https://doi.org/10.5772/intechopen.104544

Brooks, T., Holynski, A., and Efros, A. A. (2023). InstructPix2Pix: Learning to Follow Image Editing Instructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2023) ( 18392–18402). https://doi.org/10.1109/CVPR52729.2023.01764

Chatterjee, S. (2024). DiffMorph: Text-Less Image Morphing with diffusion models (arXiv Preprint No. 2401.00739). arXiv.

Deonna, J., and Teroni, F. (2025). The Creativity of Emotions. Philosophical Explorations, 28(2), 165–179. https://doi.org/10.1080/13869795.2025.2471824

Kakade, S. V., Dabade, T. D., Patil, V. C., Vidyapeeth, K. V., Ajani, S. N., Bahulekar, A., and Sawant, R. (2023). Examining the Social Determinants of Health in Urban Communities: A Comparative Analysis. South Eastern European Journal of Public Health, 21, 111–125.

Prudviraj, J., and Jamwal, V. (2025). Sketch and paint: Stroke-By-Stroke Evolution of Visual Artworks (arXiv Preprint No. 2502.20119). arXiv.

Rombach, R., Blattmann, A., Lorenz, D., Esser, P., and Ommer, B. (2022). High-Resolution Image Synthesis with Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2022) (10674–10685). https://doi.org/10.1109/CVPR52688.2022.01042

Sundquist, D., and Lubart, T. (2022). Being Intelligent With Emotions To Benefit Creativity: Emotion Across The Seven Cs Of Creativity. Journal of Intelligence, 10(4), Article 106. https://doi.org/10.3390/jintelligence10040106

Tang, R., Liu, L., Pandey, A., Jiang, Z., Yang, G., Kumar, K., Stenetorp, P., Lin, J., and Ture, F. (2023). What the DAAM: Interpreting Stable Diffusion Using Cross Attention. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (ACL 2023) ( 5644–5659). https://doi.org/10.18653/v1/2023.acl-long.310

Wu, Y., Nakashima, Y., and Garcia, N. (2023). Not Only Generative Art: Stable Diffusion for Content-Style Disentanglement in art Analysis. In Proceedings of the 2023 International Conference on Multimedia Retrieval (ICMR 2023) (199–208). https://doi.org/10.1145/3591106.3592262

Wu, Z., Gong, Z., Ai, L., Shi, P., Donbekci, K., and Hirschberg, J. (2025). Beyond Silent Letters: Amplifying LLMs in Emotion Recognition with Vocal Nuances. In Findings of the Association for Computational Linguistics: NAACL 2025 (2202–2218). Association for Computational Linguistics. https://doi.org/10.18653/v1/2025.findings-naacl.117

Xu, L., Wang, Z., Wu, B., and Lui, S. S. Y. (2022). MDAN: Multi-Level Dependent Attention Network for Visual Emotion Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2022) (pp. 9469–9478). https://doi.org/10.1109/CVPR52688.2022.00926

Yang, J., Gao, X., Li, L., Wang, X., and Ding, J. (2021). SOLVER: Scene-Object Interrelated Visual Emotion Reasoning Network. IEEE Transactions on Image Processing, 30, 8686–8701. https://doi.org/10.1109/TIP.2021.3118983

Yang, J., Huang, Q., Ding, T., Lischinski, D., Cohen-Or, D., and Huang, H. (2023). EmoSet: A lArge-Scale Visual Emotion Dataset with Rich Attributes. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV 2023) ( 20326–20337). https://doi.org/10.1109/ICCV51070.2023.01864

Yang, J., Li, J., Wang, X., Ding, Y., and Gao, X. (2021). Stimuli-Aware Visual Emotion Analysis. IEEE Transactions on Image Processing, 30, 7432–7445. https://doi.org/10.1109/TIP.2021.3106813


This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.