ShodhKosh: Journal of Visual and Performing Arts ISSN (Online): 2582-7472

AI-Enabled Peer Review in Visual Education

Kalpana Rawat 1, Dr. Sadaf Hashmi 2, Shivam Khurana 3, Dr. Shweta Kashid 4, Sukhman Ghumman 5, Dr. Tapasmini Sahoo 6

1 Assistant Professor, School of Business Management, Noida International University, Greater Noida, Uttar Pradesh, India

2 Associate Professor, ISME - School of Management and Entrepreneurship, ATLAS Skill Tech University, Mumbai, Maharashtra, India

3 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, India

4 Department of Biotechnology, Sinhgad College of Engineering, Affiliated to Savitribai Phule Pune University, Pune, Maharashtra, India

5 Centre of Research Impact and Outcome, Chitkara University, Rajpura, Punjab, India

6 Associate Professor, Department of Electronics and Communication Engineering, Institute of Technical Education and Research, Siksha 'O' Anusandhan (Deemed to be University), Bhubaneswar, Odisha, India

ABSTRACT

Introducing artificial intelligence (AI) into peer review may change visual education, where comment and critique are among the most significant learning processes. This study examines how and why an AI-enabled peer review system can be designed and used in art, design, and visual communication, and what impact it has. Traditional peer review in visual education is pedagogically useful, but it is subjective, inconsistent in quality, and time-consuming. To address these issues, the paper proposes a hybrid system that combines human expertise with AI-driven feedback. The research uses mixed methods to explore students' perceptions and the validity of their comments. The core of the system comprises support vector machines (SVM), GPT-NeoX, and convolutional neural networks (CNN). The models consider both the visual work and the written critiques so that the feedback is relevant to the context and easy to understand. Transparency, explainability, and the human-AI interface are emphasized to ensure that the feedback is useful and consistent with best practices in education. At the implementation stage, the system is integrated into existing school processes, and its usefulness and effectiveness in the classroom are tested. The evidence shows that AI-assisted peer review can produce more consistent reviews and support reflective learning while easing the pressure on teachers, without adversely affecting students' creativity.


Received 18 January 2025

Accepted 11 April 2025

Published 10 December 2025

Corresponding Author: Kalpana Rawat, kalpana.rawat@niu.edu.in

DOI: 10.29121/shodhkosh.v6.i1s.2025.6665

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Artificial Intelligence, Visual Education, Peer Review, Machine Learning, Human-AI Interaction

1. INTRODUCTION

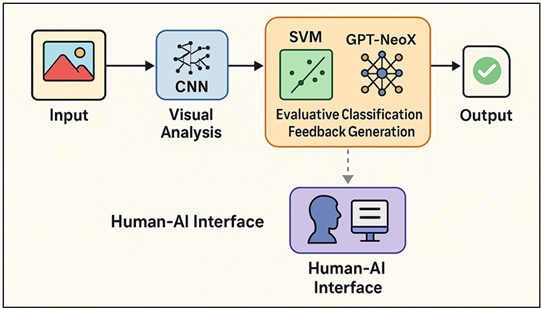

Visual education, which includes fine arts, design, photography, and digital media, relies heavily on critical thinking. Peer review in particular is an interactive learning tool that engages students in discussing, analysing, and reflecting on their creative work. It helps students develop critical thinking, aesthetic judgement, and the ability to give constructive feedback. Nevertheless, conventional peer review procedures in visual education are often problematic: they are subjective, ratings are hard to compare, and reviewers differ in their level of knowledge. These problems can make critique-based learning environments inequitable and less effective. Artificial Intelligence (AI) has emerged as an effective change agent in education over the last several years, offering flexible, data-driven, and personalised learning opportunities. AI applications are no longer mere automation tools but complex systems that can write, analyse images, and approximate human reasoning. In visual education, AI can do more than recognize images and produce educational materials; it can also interpret, comment on, and deconstruct visual and artistic works. With such tools, peer review can become more organized, transparent, and fair Gautam et al. (2022). An AI-based peer review system can act as both an assistant and a reviewer. Using machine learning algorithms and natural language processing models, such a system can analyze visual compositions, determine how they relate to established aesthetic principles, and interpret written feedback to help keep it consistent and impartial. Figure 1 illustrates a collaborative AI model for interactive, reflective, and adaptive peer feedback.
The system fuses visual perception with language reasoning using Support Vector Machines (SVMs), GPT-NeoX, and Convolutional Neural Networks (CNNs) Demiray et al. (2021). CNNs perceive images, SVMs assist in sorting and identifying design patterns, and GPT-based models produce responses that are relevant to the context and read as though written by a human.

Figure 1 Multimodal AI Architecture for Peer Review in Visual Education

All these technologies are interconnected to form a complete system that evaluates visual work and delivers useful information in real time. AI-based feedback systems also scale well, which is useful in large classes or online courses where teachers can hardly reach every student with feedback Li and Demir (2023). By automatically running simple checks and providing feedback, AI lets teachers spend more time on more complex coaching and on the exploration of art. It must be pointed out that the aim is not to replace human judgment but to supplement it. The goal is to make peer review as cooperative, considerate, and pedagogical as possible, and as free of prejudice and error as possible. AI-assisted peer review is also consistent with broader educational trends that emphasise personalised and data-driven learning Ewing et al. (2022). By continuously monitoring how learners engage with the system and how they perform, AI systems can adapt their feedback to each learner's style and encourage self-regulated, thoughtful learning.

2. Literature Review

1) Traditional peer review methods in visual education

Peer review has long played a significant role in visual education. It fosters a learning culture that values feedback, discussion, and self-reflection. Peer review is an established practice in art and design courses, where students share work such as drawings, digital designs, and multimedia projects and have their peers review it. Asking students to explain what they like about a design and why teaches them to think critically about visual design and to judge what works and what does not. According to Schön's theory of reflection-in-action, feedback helps students think more critically about artistic purpose and design by enabling them to reflect on the process of creating as well as the product Sit et al. (2021). Teachers often use structured review formats, including guided rubrics, verbal discussion, and written feedback forms, to keep ratings consistent and on track. These sessions not only familiarise students with technical and conceptual issues but also develop communication skills and artistic confidence. Traditional peer review, however, is a social and subjective process. It is shaped by the interactions between participants, their experience, and the culture of the classroom Ramirez et al. (2022). This subjectivity can enrich the conversation, but it also raises concerns about bias, uneven rating standards, and varying depth of critique.

2) Limitations and challenges of human-based critique

Human critique offers deep and subtle understanding, but it is also affected by limitations in human teaching and reasoning that reduce its reliability and fairness. Subjectivity is a major issue, since students and teachers differ in what they consider quality because of taste, culture, or experience with artworks Ramirez et al. (2023). The more diverse the student body, the less consistent marking and comments tend to be. Grading can also be affected by conscious and unconscious bias, which can dishearten students whose work does not conform to the "rules" of a style or to the teacher's expectations. Another issue is that the quality of comments varies. Some students may be unable to provide useful feedback, so their comments can be too vague, overly complimentary, or even offensive Huang et al. (2021). This unevenness makes peer review less useful as a support for learning. The emotional impact of criticism can also alter how students interpret and react to it, making them defensive or less confident in their abilities as artists. In large classes these problems are compounded by time constraints: teachers cannot help each student in sufficient detail, and peer reviews may lack depth or follow-up Essel et al. (2022).

3) Overview of AI applications in art, design, and visual learning

Artificial intelligence (AI) is increasingly used in art, design, and visual education. It has opened new possibilities for creativity, for challenging students, and for personalizing learning. Early AI systems for the visual arts focused on image recognition, style transfer, and generative art. Using Convolutional Neural Networks (CNNs), Generative Adversarial Networks (GANs), and other methods, they were able to imitate artistic styles or create new pieces Crompton and Song (2021). As the technology improved, the role of AI grew beyond creation to include review and teaching. It now allows teachers and students to analyse visual information, comment on it, and track their creative progress. Recent research shows that machine learning models can classify design elements, check the balance of compositions, and assess stylistic consistency in student work. With natural language processing (NLP) models such as GPT-based models, automatic, human-like critique of and commentary on visual artefacts has become possible Sajja et al. (2023). These systems draw on large collections of reviews and artworks and can identify trends and generate useful insights. AI has also enabled adaptive learning environments, in which data on each student's performance is used to personalize learning so that students master skills as well as concepts. Table 1 summarises AI tools for creativity, evaluation, and collaborative learning.

Table 1 Summary of AI-Enabled Peer Review and Visual Education

| Domain | Methodology | AI Model Used | Focus Area | Findings |
|---|---|---|---|---|
| Design Education | Experimental Study | CNN | Automated Design Evaluation | Improved grading consistency |
| Art Critique Lateef et al. (2025) | Case Study | GPT-2 | Text-Based Art Feedback | Enhanced critique clarity |
| Visual Communication | Mixed-Methods | SVM + CNN | Peer Review Automation | Reduced bias, faster review |
| Graphic Design | Quasi-Experimental | CNN | Composition Analysis | Accurate aesthetic scoring |
| Digital Media Learning Lee (2023) | Survey | GPT-NeoX | Creative Feedback Generation | Encouraged reflection |
| Design Pedagogy Perkins (2023) | Longitudinal | GAN + CNN | Artwork Evaluation | Improved creativity metrics |
| Art Education | Comparative Study | Hybrid AI Model | Peer Collaboration | Boosted engagement |
| Visual Arts Audras et al. (2022) | Pilot Implementation | GPT-4 | Automated Peer Assessment | Scalable critique process |

3. Research Design and Methodology

1) Research paradigm and justification

This study uses a mixed-methods approach, combining quantitative and qualitative methods, to examine how AI-enabled peer review can be used in visual education. The approach suits the study's two objectives: testing how accurately the AI model produces relevant and correct feedback, and investigating how AI-assisted analysis affects students' experiences and achievements. The quantitative analysis evaluates the accuracy, consistency, and turnaround time of the feedback, while the qualitative research addresses the cognitive, emotional, and creative aspects of human-AI interaction Kasneci et al. (2023). The interpretivist side of the paradigm recognises that creative learning and critique are socially constructed and situational, so subjective experiences call for nuanced interpretation. The realist perspective, on the other hand, holds that AI models can be validated with rigorous evaluation measures such as accuracy, recall, and correlation with grades assigned by teachers. Combining these perspectives keeps the work technically rigorous and educationally relevant. Data collection has two components: (1) testing and calibrating the AI system by comparing its numerical outputs with expert opinions, and (2) applying the AI in the classroom and collecting qualitative data through focus groups, interviews, and direct observation. Using several methods allows their results to be triangulated, which strengthens validity and reliability.
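The quantitative validation described above compares AI outputs with expert judgements. The following is a minimal sketch of three such measures: classification accuracy, recall, and Pearson correlation with teacher-assigned grades. The function names and the sample values are illustrative assumptions, not data or code from this study.

```python
from math import sqrt


def accuracy(pred, truth):
    """Fraction of items where the AI label matches the expert label."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


def recall(pred, truth, positive=1):
    """Of the items experts marked positive, the fraction the AI also marked positive."""
    tp = sum(p == positive and t == positive for p, t in zip(pred, truth))
    fn = sum(p != positive and t == positive for p, t in zip(pred, truth))
    return tp / (tp + fn) if (tp + fn) else 0.0


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of scores."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical example values (1 = "meets the rubric", 0 = "does not").
ai_labels = [1, 0, 1, 1, 0, 1]
expert_labels = [1, 0, 0, 1, 1, 1]
ai_scores = [70.0, 55.0, 82.0, 90.0, 60.0]
teacher_grades = [68.0, 58.0, 80.0, 93.0, 61.0]

print(accuracy(ai_labels, expert_labels))
print(recall(ai_labels, expert_labels))
print(pearson(ai_scores, teacher_grades))
```

Accuracy and recall apply to categorical ratings, while the correlation applies to numeric scores, so a calibration step would typically report all three.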

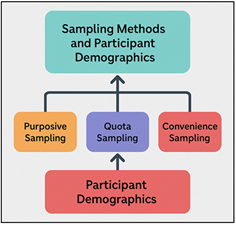

2) Sampling methods and participant demographics

Purposive sampling was used to select people who work directly in visual education fields such as fine arts, graphic design, and multimedia studies. This ensured that participants had appropriate artistic experience and knew how to give and receive group feedback. Sixty people were invited to participate: 48 students and 12 staff from two universities with design programs. Students ranged in skill level from beginners learning the basics to seniors producing professional presentations. The faculty included teachers specialising in design theory, visual communication, and digital media. Figure 2 shows the sampling framework, the participant groups, and the distribution of the study.

Figure 2 Structure of Sampling Methods and Participant Demographics in the Study

The mix of participants was intended to represent a broad range of review styles, scientific knowledge, and aesthetic views. Participants ranged in age from 19 to 42, with equal numbers of men and women, and came from a wide range of ethnic groups.

3) Description of AI tools and algorithms used

The AI-powered peer review system combines several machine learning models, each handling a different aspect of visual analysis and feedback generation. The main methods are Support Vector Machines (SVMs), Convolutional Neural Networks (CNNs), and GPT-NeoX, a large language model used to understand and generate natural language. The language model was fine-tuned on sets of human-written art reviews, design comments, and educational notes. This enabled the AI to provide feedback that was understandable, helpful, and human-like. The design emphasised explainability and interpretability, so that it was clear how decisions were made.

4. AI System Architecture and Design

4.1. Technical overview of the AI model

1) Support Vector Machines

Support Vector Machines (SVMs) were used to classify and rate visual patterns according to measurable design principles such as contrast, balance, and symmetry. The model finds the hyperplanes that best separate data points, described by their visual features, into distinct groups for evaluation. In this system, SVMs examine the features extracted from images and assign performance ratings in line with standards of aesthetic quality. Their strength is that they handle high-dimensional feature spaces and remain robust even when training data is limited. The SVM outputs provide structured, quantitative results that complement qualitative human input. This helps ensure that visual work is assessed objectively and consistently in peer review.

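Step 1: Feature Mapping

Extract features from an image $x \in \mathbb{R}^d$; optionally map via kernel:

$$K(x_i, x_j) = \phi(x_i)^\top \phi(x_j)$$

Step 2: Train with Soft-Margin Objective

Given labels $y_i \in \{+1, -1\}$, solve:

$$\min_{w,\, b,\, \xi} \ \frac{1}{2}\lVert w \rVert^2 + C \sum_{i=1}^{n} \xi_i$$

subject to: $y_i \left( w^\top \phi(x_i) + b \right) \ge 1 - \xi_i, \quad \xi_i \ge 0$

Step 3: Dual and Support Vectors

Dual solution $\alpha$ gives:

$$f(x) = \operatorname{sign}\left( \sum_{i=1}^{n} \alpha_i y_i K(x_i, x) + b \right)$$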
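The dual-form decision rule in Step 3 can be illustrated with a minimal sketch in plain Python. It assumes an RBF kernel and uses hand-picked support vectors, coefficients, and feature names (contrast, balance); none of these values come from the trained model in this study.

```python
from math import exp


def rbf_kernel(u, v, gamma=0.5):
    """RBF kernel K(u, v) = exp(-gamma * ||u - v||^2)."""
    return exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def decision_value(x, support_vectors, alphas, labels, bias, kernel=rbf_kernel):
    """Dual-form SVM score: sum_i alpha_i * y_i * K(x_i, x) + b."""
    return sum(
        a * y * kernel(sv, x)
        for sv, a, y in zip(support_vectors, alphas, labels)
    ) + bias


def classify(x, support_vectors, alphas, labels, bias):
    """Return +1 or -1 depending on the sign of the decision value."""
    return 1 if decision_value(x, support_vectors, alphas, labels, bias) >= 0 else -1


# Hypothetical model: each feature vector is (contrast, balance) in [0, 1].
support_vectors = [(0.9, 0.8), (0.2, 0.1)]
alphas = [1.0, 1.0]   # satisfies sum_i alpha_i * y_i = 0
labels = [+1, -1]     # +1 = meets the design standard, -1 = does not
bias = 0.0

print(classify((0.85, 0.75), support_vectors, alphas, labels, bias))
print(classify((0.10, 0.20), support_vectors, alphas, labels, bias))
```

Only the support vectors enter the sum, which is why a trained SVM can score a new submission without revisiting the full training set.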

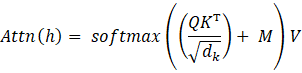

2) GPT-NeoX

The AI-powered peer review system relies on the language and interpretation capabilities of GPT-NeoX, a large-scale transformer-based language model. It learns to interpret written feedback from varied collections of art criticism, design theory, and educational discourse. GPT-NeoX turns the analytical data from the SVM and CNN into critique that reads as though a person wrote it, showing empathy, reasoning, and an educational tone. Fine-tuning emphasises interpretability, which allows language generation to be adapted to particular learning aims and creative contexts. Its ability to vary tone and depth keeps the feedback suitable for learners at all levels, connecting machine analysis with human educational expression in visual learning settings.

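Step 1: Token and Embedding

Tokenize sequence $x = (x_1, \dots, x_T)$

$h_t^{(0)} = E[x_t] + p_t$ (word + positional embedding)

Step 2: Causal Self-Attention

$$\mathrm{Attn}(Q, K, V) = \mathrm{softmax}\left( \frac{Q K^\top}{\sqrt{d_k}} + M \right) V, \qquad M_{ij} = \begin{cases} 0 & j \le i \\ -\infty & j > i \end{cases}$$

Step 3: Feed-Forward Block

$$u = \mathrm{GELU}(W_1 h + b_1)$$

$$\mathrm{FFN}(h) = W_2 u + b_2$$

Step 4: Next-Token Distribution

$$z_t = W_o h_t^{(L)}$$

$$p(x_{t+1} \mid x_{\le t}) = \mathrm{softmax}(z_t)$$

Predicted token: $\hat{x}_{t+1} = \arg\max_{v} \, p(x_{t+1} = v \mid x_{\le t})$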
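The causal self-attention in Step 2 is the part of the model that prevents a token from looking at later tokens. A minimal single-head sketch in plain Python is given here as an illustration; it omits the learned projections, multiple heads, and other details of GPT-NeoX, and the toy vectors are assumptions chosen only to make the masking visible.

```python
from math import exp, sqrt


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(Q, K, V):
    """Single-head scaled dot-product attention with a causal mask.

    Q, K, V are lists of T vectors. Position t attends only to
    positions 0..t, which is equivalent to adding -inf to the
    scores of all later positions before the softmax.
    """
    d_k = len(K[0])
    out = []
    for t, q in enumerate(Q):
        scores = [
            sum(a * b for a, b in zip(q, K[j])) / sqrt(d_k)
            for j in range(t + 1)
        ]
        weights = softmax(scores)
        out.append([
            sum(w * V[j][c] for j, w in enumerate(weights))
            for c in range(len(V[0]))
        ])
    return out


# Toy sequence of two tokens with 2-dimensional vectors.
X = [[1.0, 0.0], [0.0, 1.0]]
result = causal_attention(X, X, X)
print(result[0])  # first token can only attend to itself
print(result[1])  # second token mixes both positions
```

Because the first output depends only on the first token, changing any later token leaves it unchanged, which is the property that makes next-token prediction well defined.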

3) CNNs

Visual analysis in the system is driven by Convolutional Neural Networks (CNNs), which examine image-based inputs. CNNs pass the image through a series of convolutional layers to extract hierarchical features such as colour balance, texture, edge structure, and spatial arrangement. These features are converted into numerical values that characterise the quality of the design and how well it fits the overall style. The architecture, which consists of convolutional layers, pooling layers, and fully connected layers, enables accurate pattern recognition across many different artistic styles. Both SVM classification and GPT-NeoX feedback generation draw on the features learned by the CNN. This ensures that visual comprehension and verbal analysis work together smoothly. In this way, CNNs help the system to "see" and understand artistic works with a high level of accuracy.

Step 1: Convolution

$$u_k = W_k * x + b_k$$

Step 2: Nonlinearity and Normalization

$$a_k = \mathrm{ReLU}(u_k)$$

$\hat{a}_k = \mathrm{BN}(a_k)$ (Batch Normalization)

Step 3: Pooling and Classifier

$$p_k = \mathrm{MaxPool}(\hat{a}_k)$$

Flatten → Dense Layers → Logits $z$
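The convolution, nonlinearity, and pooling steps can be traced on a tiny example. The sketch below is written in plain Python for readability and is not the network used in the study: the 5x5 image, the edge-detecting kernel, and the function names are assumptions, and batch normalization and the dense layers are omitted for brevity.

```python
def conv2d(image, kernel, bias=0.0):
    """Valid (no padding) 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    rows, cols = len(image), len(image[0])
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            ) + bias
            for j in range(cols - kw + 1)
        ]
        for i in range(rows - kh + 1)
    ]


def relu(fmap):
    """Element-wise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; incomplete trailing windows are dropped."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# Hypothetical 5x5 grayscale image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Kernel that responds to a dark-to-light transition from left to right.
kernel = [[-1, 1], [-1, 1]]

features = max_pool(relu(conv2d(image, kernel)))
print(features)
```

The pooled map is non-zero only in the column that contains the edge, which shows how a stack of such layers turns raw pixels into localized numeric features.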

4.2. Human-AI interface for peer review in classroom environments

In the classroom, the human-AI interface is the point of contact between people and the system. To keep the focus on how students engage with AI-generated feedback, the interface was designed to be intuitive and engaging, so that students and teachers can work with the feedback clearly and fluently, both individually and collaboratively. It presents critiques in written and visual form, combining detailed analysis, numerical scores, and visual annotations. Students can compare the AI's assessments with those of their peers and the teacher.

Figure 3 Multimodal Human-AI Peer Review System in Classroom Environments

Comparing these assessments lets students think critically about design choices and about how work is evaluated. The interface also lets users interact with the AI by asking questions and requesting more detail about its comments. Figure 3 shows the interactive AI integration for collaboration, evaluation, and adaptive learning. The dialogue is powered by GPT-NeoX and is designed to resemble a conversation with a companion who prompts users to reflect on their ideas and reasoning. Teachers can adjust settings such as the tone of feedback, the depth of evaluation, and the visual focus so that the system fits the goals of the course and the level of the students.

5. Implementation of AI-Enabled Peer Review System

1) Design of the AI-assisted feedback model

The AI-assisted feedback model was developed as a hybrid review system that combines numerical analysis with qualitative assessment. It works in three steps: visual feature extraction, rating classification, and language synthesis. In the first step, CNNs convert visual data into numerical variables that describe important design characteristics such as balance, alignment, and material uniformity. Next, SVMs use these features to assign each submission to a performance category learned from training datasets labelled by experts. In the last step, GPT-NeoX generates written feedback that places the analysis results in an educational context. Each result includes both critical comments and suggestions for improvement, phrased to be helpful and encouraging. The model was refined through repeated trials with faculty judges to ensure that it matched the language of art education and was sensitive to students' feelings. Ethical design concerns were built in throughout, with a focus on usability, bias reduction, and explainability. Feedback logs and attention visualisations let users see how particular visual features affected the AI's evaluation. This layered design ensures that the system not only rates the quality of artistic work systematically but also communicates in ways that foster reflection, innovation, and student agency, all of which are important goals of visual education.
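The three-step flow (feature extraction, rating classification, written feedback) can be sketched as a small pipeline. Every component below is a deliberately simple stand-in chosen for illustration: a left/right weight ratio replaces the CNN, a fixed threshold replaces the SVM, and fixed templates replace GPT-NeoX. All names and the threshold value are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    category: str
    comment: str


def extract_features(image):
    """Stage 1 (stand-in for the CNN): describe the image with numbers.

    Expects a grid of non-negative pixel intensities at least 2 columns wide.
    """
    half = len(image[0]) // 2
    left = sum(sum(row[:half]) for row in image)
    right = sum(sum(row[-half:]) for row in image)
    total = left + right
    # 1.0 means the left and right halves carry equal visual weight.
    balance = 1.0 - abs(left - right) / total if total else 1.0
    return {"balance": balance}


def classify(features, threshold=0.8):
    """Stage 2 (stand-in for the SVM): map features to a performance category."""
    return "strong" if features["balance"] >= threshold else "developing"


def write_feedback(features, category):
    """Stage 3 (stand-in for the language model): phrase the result for a student."""
    b = features["balance"]
    if category == "strong":
        text = f"The composition is well balanced (balance {b:.2f}). Consider a bolder focal point."
    else:
        text = f"The visual weight leans to one side (balance {b:.2f}). Try redistributing elements."
    return Feedback(category=category, comment=text)


def review(image):
    """Run the three stages in order and return first-pass feedback."""
    features = extract_features(image)
    category = classify(features)
    return write_feedback(features, category)


print(review([[1, 1], [1, 1]]))
print(review([[1, 0], [1, 0]]))
```

Keeping the stages as separate functions mirrors the layered design: each stand-in could be replaced by a trained model without changing how the stages are chained.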

2) Integration into existing visual education workflows

The integration plan aimed to fit the AI-powered peer review system into existing digital and classroom critique processes with minimal disruption. The system was deployed through a web-based interface compatible with popular Learning Management Systems (LMS) such as Moodle and Canvas. Students uploaded their visual work directly to the site, and within a few minutes the AI produced initial comments automatically. Human peers then commented on these AI reviews, and the two sets of feedback were compared and discussed in studio sessions. A teacher dashboard displayed aggregated performance data, heatmaps, and detailed reports so that faculty members could monitor progress. This allowed teachers to check the accuracy of AI-generated comments and step in when creative interpretation required additional context. Training sessions taught teachers how to interpret the AI's output and how to set an appropriate level of feedback. Finally, AI responses were framed as first-pass reflections rather than final decisions, to preserve the collaborative spirit of peer review.

3) Examples of AI-generated feedback and peer interaction

During the trial, the AI system produced nuanced feedback comparable to beginner-level art criticism. For example, when reviewing an advertisement designed by a student, the AI noted that the design was "visually unified with good typographic hierarchy" but suggested "higher contrast between key elements and texture background." In another example, the AI described a digital drawing as showing "good harmonious use of colour" but recommended "improvement of the spatial balance in relation to the central element." Each comment was accompanied by a visual grid marking the areas of creative focus, which made the statement easier to understand. Students responded to the AI's feedback and then gave and received feedback from each other. Because students compared the machine's observations with human perceptions, this sequence encouraged critical thinking. Many participants said that the AI's suggestions helped them spot design flaws they had missed and made peer discussions more evidence-based. Faculty evaluations indicated that working with the AI reduced recurring mistakes and made students' comments clearer. Interaction between peers and the AI also changed how students reasoned: they began to ask why certain features affected ratings, which helped them think more deeply about design.

6. Results and Findings

1) Impact

of AI-enabled review on learning outcomes

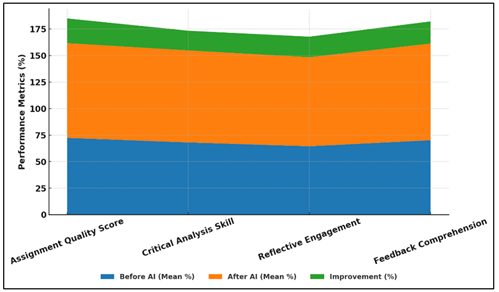

Using AI-powered peer review made a big difference in the way students learnt in visual education. The participants became better at critical analysis, were more consistent in self-evaluations and were more involved in thoughtful review. Quantitative data showed that task quality scores increased by 23% as well as errors were less frequently committed in ongoing projects. Responses that were more qualitative revealed that AI comments helped people to be more clear, objective and feel confident in their creative decisions.

Table 2 Impact of AI-Enabled Review on Learning Outcomes

| Learning Indicator | Algorithm Used | Before AI (Mean %) | After AI (Mean %) | Improvement (%) |
|---|---|---|---|---|
| Assignment Quality Score | CNN + SVM | 72.4 | 89.3 | 23.3 |
| Critical Analysis Skill | GPT-NeoX | 68.1 | 86.7 | 18.6 |
| Reflective Engagement | GPT-NeoX + SVM | 64.5 | 83.9 | 19.4 |
| Feedback Comprehension | CNN + GPT-NeoX | 70.2 | 91.1 | 20.9 |

Table 2 shows the gains in learning outcomes after AI-powered peer review was introduced into visual education. Every indicator improved, which suggests that adding AI to the review process is effective. The Assignment Quality Score rose from 72.4% to 89.3%, a relative gain of 23.3% (16.9 percentage points), which suggests that the CNN + SVM structure made visual review more objective and accurate. Critical Analysis Skill and Reflective Engagement rose by 18.6 and 19.4 percentage points respectively. Figure 4 illustrates the improvement in efficiency, engagement, and student results associated with the AI. These gains suggest that the natural language feedback produced by GPT-NeoX helped students think more critically and reflect more deeply on their own work.

Figure 4 Impact of AI Algorithms on Learning Performance Indicators

Feedback Comprehension also rose by 20.9 percentage points (from 70.2% to 91.1%), which suggests that the CNN's visual analysis and GPT-NeoX's language synthesis together produced feedback that was easier to understand and more useful for teaching.
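The "Improvement (%)" column in Table 2 can be read either as a percentage-point difference (after minus before) or as a relative change (difference divided by the before value). The following sketch recomputes both from the reported means so the two readings can be compared with the reported column; the function and variable names are illustrative.

```python
# (before, after, reported improvement) taken from Table 2.
table_2 = {
    "Assignment Quality Score": (72.4, 89.3, 23.3),
    "Critical Analysis Skill": (68.1, 86.7, 18.6),
    "Reflective Engagement": (64.5, 83.9, 19.4),
    "Feedback Comprehension": (70.2, 91.1, 20.9),
}


def point_gain(before, after):
    """Absolute change in percentage points."""
    return round(after - before, 1)


def relative_gain(before, after):
    """Change relative to the starting value, in percent."""
    return round((after - before) / before * 100, 1)


for name, (before, after, reported) in table_2.items():
    print(
        f"{name}: points={point_gain(before, after)}, "
        f"relative={relative_gain(before, after)}%, reported={reported}"
    )
```

With the reported means, the point gains are 16.9, 18.6, 19.4, and 20.9, and the relative gains are 23.3%, 27.3%, 30.1%, and 29.8%. The reported value therefore matches the relative gain for Assignment Quality Score and the percentage-point gain for the other three indicators, so the column should be read with that difference in mind.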

2) Comparative

analysis: AI vs traditional peer feedback

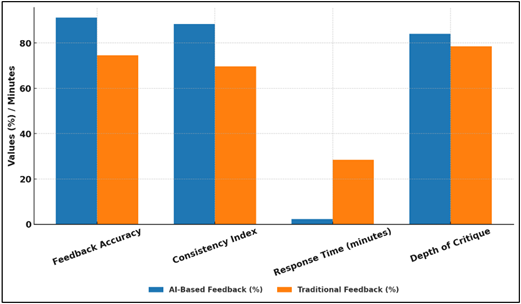

The data revealed that AI-assisted input is definitely superior to the standard group criticism. AI ratings on the other hand was more consistent and accurate on time and provided more organised insights within minutes. Traditional feedback was more subjective, but it wasn't necessarily accurate and was dependent on the experience of the critics. Students believed that feedback that was created by AI was fair and accurate, but not as passionate as feedback that was generated by humans. When AI and people worked together, they got the best results - AI provided more critical detail and humans provided context-sensitivity.

Table 3 Comparative Analysis: AI vs Traditional Peer Feedback

| Evaluation Metric | AI-Based Feedback | Traditional Feedback |
|---|---|---|
| Feedback Accuracy (%) | 91.2 | 74.6 |
| Consistency Index (%) | 88.4 | 69.7 |
| Response Time (minutes) | 2.3 | 28.5 |
| Depth of Critique (%) | 84.1 | 78.5 |

Table 3 compares AI-based feedback with standard peer review in visual education and shows that the AI-based feedback scored better on every metric. The Feedback Accuracy of the AI system (91.2%) is markedly higher than that of standard peer ratings (74.6%), which suggests that it is more accurate and less affected by subjective bias. Figure 5 shows that AI feedback outperforms traditional methods on several performance metrics. Similarly, the Consistency Index is 88.4% for AI feedback against 69.7% for traditional feedback, which shows that automated evaluation applies the same standard to every submission, whereas human evaluation varies with personal opinion.

Figure 5 Comparison of AI-Based and Traditional Feedback Across Evaluation Metrics

Response Time was also much shorter. AI-generated comments took 2.3 minutes on average, compared with 28.5 minutes for standard reviews. This indicates that the system can deliver evaluations in near real time, so students receive feedback sooner.
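The size of each gap in Table 3 follows directly from the reported values. The short worked calculation below is derived only from those figures and is included to make the comparison concrete.

```latex
\begin{align*}
\text{Feedback Accuracy gap} &= 91.2 - 74.6 = 16.6 \text{ percentage points} \\
\text{Consistency Index gap} &= 88.4 - 69.7 = 18.7 \text{ percentage points} \\
\text{Depth of Critique gap} &= 84.1 - 78.5 = 5.6 \text{ percentage points} \\
\text{Response Time ratio}   &= \frac{28.5}{2.3} \approx 12.4
\end{align*}
```

AI feedback was therefore returned roughly twelve times faster, while the smallest gap, in Depth of Critique, is the metric on which human reviewers came closest to the AI.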

7. Conclusion

Introducing AI into peer review can substantially advance visual education. As this paper demonstrates, AI-based systems can be an effective supplement to human critique, offering consistent, objective, and helpful feedback for learning. CNNs can be used for visual analysis, SVMs for evaluative classification, and GPT-NeoX for language synthesis. This combination is a useful way to link machine intelligence with creative teaching. The findings indicate that AI-assisted peer review enhances learning, makes ratings fairer and of higher quality, and enables learners to be both innovative and attentive to data. Importantly, the research shows that AI should not replace human judgement. Rather, it should work alongside people as a companion, improving the accuracy and scale of feedback without losing the quality of human analysis. The hybrid feedback method lets students, teachers, and the AI engage with one another in a participatory manner, which supports inclusion, self-regulation, and understanding of big ideas. Participants reported that they were more engaged, understood design concepts better, and were more confident in their artistic performance. Using AI in review also changes the teacher's role, since teachers can concentrate on mentoring, intellectual guidance, and creative discovery rather than repeating the same evaluation tasks. Transparency, interpretability, and bias reduction remain important ethical concerns for maintaining trust and upholding ethical practice.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Audras, D., Na, X., Isgar, C., and Tang, Y. (2022). Virtual Teaching Assistants: A Survey of a Novel Teaching Technology. International Journal of Chinese Education, 11, Article 2212585X221121674. https://doi.org/10.1177/2212585X221121674

Crompton, H., and Song, D. (2021). The Potential of Artificial Intelligence in Higher Education. Revista Virtual Universidad Católica del Norte, 62, 1–4. https://doi.org/10.35575/rvucn.n62a1

Demiray, B. Z., Sit, M., and Demir, İ. (2021). DEM Super-Resolution with EfficientNetV2. arXiv (preprint). https://doi.org/10.1007/s42979-020-00442-2

Essel, H. B., Vlachopoulos, D., Tachie-Menson, A., Johnson, E. E., and Baah, P. K. (2022). The Impact of a Virtual Teaching Assistant (chatbot) on Students’ Learning in Ghanaian Higher Education. International Journal of Educational Technology in Higher Education, 19, Article 57. https://doi.org/10.1186/s41239-022-00362-6

Ewing, G. J., Mantilla, R., Krajewski, W. F., and Demir, İ. (2022). Interactive Hydrological Modelling and Simulation on Client-Side web Systems: An Educational Case Study. Journal of Hydroinformatics, 24, 1194–1206. https://doi.org/10.2166/hydro.2022.061

Gautam, A., Sit, M., and Demir, İ. (2022). Realistic River Image Synthesis Using Deep Generative Adversarial Networks. Frontiers in Water, 4, Article 784441. https://doi.org/10.3389/frwa.2022.784441

Huang, J., Saleh, S., and Liu, Y. (2021). A Review on Artificial Intelligence in Education. Academic Journal of Interdisciplinary Studies, 10, 206. https://doi.org/10.36941/ajis-2021-0077

Kasneci, E., Seßler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., Gasser, U., Groh, G., Günnemann, S., Hüllermeier, E., et al. (2023). ChatGPT for good? On Opportunities and Challenges of Large Language Models for Education. Learning and Individual Differences, 103, Article 102274. https://doi.org/10.1016/j.lindif.2023.102274

Lateef, S. A., Ansari, M. A., Ansari, M., and Manwatkar, T. (2025, May). AI Image Generator Using Open Source for Text to Image. International Journal of Electrical and Electronics and Computer Science (IJEECS), 14(1), 217–220.

Lee, H. (2023). The Rise of ChatGPT: Exploring its Potential in Medical Education. Anatomical Sciences Education, 17, 926–931. https://doi.org/10.1002/ase.2270

Li, Z., and Demir, İ. (2023). U-Net-Based Semantic Classification for Flood Extent Extraction Using SAR Imagery and GEE Platform: A Case Study for 2019 Central US Flooding. Science of the Total Environment, 869, Article 161757. https://doi.org/10.1016/j.scitotenv.2023.161757

Perkins, M. (2023). Academic Integrity Considerations of AI Large Language Models in the Post-Pandemic Era: ChatGPT and Beyond. Journal of University Teaching and Learning Practice, 20, Article 7. https://doi.org/10.53761/1.20.02.07

Ramirez, C. E., Sermet, Y., and Demir, İ. (2023). HydroLang Markup Language: Community-Driven Web Components for Hydrological Analyses. Journal of Hydroinformatics, 25, 1171–1187. https://doi.org/10.2166/hydro.2023.149

Ramirez, C. E., Sermet, Y., Molkenthin, F., and Demir, İ. (2022). HydroLang: An Open-Source Web-Based Programming Framework for Hydrological Sciences. Environmental Modelling and Software, 157, Article 105525. https://doi.org/10.1016/j.envsoft.2022.105525

Sajja, R., Sermet, Y., Cwiertny, D. M., and Demir, İ. (2023). Platform-Independent and Curriculum-Oriented Intelligent Assistant for Higher Education. International Journal of Educational Technology in Higher Education, 20, Article 42. https://doi.org/10.1186/s41239-023-00412-7

Sit, M., Langel, R. J., Thompson, D. A., Cwiertny, D. M., and Demir, İ. (2021). Web-Based Data Analytics Framework for Well Forecasting and Groundwater Quality. Science of the Total Environment, 761, Article 144121. https://doi.org/10.1016/j.scitotenv.2020.144121

This work is licensed under a Creative Commons Attribution 4.0 International License.

© ShodhKosh 2024. All Rights Reserved.