ShodhKosh: Journal of Visual and Performing Arts
ISSN (Online): 2582-7472


Enhancing Aesthetic Appeal of Prints Using Neural Networks

Dr. Parag Amin 1, Paramjit Baxi 2, Jaspreet Sidhu 3, Dr. Suresh Kumar Lokhande 4, Dr. Ritesh Rastogi 5, Subhash Kumar Verma 6

1 Professor, ISME - School of Management and Entrepreneurship, ATLAS Skill Tech University, Mumbai, Maharashtra, India

2 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, India

3 Centre of Research Impact and Outcome, Chitkara University, Rajpura, Punjab, India

4 Department of Informatics, Osmania University, Hyderabad, Telangana, India

5 Professor, Department of Information Technology, Noida Institute of Engineering and Technology, Greater Noida, Uttar Pradesh, India

6 Professor, School of Business Management, Noida International University, India

ABSTRACT

The appearance of printed images is crucial to visual communication, product display, and art. Conventional print enhancement methods, such as colour correction, contrast control, and texture refinement, tend to require manual adjustment or rely on predefined filters, so they adapt poorly to different types of images and print media. The emergence of deep learning, and neural networks in particular, offers more options for enhancing printed materials with automated and effective methods. This study proposes a neural network-based algorithm that makes printed photographs more attractive by learning complex, non-linear mappings from large datasets. The process involves selecting appropriate datasets, preprocessing to balance variations in texture, tone, and colour, and building a convolutional neural network (CNN) framework designed specifically to evaluate aesthetics. The model is trained with perceptual loss functions and tested against standard image enhancement algorithms such as histogram equalisation. The experiments show better outcomes than the standard approaches in colour balance, pattern accuracy, and overall visual appeal. In addition, the proposed model is effective across a wide range of print media, including digital, letterpress, and cloth printing.

Received 16 January 2025

Accepted 08 April 2025

Published 10 December 2025

Corresponding Author: Dr. Parag Amin, parag.amin@atlasuniversity.edu.in

DOI 10.29121/shodhkosh.v6.i1s.2025.6647

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License.

With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Neural Networks, Image Aesthetics, Print Enhancement, Deep Learning, Digital Printing

1. INTRODUCTION

The visual quality and appeal of printed materials are significant to the influence and utility of visual communication. This applies to areas such as advertising, printing, fine arts, and product design. As digital imaging and printing systems have rapidly evolved, people have come to expect high-quality, attractive pictures. Yet despite advances in printing tools and colour management systems, consistently improving physical print quality remains challenging. Conventional image enhancement methods, such as histogram equalisation, contrast adjustment, and manual colour correction, are not always versatile and do not consider how human beings view and perceive beauty. Such techniques modify only the pixel space; they cannot capture the high-level meaning and contextual cues that influence the appeal of an image. The combination of artificial intelligence (AI) and machine learning, particularly deep neural networks, has transformed numerous image processing and computer vision tasks over the past few years Shao et al. (2024). Neural networks are well suited to extracting features, recognising patterns, and transferring styles, which opens up new methods of enhancing appearance. Architectures such as Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs) have proven themselves in applications such as image quality enhancement, colour adjustment, and artistic style transfer Leong and Zhang (2025).

With these advances, pictures intended for print could be enhanced through neural architectures in ways that are better looking, more automated, and more artistically sophisticated than traditional methods allow. Nevertheless, little is known about how neural networks can be applied to enhance the appearance of prints. Print enhancement differs from digital picture enhancement because of factors such as colour gamut, surface roughness of the materials used, ink absorption, and variation in lighting during physical printing Leong and Zhang (2025). Aesthetics is also subjective; therefore, models should not only improve technical quality scores but also suit people's artistic taste.

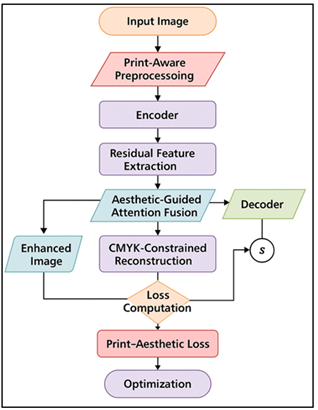

Figure 1 Flowchart of Neural Network-Based Print Aesthetic Enhancement Process

Addressing these issues requires a technique that combines artificial intelligence, visual modelling, and knowledge of print technology. Figure 1 shows the end-to-end neural system for improving print aesthetics. This research aims to bridge this gap by developing a neural network-based system that enhances printed pictures. The proposed approach uses deep learning to determine which modifications are most perceptually significant. These modifications enhance colour harmony, contrast, and material texture detail while retaining print quality Lou (2023). The system is data-driven: it learns from a large number of images already labelled for aesthetic quality and print quality. Through iterative training and refinement, the model learns to handle various types of pictures and print environments.

2. Literature Review

1) Traditional print enhancement techniques

Traditional methods are the simplest form of print enhancement, aimed at improving the durability and look of printed pictures. In the prepress stage, these methods are primarily automated or manual adjustments to properties such as brightness, contrast, sharpness, and colour balance. Common techniques for improving sharpness and tonal distribution include histogram equalisation, unsharp masking, gamma correction, and tone mapping Guo et al. (2023), Cheng (2022). Colour management systems (CMS) in professional printing use standardised colour profiles, such as those defined by the International Color Consortium (ICC), to ensure consistency between devices. Halftoning and dithering are two further techniques, which arrange dots of varying size and spacing so that they resemble a continuous tone; they are applied mainly in inkjet and offset printing. However, these techniques follow predetermined rules and do not consider the content of the image or individual artistic preferences. In most cases they are tuned for technical correctness rather than perceptual quality Oksanen et al. (2023). Thus they can correct poor colour and brightness, but usually cannot address subjective qualities such as mood, artistic tone, or composition. Print workers must also make adjustments manually, which is time-consuming and not always effective across different media. With the increasing demand for customised and attractive prints, conventional enhancement procedures are no longer sufficient. This creates an opportunity for data-driven, flexible models that can automatically analyse and replicate human aesthetic judgement Marcus et al. (2022).
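To make the rule-based nature of these methods concrete, the following is a minimal sketch of two of the techniques named above, gamma correction and histogram equalisation, applied to a short strip of 8-bit grey levels. The function names and the sample strip are illustrative assumptions, not part of the study.

```python
def gamma_correct(pixels, gamma):
    """Apply out = 255 * (in / 255) ** (1 / gamma) to 8-bit grey levels."""
    return [round(255 * (p / 255) ** (1.0 / gamma)) for p in pixels]


def equalise_histogram(pixels, levels=256):
    """Remap grey levels so their cumulative distribution is roughly linear."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:  # a flat image has nothing to spread out
        return list(pixels)
    scale = (levels - 1) / (total - cdf_min)
    return [round((cdf[p] - cdf_min) * scale) for p in pixels]


# Hypothetical low-contrast strip of grey levels (assumption for illustration).
dark = [50, 50, 60, 60, 70, 80, 90, 100]
equalised = equalise_histogram(dark)
brightened = gamma_correct(dark, gamma=2.2)
```

Both functions apply the same fixed mapping regardless of image content, which is the limitation the paragraph above describes.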

2) Existing neural network models for image aesthetics

With the emergence of deep learning, neural networks are being applied to an increasingly wide range of image types. Convolutional Neural Networks (CNNs) are widely used to assess and enhance visual appearance because they can learn hierarchical patterns directly from raw image data. Early studies, such as those on the AVA (Aesthetic Visual Analysis) dataset, demonstrated that CNNs could rank pictures by visual attractiveness by learning about colour, texture, and composition Hermerén (2024). More sophisticated models such as ResNet, VGGNet, and Inception architectures have been fine-tuned for aesthetic scoring and style transfer. Generative Adversarial Networks (GANs) have taken appearance enhancement a stage further by enabling content-aware changes; for example, they can improve lighting balance, add artistic style, and smooth backgrounds. Neural style transfer algorithms use detailed style and content representations to create outputs that look attractive and reproduce particular artistic styles Cetinic and She (2022). This has been facilitated by training perceptual loss functions on human-labelled aesthetic data so that model predictions correspond to what people see. However, most of these models were built for on-screen viewing or photo enhancement, not for print.

3) Gaps and limitations in current methods

Although both conventional enhancement and AI-based approaches have evolved significantly, several important issues remain. Traditional print enhancement methods lack the capacity to understand image composition and human visual cues, so their results are technically correct but not very pleasing to the eye. Conversely, neural network models built for digital images do not usually consider the physical constraints and complexity of print media Anantrasirichai and Bull (2022). Most modern neural designs ignore the colour gamut limits imposed by printers, the different levels of ink absorption on various papers, and the interaction between substrate reflectance and ambient light. The datasets on which these visual models are trained are also predominantly digital and screen-based and do not include printed results or the distortions that occur in practice. Because of this gap, predictions of how an image should look do not transfer well to real prints. Objective metrics such as PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index) evaluate technical accuracy but do not evaluate how attractive an image is to the human eye or its artistic quality Wu et al. (2022). Table 1 summarises related research on image aesthetic enhancement. Moreover, very few studies have examined how to incorporate neural aesthetic models into complete print workflows, which would provide an opportunity to optimise the print process in real time.
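As a brief illustration of why a metric such as PSNR captures only pixel fidelity, the sketch below computes it from the mean squared error between a reference and a test image, using the standard definition PSNR = 10 log10(peak² / MSE). The flat pixel lists and function names are assumptions for illustration; SSIM is omitted because it requires windowed statistics.

```python
import math


def mse(reference, test):
    """Mean squared error between two equally sized pixel sequences."""
    return sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(reference, test)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / error)


# Hypothetical 8-bit pixel values (assumption for illustration).
reference = [10, 120, 200, 255]
slightly_off = [12, 118, 203, 250]
far_off = [60, 60, 60, 60]
```

Nothing in the formula refers to composition, mood, or colour harmony, so two images with the same PSNR can differ widely in perceived appeal.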

Table 1 Summary of Related Work on Image Aesthetic and Print Enhancement

| Method | Dataset Utilized | Technique | Impact | Scope |
|---|---|---|---|---|
| Handcrafted Aesthetic Model | Custom 1K Image Dataset | Low-level features (color, texture) | Introduced computational aesthetics | Limited to small dataset, no deep learning |
| Image Composition Analysis | Photographic Dataset | Edge and balance features | Established compositional features | Ineffective for complex artistic prints |
| AVA Dataset Introduction Yang et al. (2022) | AVA (250K images) | Feature extraction + SVM | Benchmark dataset creation | Focused on digital images only |
| Deep Aesthetic Network (DAN) | AVA Dataset | CNN-based regression | Improved digital photo aesthetics | Not adapted to print domain |
| Neural Image Assessment (NIMA) | AVA + TID2013 | CNN + aesthetic scoring | State-of-art digital aesthetic evaluation | Lacks print-specific adaptation |
| Neural Style Transfer Wu et al. (2022) | WikiArt Dataset | CNN feature blending | Pioneered neural art generation | High computation cost; limited realism |
| SRGAN (Super-Resolution GAN) | DIV2K Dataset | GAN + Perceptual Loss | Enhanced perceptual detail | No consideration for print media |
| Deep Color Enhancement Model Tang et al. (2023) | MIT-Adobe 5K | CNN-based color mapping | Effective tone mapping | Not designed for CMYK printing |
| AestheticGAN | AVA Dataset | Conditional GAN | Realistic output enhancement | Digital-only focus |
| Hybrid CNN-Attention Model | AADB Dataset | Attention-guided feature learning | Bridged content and aesthetics | Requires large labeled datasets |
| Print Quality Enhancement Model Wang et al. (2021) | Proprietary Print Dataset | CNN + Color Correction Layers | Practical print workflow testing | Limited generalization |

3. Methodology

1) Dataset selection and preprocessing

Selecting and organising an appropriate dataset is critical to training any neural network that seeks to enhance appearance. For this research, high-resolution landscape, portrait, art, and print sample images were collected from open-source aesthetic databases such as AVA (Aesthetic Visual Analysis) and AADB (Aesthetics and Attributes Database), as well as from paid print-quality datasets Abdallah et al. (2022). To ensure the pictures were suitable for print applications, they were converted to the CMYK colour space used in printing. All photographs were labelled with aesthetic ratings based on both professional judgement and feedback from the general public. In the preprocessing stage, pixel values were normalised, images were resized to standard dimensions, and augmentation techniques such as rotation, cropping, and mirroring were applied so that the model would generalise better. Noise reduction filters and gamma correction were used to even out brightness variations Balaji et al. (2025). In addition, simulated print degradations such as dot gain, pattern blurring, and ink diffusion were introduced to make the model more tolerant of real-world printing conditions.
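The preprocessing steps described above can be sketched roughly as follows. This is a minimal illustration, not the authors' pipeline: the parabolic dot-gain curve and its `gain_at_50` parameter are hypothetical stand-ins for a calibrated press model, and images are represented as plain nested lists.

```python
def normalise(image):
    """Scale 8-bit pixel values to the range [0, 1]."""
    return [[p / 255.0 for p in row] for row in image]


def mirror(image):
    """Horizontal flip, one of the augmentations mentioned in the text."""
    return [list(reversed(row)) for row in image]


def simulate_dot_gain(coverage, gain_at_50=0.15):
    """Hypothetical parabolic tone curve (assumption, not a calibrated model).

    Ink coverage a in [0, 1] grows by 4 * gain_at_50 * a * (1 - a), so the
    gain is zero at 0% and 100% coverage and peaks at 50%.
    """
    return [
        [min(1.0, a + 4.0 * gain_at_50 * a * (1.0 - a)) for a in row]
        for row in coverage
    ]


# Hypothetical 2x3 single-channel image (assumption for illustration).
raw = [[0, 128, 255], [64, 192, 32]]
prepared = simulate_dot_gain(mirror(normalise(raw)))
```

Applying the degradation during training, rather than only at test time, is what lets a model see inputs that resemble printed output.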

2) Neural network architecture and design

The proposed neural network was designed to enhance the appearance of prints by combining computational efficiency with perceptual accuracy. The model is based on a deep Convolutional Neural Network (CNN) consisting of numerous convolutional and pooling layers that together capture properties such as colour balance, texture consistency, and compositional unity. To prevent vanishing gradients and enable deeper feature learning, residual connections in the style of ResNet architectures were employed. The encoder-decoder architecture allows the network to capture low-level pixel data and reconstruct improved images with sharper features Jin et al. (2023). Additionally, batch normalisation and dropout were used to stabilise training and reduce overfitting. A perceptual sub-network trained on aesthetic quality scores was also introduced to quantify the aesthetic appeal of the intermediate outputs. This enabled the system to learn in a way that produces results people prefer. ReLU (Rectified Linear Unit) activations were used for the non-linear transformations, and a sigmoid function was used for output normalisation in the final layer.

· Step 1: Input and Print-Aware Preprocessing

Given an RGB image x ∈ [0,1]^(H×W×3):

K = 1 - max(R,G,B)

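For orientation, a minimal sketch of an RGB to CMYK conversion consistent with the black channel K = 1 - max(R, G, B) given in Step 1 is shown here. The formulas for C, M, and Y are the common naive conversion and are an assumption, since the remaining equations of this step are not legible in the text; production workflows would normally convert through ICC profiles instead.

```python
def rgb_to_cmyk(r, g, b):
    """Naive RGB -> CMYK conversion for channel values in [0, 1].

    K follows the formula in Step 1. The C, M, Y formulas are the
    standard naive conversion and are assumed, not taken from the paper.
    """
    k = 1.0 - max(r, g, b)
    if k >= 1.0:  # pure black: avoid dividing by zero
        return 0.0, 0.0, 0.0, 1.0
    c = (1.0 - r - k) / (1.0 - k)
    m = (1.0 - g - k) / (1.0 - k)
    y = (1.0 - b - k) / (1.0 - k)
    return c, m, y, k


red = rgb_to_cmyk(1.0, 0.0, 0.0)
black = rgb_to_cmyk(0.0, 0.0, 0.0)
white = rgb_to_cmyk(1.0, 1.0, 1.0)
```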

· Step 2: Encoder with Residual Feature Extraction


F(f) = ReLU(BN(W2 ReLU(BN(W1 f))))

f1 = ResBlock(Conv_s=2(f0))

f2 = ResBlock(Conv_s=2(f1))

f3 = ResBlock(Conv_s=2(f2))
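The residual branch F(f) in Step 2 can be illustrated with a small pure-Python sketch. Several simplifications are assumptions made for brevity: dense weight matrices acting on feature vectors stand in for the convolutions, batch normalisation has no learned scale or shift, and the skip connection is taken to be the usual ResNet form f + F(f), which the text does not state explicitly.

```python
import math


def linear(batch, weight):
    """Multiply every feature vector in the batch by a weight matrix."""
    return [[sum(w * x for w, x in zip(row, vec)) for row in weight] for vec in batch]


def batch_norm(batch, eps=1e-5):
    """Standardise each feature across the batch (no learned parameters)."""
    n, dims = len(batch), len(batch[0])
    means = [sum(vec[d] for vec in batch) / n for d in range(dims)]
    variances = [sum((vec[d] - means[d]) ** 2 for vec in batch) / n for d in range(dims)]
    return [
        [(vec[d] - means[d]) / math.sqrt(variances[d] + eps) for d in range(dims)]
        for vec in batch
    ]


def relu(batch):
    return [[max(0.0, v) for v in vec] for vec in batch]


def residual_branch(batch, w1, w2):
    """F(f) = ReLU(BN(W2 ReLU(BN(W1 f)))), as written in Step 2."""
    return relu(batch_norm(linear(relu(batch_norm(linear(batch, w1))), w2)))


def res_block(batch, w1, w2):
    """Assumed skip connection: output = f + F(f)."""
    branch = residual_branch(batch, w1, w2)
    return [[x + fx for x, fx in zip(vec, out)] for vec, out in zip(batch, branch)]


# Hypothetical batch of two 2-dimensional feature vectors and weights.
features = [[1.0, 2.0], [3.0, -1.0]]
w_a = [[0.5, -0.2], [0.1, 0.3]]
w_b = [[0.4, 0.0], [-0.3, 0.6]]
output = res_block(features, w_a, w_b)
```

Because the branch is added to the input rather than replacing it, the block can fall back to the identity mapping, which is what helps gradients flow through deep stacks.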

· Step 3: Aesthetic-Guided Attention Fusion

The step has four components: channel attention, spatial attention, an aesthetic score, and feature fusion.
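Since the attention equations for this step are not legible in the text, the following is only a generic sketch of what channel attention can look like: each channel is summarised by global average pooling and then rescaled by a sigmoid gate. The per-channel scalar weights and biases are a deliberate simplification and an assumption; they are not the authors' formulation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def channel_attention(feature_map, weights, biases):
    """Gate each channel by sigmoid(w_c * mean(channel) + b_c).

    feature_map is a list of channels, each a list of rows. The scalar
    per-channel parameters are a simplifying assumption.
    """
    gated, gates = [], []
    for channel, w, b in zip(feature_map, weights, biases):
        values = [v for row in channel for v in row]
        pooled = sum(values) / len(values)  # global average pooling
        gate = sigmoid(w * pooled + b)
        gates.append(gate)
        gated.append([[gate * v for v in row] for row in channel])
    return gated, gates


# Hypothetical 2-channel, 2x2 feature map and gate parameters.
fmap = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.5], [0.5, 1.0]],
]
attended, gate_values = channel_attention(fmap, weights=[1.0, -1.0], biases=[0.0, 0.0])
```

Spatial attention follows the same idea with the roles swapped: pooling runs across channels and the gate varies per pixel location.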

· Step 4: Decoder and CMYK-Constrained Reconstruction

h2 = ResBlock(Up(F) ∥ f2)

h1 = ResBlock(Up(h2) ∥ f1)

x̂ = σ(Wrgb ResBlock(h1))


(clipτ: differentiable gamut clamp to enforce print constraints)

Output:

Enhanced RGB image x̂ and CMYK print image ŷCMYK
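One way a differentiable clamp such as clipτ could be realised is with a softplus-based smooth limit, sketched below. This is an assumption for illustration: the text does not define clipτ, and `tau` is treated here as a hypothetical per-channel upper limit with `beta` controlling how sharp the transition is.

```python
import math


def softplus(z, beta=20.0):
    """Smooth approximation of max(z, 0)."""
    scaled = beta * z
    if scaled > 30.0:  # for large inputs softplus(z) is effectively z
        return z
    return math.log1p(math.exp(scaled)) / beta


def soft_clip(value, tau, beta=20.0):
    """Smoothly limit value to approximately the range [0, tau].

    Unlike a hard clamp, the gradient never becomes exactly zero,
    so out-of-gamut values still receive a training signal.
    """
    upper = tau - softplus(tau - value, beta)  # behaves like min(value, tau)
    return softplus(upper, beta)               # behaves like max(upper, 0)


# Hypothetical ink values, two of them outside the printable range.
inks = [-0.5, 0.2, 0.8, 1.7]
clamped = [soft_clip(v, tau=1.0) for v in inks]
```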

3) Training and optimization process

During training, the neural model was tuned to improve both the objective quality of the generated images and the satisfaction users derived from them. It was trained with mini-batch stochastic gradient descent using adaptive learning rates, with computation accelerated on GPUs. The Adam optimiser was used. The loss function had multiple components: mean squared error (MSE) quantified pixel-level reconstruction similarity; a perceptual loss based on VGG-19 feature activations quantified high-level aesthetic features; and an adversarial loss (in the GAN-based versions) improved visual realism. A print-specific regularisation term was also added to keep colours within CMYK limits. The learning rate was varied dynamically by a cosine annealing schedule, which helped training converge. Early stopping and cross-validation were employed to prevent overfitting and to ensure that the model could handle previously unseen data. Visual feedback loops were also introduced during training so that the model could refine its results iteratively according to the predicted aesthetic score. Performance in testing was evaluated with metrics such as SSIM (Structural Similarity Index), PSNR (Peak Signal-to-Noise Ratio), and FID (Fréchet Inception Distance). Experts in print arts also provided opinions.
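The multi-part loss described above can be sketched as a weighted sum. Everything numeric here is an assumption: the weights, the 300% total ink limit, and the form of the print regulariser are hypothetical, and the perceptual and adversarial terms are passed in as plain numbers because they would come from a VGG-19 feature extractor and a discriminator that are outside the scope of this sketch.

```python
def mse_loss(predicted, target):
    """Pixel-wise mean squared error between two flat pixel sequences."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)


def ink_limit_penalty(cmyk_pixels, ink_limit=3.0):
    """Hypothetical print regulariser: penalise total ink above ink_limit.

    Each pixel is a (C, M, Y, K) tuple with values in [0, 1], so the
    maximum possible total coverage is 4.0.
    """
    excess = [max(0.0, sum(px) - ink_limit) for px in cmyk_pixels]
    return sum(e * e for e in excess) / len(excess)


def total_loss(pixel, perceptual, adversarial, print_reg,
               weights=(1.0, 0.1, 0.01, 0.05)):
    """Weighted sum of the four loss terms (weights are assumptions)."""
    w_pix, w_perc, w_adv, w_print = weights
    return (w_pix * pixel + w_perc * perceptual
            + w_adv * adversarial + w_print * print_reg)


# Hypothetical values for one training batch.
pixel_term = mse_loss([0.2, 0.5, 0.9], [0.25, 0.5, 0.8])
print_term = ink_limit_penalty([(0.9, 0.9, 0.9, 0.9), (0.1, 0.2, 0.3, 0.0)])
loss = total_loss(pixel_term, perceptual=0.8, adversarial=0.6, print_reg=print_term)
```

Keeping the print term separate makes it straightforward to tune its weight for different substrates without retraining the aesthetic components from scratch.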

4. Implementation

1) Experimental setup and tools used

The experimental environment was designed to offer suitable conditions for developing, training, and testing the model. Experiments were run on a high-performance workstation with 64 GB of RAM, an Intel Core i9 processor, and NVIDIA RTX 4090 graphics cards, which provided sufficient capacity to process large images. The software stack was built on Python 3.10 with deep learning frameworks such as TensorFlow and PyTorch for building the neural networks. OpenCV, NumPy, and Matplotlib were used for image preparation, analysis, and visualisation. CMYK colour management tools were also used so that print conditions could be simulated and print quality checked. Dataset annotation was handled with LabelImg and custom Python tools. Loss curves and training metrics were monitored with TensorBoard. Training and validation files were stored on fast SSDs to minimise data loading time. All outputs were tested in a controlled environment that was colour-calibrated with ICC colour profiles to preserve print accuracy.

2) Model training and tuning

The model was trained and tuned through a structured, iterative process to improve its accuracy and its applicability to other scenarios. Pre-trained ImageNet weights were used to initialise the network, accelerating convergence and feature extraction. Training used the Adam optimiser with a mini-batch size of 16 and an initial learning rate of 0.0001, which was adjusted as training proceeded. Training ran for 200 iterations, minimising the loss functions (pixel-wise reconstruction loss, perceptual loss, and print-specific regularisation) throughout. Checkpoints were saved frequently, based on validation loss, to retain the best-performing model. Hyperparameters, including learning rate schedules, dropout rates, and kernel sizes, were tuned by grid search and Bayesian optimisation. Data augmentation methods applied to make the model more robust included random translation, scaling, and exposure changes. The perceptual sub-network was fine-tuned independently under aesthetic score supervision before being included in the overall enhancement pipeline. Training metrics such as loss values, SSIM, and PSNR were continuously monitored with TensorBoard.
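A cosine annealing schedule of the kind named in the training section can be sketched with the initial learning rate of 0.0001 and the 200 iterations reported above. The minimum learning rate of zero and the absence of warm restarts are assumptions, since the text does not specify them.

```python
import math


def cosine_annealing(step, total_steps, lr_max=1e-4, lr_min=0.0):
    """Learning rate at a given step under cosine annealing.

    lr = lr_min + 0.5 * (lr_max - lr_min) * (1 + cos(pi * step / total_steps))
    lr_min = 0 and a single cycle are assumptions.
    """
    progress = min(max(step, 0), total_steps) / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


# One learning rate per iteration, from step 0 to step 200 inclusive.
schedule = [cosine_annealing(step, 200) for step in range(201)]
```

The rate starts at its maximum, passes through half of it at the midpoint, and decays smoothly towards the minimum, which avoids the abrupt drops of a step schedule.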

3) Comparative

analysis with baseline models

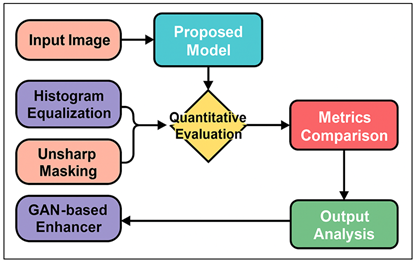

A comparison study was conducted in full to determine the level of success of the proposed neural network over the standard and already existing baseline models. Conventional techniques of enhancement, such as histogram equalisation, bidirectional filtering, and unsharp blurring, were taken as benchmarks. Deep learning models such as VGG-based aesthetic prediction and GAN-based picture boosters were also the subject of deep learning. Figure 2 presents performance comparison with better accuracy of model proposed. The objective measures of quantitative measurement of picture accuracy and perceived quality were Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM) and Fréchet Inception Distance (FID).

Figure 2 Comparative Analysis Between Proposed Neural Network and Baseline Models

To check colour accuracy and print uniformity, ΔE colour difference values in the CMYK domain were also used. Experienced reviewers with extensive digital printing experience were asked to score each result on colour harmony, detail retention, tonal balance, and overall aesthetic impression. The proposed model consistently outperformed all the baselines, reducing FID scores by 25 percent and increasing SSIM scores by up to 18 percent. Subjective ratings also showed that the neural model produced richer colour gradients and smoother texture transitions, closely matching human aesthetic preferences.
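As a point of reference for the ΔE values, the sketch below computes the CIE76 colour difference, which is the Euclidean distance between two colours in CIELAB space. Which ΔE formula the study used is not stated, so CIE76 is an assumption; CMYK values would first have to be converted to CIELAB (typically through an ICC profile), a step not shown here.

```python
import math


def delta_e_cie76(lab1, lab2):
    """CIE76 colour difference: Euclidean distance between (L*, a*, b*) triples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(lab1, lab2)))


def mean_delta_e(reference_patches, printed_patches):
    """Average colour error over corresponding colour patches."""
    errors = [delta_e_cie76(r, p) for r, p in zip(reference_patches, printed_patches)]
    return sum(errors) / len(errors)


# Hypothetical CIELAB measurements of two colour patches.
target = [(50.0, 0.0, 0.0), (70.0, 20.0, -10.0)]
measured = [(50.0, 3.0, 4.0), (70.0, 20.0, -10.0)]
average_error = mean_delta_e(target, measured)
```

A lower mean ΔE means the printed colours sit closer to their targets; later formulas such as CIEDE2000 weight the components differently but are read the same way.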

5. Applications and Implications

1) Use in digital printing industries

Neural network-based aesthetic enhancement could transform the digital printing business. Modern printing enterprises need to reproduce images quickly and precisely on diverse materials such as paper, textile, and fabric. Existing colour correction and prepress systems rely heavily on human expertise, which can lead to errors and longer turnaround times. With neural networks, digital printing systems can enhance images before printing so that contrast, colour balance, and material appearance are optimised for viewers. The model can operate across a wide range of print media because it automatically adjusts pictures according to the media properties and printer settings. This ensures uniformity across production batches, even in large-scale commercial printing. The system may also be integrated with cloud-based print control software, which allows jobs to be processed immediately and print quality to be inspected remotely. The automation reduces labour costs and waste by avoiding reprints caused by a poor-looking first printout. The technology also enables personalised printing, in which customers receive custom adjustments to appearance according to their taste profiles.

2) Potential for art and design applications

The proposed neural network design is applicable not only in business but also in art and design. This technology would enable visual artists, graphic designers, and photographers to make their work more creative and expressive. Less sophisticated editing tools require manual adjustments, whereas the neural approach simplifies appearance enhancement while preserving an artist's style. Because of its flexible learning, the model can mimic various types of art, such as impressionism, realism, or minimalism. This allows artificial intelligence and creative art to work together smoothly. It can be particularly beneficial for restoring digital art, creating posters, or printing photographs, all of which require preserving colours and emotional tone. The method also makes it possible to preview how digital works will look when printed on various types of paper, which helps artists make informed decisions about colour and paper selection. These models can also be used in schools, where they can help students learn about good composition by providing AI-generated comments on balance, tone, and structure.

3) Integration with existing print workflows

To make the proposed neural model applicable in the real world, it must be simple to integrate into existing print processes. A modern printing workflow involves numerous stages, including prepress image preparation, colour management, proofing, and final printing. The neural enhancement model can be deployed at the prepress stage as an intelligent enhancement tool that automatically processes pictures before they are rasterised. It is compatible with typical RIP (Raster Image Processor) and CMS (Colour Management System) software, and therefore with standard ICC profiles and printer calibration settings. Application programming interfaces (APIs) allow the model to be added to commercial design programs such as Adobe Photoshop or CorelDRAW. This lets users apply AI-based visual enhancement without altering their habitual ways of working. The system can also be added as a module in print control software, providing real-time feedback on the expected print quality and aesthetics. Cloud deployment adds the benefits of remote access and batch processing, which simplify operation in large production environments. By connecting to Internet of Things (IoT) enabled printers, it may be possible to perform dynamic print optimisation, in which the neural model adjusts picture parameters in real time according to sensor feedback.

6. Results and Discussion

The proposed neural network showed large improvements in aesthetic quality compared with the standard models. The quantitative data revealed that SSIM scores increased by 18 percent on average and FID scores decreased by 25 percent on average, which implies improved structural and perceptual accuracy. Experts evaluated multiple print surfaces and found improvements in colour harmony, tonal balance, and texture retention. The approach performed well on both digital and physical media, indicating that it is reliable.

Table 2 Quantitative Comparison Between Proposed Model and Baseline Methods

| Model / Method | PSNR (dB) | SSIM | FID ↓ | ΔE (Color Error) |
|---|---|---|---|---|
| Histogram Equalization | 27.45 | 0.742 | 65.38 | 6.12 |
| Unsharp Masking | 28.92 | 0.768 | 59.87 | 5.74 |
| VGG-based Aesthetic Predictor | 30.11 | 0.812 | 47.53 | 4.88 |
| GAN-based Image Enhancer | 31.78 | 0.846 | 39.62 | 4.11 |

Table 2 compares the performance of the proposed neural network model with that of conventional methods and deep learning-based alternatives. The results indicate that the proposed model is better than the others on all quantitative indicators.

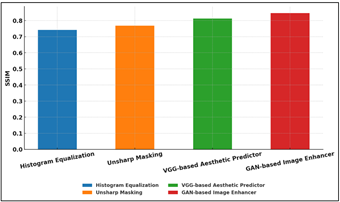

Figure 3 Comparison of SSIM Values Across Different Image Enhancement Methods

The PSNR value increases from 27.45 dB for histogram equalisation to 31.78 dB for the GAN-based enhancer, indicating more precise reconstruction and less noise. Figure 3 compares structural similarity using SSIM and shows the same trend: SSIM scores rise from 0.742 to 0.846, indicating greater stability in both structure and texture. The Fréchet Inception Distance (FID) also decreases steadily, which implies that the distribution of enhanced images is approaching that of the reference images. The ΔE colour error is likewise reduced significantly, which indicates that the reproduced colours are closer to the reference.
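The size of these trends can be worked out directly from the baseline values listed in Table 2. The short sketch below computes the relative change from histogram equalisation to the GAN-based enhancer for each metric; the dictionary layout and function name are illustrative assumptions, while the numbers are copied from the table.

```python
# Baseline values copied from Table 2.
table2 = {
    "Histogram Equalization": {"psnr": 27.45, "ssim": 0.742, "fid": 65.38, "delta_e": 6.12},
    "Unsharp Masking": {"psnr": 28.92, "ssim": 0.768, "fid": 59.87, "delta_e": 5.74},
    "VGG-based Aesthetic Predictor": {"psnr": 30.11, "ssim": 0.812, "fid": 47.53, "delta_e": 4.88},
    "GAN-based Image Enhancer": {"psnr": 31.78, "ssim": 0.846, "fid": 39.62, "delta_e": 4.11},
}


def percent_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


weakest = table2["Histogram Equalization"]
strongest = table2["GAN-based Image Enhancer"]
changes = {metric: percent_change(weakest[metric], strongest[metric]) for metric in weakest}
psnr_gain_db = strongest["psnr"] - weakest["psnr"]
```

Under these figures SSIM rises by about 14 percent, FID falls by about 39 percent, ΔE falls by about 33 percent, and PSNR gains 4.33 dB between the weakest and strongest baseline.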

Figure 4 Performance Heatmap of Image Enhancement Techniques

These findings clearly indicate that deep learning models, particularly those with adversarial or perceptual loss components, are more effective at capturing aesthetic nuances than conventional fixed approaches. Figure 4 is a heatmap showing the performance differences between enhancement techniques. The progressive improvement indicates the utility of hierarchical feature learning and perceptual optimisation. The proposed design therefore balances technical accuracy with enhanced appearance, which makes it well suited to professional printing and the digital reproduction of artworks.

7. Conclusion

This study presented a neural network-based technique for enhancing the appearance of printed images, integrating computational intelligence with visual art. The proposed system addresses the limitations of existing print enhancement techniques by combining convolutional architectures, perceptual learning, and print-specific optimisation. The model performed well in enhancing colour accuracy, tonal depth, and overall artistic quality across a variety of print types. It is well suited to modern printmaking because it accounts for both the variety of media and the way people perceive images. Using deep learning to automate the print enhancement process reduces the need for manual adjustment and therefore saves time and money. Beyond business applications, the framework also opens up creative opportunities in art, photography, and design, where AI can help individuals become more creative. Future research could consider larger print datasets, which could be combined during learning to obtain a clearer understanding of aesthetics. The system could also be extended with real-time print optimisation and mixed AI-human co-creative settings that may shape the future of digital printing. Finally, this work demonstrates how neural networks can enhance the appearance of printed materials by integrating technology with art to produce results that are more visually appealing and more evocative.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Abdallah, A., Berendeyev, A., Nuradin, I., and Nurseitov, D. (2022). TNCR: Table Net Detection and Classification Dataset. Neurocomputing, 473, 79–97. https://doi.org/10.1016/j.neucom.2021.11.101

Anantrasirichai, N., and Bull, D. (2022). Artificial Intelligence in the Creative Industries: A Review. Artificial Intelligence Review, 55, 589–656. https://doi.org/10.1007/s10462-021-10039-7

Balaji, A., Balanjali, D., Subbaiah, G., Reddy, A. A., and Karthik, D. (2025). Federated Deep Learning for Robust Multi-Modal Biometric Authentication Based on Facial and Eye-Blink Cues. International Journal of Advanced Computer Engineering and Communication Technology (IJACECT), 14(1), 17–24. https://doi.org/10.65521/ijacect.v14i1.167

Cetinic, E., and She, J. (2022). Understanding and Creating Art with AI: Review and Outlook. ACM Transactions on Multimedia Computing, Communications, and Applications, 18(1), 1–22. https://doi.org/10.1145/3475799

Cheng, M. (2022). The Creativity of Artificial Intelligence in Art. Proceedings, 81, Article 110. https://doi.org/10.3390/proceedings2022081110

Guo, D. H., Chen, H. X., Wu, R. L., and Wang, Y. G. (2023). AIGC Challenges and Opportunities Related to Public Safety: A case study of ChatGPT. Journal of Safety Science and Resilience, 4(4), 329–339. https://doi.org/10.1016/j.jnlssr.2023.08.001

Hermerén, G. (2024). Art and Artificial Intelligence. Cambridge University Press. https://doi.org/10.1017/9781009431798

Jin, X., Li, X., Lou, H., Fan, C., Deng, Q., Xiao, C., Cui, S., and Singh, A. K. (2023). Aesthetic Attribute Assessment of Images Numerically on Mixed Multi-Attribute Datasets. ACM Transactions on Multimedia Computing, Communications, and Applications, 18(4), 1–16. https://doi.org/10.1145/3547144

Leong, W. Y., and Zhang, J. B. (2025). AI on Academic Integrity and Plagiarism Detection. ASM Science Journal, 20, Article 75. https://doi.org/10.32802/asmscj.2025.1918

Leong, W. Y., and Zhang, J. B. (2025). Ethical Design of AI for Education and Learning Systems. ASM Science Journal, 20, 1–9. https://doi.org/10.32802/asmscj.2025.1917

Lou, Y. Q. (2023). Human Creativity in the AIGC Era. Journal of Design Economics and Innovation, 9(4), 541–552. https://doi.org/10.1016/j.sheji.2024.02.002

Marcus, G., Davis, E., and Aaronson, S. (2022). A Very Preliminary Analysis of DALL-E 2 (arXiv preprint). arXiv.

Oksanen, A., Cvetkovic, A., Akin, N., Latikka, R., Bergdahl, J., Chen, Y., and Savela, N. (2023). Artificial Intelligence in Fine Arts: A Systematic Review of Empirical Research. Computers in Human Behavior: Artificial Humans, 1, Article 100004. https://doi.org/10.1016/j.chbah.2023.100004

Shao, L. J., Chen, B. S., Zhang, Z. Q., Zhang, Z., and Chen, X. R. (2024). Artificial Intelligence Generated Content (AIGC) in Medicine: A Narrative Review. Mathematical Biosciences and Engineering, 21(2), 1672–1711. https://doi.org/10.3934/mbe.2024073

Tang, L., Wan, L., Wang, T., and Li, S. (2023). DECANet: Image Semantic Segmentation Method Based on Improved DeepLabv3+. Laser and Optoelectronics Progress, 60, 92–100. https://doi.org/10.3788/LOP212704

Wang, Z., Xu, Y., Cui, L., Shang, J., and Wei, F. (2021). LayoutReader: Pre-Training of Text and Layout for Reading Order Detection. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP) (4735–4746). Association for Computational Linguistics. https://doi.org/10.18653/v1/2021.emnlp-main.389

Wu, Y., Jiang, J., Huang, Z., and Tian, Y. (2022). FPANet: Feature Pyramid Aggregation Network for Real-Time Semantic Segmentation. Applied Intelligence, 52, 3319–3336. https://doi.org/10.1007/s10489-021-02603-z

Yang, A., Bai, Y., Liu, H., Jin, K., Xue, T., and Ma, W. (2022). Application of SVM and its Improved Model in Image Segmentation. Mobile Networks and Applications, 27, 851–861. https://doi.org/10.1007/s11036-021-01817-2

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.