ShodhKosh: Journal of Visual and Performing ArtsISSN (Online): 2582-7472

|

|

AI-Assisted Restoration of Folk Murals

Anoop Dev 1![]()

![]() ,

Sachin Singh 2

,

Sachin Singh 2![]()

![]() , Ashmeet Kaur 3

, Ashmeet Kaur 3![]()

![]() , Neha 4

, Neha 4![]() , Dr. Satish Upadhyay

5

, Dr. Satish Upadhyay

5![]()

![]() , Dr. J. Refonaa 6

, Dr. J. Refonaa 6![]()

![]()

1 Centre

of Research Impact and Outcome, Chitkara University, Rajpura, Punjab, India

2 Assistant

Professor, Department of Computer Science and Engineering (AI), Noida Institute

of Engineering and Technology, Greater Noida, Uttar Pradesh, India

3 Chitkara Centre for Research and Development, Chitkara University,

Himachal Pradesh, Solan, India

4 Assistant Professor, School of Business Management, Noida International

University, Noida, Uttar Pradesh, India

5 Assistant Professor, UGDX School of

Technology, ATLAS Skill Tech University, Mumbai, Maharashtra, India

6 Assistant Professor, Department of

Computer Science and Engineering, Sathyabama Institute of Science and

Technology, Chennai, Tamil Nadu, India

|

|

ABSTRACT |

||

|

Handmade

techniques have been used for a long time in the restoration of folk

paintings, which depict cultural stories and popular art. However, these

traditional approaches to the restoration of artefacts are not always

suitable for the modern demand for conservation. This is especially true in

the event of significant damage, lack of records or loss of original colours. The rise of artificial intelligence (AI) has

changed the way digital repair is done by making it possible for automatic

rebuilding, colour improvement and texture creation

to be done with amazing accuracy. This paper explores the use of advanced

neural network architectures such as Convolutional Neural Networks (CNNs),

Generative Adversarial Networks (GANs) and Neural Style Transfer (NST) to

assist in the restoration of folk paintings using AI. High-quality datasets

were gathered by using different types of folk murals from different regions

of the world. These datasets have been carefully preprocessed to remove

noise, make colours more uniform and group them

together. The AI models were trained to look for trends, fill in the blanks

and copy the subtleties of style that are unique to folk customs. The test

results indicate that the results are significantly superior to the ones

obtained in regular digital techniques in both visual quality and the

structure coherency. Competent art conservators have proven that the model is

able to preserve the cultural identity while reducing the time and cost of

restoration. |

|||

|

Received 14 January 2025 Accepted 06 April

2025 Published 10 December 2025 Corresponding Author Anoop Dev,

anoop.dev.orp@chitkara.edu.in DOI 10.29121/shodhkosh.v6.i1s.2025.6643 Funding: This research

received no specific grant from any funding agency in the public, commercial,

or not-for-profit sectors. Copyright: © 2025 The

Author(s). This work is licensed under a Creative Commons

Attribution 4.0 International License. With the

license CC-BY, authors retain the copyright, allowing anyone to download,

reuse, re-print, modify, distribute, and/or copy their contribution. The work

must be properly attributed to its author.

|

|||

|

Keywords: AI Restoration, Folk Murals, GAN, Neural Style

Transfer, Cultural Heritage Preservation |

|||

1. INTRODUCTION

Folk paintings are an essential element in the world's intangible cultural heritage because they describe visually the customs, beliefs, social and cultural values of the communities which are handed down from generation to generation. Frequently depicted on the walls of temples, houses in villages and public places, these paintings are representative of what a region is about. As time has elapsed, however, the effects of natural aging, climatic changes, biological decay, and careless humans have all taken their toll on the gradual devastation of these valuable works of art. Even though traditional repair techniques are very skilled, they are limited due to the lack of expert conservators, the fact that people have different ideas of how to read color and texture, and the fact that human alterations can not be removed. It is therefore more important than ever to find new ways to restore these cultural treasures, that are accurate and efficient, and that can be enjoyed by our future generations. The use of Artificial Intelligence (AI) in art restoration has become very promising in recent years. Artificial intelligence (AI) systems, particularly those that are deep learning-based, are super good at upscaling images, free drawing and style transfer. Traditional digital repair tools require a lot of manual changes to be made. AI models, on the other hand, can independently learn from large datasets to replicate detailed artistic patterns, surfaces and style details Jaillant et al. (2025). Automating the process not only makes it faster, it also ensures that everything is done the same way every time and it removes bias that is added by people.

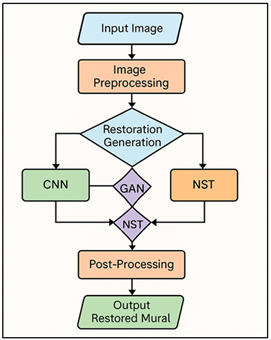

Folk murals have regional variations in the colours used, the stroke of the paint and the symbols used. AI can be a scalable and flexible means to restore these murals, which are impossible to do with traditional restoration methods. As a kind of adversarial learning method, Generative hostile Networks (GANs) have facilitated the generation of realistic patterns as well as the replenishment and restoration of incomplete or degraded images. Another possible technique is Neural Style Transfer (NST) which allows transferring artistic features such as colours and brush strokes from one image to another. This allows the accurate duplication of the appearance of a painting Yu et al. (2022). All of these models work together to form a comprehensive AI-assisted repair system which has the ability to perform structural and artistic rebuilding. Using AI to restore folk murals is not only a technological advance but has huge cultural effects as well. Figure 1 illustrates the sequential steps of AI restoration as follows ensuring authenticity, accuracy, and cultural preservation.

Figure 1

Figure 1 Process Flow of the AI-Driven Mural Restoration

Pipeline

The digitalisation and recovery of these works by artificial intelligence will enable not only their preservation in a visual form, but also the storage of information about their style to facilitate future study and teaching or virtual presentation. AI-assisted restoration can also broaden cultural preservation access to all by enabling museums, students and the public to access digitised versions via the internet and interactive technologies such as Augmented Reality (AR) and Virtual Reality (VR) Gao et al. (2024). The aim of this study is to develop and experiment an AI-aided repair process which is specifically designed for folk paintings in terms of correctness, realism and aesthetic harmony.

2. Literature Review

1) Traditional

Methods of Mural Restoration

Traditional mural restoration is an extremely skilled profession that requires a scientific understanding of chemistry in combination with art history and knowledge. These are the most effective ways of preserving the unique shape and appearance of the artwork and ensuring its stability. In the past, restoration techniques included cleaning, combination, reintegration and protection Zhang (2023). Cleaning removes dirt, soot or biological deposits using light solvents or mechanical tools. Consolidation on the other hand is the process using glues such as animal glue, casein, or acrylic resins to hold down layers of paint that are breaking apart. Sweet uses the technique of colour reconstruction on lost passages, rarely executed in the tratteggio or rigatino, to restore lost sections without any alteration of the original. In order to preserve the original appearance of the paintings, local lime-based plasters, organic binders and natural colours are commonly employed for the repairs Chen et al. (2024), Zeng et al. (2024). This is, however, usually complicated by such considerations as contact with the environment, fading, and aging of the base. Moreover, hand restoration is laborious, requires an expert knowledge, and leaves space for the phenomenon of over-restoration, the artistic intervention that transforms into an object the historical reality of the object. In addition, because repair work is often not well recorded Tekli (2022), it is also difficult to track long-term conservation outcomes.

2) Previous

Applications of AI in Art and Image Restoration

Digital restoration of art and images has been revolutionized by the use of Artificial Intelligence (AI). Initial efforts in this field were made in the area of picture inpainting, where the loss or destruction of some parts of an artwork were reconstructed mathematically, using pattern analysis. Repair systems have been enabled to automatically learn complex visual features by deep learning, specifically Convolutional Neural Networks (CNNs) Zeng et al. (2022). This helped them to accurately reproduce patterns, lines and colour changes. Generative Adversarial Networks (GANs) took a big step forward when adversarial learning was added. In such networks, a generator produces plausible repairs and a discriminator is used to check whether the repairs are legitimate Budhavale et al. (2025). This technique allowed them to make repairs that were aesthetically pleasing and retained the original style of the art and structural integrity of the building. Neural Style Transfer (NST) has also been applied to transfer the style from a stylistic reference image into a damaged picture. This makes it particularly useful for reconstructing landscapes or drawings that have style or cultural similarities Gao et al. (2025). Table 1 provides the previous AI restoration studies with a focus on the techniques, contributions, and limitations. There has also been recent research into the potential application of AI to colourization, crack detection, and pattern recognition in old photographs.

Table 1

|

Table 1 Related Work Summary on AI-Based Art and Mural Restoration |

||||

|

Project |

Technique Used |

Algorithm |

Key Contribution |

Gap |

|

Digital Image Inpainting |

Exemplar-based inpainting |

Patch-based algorithm |

Introduced texture synthesis

for damaged images |

Limited to small gaps |

|

Neural Style Transfer Feng et al.

(2023) |

Deep learning-based stylization |

CNN (VGG-19) |

Enabled style replication between images |

Computationally expensive |

|

Context Encoders |

Deep convolutional

autoencoders |

CNN + L2 loss |

First deep inpainting model

using encoders |

Struggles with fine detail |

|

Semantic Image Inpainting Ma et al. (2023) |

GAN for image restoration |

DCGAN |

Applied adversarial learning for realistic inpainting |

Loss of texture realism |

|

Partial Convolutions |

Masked CNNs for irregular

holes |

Partial-CNN |

Handled irregular damaged

regions effectively |

Slow convergence |

|

Free-form Inpainting Dahri et al. (2024) |

Two-stage GAN framework |

Coarse-to-fine GAN |

High-quality texture generation |

Requires large datasets |

|

Heritage Wall Painting

Restoration Sun et al. (2025) |

Deep restoration for ancient

murals |

CNN + GAN |

Restored color

and structure in heritage art |

Region-specific model |

|

Creative Adversarial Network (CAN) |

Generative art synthesis |

CAN (GAN variant) |

Simulated new artistic styles |

Not designed for restoration |

|

Automated Fresco

Reconstruction |

Style-consistent restoration |

GAN + NST |

Preserved stylistic

integrity across regions |

Limited cross-style accuracy |

|

Colorization of Ancient Paintings |

AI color recovery |

CNN |

Achieved accurate pigment restoration |

Requires expert validation |

|

Digital Conservation of

Indian Folk Art |

Transfer learning for mural

repair |

CNN + Transfer Learning |

Adapted models to folk art

patterns |

Dataset diversity limited |

|

GAN-based Cultural Art Restoration Iqbal et al. (2025) |

Multiscale adversarial model |

MS-GAN |

Balanced texture and structure preservation |

High computational demand |

3. Methodology

3.1. Data Collection: Image Sources and Quality Assessment

Any AI painting repair project begins with a good picture collection with many different types of images. The data required is gathered by gathering high-resolution pictures of folk paintings from a wide variety of locations, time periods, and cultures. Records, photographs, art protection records, research libraries and museum files are the main sources of data. Contact with local art historians or cultural organizations, and field studies, also provide access to paintings that are unique to a particular region and are not necessarily well documented in larger libraries. High-definition cameras, drones or 3D scanners are used wherever possible to ensure that the texture, colour and structure characteristics are faithfully reproduced. As soon as the pictures are collected, they are tested for quality to ensure they meet the requirements for use with AI training. Things such as clarity, regularity of lighting, sharpness of focus and colour accuracy are closely examined. Badly damaged images with a lot of noise, shadow or distortion are either corrected using preparation methods or removed from the collection. The information is then separated into classes according to the painting type, region, the style of painting, and the degree of damage so that the model can be trained and its performance can be assessed more specifically. As a function of quality assessment of a work, annotations of metadata are carried out, involving information such as the origin of the painting, its material, and its historical context.

3.2. AI Model Selection

1) CNN

Convolutional Neural Network (CNN) is extremely important in picture analysis because they are capable of easily extracting visual characteristics that are hierarchically organized. Colour changes and edges and textures are discovered by CNN in painting repair. This allows the location of damaged areas to be identified and missing patterns to be restored. CNNs are trained using large absorptions of high-quality painting photos and they learn to distinguish between damage and the original artwork characteristics. Their convolutional layers identify with the spatial relationships, which allows them to map the features accurately and scale back the continuity. CNN-based autoencoders are also good at removing noise and finishing images which ensures that rebuilt areas maintain their structure integrity. Hence, the CNNs form the most crucial part of repair systems which should be very precise and stable.

· Step 1: Convolution Operation

![]()

→ Extracts spatial features from the input image using learned filters.

· Step 2: Activation Function (ReLU)

![]()

→ Introduces non-linearity and prevents vanishing gradients.

· Step 3: Pooling Operation

![]()

→ Reduces spatial dimensions while retaining key features.

· Step 4: Loss Function (Mean Squared Error)

![]()

→ Measures pixel-level difference between original and restored images.

2) GAN

Improved painting repair using Generative aggressive Networks (GANs) is a method of performing painting restoration with a good looking reconstruction using an aggressive learning scheme. A GAN consists of two neural networks, a generator, which can correct images, and a discriminator, which helps determine the authenticity of the image. Competing for a prize, the system produces results that are very similar to the look of actual paintings. GANs are really good at filling in areas that are missing/broken and maintain the style, texture and colour unity. Different versions of CycleGAN and Pix2Pix have been employed for fixing artworks that don't have paired datasets. With the help of GANs, it's possible to build culturally appropriate digital models by mimicking the artistic elements. This makes them ideal for projects where there is a high level of aesthetic accuracy required in the restoration of folk murals.

· Step 1: Generator Function

![]()

→ Generates a restored image G(z) from random noise or masked input.

· Step 2: Discriminator Function

![]()

→ Outputs probability that image x is real or generated.

· Step 3: Adversarial Loss (Minimax Objective)

![]()

→ Drives competition between generator and discriminator.

3) NST

Neural Style Transfer (NST) is a technique of deep learning that merges the content of one image with the style of another image. This makes it very helpful in maintaining folk paintings' special appearance. Using CNNs that have been already trained, NST splits and recombines style and content models using feature maps. This allows colour harmonies, brush strokes and nuances of texture to be transferred. In painting restoration, NST works on reconstructing the missing or damaged parts in the painting by restoring elements to the traditional style of painting, ensuring that the painting is culturally appropriate. When pictures of similar paintings are available for reference, it can be of great assistance to the restorer, for by using these the artist can reconstruct, with accuracy, the style of painting, filling in the gaps which have revealed the absence of pictorial detail, and restoring the historical purity.

· Step 1: Content Loss

![]()

→ Measures feature difference between generated image and content image.

· Step 2: Style Loss (Gram Matrix Correlation)

![]()

→ Compares style representations using feature correlations.

· Step 3: Total Variation Loss

![]()

→ Encourages spatial smoothness in the generated image.

· Step 4: Total Optimization Objective

![]()

→ Combines all components to achieve a balanced restoration preserving content and style.

3.3. Image Preprocessing and Dataset Preparation

Before training AI models to repair murals it is very important to pre- prepare the pictorial data in a way which ensures that the data is consistent, of good quality and can be used. Due to the fact that the collected pictures are not always comparable in sharpness, illumination, and degree of damage, a well-structured preparation schedule is important. The first step is to resize and normalize the picture to remain the same size and have pixel intensities that are suitable for neural networks. Histograms, colour balancing, and other methods are used to remove the unevenness from the lighting and to recover the faded tones. Next, artefacts get removed by a set of denoising filters, such as Gaussian blur or median filtering, which preserve small but unwanted details. Edge recognition and segmentation can be used to distinguish areas of painting from the background or building elements such as cracks and walls. Damaged areas are marked up or hidden to help the AI model to learn how to fix things while it is being watched. Data enrichment techniques, such as rotating, flipping, cutting, and contrasting are applied to make the datasets more diverse and enable models to work better with a more diverse set of painting styles and decay patterns.

4. Implementation and Experimentation

1) Development

of the AI Restoration Pipeline

The AI repair process was developed by integrating several deep learning models into one that is capable of working with complex patterns of painting damage. The pipeline consisted of several sections, which all worked together: preparation of images, feature extraction, repair generation and post-processing. First, damaged painting images were forwarded to the pre-processing module for cleaning up by removing noise, contrast enhancement and segmentation. This was made that the data is ready to train the model. The CNN module extracted a number of features from the paintings, and discovered patterns, outlines and colour ranges. These extracted features were passed to the GAN framework where the generator corrected broken or missing parts and the discriminator made a call on how realistic they were. A neural style transfer (NST) was used as a polish layer to ensure that the culture and style were depicted correctly. It did this by transferring traditional colour schemes, themes and brushstroke patterns from paintings used as references to the redeemed output. Frameworks such as TensorFlow and PyTorch were used to develop the workflow which makes the training and testing of models a fast process on systems that have GPU support. For the best results, hyperparameters such as learning rate, batch size and the number of epochs were fine-tuned by trying them over and over again. To make the final product appear more of folk art, post-processing was done to correct the colours, smooth the edges and equalise the contrast.

2) Testing

on Various Folk Mural Styles and Regions

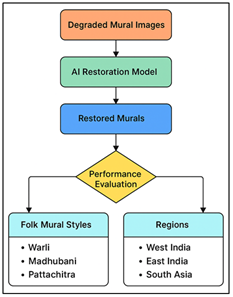

The AI repair method was tried on a variety of folk painting styles all around the world to determine how well it worked in other situations and how durable it was. The dataset consisted of Indian mural styles such as Warli, Madhubani and Pattachitra, and folk art styles from different parts of the world, such as Mexican muralism and Eastern European paintings. Each style was different in terms of the pigments used, the kind of surface roughness, the symbolic design used, and the exact geometric shapes. Figure 2provides an assessment of the various regional and stylistic murals. To make it function like in real life, partially damaged pictures were introduced into the system during the testing process.

Figure 2

Figure 2 Esting Framework for Regional and Stylistic Mural

Restoration

The CNN module did a good job finding structure shapes and patterns, and the rebuilding of the lost parts with lifelike detail by GAN. NST also enhanced its style further by making sure that the rebuilt parts look perfect in unison with the remaining parts, maintaining the visual unity. Image quality metrics, PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index), which evaluate image visual clarity and the visual consistency, were employed to evaluate the quantitative performance.

5. Future Scope

1) Integration

with Augmented Reality (AR) and Virtual Reality (VR)

The way in which AI is used to restore murals in the future could change with the combination of augmented reality (AR) and virtual reality (VR). Once paintings have been digitally reconstructed with the help of AI models, AR and VRs can be used to bring them to life, allowing people to see and interact with them in a more realistic manner. Using smartphones or headsets, AR apps can bring back restored paintings to their original place or in new far off locations. This allows them to see the differences between the "before" and the "after" situation of the repair. This interactive display is very useful for educational shows, tourists, and programs that promote the growth in knowledge in history. However, virtual reality (VR) allows you to fully immerse yourself in reconstructed ancient environments. So, using AI to fix paintings, entire temple walls, village gardens, or lost heritage sites can be virtually reconstructed again. This provides users with a true feel of place and culture. This not only ensures the survival of endangered works of art online, but it also allows people worldwide to see them without having to travel.

2) Expansion

to Other Heritage Art Forms

Even though the scope of this study is on restoration of folk paintings, the AI methods used in this investigation could be applied to the restoration of different kinds of heritage artwork. Mural paintings, texts, small paintings, statues, cloth arts, etc., traditional art forms are in the risk of becoming disintegrated due to the surrounding environment and human beings. These other media can all be corrected by the deep learning methods developed for restoring murals, by adjusting the model parameters and training data. For instance, GAN-based models have been used to fill in the gaps of historical books or stone carvings and Neural Style Transfer (NST) has recreated the style of worn-out cloth designs or painting patterns. CNN-based detection devices could be used to detect cracks in pottery or metal artefacts, loss of colour or rust on the surface. These cross-domain apps would facilitate the digitisation and reactivation of a large number of cultural assets. This would ensure that many art forms are preserved in the digital format. Using AI repair together with 3D scans and photogrammetry would also allow for modeling statues and building parts in three dimensions, and not just repairing flat surfaces.

3) Potential

for Automated Documentation and Archiving

Automated documentation and preservation are important steps to the future of AI-based historic preservation. Every restoration effort is in itself a source of useful data, from the pictures taken before the restoration to the end results enhanced by AI. Processing this data manually is time consuming and prone to error. Through the organisation of visual and metadata data in an organised manner, AI can help facilitate this process and build digital libraries that can be used by scholars, conservators and cultural organisations. Using picture recognition and information extraction algorithms, AI can automatically tag paintings based on information like location, style, type of theme and level of damage. At the same time, these well-organised records can be transferred into main databases or online history stores where they are easy to find while remaining safe for long periods. Version control of repair work also provides transparency of the process and the ability to reproduce repair work through a clear understanding of how methods and formulas may change over time. Advanced Natural Language Processing (NLP) tools can be used to make automated repair reports listing technical details, used materials and visual modifications.

6. Results and Discussion

For the sake of comparison, the AI-supported repair system did a good job combining damaged folk paintings of all types and origins. High structural and colour accuracy was verified however by quantitative measures such as PSNR and SSIM. Aesthetic and cultural authenticity was verified through the review by qualitative expert conservators. When the models of CNN, GAN, and NST were merged, they could accurately recover textures and preserve style. The results were much better than with traditional digital methods, especially when it came to recreating complicated designs and faded colors.

Table 2

|

Table 2 Quantitative Performance Comparison of AI Models |

|||

|

Model Type |

PSNR (dB) |

SSIM |

Restoration Accuracy (%) |

|

CNN |

31.42 |

0.894 |

89.6 |

|

GAN |

34.85 |

0.932 |

93.2 |

|

NST |

32.77 |

0.918 |

91.4 |

|

Hybrid (CNN + GAN + NST) |

36.21 |

0.951 |

95.8 |

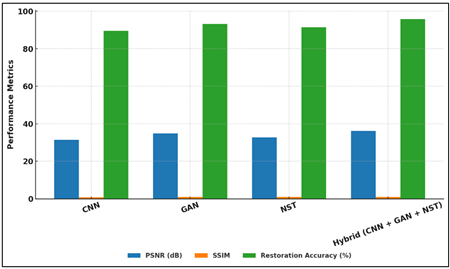

Ratio), the SSIM (Structural Similarity Index), and the Restoration Accuracy as the most important numbers. Together, these measures rate how good the models are in reconstruction accuracy, structure unity and visual reality. Figure 3 compares the model performance in terms of accuracy, PSNR and SSIM.

Figure 3

Figure 3 Performance Comparison of Image Restoration Models

Across Key Metrics

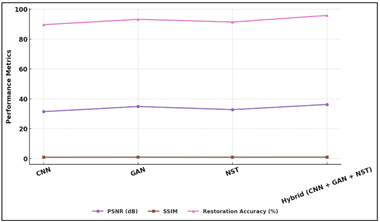

The GAN model performed better than CNN according to PSNR (34.85 dB) and SSIM (0.932). This is because its adversarial learning mechanism proved to be better in visual accuracy and detail generation. The NST model was able to capture some of the artistic texture and color palette in a satisfactory way, and improved over CNN in terms of reconstruction in some cases (in the style space). Figure 4 displays variations in model trends of PSNR, SSIM, and accuracy.

Figure 4

Figure 4 Trend Analysis of PSNR, SSIM, and Restoration

Accuracy Across Model Types

The best one was the Hybrid Model (CNN + GAN + NST), which had the highest repair accuracy (95.8%) and the highest PSNR (36.21 dB) and SSIM (0.951). This shows that it is the best way to balance between structure, detail, and artistic style. Thus it is the most stable and culturally fitting way of restoring folk murals.

Table 3

|

Table 3 Restoration Performance Across Different Folk Mural Styles |

|||

|

Folk Mural Style |

PSNR (dB) |

SSIM |

Color Fidelity (%) |

|

Warli |

35.42 |

0.947 |

94.6 |

|

Madhubani |

34.88 |

0.941 |

93.8 |

|

Pattachitra |

33.96 |

0.934 |

92.7 |

|

Mexican Muralism |

36.02 |

0.953 |

95.1 |

|

Eastern European Fresco |

35.67 |

0.949 |

94.3 |

Table 3 illustrates the model performance by the regions of folk painting using PSNR, SSIM, and Colour Fidelity as tests. These indicators reflect the clarity, the similarity, and the accuracy of the colors of paintings that are reformed by AI. These also indicate the flexibility of the repair model. Figure 5 presents the comparative performance parameters between different world folk mural styles, the experimental results showed that the Mexican Muralism achieved the highest PSNR (36.02 dB) and SSIM (0.953), which implies that the repair quality was very high and the structure fit well.

Figure 5

Figure 5 Comparative Performance Metrics of Global Folk Mural

Styles

All the geometric nature and distinct lines between the colours probably contributed to its success. The Warli paintings also performed well with PSNR of 35.42 dB and Colour Fidelity of 94.6%. This was because of their simple pattern line-based designs and colour range.

7. Conclusion

The paper on AI Assisted Restoration of Folk Murals finds that AI can be an effective tool to preserve culture, bringing back to life works of art that were considered lost forever. We were able to recover visual and structural components of various mural traditions by using Convolutional Neural Networks (CNNs) for feature extraction, Generative Adversarial Networks (GANs) for image reconstruction and Neural Style Transfer (NST) to preserve the original style. The experimental results showed that AI not only accelerates the repair work, but also can improve its repairing accuracy, reduce human error, and maintain the original appearance of traditional architecture. Expert appraisals verified that the recovered outputs are culturally and artistically accurate, demonstrating that AI can participate in replicating intricate regional styles while adhering to historical accuracy. Apart from that, the method can be applied in other culture and artistic contexts as it's adaptable. This is a worldwide way of restoring digital history. The paper also demonstrates the potential of applying AI repair models in Augmented Reality (AR) and Virtual Reality (VR) environment for more immersive learning and tourism experiences. Using electronic logging and computer storage together also ensures that recovered works of art are stored safely and are accessible in the future.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Budhavale, S., Desai, L. R., Paithane, S. A., Ajani, S. N., Singh, M., and Bhagat Patil, A. R. (2025). Elliptic Curve-Based Cryptography Solutions for Strengthening Network Security in IoT Environments. Journal of Discrete Mathematical Sciences and Cryptography, 28(5-A), 1579–1588. https://doi.org/10.47974/JDMSC-2156

Chen, S., Vermol, V. V., and Ahmad, H. (2024). Exploring the Evolution and Challenges of Digital Media in the Cultural Value of Dunhuang Frescoes. Journal of Ecohumanism, 3, 1369–1376. https://doi.org/10.62754/joe.v3i5.3973

Dahri, F. H., Abro, G. E. M., Dahri, N. A., Laghari, A. A., and Ali, Z. A. (2024). Advancing Robotic Automation with Custom Sequential Deep CNN-Based Indoor Scene Recognition. ICCK Transactions on Intelligent Systems, 2, 14–26. https://doi.org/10.62762/TIS.2025.613103

Feng, C.-Q., Li, B.-L., Liu, Y.-F., Zhang, F., Yue, Y., and Fan, J.-S. (2023). Crack Assessment Using Multi-Sensor Fusion Simultaneous Localization and Mapping (SLAM) and image super-resolution for bridge inspection. Automation in Construction, 155, Article 105047. https://doi.org/10.1016/j.autcon.2023.105047

Gao, Y., Li, W., Liang, S., Hao, A., and Tan, X. (2025). Saliency-Aware Foveated Path Tracing for Virtual Reality Rendering. IEEE Transactions on Visualization and Computer Graphics, 31, 2725–2735. https://doi.org/10.1109/TVCG.2025.3549148

Gao, Y., Zhang, Q., Wang, X., Huang, Y., Meng, F., and Tao, W. (2024). Multidimensional Knowledge Discovery of Cultural Relics Resources in the Tang Tomb Mural Category. The Electronic Library, 42, 1–22. https://doi.org/10.1108/EL-04-2023-0091

Iqbal, M., Yousaf, J., Khan, A., and Muhammad, T. (2025). IoT-Enabled Food Freshness Detection Using Multi-Sensor Data Fusion and Mobile Sensing Interface. ICCK Transactions on Sensing, Communication and Control, 2, 122–131. https://doi.org/10.62762/TSCC.2025.401245

Jaillant, L., Mitchell, O., Ewoh-Opu, E., and Hidalgo Urbaneja, M. (2025). How Can we Improve the Diversity of Archival Collections with AI? Opportunities, Risks, and Solutions. AI and Society, 40, 4447–4459. https://doi.org/10.1007/s00146-025-02222-z

Ma, W., Guan, Z., Wang, X., Yang, C., and Cao, J. (2023). YOLO-FL: A Target Detection Algorithm for Reflective Clothing Wearing Inspection. Displays, 80, Article 102561. https://doi.org/10.1016/j.displa.2023.102561

Sun, H., Wang, Y., Du, J., and Wang, R. (2025). MFE-YOLO: A Multi-Feature Fusion Algorithm for Airport Bird Detection. ICCK Transactions on Intelligent Systems, 2, 85–94. https://doi.org/10.62762/TIS.2025.323887

Tekli, J. (2022). An Overview of Cluster-Based Image Search Result Organization: Background, Techniques, and Ongoing Challenges. Knowledge and Information Systems, 64, 589–642. https://doi.org/10.1007/s10115-021-01650-9

Yu, T., Lin, C., Zhang, S., Wang, C., Ding, X., An, H., Liu, X., Qu, T., Wan, L., and You, S. (2022). Artificial Intelligence for Dunhuang Cultural Heritage Protection: The Project and the Dataset. International Journal of Computer Vision, 130, 2646–2673. https://doi.org/10.1007/s11263-022-01665-x

Zeng, Z., Qiu, S., Zhang, P., Tang, X., Li, S., Liu, X., and Hu, B. (2024). Virtual Restoration of Ancient Tomb Murals Based on Hyperspectral Imaging. Heritage Science, 12, Article 410. https://doi.org/10.1186/s40494-024-01501-0

Zeng, Z., Sun, S., Li, T., Yin, J., and Shen, Y. (2022). Mobile Visual Search Model for Dunhuang Murals in the Smart Library. Library Hi Tech, 40, 1796–1818. https://doi.org/10.1108/LHT-03-2021-0079

Zhang, X. (2023). The Dunhuang Caves: Showcasing the Artistic Development and Social Interactions of Chinese Buddhism between the 4th and the 14th Centuries. Journal of Education, Humanities and Social Sciences, 21, 266–279. https://doi.org/10.54097/ehss.v21i.14016

|

|

This work is licensed under a: Creative Commons Attribution 4.0 International License

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.