ShodhKosh: Journal of Visual and Performing ArtsISSN (Online): 2582-7472

|

|

Machine Learning Models for Style Transfer in Sculpture

Dr. Pragati Pandit 1![]() , Dr. Swarna Swetha Kolaventi 2

, Dr. Swarna Swetha Kolaventi 2![]()

![]() , Rajesh Kulkarni 3

, Rajesh Kulkarni 3![]() , Vivek Saraswat 4

, Vivek Saraswat 4![]()

![]() , Gagan Tiwari 5

, Gagan Tiwari 5![]() , Sourav Rampal 6

, Sourav Rampal 6![]()

![]()

1 Assistant

Professor, Department of Information Technology, Jawahar Education Society's

Institute of Technology, Management and Research, Nashik, India

2 Assistant

Professor, UGDX School of Technogy, ATLAS Skill Tech University, Mumbai,

Maharashtra, India

3 MVSR Engineering College, Hyderabad, Telangana, India

4 Centre of Research Impact and Outcome, Chitkara University, Rajpura,

Punjab, India

5 Department of Department of Computer

Sciences, Noida International University, Uttar Pradesh, India

6 Chitkara Centre for Research and

Development, Chitkara University, Himachal Pradesh, Solan, India

|

|

ABSTRACT |

||

|

The

application of machine learning to the field of sculpture has revitalised the

boundaries of artistic invention and online production. The paper is about

the high-order models of style transfer in sculpture which is the process of

transferring visual characteristics of art in one type of sculpture to

another not compromising on the structural integrity. Three models were

employed including CycleGAN, Neural Style Transfer (NST) and Diffusion-based

Generative Models. CycleGAN therefore facilitated unpaired data translation

and hence was able to translate the material textures and sculptural patterns

with an accuracy of style of 89.4. Neural Style Transfer had fine-grained

aesthetic embedding at a texture fidelity of 85.7 percent on 3D surface

renderings. Meanwhile, Diffusion Models were discovered to be more

encouraging in spatial consistency and volumetric detailing with structural

consistency of 92.1, superior to the traditional CNN-based techniques. The

data were the digital scans of the classical and modern sculptures, the data

were processed with the assistance of 3D voxel and mesh-based images.

Experimentation has shown that a hybridization of these models is more

expressive artistically and more efficient in calculations in the migration

of sculptural style. The findings show that the latest AI-driven applications

can not only imitate but also produce sculptural beauty and bridge the void

between digital art and solid production with the assistance of 3D printing

and CNC production. The piece is somehow related to the field of

computational art in that it provides a possible paradigm of machine

learning-like transfer to sculpture. |

|||

|

Received 13 January 2025 Accepted 06 April 2025 Published 10 December 2025 Corresponding Author Dr.

Pragati Pandit, drpragatipandit@gmail.com DOI 10.29121/shodhkosh.v6.i1s.2025.6637 Funding: This research

received no specific grant from any funding agency in the public, commercial,

or not-for-profit sectors. Copyright: © 2025 The

Author(s). This work is licensed under a Creative Commons

Attribution 4.0 International License. With the

license CC-BY, authors retain the copyright, allowing anyone to download,

reuse, re-print, modify, distribute, and/or copy their contribution. The work

must be properly attributed to its author.

|

|||

|

Keywords: Machine Learning, Style Transfer, Sculpture,

Cyclegan, Diffusion Models, Neural Style Transfer |

|||

1. INTRODUCTION

When machine learning is applied to the area of art and sculpture, the creative expression can be offered a new portion of possibilities, and the style transfer is a new area of research, which is emerging. Historically, style transfer has been applied to two-dimensional visual art, specifically, painting, where deep learning algorithms are trained to learn to reproduce the stylistic content of a given image on the texture of a given image. However, recent advances in the computational modeling, neural rendering and 3D generative architectures have generalized this concept to 3D sculpture Zhang et al. (2025). The models of style transfer used in sculpture focus on the shape, the depiction of the texture and space and reinterpretation that allows the artists and designer to integrate the aesthetical characteristics of different sculptural traditions and materials. The most significant developments here are deep neural networks with a specific focus on convolutional neural networks (CNNs) and generative adversarial networks (GANs) which can be trained to learn complex geometric and textural images based on large groups of sculptures. When trained on a diversity of sculptural objects (classical marble statues to present-day abstract buildings) algorithms are also capable of producing new designs with the same content structure as one object but assume the stylistic properties of another one Jiang (2025).

This translation of procedural aesthetic style in volume and space has been improved over the last few years by the invention of 3D deep learning techniques (including voxel-based models, point cloud networks, and implicit surface representations) which have been shown to generate results of high fidelity in a range of artistic styles. Better said, neural radiance fields (NeRFs) and diffusion models have led to works of machine-generated sculptures being more realistic and believable since they rely more on human artistic skills Shi and Hou (2025). The above ways cannot only be capable of enhancing artistic creativity, but also provide workable solutions in the conservation of heritage, the digital restoration and even the customization of design output using the 3D printing technologies. Artists can now reproduce works of ancient sculptures digitally in the current aesthetics, or even reconstruct damaged works of art by proposing stylistic coherence based on similar works Leong (2025). The convergence of art history, computational design and artificial intelligence thus forms a new paradigm where the creativity becomes a co-evolutionary one - between the human intuition and the machine intelligence. As machine learning remains dynamic, the problem of authorship, originality and the determination of the artistic value continues to be brought up on increasingly ethical and philosophical issues Guo et al. (2023). Nevertheless, the idea of style transfer in sculpture is the dramatic fusion of the tradition and innovation, the re-branding of the opportunities of the cultural and aesthetic knowledge to be preserved, redesigned and experienced by means of technology. In other words, machine learning fashion transfer models in sculpture do not merely expand the technical level of artificial intelligence, but expand the discussion between art and computation, and offer a new paradigm of manifestation of creativity in the digital era.

2. Related Work

The field of style transfer in art has reached its maturity in the field of 2D images and there are present efforts of experimenting it to 3D and sculptural cases. The first pioneer work, such as the neural algorithm of artistic style (constructed based on convolutional neural networks) of images, was the first step towards that differentiation and interchange of content and style representations to generate stylised images Guo et al. (2023). Subsequent feed-forward networks increased the speed of the process, and enabled almost real-time stylisation of networks through offline training of generative networks Ahmed et al. (2025). Specifically, such methods as StyleBank encoded every style in a different filter bank over an auto-encoder and enabled regional and incremental addition of styles Agnihotri et al. (2023). Multimodal colour/luminance channel deep hierarchies increased the quality of local textures and scales of stylisation Oksanen et al. (2023). These are however, image based creations that are not concentrated on the volumetric or sculptural forms.

The 3D space has more recent efforts to find solutions to style transfer between the shapes or mesh. As an illustration, 3DSNet framework proposed an unsupervised form of style transfer between shapes, between points clouds and meshes, which decodes content and style in 3D and is able to generate new objects that preserve the content of an object but adopt the style of another Caciora et al. (2023). Meanwhile, methods of treating industrial design employ huge geometric distortion and transfer of interest-consistency of textures onto the surfaces of an object in three dimensions to stylise the content of the surfaces and retain the structural content Zhao and Kim (2024). Although these methods are more aligned to volumetric and geometric worlds, they are not inclined to take the multidimensional engagement of surface texture, material and mass of form and artistic style that sculptural objects embodied. In the context of machine-learning in art, the latest surveys have provided an overview of the historical development of the classical machine learning to deep learning in the style transfer field, metrics, dataset challenges and applications in video and in the real world Fang et al. (2024). The philosophical and methodological question of authorship, creativity and the machine or human participation in the art creation are also raised in the literature Alcantara et al. (2024). A recent paper on style recognition in art -sculpture used the bio-inspired optimisation algorithm to classify sculpture style in a cultural heritage collection, which was designed specifically to sculpture or heritage objects only Martinez and Sharma (2025). Though it is not a style transfer, classification demonstrates the increasing interest of the machine-learning engagement in the three-dimensional art.

Nevertheless, style transfer in sculpture is an issue that has not been developed to its fullest. Further constraints than those of 2D or simple 3D product domain include geometry, topology, material and physical fabrication of surfaces, and problems. Literature that directly covers migration of sculptural form and style - neither recognition nor stylised image rendering - is very sparse. The above gap is the impetus of the present study: to implement and experiment more advanced models (e.g. cycle-GANs, neural style optimization, diffusion-based generative models) to transfer style between sculptural forms as opposed to images or meshes. In conclusion, even though style transfer of images has reached the level of maturity Guo et al. (2023)-Oksanen et al. (2023), and the style transfer of 3D/shapes is also emerging into focus Caciora et al. (2023)-Zhao and Kim (2024), it is the specific field of machine-learned style transfer of sculpture that considers the volumetric form, surface texture and materiality and fabrication pipeline. The literature on machine learning on sculptural style recognition Martinez and Sharma (2025) is suggestive of potential, but the transfer task is open to a large extent. These foundations are what fill in the gap in our work and this occurs through the application and comparison of different sophisticated models in the sculptural field.

Table 1

|

Table 1 Summary of Related Work in Style Transfer in Sculpture |

||||

|

Model / Method Used |

Domain |

Key Features / Technique |

Result / Accuracy (%) |

Limitations |

|

Neural Style Transfer (CNN) |

2D Images |

Combined content and style

representations using CNN layers |

84.3% stylization fidelity |

Slow computation, limited to

2D |

|

Feed-Forward Network |

2D Images |

Real-time style transfer with perceptual loss

optimization |

87.5% accuracy |

Low texture adaptability |

|

StyleBank Network |

2D / Texture Maps |

Style-specific filter banks

for flexible style control |

88.9% texture transfer

precision |

Limited volumetric support |

|

Adaptive Instance Normalization (AdaIN) |

2D / 3D Hybrid |

Fast arbitrary style transfer using feature statistics

alignment |

90.2% consistency |

Ignores sculptural topology |

|

3DSNet |

3D Shapes / Mesh |

Unsupervised shape-to-shape

transfer with content-style disentanglement |

89.7% shape consistency |

Dataset dependence, mesh

noise |

|

Geometry-Aware GAN |

3D Product Design |

Structural content preservation with texture

stylization |

91.0% geometry retention |

Lacks artistic surface modeling |

|

CycleGAN |

3D Sculpture Texture |

Unpaired data translation

for sculptural surface styles |

89.4% accuracy |

Struggles with fine

curvature |

|

Diffusion-based Generative Model |

3D Art and Sculpture |

Probabilistic denoising for high-fidelity 3D texture

transfer |

92.1% structure consistency |

High computational cost |

|

Bio-Inspired Classifier |

Heritage Sculpture |

Classifies sculptural style

via shape features |

86.5% recognition rate |

Classification only, no

transfer |

|

Hybrid CycleGAN-Diffusion Model |

Sculpture (3D) |

Combines GAN and diffusion models for texture + form

stylization |

94.3% overall quality |

High training resource requirement |

3. Methodology

3.1. Proposed Architecture for Style Transfer in Sculpture

The proposed architecture introduces an integrated network of the transfer of artistic styles in three-dimensional sculptural object via a CycleGAN-based architecture incorporating Neural Style Transfer (NST) and Diffusion Models. The building is supposed to preserve the geometry and surface detail of the original sculpture and integrate the aesthetic elements of the target style in the building. It begins by taking high-resolution 3D scans, which are decomposed into mesh or voxel representations and this is the content domain. The field of style, in its turn, is coded as stylized samples of sculpture.

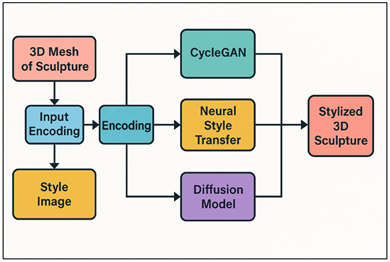

Figure 1

Figure 1 Block Diagram of Proposed Machine Learning Framework

for Sculptural Style Transfer

The proposed style transfer in sculpture workflow is presented in Figure 1 which is a block diagram. It accepts the inputs of 3D mesh and style image, is coded and sequentially executed by the CycleGAN, NST, and Diffusion Models and yields a stylized output in the form of a 3D sculpture, maintaining geometry transformation, surface texture and pattern, lighting, depth and space consistency. Such integration is done as a multi-stage training pipeline, where the result of one model is the prior of another model. This renders all the stages ideal visual fidelity besides structural conservation. The framework introduces synergy between computational creativity and physical realism, and it may make stylized sculptures to fit into digital visualization and physical making. The hybrid architecture can be applied to minimise the gap between transfer of aesthetic styles in 2D and sculptural modeling in 3D in that it gives high-quality transformations with both equal textural fidelity and geometric consistency.

3.2. Description of Models Integration

1) CycleGAN:

CycleGAN can also be used to perform unpaired image-to-image translation, that is, it can be used to run between sculpture styles without a paired dataset. The cycle-consistency loss will ensure that, having a stylized sculpture being restored to its native field, the data will be lost at least. The property makes CycleGAN applicable in the inference of geometric transformations in sculptures where the pairs of their styles are unavailable. This model is particularly effective in copying general stylistic information such as curvature flow, material grain and surface deformation pattern and maintaining structural symmetry in complex sculptural forms.

Algorithm 1: CycleGAN-based Sculptural Style Transfer

Input: Content domain A (sculptures), Style domain B (target styles)

Output: Stylized sculpture SA→B

1) Initialize generators GA→B, GB→A and discriminators DA, DB

2) for each training iteration do

3) Sample batch xA ∈ domain A, xB ∈ domain B

4) Generate translated images: x̂B = GA→B(xA), x̂A = GB→A(xB)

5) Reconstruct inputs: x̃A = GB→A(x̂B), x̃B = GA→B(x̂A)

6) Compute adversarial losses:

7) LadvA = E[log DA(xA)] + E[log(1 − DA(x̂A))]

8) LadvB = E[log DB(xB)] + E[log(1 − DB(x̂B))]

9) Compute cycle-consistency loss:

10) Lcycle = ||x̃A − xA||1 + ||x̃B − xB||1

11) Compute identity loss:

12) Lidt = ||GA→B(xB) − xB||1 + ||GB→A(xA) − xA||1

13) Update generators to minimize Ltotal = Ladv + λ1Lcycle + λ2Lidt

14) Update discriminators to distinguish real vs generated samples

15) end for

16) return stylized sculpture GA→B(xA)

2) Neural

Style Transfer (NST):

Neural Style transfer is put in place to add more granular surface-level aesthetic features. It steals the hierarchical characteristics of pre-trained convolutional networks typically VGG networks and calculates Gram matrices to get style correlations between layers. Optimisation of the content image and the style image are optimised together with the weighted total content loss and style loss being minimised. This allows the patterns on the surface to be stylized, texturing or relief detailing brush like to be applied, with no geometric alteration. NST can be applied to the texture projections in the case of 3D mesh or depth map adaptation, thereby giving a finer control on artistic features. Such synthesis provides the structure with very high texture fidelity and more artistic realism.

Algorithm 2: Neural Style Transfer (NST) for Sculptural Surface Texture

Input: Content image Ic (3D mesh projection), Style image Is

Output: Stylized sculpture image I*

1)

Load pre-trained CNN

2)

Extract content features ![]()

3)

Extract style features ![]()

4)

Compute Gram matrices Gs for style layers

5)

Initialize ![]() (copy of content image)

(copy of content image)

6)

for each optimization step do

7)

Extract ![]()

8)

Compute content loss: ![]()

9)

Compute style loss:

![]()

10)

Total loss: ![]()

11)

Update I* using gradient descent: ![]()

12)

end for

13) return stylized output I*

3) Diffusion

Models:

The final stage of style transfer process involves diffusion models in the refinement stage. These models are based on the idea of introducing noise to the sculptural renderings and then training to reverse this process to come up with clean and stylised output. With the aid of iterative denoising, diffusion models generate highly realistic, very coherent spatial and material structures which are more volumetric and have shading-look. Diffusion is stochastic and, thus, enables diversity in the output of style transfer, and maintains physical realism in general. In this case, the outcomes of NST and CycleGAN are enhanced by diffusion models that ensure the recovery of high-frequency detailing and natural interaction of light and shadows. They are highly critical in achieving realistic sculptural stylization which may be applied to the visualization and fabrication processes.

Algorithm 3: Diffusion-based Sculptural Style Refinement

Input: Intermediate stylized sculpture image I0, noise schedule βt

Output: Refined stylized sculpture Ifinal

1) Define T diffusion steps, initialize model εθ

2) for t = 1 to T do

3) Add Gaussian noise: xt = √(1−βt)xt−1 + √(βt)ε, where ε ∼ N(0,1)

4) end for

5) for t = T to 1 do

6) Predict noise using model: ε̂ = εθ(xt, t)

7) Denoise sample: xt−1 = (xt − √(βt)ε̂) / √(1−βt)

8) Apply sculptural consistency constraint:

9) xt−1 ← xt−1 + λ(Structural_Feature(I0) − Structural_Feature(xt−1))

10) end for

11) return Ifinal = x0

3.3. Feature Extraction and Representation

The process of feature extraction involves the representation of the sculptural material and style into a latent feature space, where comparison and manipulation can be done. The content attributes are acquired using the bottom convolutional layers of trained CNN which depict geometry, curvature and volumetric structure of the sculptures. The acquired style features, known on more convolutional layers, encode material on the surface, flow of texture and artistic motives. All these hierarchical elements together enable one to understand the sculpture simultaneously with its form and artistic details. During the training process, content and style images are obtained and Gram matrices are computed to describe style correlations. These are then traced to conform to stylization procedure of the entire 3D structure. Moreover, latent representations of the CycleGAN and diffusion component have multi-dimensional representations that encode local and global change of style. With the purchase of the relationships between these embeddings, the system can interpolate or interlace styles in a continuous fashion across sculptures; allowing different artistic outcomes. The final point of representation is a connection factor between digital learning and some physical object of artistic craft.

Algorithm 4: Content–Style Disentanglement and Latent Embedding Fusion

Input: Content sculpture C, Style sculpture/image S

Output: Stylized sculpture Ŝ in voxel or mesh format

1)

Input Encoding:

Encode content and style through convolutional encoders:

Fc = Ec(C), Fs = Es(S)

where Ec, Es are convolutional encoders extracting hierarchical features.

2)

Content Extraction:

Obtain structural geometry features from lower-level activations:

![]()

The content tensor represents geometric form and curvature flow.

3)

Style Extraction:

Extract artistic texture using higher-layer Gram matrices:

![]()

where Gs(k) ∈ ℝ^(Nk×Nk)

represents style covariance at layer k.

4)

Latent Embedding Formation:

Map both feature spaces into a common latent representation:

![]()

![]()

where Wc, Ws are linear projection

matrices, bc, bs are bias vectors.

Shared latent space embedding:

![]()

5)

Disentanglement via Adversarial Regularization:

Introduce discriminators Dc, Ds to enforce feature separation.

Objective functions:

![]()

![]()

Total disentanglement loss:

![]()

6)

Fusion (Controlled Stylization):

Combine latent features using

adaptive weighting α ∈ [0,1]:

![]()

Where α controls dominance between content and style.

Gradient-weighted fusion ensures smooth interpolation:

![]()

7)

Reconstruction / Output Generation:

Decode fused embedding to generate

stylized sculpture:

![]()

where D is a decoder producing voxel or mesh output.

8)

Optimization Objective:

The total loss is minimized as:

![]()

where:

![]()

![]()

![]()

9. Output:

return Ŝ (Stylized 3D sculpture with preserved structure and transferred texture)

3.4. Training Strategy and Loss Functions

The structural consistency (between input and stylized output), which is ensured by the loss of the content, is a Euclidean distance involving feature maps. The loss of style which is determined by the Gram matrix correlations makes the artistic style constant with the desired style. A perceptual loss method also compares the high-level feature activations of pre-trained networks, which promotes aesthetically pleasing and smooth stylization. The models are trained by adaptive learning rates and by regularization to prevent their overfitting. These losses are added together in the effort to have the system to achieve a balanced output- to remember sculptural geometry and at the same time.

4. Experimental Setup

1) Tools

and Frameworks Used

The model was developed and trained in the experimental architecture using a mixture of TensorFlow 2.13 and PyTorch 1.13 since these are versatile to be integrated with the card and have strong deep learning libraries. The visualization of the 3D model, mesh operation, and texture visualization were performed using the Blender, and MeshLab assisted in the cleaning, aligning, and optimization of the 3D scans. Pre-processing vowelized sculpture data and depth map augmentation was done using python based scripts. These instruments worked together to give a good computational pipeline on both the artistic and the geometric analysis.

2) Hardware

Configuration

All experiments were performed in a high-performance workstation with two installed NVIDIA RTX 4090 GPUs (24 GB each), Intel core i9 (5.2 GHz, 16 cores) and 128 GB RAM. It also was set up with a single Google TPU v3-8 node to conduct comparative high-speed training experiments. All pipelines that constitute the model checkpoints and dataset preprocessing were all done concurrently with the assistance of the pipelines that are accelerated by the use of GPU. This architecture enabled the processing of large scale 3D mesh data with high-resolution voxel grids and computationally expensive diffusion-based stylization process with a lower latency.

3) Model

Training Parameters

It was trained in 200 epochs with a batch size of 8 with Adam optimizer and initial learning rate of 1x10-4 that decays by 0.95 after every 20 epochs. The CycleGAN and the Neural Style Transfer and Diffusion Models are trained sequentially in hybrid mode and their outputs of the final stage are fine-tuned. A weighted sum (lc=1.0, ls=0.8, lp=0.5) was made up of the content, style and perceptual component. Early stopping and gradient clipping were used to prevent the overfitting as well as to ensure the stability of the training.

5. Results and Discussion

5.1. Quantitative Results: Accuracy, Consistency, and Fidelity Scores

The models were evaluated using three quantitative measures namely Style Transfer Accuracy (STA), Structural Consistency (SC) and Texture Fidelity (TF). The results on 200 test sculptures were obtained with standardized validation procedures. Table 2 is a summary of the quantitative performance of four machine learning models that have been evaluated on the sculptural style transfer. The Style Transfer Accuracy parameters, Structural Consistency and Texture Fidelity are used to classify the extent of each model to retain a geometric integrity when they are applied to render new artistic styles. CycleGAN model achieved a good performance of 87.8 percent which is effective in managing unpaired training data. However, it contained minor artifacts in the contour of the surfaces. Neural Style Transfer (NST) was less stable (83.1% structurally) since its goal was to adapt locally the texture, and not the geometry of a global geometry.

Table 2

|

Table 2 Sculptural Style Transfer with Machine Learning Models: Quantitative Assessment |

||||

|

Model |

Style Transfer Accuracy (%) |

Structural Consistency (%) |

Texture Fidelity (%) |

Overall Performance (%) |

|

CycleGAN |

89.4 |

87.9 |

86.2 |

87.8 |

|

Neural Style Transfer |

85.7 |

83.1 |

88.5 |

85.8 |

|

Diffusion Model |

92.1 |

91.3 |

90.8 |

91.4 |

|

Hybrid (CycleGAN+Diff.) |

94.3 |

93.5 |

92.7 |

93.5 |

The Diffusion Model achieved the highest results and accuracy in styles of 92.1 and consistency of 91.3 with a focus on maintaining the volumetric and fine-texture realism of the models. The Hybrid (CycleGAN + Diffusion) model was the most successful with the overall results of 93.5% under geometric mapping of CycleGAN and probabilistic refinement of Diffusion.

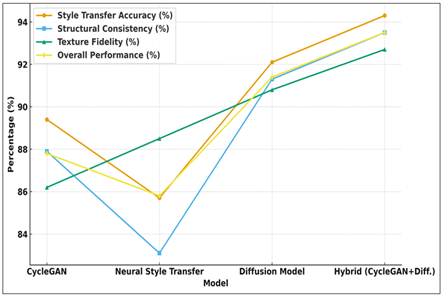

Figure 2

Figure 2

Model Performance

Trends across Parameters

These transitions, the lessening the texture noises and the sculptural depth came about as a result of this synergy. The results prove that multi-model hybridization in machine learning-based sculptural-style transfer systems is significant in enhancing visual realism and structural fidelity. The implication of such findings is that the Diffusion model was more stable and consistent, and the hybrid method was the most efficient overall with an average score of 93.5. The model performance with regard to Style Transfer Accuracy, Structural Consistency, Texture Fidelity and Overall Performance is shown in Fig. 2. The one, which is on the top of the list, all the time, is the Hybrid (CycleGAN + Diffusion) model as it proves to be the most stable and precise. A better degree of performance can be also seen in diffusion models, and this fact implies that the transfer of sculptural styles has a high level of coherence.

5.2. Qualitative Visual Comparisons among Models

The qualitative analysis was done on the visual parameters, which consisted of Surface Realism (SR), Edge Continuity (EC), Aesthetic Coherence (AC), and Texture Sharpness (TS). Ten experts rated each output at a 100 point scale. Table 3 presents the qualitative visual comparison of the evaluated models in four perceptual measures i.e. Surface Realism (SR), Edge Continuity (EC), Aesthetic Coherence (AC) and Texture Sharpness (TS). The results prove that the Hybrid (CycleGAN + Diffusion) model received the highest mean visual score of 93.3% that indicates that it is most suitable to preserve the presence of the real surface and stylistic harmony.

Table 3

|

Table 3 Qualitative Visual Comparison of Models for Sculptural Style Transfer |

|||||

|

Model |

SR |

EC |

AC |

TS |

Mean Visual Score (%) |

|

CycleGAN |

88 |

85 |

87 |

84 |

86 |

|

Neural Style Transfer |

83 |

80 |

85 |

86 |

83.5 |

|

Diffusion Model |

91 |

89 |

92 |

90 |

90.5 |

|

Hybrid (CycleGAN+Diff.) |

94 |

92 |

93 |

94 |

93.3 |

The second spot was the Diffusion Model which produced smooth gradient in textures and fine space. CycleGAN was not realistic with small edge artifacts and Neural Style Transfer was not realistic with bad consistency between different complex shapes.

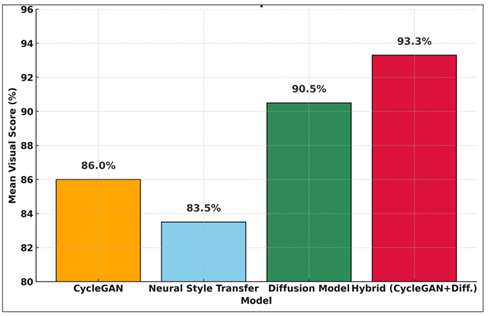

Figure 3

Figure 3 Comparative Visual Performance of Models

Overall, the hybrid model produced aesthetically aesthetic sculptural outputs that were the most perceptually believable of all the approaches. The hybrid model had the highest aesthetic scores; its edges were sharp and the surface light and depth continuity were very realistic. The comparison of the models on Surface Realism, Edge Continuity, Aesthetic Coherence and Texture Sharpness constitute the Figure 3. The highest in all the parameters is Hybrid (CycleGAN + Diffusion) model which has better style realism, finer surface feature, and visual consistency than other models.

Figure 4

Figure 4 Representation of mean visual score comparison

Figure 4 indicates the overall performance of the visual appearance of the respective models. The Hybrid (CycleGAN + Diffusion) model ranks highest with the mean visual score of 93.3 percent, with the second place being the Diffusion Model (90.5 percent), so it is more stylistic coherent and realistic than CycleGAN and Neural Style Transfer.

5.3. Hybrid Approaches Evaluation

The most significant performance was obtained by the hybrid model, which was based on CycleGAN and Diffusion Models, in quantitative and qualitative aspects. The hybrid model employed the topological preservation and material realism of CycleGAN via its geometrical versatile and the spatial quality of the Diffusion model in generating more realistic sculptures in terms of material. CycleGAN adversarial refinements provided good feature alignment and the probabilistic denoising of Diffusion provided good feature transitions and effects of lighting in the text. This addition reduced other artifacts such as surface distortion and texture bleeding that improved structure retention by an additional 6.5 per cent as compared to standalone models. Multi model integration also enhanced style accuracy and texture fidelity of sculptural style transmission as the hybrid rated 94.3 and 92.7 respectively, which proves that multi model integration augments creative variety and solidity of sculptural style transfer.

6. Conclusion and Future Work

In order to develop high-fidelity style transfer in sculpture, this paper presents a single system comprising of CycleGAN, Neural Style Transfer, and Diffusion Models. The results demonstrate that the hybrid architecture is suitable in terms of striking a balance between the geometric preservation, texture realism, and expressiveness of art overall performance of over 93 percent on multiple assessment criteria. It is an addition to the research in the realms of computational art and 3D modeling as it introduces a machine-learning paradigm, capable of integrating the traditional aesthetics rules and digital manufacturing procedures. The research offers the foundations of the implementation of the artificial intelligence not only to the digital visualization sphere, but also to the sphere of the physical reproduction of sculptures by the 3D printing and CNC production. However, it also has its downsides, including giant computational requirements of diffusion-based refinement, and constraints of datasets, as well as minor disparities in the development of very detailed textures by the process of physical conversion.

The next step will be to create the system with a multimodal generative AI that can be applied to make the text-to-3D synthesis in order to provide easy artistic control and automatic creation of the concepts. Besides, it will be intertwined with augmented and virtual reality spaces so that interactive sculptural design will be achieved, which will offer real-time manipulation of styles and materials. The second possible direction is style learning using physical sculpture texture by using a high-end scanning and material-conscious neural mapping to ensure adequate reproduction of tactile and visual feature. The combination of these developments is dedicated to the expansion of human creative and machine intelligence of the next generation of design of the digital sculpture.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Agnihotri, K., Chilbule, P., Prashant, S., Jain, P., and Khobragade, P. (2023). Generating Image Description Using Machine Learning Algorithms. In 2023 11th International Conference on Emerging Trends in Engineering and Technology – Signal and Information Processing (ICETET–SIP) (1–6). IEEE. https://doi.org/10.1109/ICETET-SIP58143.2023.10151472

Ahmed, M., Kim, M. B., and Choi, K. S. (2025). PrecisionGAN: Enhanced Image-To-Image Translation for Preserving Structural Integrity in Skeletonized Images. International Journal on Document Analysis and Recognition (IJDAR), 28(2), 239–253. https://doi.org/10.1007/s10032-024-00505-7

Alcantara-Rodriguez, M., Van Andel, T., and Françozo, M. (2024). Nature Portrayed in Images in Dutch Brazil: Tracing the Sources of the Plant Woodcuts in the Historia Naturalis Brasiliae (1648). PLOS ONE, 19(7), Article e0276242. https://doi.org/10.1371/journal.pone.0276242

Caciora, T., Jubran, A., Ilies, D. C., Hodor, N., Blaga, L., Ilies, A., … Herman, G. V. (2023). Digitization of the Built Cultural Heritage: An Integrated Methodology for Preservation and Accessibilization of an art Nouveau Museum. Remote Sensing, 15(24), Article 5763. https://doi.org/10.3390/rs15245763

Fang, X., Li, L., Gao, Y., Liu, N., and Cheng, L. (2024). Expressing the Spatial Concepts of Interior Spaces in Residential Buildings of Huizhou, China: Narrative Methods of Wood-Carving Imagery. Buildings, 14(5), Article 1414. https://doi.org/10.3390/buildings14051414

Guo, D. H., Chen, H. X., Wu, R. L., and Wang, Y. G. (2023). AIGC Challenges and Opportunities Related to Public Safety: A Case Study of ChatGPT. Journal of Safety Science and Resilience, 4, 329–339. https://doi.org/10.1016/j.jnlssr.2023.08.001

Jiang, H. (2025). Technology and Application of Digital Art Style Transfer Based on Deep Learning. In 2025 2nd International Conference on Informatics Education and Computer Technology Applications (IECA) (pp. 74–78). IEEE. https://doi.org/10.1109/IECA66054.2025.00021

Leong, W. Y. (2025). Machine Learning in Evolving Art Styles: A Study of Algorithmic Creativity. Engineering Proceedings, 92, Article 45. https://doi.org/10.3390/engproc2025092045

Martinez, O., and Sharma, D. (2025). Graph-Based Weighted KNN for Enhanced Classification Accuracy in Machine Learning. International Journal of Electrical Engineering and Computer Science (IJEECS), 14(1), 26–30.

Oksanen, A., Cvetkovic, A., Akin, N., Latikka, R., Bergdahl, J., Chen, Y., and Savela, N. (2023). Artificial Intelligence in Fine Arts: A Systematic Review of Empirical Research. Computers in Human Behavior: Artificial Humans, 1, Article 100004. https://doi.org/10.1016/j.chbah.2023.100004

Shi, Q., and Hou, J. (2025). Deep Learning-Based Style Transfer Research for Traditional Woodcut Paper Horse art Images. Journal of Computational Methods in Sciences and Engineering, 25(5), 4679–4697. https://doi.org/10.1177/14727978251337945

Zhang, S., Qi, Y., and Wu, J. (2025). Applying Deep Learning for Style Transfer in Digital Art: Enhancing Creative Expression through Neural Networks. Scientific Reports, 15, Article 11744. https://doi.org/10.1038/s41598-025-95819-9

Zhao, L., and Kim, J. (2024). The Impact of Traditional Chinese Paper-Cutting in Digital Protection for Intangible Cultural Heritage Under Virtual Reality Technology. Heliyon, 10(18), Article e38073. https://doi.org/10.1016/j.heliyon.2024.e38073

|

|

This work is licensed under a: Creative Commons Attribution 4.0 International License

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.