ShodhKosh: Journal of Visual and Performing Arts, ISSN (Online): 2582-7472

Image Restoration for Heritage Photography Using AI

Dr. Vaishali Sandeep Baste 1, Mohit Malik 2, Dr. Parag Amin 3, Priya Dahiya 4, Mithhil Arora 5, Akhilesh Kalia 6

1 Associate Professor, E and TC, SKNCOE Vadgaon, Pune, India

2 Assistant Professor, School of Business Management, Noida International University, India

3 Professor, ISME - School of Management and Entrepreneurship, ATLAS Skill Tech University, Mumbai, Maharashtra, India

4 Assistant Professor, Department of Computer Science and Engineering (AI), Noida Institute of Engineering and Technology, Greater Noida, Uttar Pradesh, India

5 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

6 Centre of Research Impact and Outcome, Chitkara University, Rajpura- 140417, Punjab, India

ABSTRACT

A significant portion of cultural and historical heritage is represented by photographic materials that are frequently damaged by deterioration, poor storage or improper archival use. Traditional repair methods work to some extent, but they are limited by the need for physical work, analysis based on personal experience and time-consuming processes. Using recent advances in deep learning, this study proposes an AI-driven mechanism for restoring old photos automatically. The study examines how Convolutional Neural Networks (CNNs), Generative Adversarial Networks (GANs) and Diffusion Models can be combined to fix and enhance damaged pictures. CNNs are used to remove noise and rebuild structures, GANs are used to create new textures and restore colours, and Diffusion Models are used to improve fine-grained details and remove artefacts. The data come from historical records and museums, primarily pictures that have been damaged in various ways, such as fading, scratches, stains and blur. To prepare the data for model training, preprocessing techniques such as normalisation, damage segmentation and contrast adjustment are applied. Frameworks such as TensorFlow and PyTorch are used to build the models and find the best parameters. Dataset normalisation and augmentation are employed to improve model generalisation and stability. Experimental results show that the proposed AI-based approach significantly improves repair quality over standard digital approaches.

Received 13 January 2025

Accepted 06 April 2025

Published 10 December 2025

Corresponding Author: Dr. Vaishali Sandeep Baste, vaishali.baste@gmail.com

DOI: 10.29121/shodhkosh.v6.i1s.2025.6636

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Heritage Photography, Image Restoration, Deep Learning, GANs, Diffusion Models, Cultural Preservation

1. INTRODUCTION

Heritage photographs help keep national, social and political identities alive. They record the people, places and customs that form the shared memory of civilisations. Over the years, however, many of these photographs have deteriorated because of age, poor storage, exposure to moisture, or chemical processes that break down photographic materials. Their visible quality is often affected by fading, discolouration, scratches, tears and spots. This damage not only reduces their beauty and usefulness but also endangers the long-term preservation of cultural artefacts. Image restoration has therefore become an important area of study that connects computer vision, digital studies and cultural preservation. Techniques such as manual editing, interpolation and filtering have long been used to limit the loss of image quality. These methods mostly rely on human expertise and simple mathematical models to reconstruct missing or destroyed parts of a picture. They can give good-looking results, but they typically require a lot of work, take a long time and are open to individual bias. Standard algorithms also struggle with complex degradations such as texture loss, severe damage or colour fading, which are common in old photos Azizifard et al. (2022). Because of these limitations of traditional and manual digital repair methods, there is growing interest in smarter, more advanced and automatic methods.

Image restoration has changed considerably since the arrival of Artificial Intelligence (AI) and deep learning. Deep learning models, specifically Convolutional Neural Networks (CNNs), Generative Adversarial Networks (GANs) and Diffusion Models, perform well at tasks such as denoising images, adding detail and improving clarity. These models learn complex patterns and relationships in image data, which allows them to infer and fill in missing information accurately. Unlike traditional algorithms, which require an explicit set of rules, AI-based systems can learn from large datasets, fix different types of damage and produce repairs that closely resemble the original content Liu et al. (2022). CNNs are good at restoring structure because they capture spatial structure and local patterns in pictures. GANs, on the other hand, use adversarial learning to produce realistic results and high-quality textures, often giving a better appearance than conventional methods. More recently, Diffusion Models have become popular as strong generative tools that refine an image iteratively, giving users more control and accuracy when creating and improving images. Combined, these designs form a complete framework for restoring old photos with different levels of damage Maiwald et al. (2021). Using AI to restore historical images not only makes the process easier but also makes it more consistent and scalable. With powerful machine learning techniques, digital repair can be carried out quickly on large collections of damaged photos. This makes it easier for museums, libraries and other cultural organisations to maintain digital copies of historical images Kimura et al. (2021).

2. Literature Review

1) Overview of traditional image restoration methods

Traditional image restoration concentrates on making old photos look better by applying mathematical modelling and manual correction. The purpose of these methods is to repair or reduce the effects of degradation such as blur, noise, scratches and fading. Spatial-domain and frequency-domain filtering, such as linear filters, Wiener filters and inverse filters, was frequently used in the early stages of image restoration to remove noise and improve clarity. These methods work well for simple degradations, but they rely on knowing the degradation model in advance, which is often not possible for real-world heritage pictures Garozzo et al. (2021). Other common methods include interpolation and partial differential equations (PDEs), which reconstruct lost or damaged areas by diffusing information from the surrounding pixels. Exemplar-based and diffusion-based approaches have become common for filling in blank areas. Histogram equalisation and contrast stretching were also often used for colour repair and enhancement Croce et al. (2021). Traditional repair methods are helpful, but they have some problems. Because they rely on hand-crafted features, they cannot fix complicated damage or large missing areas. Manual fixing, in which experts use software to make changes, takes a lot of time and can be biased. As a result, pictures produced by conventional means are often too smooth or full of artefacts. The move towards digital and AI-driven repair methods was motivated by this inability to adapt to various types of damage and to preserve small details Felicetti et al. (2021). Even so, these older methods laid the foundation for modern restoration research by establishing important ideas in signal processing, image filtering and pixel correction that are still in use today.

2) Advances in digital restoration techniques

As digital photography became more popular, restoration shifted to computational methods that offer more accuracy and more automation. Digital repair used mathematical optimisation and statistical modelling to correct issues such as colour fading, geometric distortion and texture loss. Wavelet transforms, sparse representation and total variation regularisation were some of the most important tools for removing noise while preserving edges. These techniques allow repair at multiple scales, which increases the contrast and brightness of a picture without over-smoothing it Hatir et al. (2020). Another major step forward was digital inpainting, which automatically fills in damaged areas using the structure and pattern of the surrounding pixels. Patch-based and exemplar-based inpainting algorithms fill the gap by examining spatial patterns and so produce a more realistic fill than interpolation. Incorporating morphological filtering and frequency-domain corrections made it easier to deal with nonlinear degradations such as spots or cracks, which are common in old pictures. Colour restoration also benefited from digital processing, including histogram matching, grey-world assumptions and Retinex-based models that correct faded or discoloured areas. Fusion methods also began to combine statistical models with perceptual models to make pictures appear more realistic Idjaton et al. (2023). Although digital repair methods are more sophisticated, they still require a lot of manual control through parameter setting. They also struggle with badly damaged pictures in which important contextual detail is lost.

3) AI and deep learning approaches for image enhancement

Deep learning and artificial intelligence (AI) have transformed image restoration by introducing data-driven models that learn complex degradation patterns and restore pictures with considerable accuracy. Deep learning models learn hierarchical representations directly from the data, which lets them generalise across different types of damage. This differs from traditional and digital methods, which rely on explicit mathematical formulas Garcia et al. (2025). Convolutional Neural Networks (CNNs) are very important in this field because they capture spatial relationships between pixels, which makes them effective at tasks such as denoising, deblurring and inpainting. Architectures based on U-Net and ResNet have been shown to help in fixing damaged historical photos and bringing back small details Mishra and Lourenço (2024). Adversarial training in Generative Adversarial Networks (GANs) has improved restoration quality further. In this type of training, a generator learns to make realistic restorations and a discriminator checks whether they look genuine. This competitive setup helps the results look good, especially for colourisation and texture blending. Table 1 summarises the techniques, dataset types, architectures and degradation types covered in the literature. GAN-based models such as Pix2Pix and CycleGAN have done well at restoring old, faded photos by recovering accurate colours and surface texture. More recently, Diffusion Models have become the most advanced tools for image enhancement.

Table 1 Summary of Literature Review

| Technique Used | Dataset Type | Model Architecture | Degradation Type Addressed |
|---|---|---|---|
| Traditional Filtering | Grayscale Heritage | Wiener Filter | Noise, Blur |
| Wavelet + PDE Bonazza and Sardella (2023) | Ancient Prints | Hybrid Model | Scratches, Stains |
| Sparse Representation | Museum Archives | K-SVD | Noise Reduction |
| CNN-Based Denoising Padghan et al. (2025) | Color Heritage Photos | CNN (U-Net) | Fading, Blur |
| GAN for Colorization | Historical Portraits | Pix2Pix GAN | Fading, Discoloration |
| Deep Autoencoder Ghosh (2013) | Archival Documents | Autoencoder | Noise, Tears |
| Attention-based CNN | Heritage Artworks | AttentionNet | Scratches, Texture Loss |
| CycleGAN Restoration | Old Indian Archives | CycleGAN | Fading, Color Shift |
| Hybrid CNN-GAN | Damaged Photographs | CNN-GAN Fusion | Multi-type Degradation |
| Diffusion-Based Inpainting Li and Zhang (2025) | Heritage Monochrome | Diffusion Model | Cracks, Missing Parts |
| Transformer-CNN Hybrid | Digitized Archives | Vision Transformer | Blur, Discoloration |
| Diffusion + GAN Fusion | Colorized Heritage | DiffGAN | Severe Damage |
| CNN + GAN + Diffusion | Multi-source Heritage | Hybrid Ensemble | All Degradation Types |

3. Methodology

3.1. Data collection from heritage archives and museums

Any AI system for image restoration depends on the size and variety of the dataset used to train and test it. For vintage photos, data collection means obtaining old pictures from private collections, museums, archives and libraries. These institutions hold large collections of pictures that document cultural, social and political events spanning many decades or even centuries. The collections may contain black-and-white photos, sepia-toned photos and early colour photographs. Each type deteriorates in its own way through age, light, moisture or chemical action on the film materials. The physical photos are first converted to digital files using high-resolution scanners or cameras so that as much information as possible is retained. Small defects such as cracks and film grain, along with fine details, can be captured when a high scanning resolution such as 600 DPI is used. Metadata such as the date, the type of film, the condition and the provenance of the picture are also recorded to support contextual analysis and future retrieval. Legal and ethical issues need to be considered carefully when collecting data. Permission is sought from the cultural institutions concerned to ensure that copyright and preservation regulations are observed.

3.2. Preprocessing techniques for damaged images

Preprocessing is an important part of preparing historical photos for AI-based repair because it makes sure that the input pictures are clean, uniform and suitable for model training. Heritage pictures often have a lot of damage, such as scratches, spots, fading and uneven lighting, that needs to be addressed before they can be fed into deep learning networks. Image normalisation is the first step in the preprocessing workflow. It scales the intensity value of each pixel to a standard range (for example [0, 1] or [-1, 1]). This helps to keep the model stable and reduces numerical bias. Another important part of preprocessing is noise removal.

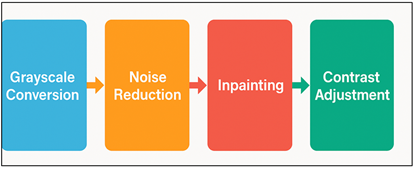

Figure 1 Architecture Diagram of Preprocessing Techniques for Damaged Heritage Images

Gaussian, median and bilateral filters are some of the classical filters used to remove random noise while keeping important edges and textures. Figure 1 shows the preprocessing sequence as a flowchart: noise reduction, colour correction and normalisation. Non-local means and wavelet-based denoising can be added to improve performance in cases of extreme degradation. Colour correction methods such as histogram equalisation, white balance adjustment and contrast-limited adaptive histogram equalisation (CLAHE) help to even out tones and improve overall image quality.
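The preprocessing sequence described above (noise reduction, contrast correction, normalisation) can be sketched in a few lines. This is a minimal illustration rather than the pipeline used in the study: it assumes a single-channel greyscale image held in a NumPy array, uses a plain median filter and global histogram equalisation in place of bilateral filtering or CLAHE, and all function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def normalise_minmax(img: np.ndarray) -> np.ndarray:
    """Scale pixel intensities to the range [0, 1]."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:  # flat image: avoid division by zero
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)


def median_filter(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Median filter for a 2-D image (odd window size), edge-padded to keep the shape."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    windows = sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1))


def equalise_histogram(img01: np.ndarray, bins: int = 256) -> np.ndarray:
    """Global histogram equalisation for an image already scaled to [0, 1]."""
    hist, edges = np.histogram(img01, bins=bins, range=(0.0, 1.0))
    cdf = hist.cumsum() / hist.sum()
    return np.interp(img01.ravel(), edges[:-1], cdf).reshape(img01.shape)


def preprocess(img: np.ndarray) -> np.ndarray:
    """Noise reduction, then contrast correction, then normalisation."""
    denoised = median_filter(img.astype(np.float64), size=3)
    scaled = normalise_minmax(denoised)  # equalisation expects values in [0, 1]
    return normalise_minmax(equalise_histogram(scaled))
```

Calling `preprocess(scan)` on a 2-D array returns an array of the same shape with values in [0, 1]. A colour image would need the same steps applied per channel or in a luminance channel, which is left out here.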

3.3. Model architecture selection

1) CNN

Convolutional neural networks (CNNs) are highly relevant to image reconstruction because they can extract hierarchical spatial features. Through convolutional layers, they detect local patterns such as edges, surfaces and structures. This makes them well suited to denoising, deblurring and inpainting. Architectures such as U-Net and ResNet use skip connections that retain spatial information, and they tend to work well for repair tasks. CNNs are good at reconstructing small features and reducing noise, but they may be less effective at adding new texture or colour information when large areas are missing. For more realistic results, additional models such as GANs are often used.

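Step 1: Forward feature extraction

Given degraded input $x_d$, each layer applies convolution, bias, and nonlinearity:

$$h^{(l)} = \sigma\left(W^{(l)} * h^{(l-1)} + b^{(l)}\right), \quad l = 1, \dots, L$$

with $*$ denoting convolution and $h^{(0)} = x_d$. Skip connections use:

$$h^{(l)} \leftarrow h^{(l)} + h^{(k)}, \quad k < l$$

Step 2: Reconstruction head

Produce restored image:

$$\hat{x} = W^{\text{out}} * h^{(L)} + b^{\text{out}}$$

Optionally add residual prediction:

$$\hat{x} = x_d + r_\theta(x_d)$$

where $r_\theta(x_d)$ is the correction predicted by the network.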
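The two CNN steps can be illustrated with a small NumPy forward pass. This is a sketch under stated assumptions, not the network trained in the study: it works on one greyscale channel, uses ReLU as the nonlinearity, models the skip connection as a simple addition of early features (U-Net style concatenation is the other common choice), and the names `conv2d_same` and `restore` are hypothetical.

```python
import numpy as np


def conv2d_same(x: np.ndarray, kernel: np.ndarray, bias: float = 0.0) -> np.ndarray:
    """Single-channel convolution layer with zero padding (odd kernel sizes).

    Like most deep learning libraries, this computes a cross-correlation.
    """
    kh, kw = kernel.shape
    padded = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.empty(x.shape, dtype=np.float64)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel) + bias
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def restore(x_d, kernels, biases, out_kernel):
    """Feature layers, an additive skip connection, then a residual head."""
    h = x_d.astype(np.float64)
    first = None
    for kernel, bias in zip(kernels, biases):
        h = relu(conv2d_same(h, kernel, bias))  # convolution, bias, nonlinearity
        if first is None:
            first = h                           # keep early features for the skip
    h = h + first                               # additive skip connection
    residual = conv2d_same(h, out_kernel)       # linear reconstruction head
    return x_d + residual                       # residual prediction
```

With an all-zero `out_kernel` the function returns the degraded input unchanged, which is the usual motivation for residual prediction: the network only has to learn the correction, not the whole image.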

2) GAN

Generative Adversarial Networks (GANs) consist of two competing networks, a generator and a discriminator, which together are used to create realistic replacements for damaged image content. The generator repairs broken or missing regions, and the discriminator judges whether the result looks genuine. This competitive learning setup allows the construction of high-quality colours and textures that appear real. GANs such as Pix2Pix and CycleGAN have been found to be better at adding colour, removing scratches and improving details. They often outperform other methods because the repairs they produce look realistic. However, they have to be tuned carefully to avoid issues such as overfitting or the creation of artefacts during training.

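Step 1: Generator prediction (conditional)

$$\hat{x} = G(x_d)$$

Step 2: Discriminator judgment (conditional)

$$D(x_d, x) \rightarrow 1 \quad \text{(real pair)}$$

$$D(x_d, \hat{x}) \rightarrow 0 \quad \text{(restored pair)}$$

Step 3: Losses

Adversarial and content losses:

$$\mathcal{L}_D = -\mathbb{E}\left[\log D(x_d, x)\right] - \mathbb{E}\left[\log\left(1 - D(x_d, \hat{x})\right)\right]$$

$$\mathcal{L}_{\text{adv}} = -\mathbb{E}\left[\log D(x_d, \hat{x})\right]$$

$$\mathcal{L}_{\text{content}} = \mathbb{E}\left[\lVert x - \hat{x} \rVert_1\right]$$

$$\mathcal{L}_G = \mathcal{L}_{\text{adv}} + \lambda \, \mathcal{L}_{\text{content}}$$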
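The adversarial and content losses can be written out numerically as follows. This is a minimal sketch that assumes the discriminator outputs probabilities in (0, 1), uses binary cross-entropy with the non-saturating generator objective, and takes an L1 content term weighted by `lam`. The default `lam=100.0` follows the value commonly used with Pix2Pix and is an assumption here, not a setting reported in this study.

```python
import numpy as np

EPS = 1e-12  # keeps the logarithms finite


def bce(pred: np.ndarray, target: np.ndarray) -> float:
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, EPS, 1.0 - EPS)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Real pairs should score 1, restored (generated) pairs should score 0."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_loss(d_fake, restored, target, lam: float = 100.0) -> float:
    """Adversarial term (fool the discriminator) plus weighted L1 content term."""
    adversarial = bce(d_fake, np.ones_like(d_fake))
    content = float(np.mean(np.abs(target - restored)))
    return adversarial + lam * content
```

Here `d_real` and `d_fake` stand for discriminator outputs on (degraded, original) and (degraded, restored) pairs. A larger `lam` pulls the generator toward pixel-accurate output, while a smaller one gives the adversarial term more influence over texture.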

3) Diffusion Models

Diffusion models are a recent development in generative AI that perform very well in image restoration. By learning to reverse a diffusion (noising) process, these models convert noisy images into high-quality images step by step. Through progressive denoising, they recover fine features, smooth curves and natural surfaces with high accuracy. Diffusion Models are well suited to restoring badly damaged historical pictures, giving stable, varied results with few artefacts. Their probabilistic formulation gives more control over picture quality and variation than GANs, which makes them a good fit for difficult repair tasks that require accuracy, consistency and faithful reconstruction of the image.

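Step 1: Forward (noising) process

With schedule $\{\beta_t\}_{t=1}^{T}$, $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$:

$$q(x_t \mid x_0) = \mathcal{N}\left(x_t;\ \sqrt{\bar{\alpha}_t}\, x_0,\ (1 - \bar{\alpha}_t)\, I\right)$$

where x_0 = x (clean target), conditioned on x_d.

Step 2: Denoiser training objective (ε-prediction)

Train $\epsilon_\theta(x_t, t, x_d)$ to predict noise:

$$\mathcal{L}_{\epsilon} = \mathbb{E}_{x_0,\, \epsilon,\, t}\left[\lVert \epsilon - \epsilon_\theta(x_t, t, x_d) \rVert_2^2\right]$$

Step 3: Reverse (sampling) step

Parameterize:

$$p_\theta(x_{t-1} \mid x_t, x_d) = \mathcal{N}\left(x_{t-1};\ \mu_\theta(x_t, t, x_d),\ \sigma_t^2 I\right)$$

where

$$\mu_\theta(x_t, t, x_d) = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}}\, \epsilon_\theta(x_t, t, x_d)\right)$$

$$\sigma_t^2 = \beta_t$$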
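The three diffusion steps map onto a few short functions. This is a sketch in the standard DDPM form and makes several assumptions: a linear beta schedule with commonly used default endpoints, zero-based timestep indexing, variance `betas[t]` in the reverse step, and a noise prediction `eps_pred` that is passed in from outside. In a real system `eps_pred` would come from a trained denoiser conditioned on the degraded image `x_d`, which is not implemented here.

```python
import numpy as np


def make_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Linear noise schedule; returns betas, alphas and cumulative alpha products."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def q_sample(x0, t, alpha_bars, eps):
    """Forward (noising) process: draw x_t from q(x_t | x_0) in closed form."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps


def eps_loss(eps, eps_pred) -> float:
    """Epsilon-prediction objective: mean squared error on the noise."""
    return float(np.mean((eps - eps_pred) ** 2))


def p_sample_step(x_t, t, eps_pred, betas, alphas, alpha_bars, rng):
    """One reverse (sampling) step from x_t to x_{t-1}."""
    mean = (x_t - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_pred) / np.sqrt(alphas[t])
    if t == 0:  # final step: return the mean without adding noise
        return mean
    return mean + np.sqrt(betas[t]) * rng.standard_normal(x_t.shape)
```

If the predicted noise equals the true noise at the first timestep, the reverse step recovers the clean image exactly, which is a useful sanity check when wiring a trained denoiser into the loop.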

3.4. Training and validation setup

Setting up training and validation is an extremely important part of building a robust AI-based system for restoring historical photographs. It ensures that the model learns to reconstruct damaged pictures properly and can also do so on data it has not seen before. The first step is to divide the data into training, validation and test sets, typically in the ratio 70:20:10 or 80:10:10. This split allows the model to be optimised while guarding against overfitting and keeping the performance evaluation fair. During training, batches of preprocessed pictures are passed through the network. Optimisers such as Adam or RMSprop are used to minimise loss functions such as Mean Squared Error (MSE), a loss based on the Structural Similarity Index (SSIM), or perceptual loss. These loss functions measure the difference between the restored output and the original data, which guides the model towards plausible outputs. For the GAN-based design, additional adversarial and content losses are added to make the generated pictures look more real. Augmentation methods such as random cropping, rotation and colour jittering are applied during training. This makes the model more general and more robust to various forms of degradation. Overfitting is further reduced by regularisation techniques (for example dropout and weight decay). To get the best results, hyperparameter tuning is carried out iteratively. This includes varying the learning rate, the batch size and the depth of the network. After each training epoch, validation is performed to monitor the accuracy and loss curves of the model. Early stopping is used to halt training when validation performance stops improving. This avoids unnecessary computation as well as overfitting.
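The data split and early stopping described above can be sketched with the standard library alone. This is an illustration under assumptions: the 80:10:10 ratio is one of the two options mentioned, the patience of 5 epochs is an arbitrary default, and the names `split_dataset` and `EarlyStopping` are hypothetical rather than taken from any framework.

```python
import random


def split_dataset(items, ratios=(0.8, 0.1, 0.1), seed=0):
    """Shuffle once, then cut into training, validation and test subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(ratios[0] * len(items))
    n_val = round(ratios[1] * len(items))
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])


class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In a training loop, `stopper.step(val_loss)` would be called once per epoch after validation, and the loop would break when it returns `True`. Fixing the shuffle seed keeps the split reproducible across runs.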

4. Implementation

1) Frameworks and tools used (e.g., TensorFlow, PyTorch)

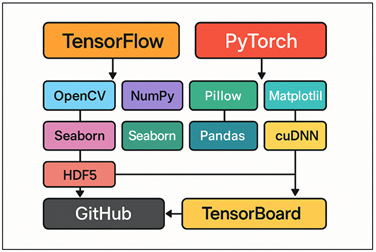

AI-based image restoration for vintage photography requires robust machine learning frameworks and computing tools that can handle large amounts of data, train deep models and display the results. The most popular of these are TensorFlow and PyTorch, as they are flexible, run efficiently on GPUs and provide extensive support for building neural networks. Keras, TensorFlow's high-level API, enables quick model prototyping and deployment, while PyTorch's dynamic computation graph is easy to read and understand, which makes debugging and testing easier. Figure 2 illustrates the combination of frameworks and tools for AI restoration. Libraries such as OpenCV, NumPy and Pillow are used for resizing, filtering, contrast enhancement and other data preparation and editing tasks.

Figure 2 Workflow of Frameworks and Tools Used in Image Restoration.

Scikit-image is useful for edge detection, histogram equalisation, denoising and other tasks needed to restore old, damaged images. To keep model assessment transparent, Matplotlib and Seaborn are used to plot training metrics and display repair results. For structured data storage, Pandas and the HDF5 format are used to handle large volumes of data efficiently. GPU-enabled environments, powered by CUDA and cuDNN, are the usual choice for deep networks because training is much faster. Scalable experiments can also be run on cloud-based or dedicated platforms such as Google Colab, AWS SageMaker or NVIDIA DGX systems.

2) Dataset augmentation and normalization

Augmenting and normalising datasets are important steps for making models generalise better and for improving performance in AI-driven image restoration. Because heritage datasets tend to be small and contain a large variety of damage types, augmentation is used to make the data more diverse so that the model can learn to handle the full range of damage cases. Augmentation methods such as random rotation, horizontal and vertical flipping, cropping, scaling and brightness adjustment make the training samples more varied, in a way that resembles the variation of degradation in the real world. These transformations reduce overfitting and help the model handle repair problems it has not seen before. More advanced augmentation uses noise addition (Gaussian, salt and pepper) and synthetic degradation models (for example blurring, fading and scratch patches) to make damage patterns appear more realistic. Colour jittering and hue-saturation shifts are also used to imitate old photos with spots and scratches. These additions yield a balanced dataset covering different degrees of repair difficulty while keeping the image content consistent. Normalisation ensures that pixel intensities are all mapped to the same range, usually [0, 1] or [-1, 1]. This makes training more numerically stable. Methods such as z-score normalisation or min-max scaling are applied to make raw pictures more uniform.
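Synthetic degradation is what turns a clean scan into a supervised training pair, so a short sketch may help. The functions below are illustrative only: they assume a greyscale image already scaled to [0, 1], and the fading strength, noise level, salt-and-pepper amount and scratch shape are arbitrary values chosen for the example, not parameters reported in this study.

```python
import numpy as np


def add_gaussian_noise(img, sigma, rng):
    """Additive Gaussian noise, clipped back to the valid [0, 1] range."""
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)


def add_salt_pepper(img, amount, rng):
    """Set roughly `amount` of the pixels to pure black or pure white."""
    out = img.copy()
    mask = rng.random(img.shape)
    out[mask < amount / 2] = 0.0
    out[mask > 1.0 - amount / 2] = 1.0
    return out


def fade(img, strength):
    """Pull intensities toward mid-grey to imitate a faded print."""
    return (1.0 - strength) * img + strength * 0.5


def add_scratch(img, column, width=1):
    """Draw a bright vertical scratch across the whole image."""
    out = img.copy()
    out[:, column:column + width] = 1.0
    return out


def zscore(img):
    """Z-score normalisation: zero mean, unit variance (if not constant)."""
    std = img.std()
    return (img - img.mean()) / std if std > 0 else img - img.mean()


def degrade(clean, rng):
    """Build one (degraded, clean) training pair from a clean image."""
    x = fade(clean, strength=0.3)
    x = add_gaussian_noise(x, sigma=0.05, rng=rng)
    x = add_salt_pepper(x, amount=0.02, rng=rng)
    x = add_scratch(x, column=int(rng.integers(0, clean.shape[1])))
    return x, clean
```

Passing a seeded `np.random.default_rng` makes the degradations reproducible. Geometric augmentations such as flips or crops would need to be applied identically to both images in the pair so that they stay aligned.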

3) Network training process and parameter tuning

Network training for historical image restoration uses iterative optimisation to reduce reconstruction error and improve perceptual quality. First, the preprocessed and augmented datasets are divided into training and validation sets. Whether the network is CNN-, GAN- or Diffusion-based, it is trained on batches of data and its parameters are updated using backpropagation and gradient descent optimisation. Optimisers such as Adam, SGD and RMSprop are frequently used, with Adam and RMSprop adapting the step size for each parameter during training. Loss functions play a significant role in the training process. Common objectives are Mean Squared Error (MSE) for pixel-level accuracy, Structural Similarity Index (SSIM) for perceptual consistency, and Perceptual Loss, computed from pre-trained networks such as VGG, for visual realism. Adversarial and content losses are used together in GAN-based designs to ensure both realism and structural correctness. To get the best results, parameter tuning involves systematically adjusting the learning rate, batch size, number of epochs, filter sizes and layer depth. A lower learning rate helps keep convergence stable, while a suitable batch size balances computational speed against gradient stability. Early stopping and learning rate scheduling are used to limit overfitting and improve generalisation. Model checkpoints are saved regularly to retain the best configurations.
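The interaction of gradient descent, learning rate scheduling and checkpointing can be shown on a deliberately tiny problem. The sketch below fits a two-parameter model (`restored = w * degraded + b`) with an MSE loss, which is only a stand-in for a real network. The step-decay schedule, the base learning rate of 0.5 and the in-memory "checkpoint" dictionary are all assumptions made for illustration.

```python
import numpy as np


def step_decay(base_lr: float, epoch: int, drop: float = 0.5, every: int = 20) -> float:
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return base_lr * drop ** (epoch // every)


def train(x_train, y_train, x_val, y_val, epochs: int = 100, base_lr: float = 0.5):
    """Gradient descent on MSE for a toy model: restored = w * degraded + b."""
    w, b = 0.0, 0.0
    best = {"val_loss": float("inf"), "w": w, "b": b, "epoch": -1}
    for epoch in range(epochs):
        lr = step_decay(base_lr, epoch)
        err = w * x_train + b - y_train            # residual on the training set
        w -= lr * 2.0 * float(np.mean(err * x_train))  # dMSE/dw
        b -= lr * 2.0 * float(np.mean(err))            # dMSE/db
        val_loss = float(np.mean((w * x_val + b - y_val) ** 2))
        if val_loss < best["val_loss"]:
            # checkpoint: remember the best parameters seen so far
            best = {"val_loss": val_loss, "w": w, "b": b, "epoch": epoch}
    return best
```

For example, if `degraded = 0.5 * clean + 0.2` simulates fading, `train(degraded[:160], clean[:160], degraded[160:], clean[160:])` returns the parameters with the lowest validation loss. In a deep learning framework the same pattern applies, with the optimiser and scheduler objects replacing the manual updates.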

5. Results and Discussion

Compared with conventional digital approaches, the AI-based repair system significantly improved image structure and image quality. Quantitative tests using PSNR and SSIM showed better quality and less noise at various degrees of degradation. The GAN and Diffusion Models reproduced colour tones and rebuilt textures accurately, and they outperformed the CNN in terms of perceptual quality. Visual inspection showed better brightness, fewer artefacts and a natural colour balance. The results indicate that AI-driven repair is a good way to preserve the authenticity and beauty of old photos with little to no human involvement.
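For readers who want to reproduce this kind of evaluation, PSNR and a simplified SSIM can be computed as follows. This is a sketch under assumptions: images are floating-point arrays scaled to [0, 1], and the SSIM here is a single-window (global) version with the usual constants, whereas library implementations typically average SSIM over local windows and will give somewhat different values. FID is not covered because it requires a pretrained feature network.

```python
import numpy as np


def psnr(reference: np.ndarray, restored: np.ndarray, data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    mse = np.mean((reference - restored) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def ssim_global(reference: np.ndarray, restored: np.ndarray, data_range: float = 1.0) -> float:
    """Structural similarity computed over the whole image as one window."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = reference.mean(), restored.mean()
    var_x, var_y = reference.var(), restored.var()
    cov = np.mean((reference - mu_x) * (restored - mu_y))
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

As a reference point, a uniform error of 0.1 on a [0, 1] image gives an MSE of 0.01 and therefore a PSNR of 20 dB, while identical images give an SSIM of 1.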

Table 2 Quantitative Evaluation of Restoration Models

| Model | PSNR (dB) | SSIM | FID |
|---|---|---|---|
| Traditional Filtering | 21.45 | 0.612 | 78.34 |
| CNN (U-Net) | 27.89 | 0.842 | 45.62 |
| GAN (Pix2Pix) | 30.72 | 0.894 | 27.18 |
| Diffusion Model | 33.85 | 0.932 | 18.46 |

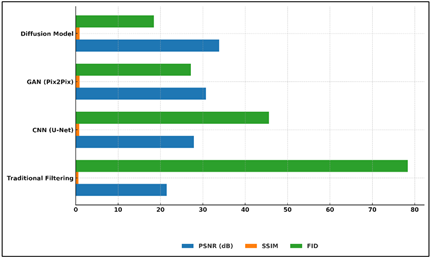

The numbers in Table 2 make it clear how repair quality improves with more advanced AI models. Traditional filtering methods achieved a PSNR of 21.45 dB and an SSIM of 0.612, which shows that they were not very successful at restoring small picture features and texture accuracy. Figure 3 compares the performance of the CNN, GAN and Diffusion models.

Figure 3 Performance Trends Across Image Generation Models

The CNN (U-Net) model improved both measures substantially (PSNR = 27.89 dB, SSIM = 0.842) because it was good at learning spatial relationships and extracting features. However, the CNN results were sometimes too smooth, although they were structurally sound. Figure 4 shows a comparative analysis of the image quality metrics for the models. Through adversarial learning, the GAN (Pix2Pix) model gave even higher perceptual accuracy (PSNR = 30.72 dB, SSIM = 0.894). It did so by faithfully reproducing natural colour tones and variations in texture.

Figure 4 Comparative Evaluation of Image Quality Metrics by Model Type

The Diffusion Model performed best, with a PSNR of 33.85 dB, an SSIM of 0.932 and the lowest FID of 18.46. This indicates better picture quality and fidelity than the other models. Its iterative denoising process was able to repair even the most severely damaged areas. Overall, diffusion-based repair produced the best quantitative results, balancing structural accuracy and perceived realism.

Table 3 Model Performance on Different Degradation Types

| Degradation Type (Metric) | CNN | GAN | Diffusion Model |
|---|---|---|---|
| Fading / Discoloration (PSNR, dB) | 28.34 | 31.22 | 33.48 |
| Scratches / Tears (SSIM) | 0.841 | 0.893 | 0.928 |
| Noise / Grain (PSNR, dB) | 26.91 | 29.65 | 32.87 |
| Blur / Distortion (SSIM) | 0.834 | 0.877 | 0.919 |

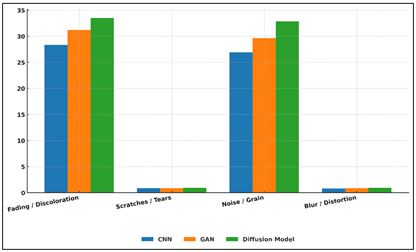

Table 3 shows how effectively the CNN, GAN and Diffusion Models corrected four kinds of damage commonly found in old photos: fading, scratches, noise and blur. All of the models improved the images, but the Diffusion Model consistently did better than the others in each category. Figure 5 shows the comparative performance of the models across multiple image degradations. The CNN reached a PSNR of 28.34 dB for fading and discolouration, the GAN raised it to 31.22 dB, and the Diffusion Model came first at 33.48 dB, recovering natural tones and balancing contrast well.

Figure 5 Model Performance Comparison Across Image Degradation Types

When SSIM was used to measure scratches and tears, the Diffusion Model again had the best structural similarity (0.928), ahead of GAN (0.893) and CNN (0.841). This means that it was better at rebuilding fine edges and surface patterns. For noise and grain, the Diffusion Model achieved the highest PSNR of 32.87 dB, reducing random defects while preserving texture content. In the same way, it had the best SSIM (0.919) for blur and distortion, retaining sharpness and clarity.

6. Conclusion

This study shows how Artificial Intelligence can be used to revitalise old photos that have been damaged. By using deep learning models such as CNNs, GANs and Diffusion Models, the proposed system is able to identify pictures that have been damaged, faded or affected by noise and restore them with high visual accuracy. The complete procedure, from data collection and preparation to model training and performance evaluation, demonstrates a workable template for a digital heritage preservation system that is scalable, efficient and replicable. The study shows that AI-driven repair is superior to standard and manual approaches, especially when handling complicated damage patterns such as scratches, fading and texture loss. CNNs were effective at recovering structure, while the GANs and Diffusion Models made results look more realistic and recovered small details. Both objective measures such as PSNR and SSIM and subjective visual assessment indicated that the AI-based models were better at producing repairs that looked good and were true to the original. The proposed approach also supports large-scale digitisation projects because the repair task can be automated, which saves time and money and reduces human error. The repaired pictures not only retain their cultural heritage value but are also easier to use for study, storage and public display.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Azizifard, N., Gelauff, L., Gransard-Desmond, J.-O., Redi, M., and Schifanella, R. (2022). Wiki Loves Monuments: Crowdsourcing the Collective Image of the Worldwide Built Heritage. Journal on Computing and Cultural Heritage, 16(1), 1–27. https://doi.org/10.1145/3569092

Bonazza, A., and Sardella, A. (2023). Climate Change and Cultural Heritage: Methods and Approaches for Damage and Risk Assessment Addressed to a Practical Application. Heritage, 6(4), 3578–3589. https://doi.org/10.3390/heritage6040190

Croce, V., Caroti, G., De Luca, L., Jacquot, K., Piemonte, A., and Véron, P. (2021). From the Semantic Point Cloud to Heritage-Building Information Modeling: A Semiautomatic Approach Exploiting Machine Learning. Remote Sensing, 13(3), Article 461. https://doi.org/10.3390/rs13030461

Felicetti, A., Paolanti, M., Zingaretti, P., Pierdicca, R., and Malinverni, E. S. (2021). Mo.Se.: Mosaic Image Segmentation Based on Deep Cascading Learning. Virtual Archaeology Review, 12(25), 25–38. https://doi.org/10.4995/var.2021.14179

Garcia-Moreno, F. M., del Castillo de la Fuente, J. M., Rodríguez-Simón, L. R., and Hurtado-Torres, M. V. (2025). ArtInsight: A Detailed Dataset for Detecting Deterioration in Easel Paintings. Data in Brief, 61, Article 111811. https://doi.org/10.1016/j.dib.2025.111811

Garozzo, R., Santagati, C., Spampinato, C., and Vecchio, G. (2021). Knowledge-Based Generative Adversarial Networks for Scene Understanding in Cultural Heritage. Journal of Archaeological Science: Reports, 35, Article 102736. https://doi.org/10.1016/j.jasrep.2020.102736

Ghosh, S. K. (2013). Digital Image Processing. Alpha Science International.

Hatir, M. E., Barstuğan, M., and İnce, İ. (2020). Deep Learning-Based Weathering Type Recognition in Historical Stone Monuments. Journal of Cultural Heritage, 45, 193–203. https://doi.org/10.1016/j.culher.2020.04.008

Idjaton, K., Janvier, R., Balawi, M., Desquesnes, X., Brunetaud, X., and Treuillet, S. (2023). Detection of Limestone Spalling in 3D Survey Images Using Deep Learning. Automation in Construction, 152, Article 104919. https://doi.org/10.1016/j.autcon.2023.104919

Kimura, F., Ito, Y., Matsui, T., Shishido, H., Kitahara, I., Kawamura, Y., and Morishima, A. (2021). Tourist Participation in the Preservation of World Heritage: A Study at Bayon Temple in Cambodia. Journal of Cultural Heritage, 50, 163–170. https://doi.org/10.1016/j.culher.2021.05.001

Li, Y., and Zhang, D. (2025). Toward Efficient Edge Detection: A Novel Optimization Method Based on Integral Image Technology and Canny Edge Detection. Processes, 13(2), Article 293. https://doi.org/10.3390/pr13020293

Liu, Z., Brigham, R., Long, E. R., Wilson, L., Frost, A., Orr, S. A., and Grau-Bové, J. (2022). Semantic Segmentation and Photogrammetry of Crowdsourced Images to Monitor Historic Facades. Heritage Science, 10, Article 27. https://doi.org/10.1186/s40494-022-00664-y

Maiwald, F., Lehmann, C., and Lazariv, T. (2021). Fully Automated Pose Estimation of Historical Images in the Context of 4D Geographic Information Systems Utilizing Machine Learning Methods. ISPRS International Journal of Geo-Information, 10(11), Article 748. https://doi.org/10.3390/ijgi10110748

Mishra, M., and Lourenço, P. B. (2024). Artificial Intelligence-Assisted Visual Inspection for Cultural Heritage: State-of-the-Art Review. Journal of Cultural Heritage, 66, 536–550. https://doi.org/10.1016/j.culher.2024.01.005

Padghan, N. P., Sapekar, K. N., Sonakneur, M. S., Masram, N. M., Lolure, S. R., and Madavi, S. C. (2025). Muscle Sensor Driven 3D Printed Prosthetic arm. International Journal of Technological Advances and Research in Modern Engineering (IJTARME), 14(1), 5–11.


This work is licensed under a Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.