ShodhKosh: Journal of Visual and Performing ArtsISSN (Online): 2582-7472

|

|

AI-Powered Beat Detection and Its Educational Uses

Manish Gudadhe

1![]() , Gunveen Ahluwalia 2

, Gunveen Ahluwalia 2![]()

![]() ,

Prince Kumar 3

,

Prince Kumar 3![]() , Ansh Kataria 4

, Ansh Kataria 4![]()

![]() ,

Dr. Ronald Doni A. 5

,

Dr. Ronald Doni A. 5![]()

![]() ,

Hanna Kumari 6

,

Hanna Kumari 6![]()

![]()

1 Department

of Computer Science and Engineering (Data Science), St. Vincent Pallotti

College of Engineering and Technology, Nagpur, India

2 Chitkara

Centre for Research and Development, Chitkara University, Himachal Pradesh,

Solan, 174103, India

3 Associate Professor, School of Business Management, Noida International University 203201, Uttar Pradesh, India

4 Centre of Research Impact and Outcome, Chitkara University, Rajpura- 140417, Punjab, India

5 Associate Professor, Department of Computer Science and Engineering, Sathyabama Institute of Science and Technology, Chennai, Tamil Nadu, India

6 Assistant Professor, Department of Interior Design, Parul Institute

of Design, Parul University, Vadodara, Gujarat, India

|

|

ABSTRACT |

||

|

AI powered

beat recognition is a huge step forward in audio signal processing since it

brings together the power of machine learning and the intricate rhythms of

music. Earlier methods for the search of beats were based on energy analysis

of the signal and frequency decomposition, which were many times limited by

the variation of the pace, the type and the quality of the recordings.

Nowadays, deep learning has led to the ability of computer systems to learn

rhythmic patterns from large datasets. This allows beats to be found in a

much greater variety of musical styles more accurately and adaptively.

Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs)

work with the data particularly well because they extract out the

hierarchical time patterns as well as finds relationships between events in

audio data. The effects this technology will have on the way we teach are

huge. AI-driven beat recognition in music classes helps to improve rhythm

training by providing students with feedback in real time in order to help

them improve their timing and rhythmic awareness. In addition to the normal

means of teaching music, AI beat recognition enables interesting learning

tools and games with rhythm, as well as virtual instruments that change

according to the action of the user. These systems promote engagement in both

the online and school environments through learning environments that are

both flexible and personalized with feedback and apps that tie music and math

to cognitive science. Case studies show that platforms with AI beats

recognition make learning more fun, keeps students motivated and helps them

to understand the rhythmic ideas. |

|||

|

Received 25 January 2025 Accepted 20 April 2025 Published 10 December 2025 Corresponding Author Manish Gudadhe, mgudadhe@stvincentngp.edu.in DOI 10.29121/shodhkosh.v6.i1s.2025.6627 Funding: This research

received no specific grant from any funding agency in the public, commercial,

or not-for-profit sectors. Copyright: © 2025 The

Author(s). This work is licensed under a Creative Commons

Attribution 4.0 International License. With the

license CC-BY, authors retain the copyright, allowing anyone to download,

reuse, re-print, modify, distribute, and/or copy their contribution. The work

must be properly attributed to its author.

|

|||

|

Keywords: AI Beat Detection, Audio Signal Processing, Machine

Learning in Music, Neural Networks, Music Education, Interactive Learning |

|||

1. INTRODUCTION

The joining of artificial intelligence (AI) and digital signal processing has transformed how people listen to and play music in the last couple of years. AI-powered beat recognition is one of the most significant moves in this regard. It is a computer technique for the automatic finding of rhythmic patterns and time beats in audio information. A lot of technologies that deal with music are based on beat recognition. These include automatic music recording and genre classification and real-time audio visualisation and synchronisation in media apps. Adding the power of AI to beat recognition, specifically deep learning algorithms, has made recognition far more accurate, flexible and aware of its surroundings. This means that it can be used for more than just fun such as in educational settings. In the past, finding beats depended on signal processing techniques that were required to be prepared carefully by hands, such as start recognition, spectral flux and speed estimations. These methods worked fine for organised or studio-quality recordings, but struggled with complicated beats, changing tempos dynamically, and pieces with multiple instruments Topol (2019). As AI has developed, with the development of neural network architectures such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), it has been possible for systems to learn rhythmic details directly from very large amounts of data. These systems have now the ability to recognize temporal and spectral relationships that constitute musical rhythm. This change from mathematical rules to data driven intelligence is a paradigm shift that allows computers to understand rhythm in a very similar way to humans Chung and Lee (2019).

Deep learning beat recognition effects on schooling are huge. One of the most important elements of teaching music is rhythm since it controls time, balance, and musical expression. AI-based beat recognition tools enable teachers to provide students with immediate rhythm analysis, personalized feedback, and dynamic challenges based on their proficiency levels, driving student engagement and development. For instance, a student playing the drums or the piano is able to obtain rapid visual cues indicating where he or she is taking a detour from the intended tempo Romero and Ventura (2020). This allows them to correct their errors and helps them to be more accurate rhythmically. In the same way, rhythm training apps that make use of AI make learning a game, providing students with tasks and tracking their progress. Beyond teaching music, AI-powered beat recognition assists with other aspects of education in terms of innovation, cross-disciplinary learning, and knowing how to use technology. It can be incorporated into multimedia tools in digital classes which allow sounds to sync with graphics.

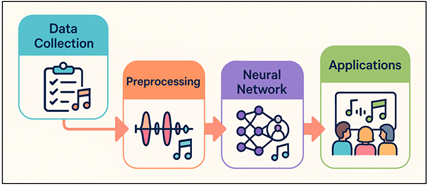

Figure 1

Figure 1 Multistage Architecture of AI-Powered Beat Detection and Its Educational Applications

This helps students to understand how rhythm and maths have a correlation and how to put things into order of time. Figure 1 illustrates the data flow from data collection to educational applications based on artificial intelligence. Students of computer science, cognitive psychology or artificial intelligence can also use beat detection systems as case studies to learn about signal processing, brain structures and how people and computers communicate Kucak et al. (2018). That way, it's not only a teaching tool, but it's also a means to bridge the arts education with STEM education. The emergence of online learning and interesting digital places worldwide makes AI-powered beat recognition even more handy. Intelligent systems with the ability to assess and advise students independently are very relevant in remote environments where conventional feedback loops between teachers and students are not readily available. They allow students to practise alone without forfeiting useful comments based on data Hamim et al. (2021). Beat recognition also facilitates collaboration between people, enabling students to experiment with a rhythm-based form of composition publicly in a platform. Despite these advantages, it is not easy to implement AI beat recognition in education.

2. Background and Literature Review

2.1. Evolution of Beat Detection Techniques

The evolution of beat recognition techniques reflects a constant change from the basic analysis of the signal to intelligent, flexible systems to comprehend difficult rhythmic structures. In the 1980s and 1990s, the first methods largely relied on signal processing techniques based on the tracking of amplitude envelopes, spectral flux measurement and onsets. These methods discovered fast changes in the energy of audio data and associated them with potential beats Bozak and Aybek (2020). Even though they were pretty good at what they did, they often had trouble with changing tempos, irregular rhythms, and multiple sounds which meant they were only good at simple or monophonic pieces. With computers and digital music, more and more frequency-domain techniques were published, becoming increasingly sophisticated as computers got faster. These relied on Fast Fourier Transform (FFT) and wavelet analysis to be able to better pick up rhythmical cues across a wider range of frequencies. By the use of timing estimations and flexible filtering, these methods made things more stable Rebai et al. (2019). But they still relied on features and rules that were created by hand and were therefore difficult to let them be used in a wide range of musical styles. In the 2000s, statistic algorithms of machine learning such as Support Vector Machine (SVMs) and Hidden Markov Models (HMMs) were introduced to describe rhythmic patterns in a statistical manner. This was a turning point.

2.2. Role of Machine Learning in Audio Signal Processing

Audio signal processing has been transformed completely by the use of machine learning (ML) that allows systems to extract, analyse and create sound features with a level of accuracy that was previously never observed. In the past, digital signal processing (DSP) jobs such as finding beats, guessing pitches and categorising genres of music depended on rules written by hand. Even though they worked well in controlled settings, they weren't able to handle complex voice data from real life Costa-Mendes et al. (2021). Machine learning developed an adaptive framework in which models have the ability to learn patterns and structures from large datasets that have been labelled which reduces the need for human feature engineering. ML algorithms, especially deep learning model, look at raw waveforms or spectral representations in order to find important links between time and harmony in the audio processing Aslam et al. (2021). Convolutional Neural Networks (CNNs) are very good at learning spatial and spectral properties from spectrograms which allows them to quickly spot patterns such as beat or sound. On the other hand, Recurrent Neural Networks (RNN) and its variants, such as Long Short-Term Memory (LSTM) networks, are very good at modeling the dependency between events in a sequential fashion. They're capable of recording the way that the speech and music data flow in time. ML can be used in a lot of different areas of audio, from source separation to real-time audio composition, automatic song recording to speech recognition and mood detection Martínez-Abad (2019). Some of the key studies, techniques, accuracy, and effects in education are summarized in Table 1 By learning generalisable rhythmic models, ML helps to make beat recognition more immune to noise, changes in speed and different kind of music.

Table 1

|

Table

1 Summary of Related Work on AI-Powered Beat

Detection and Educational Applications |

||||

|

Technique |

Dataset Used |

Application Domain |

Advantages |

Limitations |

|

Onset detection + spectral flux |

Ballroom Dataset |

Music signal analysis |

Simple implementation |

Limited to clear rhythmic audio |

|

Adaptive Beat Tracking |

Private dance music set |

Tempo estimation |

Handles tempo variation |

Poor generalization to live music |

|

RNN with LSTM Nalawade et al. (2025) |

GTZAN Dataset |

Real-time beat tracking |

Captures temporal dependencies |

Computationally heavy |

|

CNN for onset detection |

Ballroom + Beatles |

Beat localization |

Improved feature extraction |

Sensitive to noise |

|

Hybrid CNN–RNN Salas-Pilco and Yang (2022) |

Custom rhythm dataset |

Multi-genre beat detection |

Learns spatial-temporal features |

High training cost |

|

Bidirectional LSTM |

Extended Ballroom |

Real-time beat tracking |

Accurate tempo detection |

Needs GPU acceleration |

|

Deep Recurrent BeatNet |

OpenMIC Dataset |

AI-based rhythm generation |

Enables creative music education |

Complex implementation |

|

CNN + Real-time tempo mapping |

Proprietary |

Music education app |

Real-time learner feedback |

Commercial limitations |

|

Neural Rhythm Analyzer Araneo (2024) |

Academic datasets |

Classroom rhythm evaluation |

Teacher dashboard and analytics |

Subscription required |

|

Reinforcement Learning-based Beat Tracking |

Internal dataset |

Interactive rhythm training |

Gamified rhythm education |

Limited research data |

|

Transformer-based Beat Detection Pouresmaieli

(2024) |

GTZAN + FMA |

Polyphonic music |

Captures global temporal context |

Large model size |

|

Hybrid CNN–RNN Educational Model |

Multi-source datasets |

AI-integrated rhythm learning |

Adaptive feedback and analytics |

Minor latency challenges |

3. Methodology of AI-Powered Beat Detection

3.1. Data Collection and Preprocessing of Audio Signals

How well and how many different ways that AI powered beat recognition system collects and processes data is what makes them work. The neural networks to be used for recognition of beat must be able to process data well so that they can find rhythmic structures in a wide range of musical styles, speeds, and qualities of sound. The first step is to put together audio files which usually have a lot of different songs, musical tracks and beat patterns put together. Many times, publicly available databases such as GTZAN, Ballroom, and Million Song Dataset are used, as well as personally labelled datasets where the experts carefully mark the beats in the songs Dos Santos (2022). When supervised machine learning models are being used these comments are used as the truth. After being recorded, the raw audio data go through a long process of preparation before they can be analysed. In this step, audio is resampled to a stable sampling rate (usually 44.1 kHz), stereo recordings are converted to mono recordings to make the processing easier. Normalisation adjusts the amplitude levels so that all the samples have the same volume, which reduces the impact of difference in volume. The sound is then broken up into short frames that meet, which allows for small detail in time to be recorded. After that, the signals are transformed into time frequency representations such as spectrograms or Mel Frequency Cepstral Coefficients (MFCCs). These pictures demonstrate the importance of regular energy flows and changes in frequency to neural networks in learning.

3.2. Neural Network Architectures Used

1) CNNs

Because CNNs are effective at extracting spatial and spectral features from audio spectrograms, they have been adopted by many to detect beats. CNNs are able to detect the energy fluctuations of beats through the use of neural filters to detect localized rhythmic and harmonic patterns in the time and frequency domains. Adding more pooling layers makes these features even smaller and therefore speeds up computations and makes them more resistant to noise. Convolutional neural network (CNN) is capable of detecting repeated rhythmic patterns, so it can be used to track beats in a variety of music. CNNs can be used to automatically learn important features in the data, if the data has been trained on a large, labeled set of data. This means that beat localisation is very accurate. Because they can be used for a multiple task at once, they can also be used in real-time teaching and interactive music apps.

Step 1: Convolution Operation

![]()

→ These computes feature map Z by convolving the input spectrogram X with kernel W and bias b.

Step 2: Non-linear Activation (ReLU)

![]()

→ Applies Rectified Linear Unit (ReLU) to introduce non-linearity and enhance rhythmic feature learning.

Step 3: Fully Connected Output

![]()

→ Flattens pooled features and passes them through a fully connected layer to produce the final beat probability output y.

2) RNNs

Recurrent Neural Networks (RNNs) are important for modelling the timing relationships in the audio data and used in beat recognition. Whereas CNNs focus on drawing features in space, RNNs can see connection that take place sequentially so the system can grasp the changes in rhythm over time. Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks are more sophisticated networks that address the disappearing gradient issues and look further in the environmental context to predict pace and beat. RNN go through audio frames one after the other guesses the chances of beats based on rhythmic patterns they have learnt. When combined with CNNs they form hybrid architectures that are a combination of spectral and temporal learning. This gives them more ability to track beats and have more flexibility in transforming real-life music classrooms.

Step 1: Hidden State Update

![]()

→ Updates hidden state h_t using current input x_t and previous state h_{t-1} to preserve rhythm continuity.

Step 2: Output Calculation

![]()

→ Produces an output vector o_t representing beat likelihood at each time step t.

Step 3: Sequence Activation (Softmax)

![]()

→ Applies the softmax function to generate normalized beat probabilities across the sequence.

Step 4: Loss Function (Cross-Entropy)

![]()

→ Computes cross-entropy loss between true beats y_t and predicted beats ŷ_t to optimize learning.

3.3. Evaluation Metrics for Accuracy and Efficiency

Objective evaluation criteria for AI-based beat recognition systems are necessary, where the performance of AI systems is assessed in terms of the accurate beat prediction and the available computing power. Through these steps, the model is accurately identified at a high rate and identified in an optimized manner in terms of working speed and resources when compared to the ground truth data and humanly marked beats. The most popular Precision Indicators are F-Measure (F1 Score), Precision, and Recall. For these metrics, a 1.0 is best for the model to identify beats while providing as few false positives as possible, while a 0.0 is best for the model to provide as few false negatives as possible. The Beat Accuracy (BA) or Beat Tracking Accuracy (BTA) number is used to check the accuracy of expected and reference beats lining up with each other in terms of time within a certain range (usually 70 ms). Continuity-Based Evaluation (CMLc and CMLt) is another popular measure which checks the frequency with which the system tracks beats across entire sequences which tells apart continuous beats from broken. Processing delay, real-time factor (RTF) and resource utilisation are some of the computing variables that are used to measure efficiency. I/O indicates the speed at which the music can be processed in relation to the time taken.

4. Applications in Education

4.1. Music Education and Rhythm Training

AI Jakebeat has introduced a new dimension to the learning of music and rhythm, which has transformed the way that students learn, practice, and master rhythm. In the past, the rhythm taught relied on feedback and pace fix by human teachers that could be irregular or only occur when teaching one-on-one lessons. Learners can receive correct input in real-time on their timing, beat stability, and synchronisation with AI-based systems. The system listens to students play instruments, clap or sing and notes when they get off the goal beat. It then provides them with virtually immediate feedback on what to do to correct their errors.

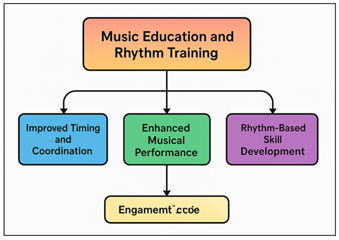

Figure 1

Figure 2 Flow of Music Education and Rhythm Training Process

Neural beat trackers and tempo analysers are used to visualize rhythmic patterns in wave or digital beat grids allowing students to improve their ability to keep time. Stepwise rhythm learning along with AI feedback is demonstrated in Figure 2 Also, the difficulty of beat tasks can be changed by AI systems based on how well a learner is doing. This customisation helps in people to make steady progress while keeping the people interested and motivated. AI-powered rhythm tools are also self-paced learning resources, so students need not be watched by a teacher all the time. They also enhance band training by putting multiple performers in sync with each other by using shared beat mapping, as it makes group performances more cohesive. Using AI analytics, teachers can look at their student's regular growth numerically and see patterns of change or mistakes which keep happening.

4.2. Interactive Learning Tools and Applications

A growing community of dynamic learning tools and apps that make rhythm education more fun, approachable and immersive are based upon AI-powered beat recognition. These tools rely on machine learning techniques and have game-like interfaces to get trainees to actively get involved and maintain motivation. Rhythm based mobile apps, virtual drums models, AI-assistance software to create music are all examples of things changing based on the accuracy of the given time and the progress of the performance. In these kinds of systems, the AI is always listening to the music to find beats, sync up the playback and provide real-time feedback. Rhythm can be demonstrated visually to students with simple to understand graphics such as moving bars or coloured pulses that change colour with every beat. By linking hearing with seeing and touching these visualisations enhance the harmony between the two. AI tools employ game-like features such as scores, badges, levels and tasks to make learning rhythm fun and competitive, to keep people engaged in a long-term way. Adding AI also makes flexible learning possible, in which the software changes the speed, difficulty or musical patterns based on how well the learner has done in the past. This constant feedback process ensures that the success of each individual is individualised and that newbies do not get frustrated. These tools can also connect to cloud-based platforms, which allow teachers to check up on students progress from far away from them and make lesson plans based on data.

4.3. Integration into Online and Classroom Settings

Using beat recognition in the classroom and online with AI technology has shifted the way rhythm education is taught to students and is more open, data-driven, and engaging to everyone. Teachers in regular classes can use AI-based software to check the progress of students managing time during practice sessions or live shows. Real-time beat tracking allows teachers to see how well a group is synchronised with one another, find problems with the speed and give quick advice on how to fix them. This makes group music lessons, practice and rhythm work more effective. AI tools are very important for online learning as they help students as well as teachers who are far away to communicate. Platforms that are able to find beats permit virtual rhythm tests in which students can post audio files to be automatically graded. The method provides input about the accuracy of the beats, regularity of the pace, and feeling of the emotions, which assists the player in improving himself or herself. Also, AI models can respond to various internet conditions, which ensures that live virtual lessons keep in sync.

5. Case Studies and Implementations

5.1. Examples of Educational Platforms Using AI Beat Detection

A number of current teaching sites have managed to incorporate AI-powered beat recognition for making learning rhythm and music more fun. Machine learning techniques are used by apps like Yousician, SmartMusic, and Melodics by analyzing user real-time playing abilities and finding beats, pacing, and rhythmic accuracy. These platforms provide the learner with immediate audio and visible feedback, which aids in correcting their time and improving their coordination in movements. For instance, Yousician also utilizes AI to determine users' abilities to match their rhythms to backing tracks. Its beat-tracking tool indicates where the goal pace is not being met and provides specific comments to assist with the regularity. In addition, SmartMusic, the mostly used software in schools, uses intelligent beat recognition to grade group performances and individual practice sessions. Teachers use performance screens to keep an eye on progress of students and find common mistakes while students get thorough rhythmic breakdowns. Melodics is onboarded to drill the drums and electronic instruments. It uses AI beat recognition to make complicated rhythm exercises fun and change-based upon the skill level of the student. Apart from the business software, there are research projects such as Google's Magenta Studio that explores the chance of AI rhythm generation and live beat alignment. These projects relate the study of learning music to the freedom of experimenting creatively. The case studies demonstrate that AI beat recognition increases student interest and increases the accuracy of teaching. With its real-time analysis, personalised feedback, and flexible learning pathways these tools transform the way music is taught and encourage students to practice by themselves and advance their skills in a variety of learning settings.

5.2. Analysis of Learning Outcomes and Student Engagement

Adding AI-powered beat recognition to school systems has made a big difference to the way the students learn and how engaged they are in their work. Studies and real life applications in the classroom demonstrate that students who use AI powered rhythm tools are more motivated, have more time and are able to tell the difference between sounds than students who use traditional teaching methods. By having students receive instant input if their pacing is incorrect, they can immediately adjust and repeat, and in so doing, reiterate, the proper way of doing the exercise. AI analytics monitor things such as tempo stability, beat alignment, and reaction time to provide teachers with exact information about the progress of each student. This is the method based on data which allows teachers to tailor their lessons and support students where they need it, so every student is learning at the right speed. Students are more interested when the game-like features are integrated into music learning with AI, such as progress tracking, tasks, and prizes. With these additions, practicing rhythm becomes a monotonous repeated task to a fun, and engaging task. AI-powered systems are also conducive to open learning, which implies that they can work with students of all skill levels, backgrounds, and learning speeds.

5.3. Challenges and Limitations in Implementation

Even though it has a lot of promise, using AI for beat recognition in schools comes with a lot of technical, educational, and moral problems. Noise in the background, bad recording, everything from a big array of instruments can alter the accuracy of beat tracking and it's a big problem. When AI models have been trained to play music with particular datasets, they may struggle with live recordings, beats that are not of a typical nature, regional styles of music etc. This demonstrates the issue of dataset bias and generalisation. From a technology standpoint, real-time beat recognition is very resource demanding for even deep learning systems that rely on CNNs and RNNs. Putting these kinds of systems to use on low-power devices or in places with unreliable internet, which happens a lot in schools, can make them less fast and easy to use. One of the main goals of study is to make sure that low-latency performance doesn't effect the accuracy of analysis. When teachers try to use the AI systems in their lessons, they run into problems regarding how they teach. It's possible that teachers don't know how to read computer comments or translate the findings of AI into lessons that are useful. Over-reliance on automated review would also lessen the emotional and creative engagement with music if the review is not accompanied by that of a human. Concerns around data protection, student tracking and openness of algorithms still exist from an ethical perspective.

6. Result and Discussion

The research shows that using AI to find beats has the advantage of greatly improving the accuracy of playing rhythms, learning speed, and student interest. Learners using rhythm tools with AI-integrated were found to have a better beat stability and faster mistake repair than those who used traditional methods. Real time input and flexible tools allowed people to teach themselves and be motivated. Data-driven observations helped teachers in many ways by allowing them to customize their lessons and monitor the progress of the students. Even though there are some small problems with computing limits and dataset bias, the results show that AI beat recognition is a good way to combine artificial intelligence with creative rhythm education, making both the teaching and learning experience better.

Table 2

|

Table

2 Comparison of Rhythm Accuracy Between Traditional

and AI-Assisted Learning |

||||

|

Group |

Average Beat Accuracy (%) |

Tempo Stability (%) |

Error Reduction Rate (%) |

Engagement Score (%) |

|

Traditional Learning |

68.5 |

72.4 |

14.2 |

68.8 |

|

AI-Assisted Learning (CNN Model) |

88.9 |

90.2 |

36.7 |

87.9 |

|

AI-Assisted Learning (RNN Model) |

91.4 |

92.7 |

39.8 |

94.2 |

|

Hybrid CNN–RNN Model |

94.6 |

95.8 |

42.5 |

95.6 |

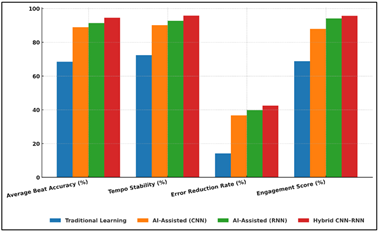

In Table 2, the success of the rhythm learning is compared between the old-fashioned method and AI assisted method using CNN, RNN and mixed CNN-RNN architectures. The results make it clear that AI-enhanced learning makes beat accuracy, time stability, and interest from pupils much better than traditional teaching. Figure 3 presents the differences in accuracy and efficiency of learning models.

Figure 3

Figure 3 Performance Metrics Comparison Across Learning Models

The average beat accuracy of the usual learning group was 68.5% and tempo stability was 72.4%, which means that they had some success, but not great regularity with keeping the pace. CNN models with AI on the other hand did 88.9% correct, proving the success of convolutional layers in plucking out of rhythmic patterns from the audio sources. Further, RNN-based systems improved even more to 91.4% accuracy. Figure 4 reveals successive improvements in performance from traditional to hybrid models. This demonstrates how well they are able to determine time relationships in rhythmic order.

Figure 4

Figure 4 Progressive Improvements from Traditional to Hybrid AI Learning Models

With 94.6% accuracy and 95.8% tempo stability the CNN-RNN model combined showed better performance than all the others. This illustrates the advantage of learning features both in space and time. Comparative strengths of various types of AI learning are shown in Figure 5.

Figure 5

Figure 5 Multidimensional Performance Profile of Learning Approaches

Also the involvement number went from 68.8% in normal learning to 95.6% in hybrid model, which indicates that real-time feedback and adjustable rhythm tasks really make students more motivated to learn.

7. Conclusion

At the point where music technology, AI and education all meet, beat recognition spurred by AI is a huge step forward. These systems make use of neural network designs such as CNNs and RNNs to analyse complex rhythmic patterns very accurately. This allows them to identify, visualise and provide feedback in real-time. This feature changes the way that rhythm is taught by allowing students to receive instant and objective feedback on their act, while fostering freedom and creativity in the learning of music. Adaptive algorithms in rhythm exercises ensure that these exercises evolve according to the child's proficiency level, promoting continuous progress and engagement. In addition to new technologies, the effects on schooling is huge. Rhythm systems run by AI make learning music more open and interesting so that more people can get good lessons outside of school. Whatever their walk in life, students can learn rhythmic skills using gamified learning tools allowing them to learn at their own pace and in their own way. AI beat recognition provides teachers with important information to focus their comments, organize lessons, and test the result of the learning of students. But in order for implementation to go well, some big problems have to be solved. These include different types of datasets, slow systems and social worries about data privacy. More study and teamwork between different fields are required so that these tools can be better and can be used in more schools.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Araneo, P. (2024). Exploring Education for Sustainable Development (ESD) Course Content in Higher Education: A Multiple Case Study Including What Students Say They Like. Environmental Education Research, 30(4), 631–660. https://doi.org/10.1080/13504622.2023.2280438

Aslam, N. M., Khan, I. U., Alamri, L. H., and Almuslim, R. S. (2021). An Improved Early Student’s Academic Performance Prediction Using Deep Learning. International Journal of Emerging Technologies in Learning, 16, 108–122.

Bozak, A., and Aybek, E. C. (2020). Comparison of Artificial Neural Networks and Logistic Regression Analysis in PISA Science Literacy Success Prediction. International Journal of Contemporary Educational Research, 7(2), 99–111. https://doi.org/10.33200/ijcer.693081

Chung, J. Y., and Lee, S. (2019). Dropout Early Warning Systems for High School Students Using Machine Learning. Children and Youth Services Review, 96, 346–353.

Costa-Mendes, R., Oliveira, T., Castelli, M., and Cruz-Jesus, F. (2021). A Machine Learning Approximation of the 2015 Portuguese High School Student Grades: A Hybrid Approach. Education and Information Technologies, 26(2), 1527–1547. https://doi.org/10.1007/s10639-020-10316-y

Dos Santos, L. M. (2022). Online Learning After the COVID-19 Pandemic: Learners’ Motivations. Frontiers in Education, 7, Article 879091.

Hamim, T., Benabbou, F., and Sael, N. (2021). Survey of Machine Learning Techniques for Student Profile Modeling. International Journal of Emerging Technologies in Learning, 16, 136–151.

Kucak, D., Juricic, V., and Dambic, G. (2018). Machine Learning in Education—A Survey of Current Research Trends. In B. Katalinic (Ed.), Proceedings of the 29th DAAAM International Symposium (406–410). DAAAM International. https://doi.org/10.2507/29th.daaam.proceedings.059

Martínez-Abad, F. (2019). Identification of Factors Associated with School Effectiveness with Data Mining Techniques: Testing a new Approach. Frontiers in Psychology, 10, Article 2583.

Nalawade, P. V., Bhandare, S., Bhole, S., Bhongale, V., and Ghayal, M. (2025). Acadlinker: Design and Evaluation a sKill-Based Student Network with a Touch of AI. International Journal of Recent Advances in Engineering and Technology, 14(2), 57–61.

Pouresmaieli, M., Ataei, M., Nouri Qarahasanlou, A., Barabadi, A. (2024). Building Ecological Literacy in Mining Communities: A Sustainable Development Perspective. Case Studies in Chemical and Environmental Engineering, 9, Article 100554.

Rebai, S., Ben Yahia, F., and Essid, H. (2019). A Graphically Based Machine Learning Approach to Predict Secondary Schools Performance in Tunisia. Socio-Economic Planning Sciences, 70, Article 100724. https://doi.org/10.1016/j.seps.2019.06.009

Romero, C., and Ventura, S. (2020). Educational Data Mining and Learning Analytics: An Updated Survey. WIREs Data Mining and Knowledge Discovery, 10, Article e1355. https://doi.org/10.1002/widm.1355

Salas-Pilco, S. Z., and Yang, Y. (2022). Artificial Intelligence Applications in Latin American Higher Education: A sYstematic Review. International Journal of Educational Technology in Higher Education, 19, Article 21. https://doi.org/10.1186/s41239-022-00326-w

Talal, H., and Saeed, S. (2019). A Study on Adoption of Data Mining Techniques to Analyze Academic Performance. ICIC Express Letters, Part B: Applications, 10, 681–687.

Topol, E. J. (2019). High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nature Medicine, 25, 44–56. https://doi.org/10.1038/s41591-018-0300-7

|

|

This work is licensed under a: Creative Commons Attribution 4.0 International License

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.