ShodhKosh: Journal of Visual and Performing Arts
ISSN (Online): 2582-7472


Virtual Reality Art Installations Powered by AI

Dr. Poonam Singh 1, Nimesh Raj 2, Dr Varsha Kiran Bhosale 3, Shikhar Gupta 4, Nishant Trivedi 5, Abhijeet Panigra 6

1 Associate Professor, ISME - School of Management and Entrepreneurship, ATLAS Skill Tech University, Mumbai, Maharashtra, India

2 Centre of Research Impact and Outcome, Chitkara University, Rajpura- 140417, Punjab, India

3 Associate Professor, Dynashree Institute of Engineering and Technology, Sonavadi-Gajavadi, Satara, India

4 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

5 Assistant Professor, Department of Animation, Parul Institute of Design, Parul University, Vadodara, Gujarat, India

6 Assistant Professor, School of Business Management, Noida International University 203201, India

ABSTRACT

This paper examines the intersection of Artificial Intelligence (AI) and Virtual Reality (VR) and how it is shaping the next generation of digital artworks. AI-assisted VR environments are altering how art is created, who owns it, and how it reaches audiences. The paper traces the development of virtual art from early digital art to recent visual and interactive art driven by AI. Machine learning and creative algorithms allow computers to construct images independently, and neural networks allow the appearance of objects in virtual spaces to be altered in real time. Natural Language Processing (NLP) is also employed to make stories interactive, enabling users to co-create and modify the art experience through conversation and mood tracking. The paper also covers the technical details of how such systems are developed and assembled: the hardware, the software structure and the model integration methods. Beyond the technical aspects, it discusses the effects on creativity and culture, asks how the role of the artist is evolving, and critically examines the social issues raised by AI-generated creation, namely authorship, algorithmic bias and cultural representation. It further considers the social and cultural consequences of interactive AI art, including its accessibility, emotional appeal and social engagement.

Received 23 January 2025

Accepted 18 April 2025

Published 10 December 2025

Corresponding Author: Dr. Poonam Singh, poonam.singh@atlasuniversity.edu.in

DOI: 10.29121/shodhkosh.v6.i1s.2025.6622

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Virtual Reality, Artificial Intelligence, Generative Art, Immersive Installations, Interactive Storytelling, Digital Creativity

1. INTRODUCTION

Over the last few years, the integration of art and technology has changed the concept of creativity, producing experiences so immersive that the real and the simulated are hard to tell apart. Virtual reality (VR) art galleries are one such game changer: they are strong tools for sensory engagement and for telling captivating stories. Combined with Artificial Intelligence (AI), such works move beyond traditional concepts of beauty and make it possible to create art spaces that are dynamic, changing and responsive Chen et al. (2024). AI-driven VR art exhibitions represent a major change in how art is created, produced and consumed. They combine computer science and human input to produce spaces where individuals can be creative alone or together. The idea behind virtual reality art is to immerse people in fully simulated computer worlds in which they actively engage their senses, feelings and thoughts. Unlike static artworks, which are confined to physical media, VR exhibits let people explore, take part in and even transform the experience in real time Ray (2023). AI makes this interaction more powerful, since the systems can observe how people use them, learn from sensor data and generate new ways of responding. This interdependence of human input and computer output turns the viewer into a co-creator and opens a new dimension of interactive art. Recent advances in machine learning, computer vision, natural language processing (NLP) and creative algorithms have enabled AI to be applied to virtual reality art Botti and Baldi (2024). With these tools, artists can create works that are not simply pre-programmed but can also change themselves. For example, generative adversarial networks (GANs) can produce a huge number of different visual forms, and systems that use reinforcement learning can change the look of a space or the plot in response to how people interact with them.

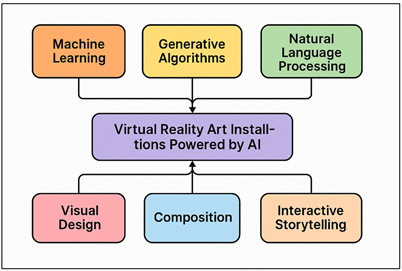

Additionally, NLP powers conversational tools that let people talk with virtual characters or places, creating deeply personal and emotional experiences of art. In terms of creativity, the artist is no longer the sole maker but becomes the planner of systems, setting up the rules, data sources and aesthetic guidelines that the AI uses Cruz et al. (2024). This change calls into question long-held ideas about who the author is, what is original and who controls the work. The creative process is both human and computer-based, which creates a new form of intelligence that enables artists to try out more ideas. Figure 1 illustrates AI integration, interaction and the creative workflow in immersive VR.

Figure 1 Functional Structure of AI-Based VR Art Installations

Immersion in AI-enabled VR displays also prompts people to think deeply about the nature of creation itself, and about whether art created by computers can carry the same emotion, purpose or cultural significance as art created by humans. The growing popularity of AI-driven VR art exhibitions shows how society as a whole is shifting towards digital experience and artificial control. People increasingly converse, play and learn in virtual worlds, and art is moving into those environments Far et al. (2023). These works are not only beautiful to watch; they are also places where identity, empathy and group awareness can be discussed in an increasingly technological world. Moreover, they create new ways for people of any social status to approach art creation and take part in it, without being restricted by physical space or conventional media.

2. Background and Theoretical Framework

1) Evolution of digital and immersive art forms

These technologies gave artists realistic 3D spaces in which people could move around, look at and change the art. These new ideas went beyond sight to include sound, movement and multisensory feedback, making art experiences fully immersive. Institutions such as the ZKM Centre for Art and Media Karlsruhe and Ars Electronica in Linz made great efforts to promote virtual and interactive installations that stretched the boundaries of how people normally consume art Davison et al. (2022). In the 21st century, Artificial Intelligence (AI) and machine learning have become closely tied to digital art Yang et al. (2022), which has led to more generative art and data-driven aesthetics. Artists now write programs that create or change works of art on their own, making it harder to distinguish human creation from machine agency.

2) Technological foundations of VR and AI

Artificial intelligence (AI) and virtual reality (VR) complement each other because both aim to simulate and enhance human experience using computers. VR relies on computer graphics, motion tracking, visual input and spatial computing to generate three-dimensional worlds that resemble or go beyond the real world. Immersive devices such as head-mounted displays (HMDs), motion controllers, haptic interfaces and spatial sensors let the user interact with virtual space in real time Santalo et al. (2023). Alongside these devices, rendering engines such as Unity and Unreal Engine handle visual fidelity, physics modelling and interaction. AI, by contrast, provides the cognitive layer that brings these virtual worlds to life through adaptive behaviour and creative intelligence Chen (2024). AI programs can generate procedural landscapes, responsive music or storylines that vary with the audience's engagement. Together, these tools let people connect in ways not previously possible. Neural style transfer and generative adversarial networks, for example, can alter images in real time, and reinforcement learning systems can adapt how people experience art based on their feelings or behaviours. Table 1 presents key projects in the evolution of digital art and technology.

Table 1 Summary of Background and Theoretical Framework

| Project | Focus Area | Technology Used | Findings | Source |
|---|---|---|---|---|
| AARON: The Painting Robot | AI-generated art | Expert systems, rule-based AI | Early example of autonomous generative art | University of California, San Diego |
| Machine Hallucinations | Immersive AI visuals | GANs, projection mapping, VR | Explored data-driven aesthetics in architecture | Artechouse NYC |
| Drawing Operations Unit | Human–AI collaboration | Neural networks, robotic arms | Showcased co-creation between artist and AI | MIT Media Lab |
| AMIs Experiments Nalbant and Uyanik (2022) | Interactive art and ML | TensorFlow, GANs | Enabled artist access to AI tools for creativity | Google Research |
| Chalkroom | VR storytelling | VR, Unity Engine | Created immersive narrative through text and flight | Sundance Film Festival |
| BOB (Bag of Beliefs) Fraser et al. (2024) | Live simulation art | Reinforcement learning, Unity | Explored AI consciousness through evolving virtual entities | Serpentine Galleries, London |
| Neural Glitch Studies | Generative aesthetics | GANs, style transfer | Investigated randomness and bias in AI art | Ars Electronica |
| Tilt Brush | Immersive art creation | VR painting tools | Enabled 3D artistic expression in VR | Google |
| Hyper-Reality | AR and identity | Mixed reality, AI narrative | Critiqued digital mediation of human experience | Keiichi Matsuda Studio |
| Unsupervised Barrow et al. (2024) | AI data sculpture | Machine learning, projection, VR | Transformed MoMA’s collection data into immersive visuals | Museum of Modern Art |
| AI Interactive Environment Project Drofova et al. (2024) | Generative virtual scenes | GANverse3D, deep learning | Synthesized dynamic 3D environments in real-time | Nvidia AI Labs |
| VR Presence Project | Cognitive immersion | AI avatars, haptic systems | Advanced realism in social VR art spaces | Meta (Facebook) |

3. AI Technologies in Virtual Reality Art

1) Machine learning and generative algorithms in visual design

AI-powered virtual reality (VR) art displays are creatively grounded in machine learning and generative algorithms. With these algorithms, complex and changing visual compositions can be created autonomously or semi-autonomously. In standard digital design, all visual pieces are made by hand. With machine learning, artists instead set parameters, datasets and influences that allow the system to generate new looks dynamically. Algorithms such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs) and methods based on evolutionary computation can generate new colours, shapes and patterns in real time, based on user actions or on information received from the environment. In VR art, these dynamic models allow the visual scenery to change continuously Latham et al. (2021). Biometric sensors can pick up how a person is looking, moving or feeling, and these signals can alter the colour scheme or shape of an installation. This real-time change in digital art is comparable to the way living things grow and natural events unfold.
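The mapping from biometric signals to a colour scheme can be illustrated with a minimal sketch. It is not drawn from any installation discussed in this paper: the signal names (`arousal`, `gaze_x`), their normalisation to the range 0 to 1, and the hue and saturation constants are all assumptions chosen for illustration, and a simple hand-written rule stands in for a trained generative model.

```python
import colorsys


def clamp(x, lo=0.0, hi=1.0):
    """Keep a value inside the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def palette_from_signals(arousal, gaze_x, n_colours=5):
    """Map two hypothetical normalised signals to a list of RGB tuples.

    arousal: assumed 0..1 score (for example derived from heart rate).
    gaze_x:  assumed horizontal gaze position, 0 = left, 1 = right.
    """
    arousal = clamp(arousal)
    gaze_x = clamp(gaze_x)
    # Calm viewers get a blue base hue (0.66); excited viewers get red (0.0).
    base_hue = 0.66 * (1.0 - arousal)
    # Higher arousal also makes the colours more saturated.
    saturation = 0.4 + 0.6 * arousal
    # Gaze position widens or narrows the spread of hues around the base.
    spread = 0.05 + 0.15 * gaze_x
    palette = []
    for i in range(n_colours):
        offset = (i - (n_colours - 1) / 2) * spread / n_colours
        hue = (base_hue + offset) % 1.0
        r, g, b = colorsys.hsv_to_rgb(hue, saturation, 0.9)
        palette.append((round(r * 255), round(g * 255), round(b * 255)))
    return palette


calm = palette_from_signals(arousal=0.0, gaze_x=0.5)
excited = palette_from_signals(arousal=1.0, gaze_x=0.5)
```

In a real installation the rule inside `palette_from_signals` would typically be replaced by the output of a generative model, with the resulting palette passed to the rendering engine each frame.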

2) Role of neural networks in dynamic visual composition

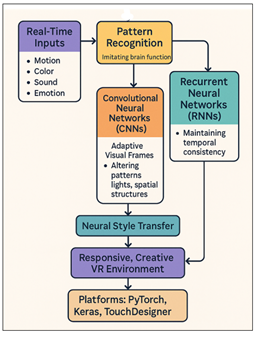

In AI-driven VR shows, neural networks, and deep learning models in particular, play a central role in producing visual arrangements that change according to the situation. These systems are loosely modelled on the way the brain recognises patterns in objects and events. This enables the machine to interpret complex inputs such as motion, colour, sound and emotion and convert them into smooth visual responses Gode et al. (2025). Artists can use convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to generate visual frames that change and adapt to real-time inputs.

Figure 2 AI Neural Architecture for Responsive VR Environments

This makes the virtual world responsive as well as creative. CNNs can, for example, process live sensor data such as a participant's movements or gaze direction and change the spatial structures or lighting patterns in the VR environment in real time. Figure 2 shows how neural networks enable adaptive, intelligent virtual art environments. Recurrent architectures such as LSTMs (Long Short-Term Memory networks) also make animations more consistent over time, so that changes in the visuals appear natural rather than artificial. Neural style transfer methods enhance these works further by combining artistic style with environmental cues to produce images that are always changing and reflect both artificial intelligence and human feeling. Neural networks, which are essentially the brains behind interactive art, construct visual meaning from various sources of data Spano et al. (2023). They allow VR displays to go beyond mere digital rendering and let the system and the user create something together in real time. Artists use platforms such as PyTorch, Keras and TouchDesigner to build these neural systems, combining art with deep computing. The result is a shifting relationship between human perception and machine intelligence, in which visual form is not only coded but also learnt, adapted and emotionally powerful. This expands the boundaries of what it means to experience art in virtual places.
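The goal of temporal consistency can be illustrated without a neural network. The sketch below uses an exponential moving average, a much simpler stand-in for the LSTM-based approach described above, to show why a visual parameter should approach its target gradually rather than jump. The class name and the `alpha` smoothing factor are hypothetical.

```python
class SmoothedParameter:
    """Exponentially smoothed visual parameter (e.g. light intensity).

    This is a simple stand-in for a recurrent model: it only remembers
    its previous value, whereas an LSTM would learn what to remember.
    """

    def __init__(self, initial, alpha=0.2):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.value = float(initial)
        self.alpha = alpha

    def update(self, target):
        """Move a fraction `alpha` of the way towards the new target."""
        self.value += self.alpha * (float(target) - self.value)
        return self.value


light = SmoothedParameter(initial=0.0, alpha=0.5)
first = light.update(1.0)   # halfway to the target
second = light.update(1.0)  # halfway again
```

A smaller `alpha` gives slower, calmer transitions; setting it to 1.0 disables smoothing so the parameter follows the raw input directly.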

3) Natural language processing for interactive storytelling

Natural Language Processing (NLP) adds verbal intelligence to virtual reality (VR) art pieces so that people can connect with them through conversation, feeling and story. NLP turns watching a story into active co-creation with computers that understand, generate and react to human language. Using models such as transformers (e.g. GPT-based designs) and speech recognition systems, VR setups can interpret users' spoken or written input and change the story, the behaviour of characters or the way the world moves in response. In AI-driven interactive stories, NLP is used not only to generate new content but also to understand what users mean. For example, when a person speaks to a fictional character, the system examines the tone, meaning and emotion of the speech to produce replies appropriate to the situation. Interactive stories let users influence the direction of the narrative, which can affect the user's feelings, the events in a location or even the appearance of the virtual world. In this way NLP adds narrative flexibility: each experience plays out differently depending on what people say and how the system interprets it. Multimodal NLP, which combines text, voice and motion recognition, also deepens immersion by aligning spoken and unspoken cues. This combination lets the art "listen" and "feel", and blurs the line between real-life conversation and computer-generated art. To build language capabilities into VR systems, artists can use tools such as OpenAI's GPT models, Google Dialogflow and IBM Watson Assistant.
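The way a spoken input can steer a branching narrative can be sketched as follows. The story graph, the scene names and the keyword lists are all hypothetical, and keyword matching is used here only as a placeholder for the transformer or dialogue service that a real installation would call.

```python
import re

# Hypothetical story graph: for each scene, which intent leads to which scene.
STORY = {
    "garden": {"explore": "forest", "rest": "lake"},
    "forest": {"rest": "lake"},
    "lake": {"explore": "forest"},
}

# Hypothetical keyword lists standing in for a trained intent classifier.
INTENT_KEYWORDS = {
    "explore": {"explore", "walk", "go", "forest", "wander"},
    "rest": {"rest", "sit", "calm", "lake", "water"},
}


def classify_intent(utterance):
    """Return the intent with the most keyword hits, or None if none match."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    best_intent, best_hits = None, 0
    for intent, vocabulary in INTENT_KEYWORDS.items():
        hits = len(words & vocabulary)
        if hits > best_hits:
            best_intent, best_hits = intent, hits
    return best_intent


def next_scene(current_scene, utterance):
    """Follow the story graph; stay in place if the intent is not understood."""
    intent = classify_intent(utterance)
    return STORY[current_scene].get(intent, current_scene)


scene = next_scene("garden", "Let's explore the forest!")
```

Keeping the story graph separate from the intent classifier means the classifier can later be swapped for a language model without touching the narrative structure.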

4. Design and Implementation of AI-Powered VR Installations

1) Concept development and creative process

AI-powered VR art displays begin with a conceptual vision that brings together artistic purpose, technical feasibility and experience design. Artists and coders collaborate to define the emotional, physical and interactive goals of the installation. They decide how the audience should feel, what they should see and how they can contribute to shaping the space. The first step in this phase is usually to develop a story or theme, such as exploring awareness, identity or nature. The idea is then translated into visual, audio and bodily metaphors through AI-powered processes. The creative process brings human thought and machine intelligence together in repeated rounds of experimentation. Artists select datasets or supply inputs during training so that generative models achieve a certain style or mood. For instance, images of natural phenomena can serve as data sources, and poems or philosophical texts can be used to train language models that change the tone of stories. Working together, artists, engineers, data scientists and sound designers ensure that artistic integrity is preserved while making the most of the available computing power. Storyboarding and testing follow, often with 3D modelling and simulation environments, to examine how the space moves and how people move through it. It is very important that the interaction design considers how people engage with the work through words, movement or gaze.

2) Technical setup: hardware, software, and frameworks

To create realistic and flexible worlds, AI-powered VR displays require tight integration of hardware, software and computing tools. Head-mounted displays (HMDs) such as Meta Quest, HTC Vive or Valve Index are typically used; they provide high-resolution stereoscopic imagery and motion tracking. Spatial audio systems, haptic gloves, depth cameras and motion sensors are complementary systems that enhance embodiment and interaction, allowing users to experience and alter their surroundings in a natural way. Unity3D and Unreal Engine are among the most important software tools for creating VR worlds. They offer real-time rendering, physics simulation and plugins for AI content. Artists commonly use Python, C# or Blueprint scripts to connect AI models to visual and behavioural components. AI processing can run locally on GPU-powered computers or in the cloud, which allows complex models such as neural networks and generative systems to run quickly and with minimal delay. Frameworks such as TensorFlow, PyTorch and RunwayML are commonly used to train and deploy models that generate or transform audio, video and text content. Middleware tools and APIs such as the OpenAI API, Google Cloud AI and AWS Deep Learning AMIs make it easier to integrate large amounts of data and perform inference in real time.

3) Integration of AI models with VR platforms

The most important step in bringing the creative and technical design to life is integrating AI models with VR platforms. This process connects AI capabilities (generative graphics, adaptive storylines or emotional responses) with the immersive design of virtual worlds. The first step is to establish a way for the AI model and the VR engine to share data. Real-time communication is set up through APIs, SDKs or custom tools so that the AI can process user input and alter visual, audio or story elements in real time. A Generative Adversarial Network (GAN), for example, might continuously alter patterns and spatial designs based on how a person moves or where they look, as captured through motion or gaze tracking. Equally important, Natural Language Processing (NLP) models embedded in the VR system enable interaction by voice: the AI interprets what is being said and adjusts the world or story to suit. Reinforcement learning algorithms can make the experience even more personal by adjusting the level of difficulty, the pace or the emotional tone to the state of the user. Engine-specific plugins and tools, such as Unity ML-Agents, Unreal's AI Toolkit or custom Python bridges, make this integration possible. They ensure that AI inference and VR graphics systems interact in real time. Cloud integration also supports complex AI calculations by offloading the work to remote servers for scaling. In the end, integration works best when interaction has no perceptible delay and the aesthetics remain consistent. AI responses must feel natural, quick and emotionally consistent.
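The data exchange between a VR engine and an AI model can be sketched as a pair of JSON messages with a latency check. Everything here is an assumption made for illustration: the message fields, the 50 ms budget, and `toy_model`, which is a placeholder for a real inference call. Both sides run in one process so that the timestamps are comparable; a networked deployment would need synchronised clocks or engine-side timing instead.

```python
import json
import time

LATENCY_BUDGET_MS = 50.0  # hypothetical target for a responsive experience


def encode_user_event(gaze, speech):
    """VR-engine side: package tracked input as a JSON message."""
    return json.dumps({
        "type": "user_event",
        "gaze": gaze,          # assumed [x, y] position, each in 0..1
        "speech": speech,      # assumed transcript from speech recognition
        "sent_at": time.monotonic(),
    })


def toy_model(event):
    """Placeholder for a real model: derive scene changes from the event."""
    x, _y = event["gaze"]
    return {
        "light_intensity": round(0.5 + 0.5 * x, 3),
        "caption": event["speech"].upper(),
    }


def ai_respond(message, model):
    """AI side: decode the event, run the model, report the latency."""
    event = json.loads(message)
    update = model(event)
    elapsed_ms = (time.monotonic() - event["sent_at"]) * 1000.0
    return json.dumps({
        "type": "scene_update",
        "update": update,
        "latency_ms": elapsed_ms,
        "within_budget": elapsed_ms <= LATENCY_BUDGET_MS,
    })


reply = json.loads(ai_respond(encode_user_event([1.0, 0.0], "hello"), toy_model))
```

Reporting `within_budget` with every reply lets the engine fall back to a cached or simpler response when inference is too slow, which is one way to keep interaction feeling immediate.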

5. Artistic and Cultural Implications

1) Transformation of the artist’s role in AI-driven creation

As AI-powered VR displays have become more popular, they have changed the job of the artist, who now does more conceptual organisation and system design than direct production. In the past, artists created physical objects or digital works that did not change. AI-driven art, by contrast, requires artists to be creative coders, data curators and experience designers, as well as designers of frameworks that let the art evolve on its own. With this change, the artist is no longer only a maker but also a partner of intelligent systems, one who sets the rules for creation instead of being responsible for every result. AI art emphasises process over result, and creation occurs on the fly as algorithms interact. Artists select datasets, train models and make decisions about the models' behaviour, all of which influence how the work is generated. Because AI output is difficult to predict, it fosters new types of creativity and experimentation, and frequently produces styles that could not have been foreseen. This shifting relationship challenges traditional notions of ownership and creativity, since the art results from a conversation between the artist's intuition and machine learning. Artists are also increasingly intermediaries between technology and the public, using interactive tools to make people feel, think and interact.

2) Ethical considerations: authorship, bias, and creativity

When AI is used to create art, difficult ethical questions arise about ownership, bias and the integrity of the work. It is often difficult to determine whether the author of a work is the human or the algorithm. Who owns the work of art: the creator, the AI system or those who supplied the data? Because AI outputs are based on huge datasets, they raise concerns about intellectual property and creative credit, especially when the training data contains material used without permission or culturally sensitive images. Algorithmic bias is also a serious moral problem. AI systems learn from records assembled by humans, which may contain unintended racial, cultural or gender biases. Many opponents of AI art believe that it devalues human creativity, while others see it as a means of enhancing art and discovering new ways to be creative in the digital age. Ethical frameworks need to evolve along with these tools, with an emphasis on collaboration, responsibility and critical reflection.

3) Socio-cultural impact of immersive AI art

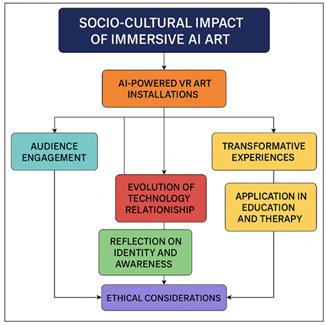

Immersive AI art is changing modern culture by altering the way people experience creation, feeling and group identity. In contrast to traditional passive art, AI-powered VR displays encourage viewers to interact and take part, making the art process conversational and collaborative. This participatory model means that complex digital expression becomes accessible to more people. AI art reflects how our relationship with technology has evolved in a cultural context. It prompts reflection on identity, consciousness and the boundary between the natural and the artificial. Its distinctive combination of immersive experience and artificial intelligence makes people think and feel differently than traditional media do, and can offer them life-changing experiences.

Figure 3 Conceptual Diagram of AI-Driven Immersive Art and Its Societal Impact

These experiences can help individuals understand, care about and empathise with people from different cultures, particularly on global matters such as climate change, migration or online surveillance. Figure 3 depicts the societal, cultural and emotional effects of immersive AI art. Immersive AI art is also increasingly used in therapy, education and social action, where its interactive capabilities help individuals learn and recover emotionally. It also leaves people questioning how dependent they are becoming on technology and what counts as real, as virtual realms move closer and closer to real social spaces. Artists and organisations should therefore balance new ideas against people's mental health so that designs are responsible and inclusive. Ultimately, the implications of interactive AI art for society and culture go well beyond its appearance. It is changing how people live in the 21st century. It brings art to life as a social interface in which AI and human experience converge, transforming how we think about meaning, connection and imagination through a combination of creation, technology and group involvement.

6. Future Directions and Innovations

1) Advancements in multimodal AI and haptic technologies

The next stage in AI-driven VR art is the integration of multimodal AI with haptic technologies, which should provide a more immersive experience. Multimodal AI systems combine inputs such as vision, sound, language and movement in a single context-aware system to form coherent experiences. Such systems can observe human behaviour and generate content in response. This gives installations something like senses of their own: they can see, hear and react with emotional force. Future displays may, for example, integrate real-time speech recognition, gesture tracking and visual synthesis to render simulated worlds that evolve depending on how people interact with them. Virtual art will become something people can touch as haptic interfaces, including touch gloves, full-body suits and brain feedback devices, mature. Touch-based interaction links the digital and the real world and makes interactive experiences more tangible. These technologies will let artists explore texture, resistance and sound as expressive tools, turning virtual places into genuinely emotional places. As multimodal AI develops, collaboration between humans and machines will become easier and more natural, and the line between sensation and art will become less apparent. The combination of AI awareness and haptic realism will not only influence the development of interactive art but also open new opportunities for telling more emotional, empathic and embodied stories. These ideas suggest that the future of art will be multimodal and intelligent: a coexistence of computer-created and human-created experiences that engages all of our senses and feelings.

2) Expanding participatory and collaborative VR environments

The next step for AI-powered VR art is to encourage group creation, making viewers active co-creators rather than spectators. As networked virtual environments become more robust, VR environments will evolve into social, collaborative communities in which people from across the world interact in real time. With the help of AI, these participatory platforms can promote social creation by letting people collaborate on images, sound or narratives within one virtual space. AI will support this collaboration by analysing each person's contribution and assembling all contributions into coherent artistic experiences. For example, generative algorithms could take different kinds of creative input, such as spoken words, gestures or drawings, and combine them into a visual and auditory performance. Advances in cloud-based VR and decentralised digital infrastructure will also make participation scalable, so that artists, teachers and groups can take part and create across institutions and locations. These collaborative spaces will also encourage conversation between people of different cultures, making art a way for everyone to feel welcome and understand each other.

3) Cross-disciplinary potential in education and therapy

The combination of AI and VR has the potential to change many fields, especially education and therapy, where virtual art can serve as a way both to learn and to heal. In educational settings, AI-powered VR displays can model difficult concepts through hands-on learning, letting students explore subjects such as physics, history or philosophy in an environment that is both visually and emotionally engaging. To make these experiences more relevant to each student, adaptive AI systems can vary the difficulty, pace and material according to how students think and feel. In therapeutic settings, interactive AI art can support mental health, recovery and emotional processing. Through guided sensory exposure, VR environments can help people work through trauma, reduce anxiety or increase awareness using immersive visuals, soundscapes and responsive narratives. AI-powered biofeedback systems can monitor physiological indicators, such as eye movement or heart rate, and alter the tone, colour or rhythm of the environment in real time to make it more relaxing or more stimulating as needed. Because these tools apply across fields, they also facilitate collaboration between artists, psychologists, educators and neuroscientists, linking art with science. This convergence helps to produce well-rounded, people-focused applications that are creative, empathetic and intelligent.
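The biofeedback loop described above can be sketched as a simple proportional controller. The target heart rate, the gain and the two environment parameters (`tempo` and `warmth`) are hypothetical values chosen for illustration; they are not clinical recommendations and are not taken from any system cited in this paper.

```python
def clamp(x, lo, hi):
    """Keep a value inside the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def adjust_environment(heart_rate_bpm, state, target_bpm=70.0, gain=0.01):
    """Return a new environment state nudged by the heart-rate error.

    A heart rate above the target slows the tempo and cools the colours
    (more relaxing); a heart rate below the target does the opposite
    (more stimulating). All constants are illustrative assumptions.
    """
    error = heart_rate_bpm - target_bpm
    return {
        "tempo": clamp(state["tempo"] - gain * error, 0.5, 1.5),
        "warmth": clamp(state["warmth"] - gain * error, 0.0, 1.0),
    }


state = {"tempo": 1.0, "warmth": 0.5}
calmer = adjust_environment(90.0, state)    # elevated heart rate
livelier = adjust_environment(50.0, state)  # low heart rate
```

Calling `adjust_environment` once per sensor reading and feeding the returned state back in on the next call gives a gradual, bounded response rather than abrupt changes.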

7. Conclusion

The convergence of Artificial Intelligence (AI) and Virtual Reality (VR) is a major milestone in the history of art and technology. It transforms our experience of creation, consciousness, and participation in the digital era. AI-driven VR art installations stretch the boundaries of artistic convention by creating lifelike worlds that appear to think, feel, and react. They are not only an improvement in the medium; they represent a shift in how art is created and how people relate to it. With the help of machine learning, neural networks, and natural language processing, among other technologies, these pieces become living ecosystems that keep changing, creating something new, and connecting with people in real time. At their core, such works show that art is no longer a fixed image but a continuous conversation between human emotion and computer intelligence. The artist's role is no longer only to make objects but to collaborate with others in building programs, data, and systems that generate meaning. This new order challenges traditional concepts of originality, authorship, and sincerity. It suggests that creation in the age of AI is a new kind of communal event. Culturally, AI-based interactive art is well placed to promote inclusion, accessibility, and experiences that transcend barriers of language and location.

CONFLICT OF INTERESTS

None.

ACKNOWLEDGMENTS

None.

REFERENCES

Barrow, J., Hurst, W., Edman, J., Ariesen, N., and Krampe, C. (2024). Virtual Reality for Biochemistry Education: The Cellular Factory. Education and Information Technologies, 29, 1647–1672. https://doi.org/10.1007/s10639-023-11826-1

Botti, A., and Baldi, G. (2024). Business Model Innovation and Industry 5.0: A Possible Integration in GLAM Institutions. European Journal of Innovation Management. Advance online publication. https://doi.org/10.1108/EJIM-09-2023-0825

Chen, H. (2024, March 9–10). Comparative Analysis of Storytelling in Virtual Reality Games vs. Traditional Games [Conference presentation]. 7th International Conference on Financial Management, Education and Social Science, Paris, France.

Chen, H., Duan, H., Abdallah, M., Zhu, Y., Wen, Y., Saddik, A. E., and Cai, W. (2024). Web3 Metaverse: State-of-the-Art and Vision. ACM Transactions on Multimedia Computing, Communications, and Applications, 20, 1–42. https://doi.org/10.1145/3630258

Cruz, M., Oliveira, A., and Pinheiro, A. (2024). Metaverse Unveiled: From the Lens of Science to Common People Perspective. Computers, 13, Article 193. https://doi.org/10.3390/computers13080193

Davison, R. M., Wong, L. H., and Peng, J. (2022). The Art of Digital Transformation as Crafted by a Chief Digital Officer. International Journal of Information Management, 69, Article 102617. https://doi.org/10.1016/j.ijinfomgt.2022.102617

Drofova, I., Richard, P., Fajkus, M., Valasek, P., Sehnalek, S., and Adamek, M. (2024). RGB Color Model: Effect of Color Change on a User in a VR Art Gallery Using Polygraph. Sensors, 24, Article 4926. https://doi.org/10.3390/s24154926

Far, S. B., Rad, A. I., Bamakan, S. M. H., and Asaar, M. R. (2023). Toward Metaverse of Everything: Opportunities, Challenges, and Future Directions of the Next Generation of Visual/Virtual Communications. Journal of Network and Computer Applications, 217, Article 103675. https://doi.org/10.1016/j.jnca.2023.103675

Fraser, A. D., Branson, I., Hollett, R. C., Speelman, C. P., and Rogers, S. L. (2024). Do Realistic Avatars Make Virtual Reality Better? Examining Human-Like Avatars for VR Social Interactions. Computers in Human Behavior: Artificial Humans, 2, Article 100082. https://doi.org/10.1016/j.chbah.2024.100082

Gode, U., Jaiswal, N., Satfale, P., Japulkar, A., and Choudhary, A. (2025). Advanced T-Junction Visibility Enhancement System. International Journal of Electrical Engineering and Computer Science, 14(1), 193–198.

Latham, W., Todd, S., Todd, P., and Putnam, L. (2021). Exhibiting Mutator VR: Procedural Art Evolves to Virtual Reality. Leonardo, 54, 274–281. https://doi.org/10.1162/leon_a_01857

Nalbant, K. G., and Uyanik, S. (2022). A Look at the New Humanity: Metaverse and Metahuman. International Journal of Computers, 7, 7–13.

Ray, P. P. (2023). Web3: A Comprehensive Review on Background, Technologies, Applications, Zero-Trust Architectures, Challenges and Future Directions. Internet of Things and Cyber-Physical Systems, 3, 213–248. https://doi.org/10.1016/j.iotcps.2023.05.003

Santalo, C., Corsa, A. J., Duque, A., and Baron-Robbins, A. (2023). The Boca Bauhaus Marcel Breuer, BRiC and Lynn University’s NFT Museum. Digital Creativity, 34, 282–295. https://doi.org/10.1080/14626268.2023.2273532

Spano, G., Theodorou, A., Reese, G., Carrus, G., Sanesi, G., and Panno, A. (2023). Virtual Nature, Psychological and Psychophysiological Outcomes: A Systematic Review. Journal of Environmental Psychology, 89, Article 102044. https://doi.org/10.1016/j.jenvp.2023.102044

Yang, Q., Zhao, Y., Huang, H., Xiong, Z., Kang, J., and Zheng, Z. (2022). Fusing Blockchain and AI with Metaverse: A Survey. IEEE Open Journal of the Computer Society, 3, 122–137. https://doi.org/10.1109/OJCS.2022.3188249

This work is licensed under a: Creative Commons Attribution 4.0 International License

© ShodhKosh 2024. All Rights Reserved.