ShodhKosh: Journal of Visual and Performing Arts
ISSN (Online): 2582-7472

Intelligent Music Recommendation for Classroom Learning

Navnath B. Pokale 1, Kanika Seth 2, Dr. M D Anto Praveena 3, Dikshit Sharma 4, Dr. Vineet Kumar 5, Sanjay Kumar Jena 6

1 Department of Artificial Intelligence and Data Science, Dr. D. Y. Patil Institute of Technology, Pimpri, Pune, Maharashtra-411018, India

2 Chitkara Centre for Research and Development, Chitkara University, Himachal Pradesh, Solan, 174103, India

3 Associate Professor, Department of Computer Science and Engineering, Sathyabama Institute of Science and Technology, Chennai, Tamil Nadu, India

4 Centre of Research Impact and Outcome, Chitkara University, Rajpura-140417, Punjab, India

5 Assistant Professor, Department of Computer Science and Engineering (Cyber Security), Noida Institute of Engineering and Technology, Greater Noida, Uttar Pradesh, India

6 Assistant Professor, Department of Computer Science and Engineering, Institute of Technical Education and Research, Siksha 'O' Anusandhan (Deemed to be University), Bhubaneswar, Odisha, India

ABSTRACT

Introducing music into classroom learning environments has been reported to support students' cognitive engagement, attention, and memory. This research proposes an Intelligent Music Recommendation System (IMRS) that adapts background music to the learner's cognitive state, task, and preferences. The study uses a mixed-methods design that combines quantitative analysis of learning outcome measures with qualitative accounts of student experience. The proposed system uses machine learning and deep learning algorithms, namely Convolutional Neural Networks (CNN), Long Short-Term Memory (LSTM) networks, K-Nearest Neighbours (KNN), and Support Vector Machines (SVM), to analyse music features and predict suitable soundscapes for different learning situations. Information about each student, such as age, subject, attention level, and mood, is combined with information about the environment to produce personalised music suggestions. Music files are processed for emotional mapping, feature extraction, and tag extraction so that they match cognitive load requirements. The IMRS framework is evaluated against traditional static background music on how well it supports focus, relaxation, and retention. The results indicate that AI-driven personalisation is useful in educational contexts: intelligent music selection can help students do better in school and feel more satisfied. The work combines artificial intelligence, music psychology, and educational technology with the aim of making learning more engaging and more effective.

Received 10 January 2025

Accepted 03 April 2025

Published 10 December 2025

Corresponding Author: Navnath B. Pokale, nbpokale@gmail.com

DOI: 10.29121/shodhkosh.v6.i1s.2025.6618

Funding: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Copyright: © 2025 The Author(s). This work is licensed under a Creative Commons Attribution 4.0 International License. With the license CC-BY, authors retain the copyright, allowing anyone to download, reuse, re-print, modify, distribute, and/or copy their contribution. The work must be properly attributed to its author.

Keywords: Intelligent Music Recommendation, Classroom Learning, Machine Learning, Personalized Education, Cognitive Enhancement

1. Introduction

Integrating artificial intelligence (AI) into schools has changed how students experience learning in recent years. Among these developments, the use of music as an aid to mental and emotional well-being is receiving increasing attention. Many studies in psychology and neuroscience report that music influences mood, motivation, focus, and memory recall, all of which matter for learning. The difficult part is identifying and playing the right music for each learner's cognitive state, task, and emotional state. This gap has motivated the development of Intelligent Music Recommendation Systems (IMRS) designed for classroom learning. Historically, music in the classroom meant predetermined tracks or generic playlists that did not account for the diversity of learners or the differing requirements of different activities. Such uniformity can lead to mixed outcomes: what helps one student focus may distract another. For this reason, personalisation is essential. AI-driven recommendation systems, which are widely used in entertainment, e-commerce, and healthcare, offer a workable approach through adaptive learning algorithms that analyse user preferences and behavioural data. Applying the same ideas to educational music makes it possible to align musical elements such as tempo, rhythm, and emotional tone with the cognitive needs and emotional states of learners on the fly. The proposed Intelligent Music Recommendation System aims to create an adaptive learning environment that uses AI to select music that helps students learn better.

The system considers many factors, such as the type of student, the learning goals, the time of day, and the difficulty of the task. It also examines data from physiological or behavioural indicators such as stress or attention span Akgun and Greenhow (2022). By combining machine learning and deep learning models such as CNN, LSTM, KNN, and SVM, the system can handle large amounts of music data, extract audio features, and make personalised suggestions suited to different learning situations. This method also bridges the disciplines of educational psychology, music cognition, and artificial intelligence. Previous studies have linked background music to learning outcomes, but automatic systems that intelligently suggest or change music based on real-time learner data are not yet well understood González-González (2023). The Intelligent Music Recommendation System attempts to address this gap with a scalable, data-driven framework that learns from user feedback and improves its predictions over time. The main aim of this research is to understand how AI-based personalised music selection can help students concentrate, reduce mental fatigue, and remember more while performing school tasks. By combining personalisation with pedagogy, the proposed system could shift the role of music in education from a passive background element to an active, intelligent learning aid González-Gutiérrez and Merchán-Sánchez-Jara (2022). Ultimately, this study adds to the growing body of work that combines technology-enhanced learning and affective computing, and it illustrates the potential of AI to change how people learn in the future.

2. Literature Review

1) Theories on the relationship between music and learning outcomes

Several cognitive, psychological, and neural theories have been used to examine the link between music and learning. The Arousal-Mood Hypothesis is one of the key concepts. It holds that music affects students' mood and level of arousal, which in turn affects their ability to focus and remember. Moderate tempo and harmonious rhythms can help learners stay alert and motivated, whereas overly complex or fast-paced music can be distracting Ma and Jiang (2023). The Cognitive Capacity Model states that background music competes for limited cognitive resources, so the music must be matched to the cognitive load of the learning task. Recent neuroscientific research supports these ideas: certain types of music engage the brain's dopaminergic system, which is involved in processing pleasure and reward, and this can indirectly make learners more motivated and persistent Killian (2019). Gardner's Theory of Multiple Intelligences also counts musical intelligence among the core intelligences, which implies that auditory experience can support learning in other domains. All of these theories are consistent with the notion that music can change how people pay attention, regulate their emotions, and remember, provided it is matched to the requirements of the task and the student. Understanding these connections therefore provides the foundations needed to build intelligent systems that suggest music suited to teaching settings Ajani et al. (2024), Akgun and Greenhow (2022).

2) Overview of recommendation systems and AI-based personalization

Recommendation systems are now an important part of many digital platforms and shape how people find entertainment, shop, and learn. At their most basic, these systems predict what users want from historical data, behavioural patterns, and contextual factors González-González (2023). There are three main types of traditional recommendation methods: content-based filtering, which matches user profiles against item features; collaborative filtering, which exploits similarities between users or between items; and hybrid systems, which combine both methods for better results. As AI has advanced, deep learning models such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have improved personalisation considerably by learning complex, nonlinear relationships in large amounts of data González-Gutiérrez and Merchán-Sánchez-Jara (2022). These models can capture temporal dependencies, emotional tone, and contextual nuances, which allows recommendations to be adaptive rather than static. In educational technology, AI-driven personalisation has moved beyond content delivery to include adjustments based on students' emotions and ways of thinking, taking into account their learning style, attention span, and motivation. Applied to music recommendation, AI can take into account the user's mood, the type of task, and physiological signals to find the music best suited to the learner's cognitive state Lee et al. (2021). An intelligent music recommendation system for the classroom builds on this evolution of recommendation systems from basic filtering to intelligent, context-aware agents.

3) Previous studies on music-based learning enhancement

Research has shown that music affects learning differently depending on factors such as the type of music, the difficulty of the task, and individual differences. Early research reported that classical music, specifically Mozart's, can improve spatial-temporal reasoning, a finding often referred to as the "Mozart Effect." Later research extended this finding by showing that any music that is pleasant, familiar, or has a steady beat can improve mood and mental agility. Background music has been reported to strengthen associative memory pathways, which helps language learners remember words and pronounce them correctly Lee and Ho (2023). Similarly, regularly structured music helps students learn to recognise patterns and think mathematically. Other studies warn, however, that music with irrelevant lyrics can make reading comprehension and problem solving harder by consuming too much working memory. Recent investigations that rely on physiological measures such as EEG, heart rate, or eye tracking indicate that personalised music can help regulate stress and sustain focus during long study tasks Wang and Liu (2023). AI-based systems are beginning to use deep learning models to personalise music that helps people focus or relax. Although the results are promising, most existing tools are domain-specific and cannot adapt in real time to changing classroom conditions.

Table 1 Summary of Literature Review

| Study Title | Domain | Methodology | Algorithm | Key Findings | Limitation |
|---|---|---|---|---|---|
| Effects of Background Music on Learning Parkita (2021) | Cognitive Psychology | Experimental | Statistical Analysis | Music improves mood and task focus | Lacks personalization |
| Adaptive Music for Learning Enhancement | Educational Technology | Hybrid | Neural Network | Adaptive music increased retention | Limited dataset |
| Emotion-Based Music Recommender | Affective Computing | Quantitative | SVM, KNN | Emotional tags improve accuracy | Ignores learning context |
| AI-Driven Music Recommendation Khadilkar (2024) | AI Systems | Deep Learning | CNN | CNN captured tempo features well | No user profiling |
| Personalized Study Music App | Mobile Learning | Prototype Testing | Collaborative Filtering | Personalized playlists aid focus | Subjective feedback only |
| Cognitive Load and Background Music Parkita (2021) | Educational Psychology | Experimental | Regression Analysis | Moderate music reduced mental fatigue | Small sample size |
| Context-Aware Music Recommendation | Machine Learning | Hybrid | CNN + SVM | Context-awareness improved precision | Limited classroom testing |
| Deep Learning for Emotion-Based Music Tagging Selmani (2024) | Affective Computing | Deep Learning | CNN-LSTM Hybrid | High accuracy in emotion tagging | Computationally expensive |
| EEG-Based Music Adaptation System | Neuro-EdTech | Experimental | ANN | EEG feedback improved engagement | Requires sensors |
| Hybrid Recommendation Framework | Recommender Systems | Quantitative | Hybrid CF + NN | Improved precision in personalization | Limited to entertainment |

3. Methodology

3.1. Research design and approach (quantitative, qualitative, or hybrid)

To obtain a full picture of how intelligent music recommendation affects classroom learning outcomes, this study uses a mixed research strategy that combines quantitative and qualitative methods. The quantitative part covers measurable quantities, such as how focused a student is, how long a task takes to finish, and how well the student performs under different music conditions. Controlled studies will compare learning across groups exposed to AI-recommended music, random background music, and silence. Statistical methods such as analysis of variance (ANOVA) and regression analysis will be applied to test for significant associations between the type of music and cognitive performance. The qualitative part complements this with structured interviews, focus groups, and reflective surveys that capture student experiences from their own point of view. This makes it possible to examine emotional and cognitive dimensions that may not be evident from numbers alone. The hybrid design thus provides both numerical precision and contextual richness, which allows strong triangulated insights. Ultimately, the research seeks to determine whether personalised music suggestions made by the intelligent system can significantly improve focus, reduce stress, and support memory.
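The ANOVA comparison described above can be illustrated with a short sketch. It computes the one-way F statistic by hand for three groups (silence, random music, AI-recommended music). The individual scores are invented purely for illustration and are not study data, and the function name `one_way_anova_f` is hypothetical.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic and its two degrees of freedom."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Invented focus scores (illustrative only, NOT data from the study).
silence = [66, 70, 68, 67, 69]
random_music = [73, 75, 74, 72, 76]
ai_music = [88, 90, 89, 87, 91]

f_stat, df_b, df_w = one_way_anova_f([silence, random_music, ai_music])
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F value relative to the F distribution with the same degrees of freedom would indicate that at least one group mean differs; a full analysis would also report the p-value and post-hoc comparisons.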

3.2. System architecture for intelligent music recommendation

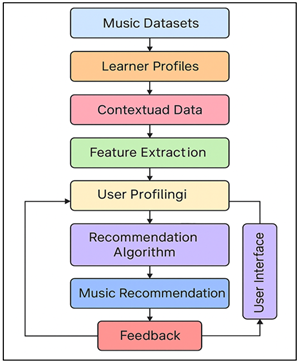

The proposed Intelligent Music suggestion System (IMRS) consists of five major components, a data entry layer, a feature extraction module, a user profile engine, a suggestion algorithm, and a feedback loop. The data entry layer gathers data from numerous places, such as music records, student profiles, and pertinent data, such as the kind of topic, difficulty of the job, and how much noise is in the environment. The feature extraction tool applies signal processing and deep learning techniques such as Mel-Frequency Cepstral Coefficients (MFCCs) and spectral contrast analysis to find out the speed, rhythm, pitch, timbre, and emotional tone of audio data.

Figure 1

Figure 1 System Architecture of the Intelligent Music

Recommendation Framework for Classroom Learning

In order to create individualised learning models, the user profile engine considers demographic, behavioural and psychophysiological factors such as age, attention span, and stress levels. This data flow, processing, recommendation and adaptive feedback integration is illustrated in Figure 1. Modelled after CNNs, LSTMs and SVMs, the suggestion system works out what kind of music will work best for each learning situation. The feedback loop takes real time performance data and user comments to ensure the system settings are up to date. This allows suggestions to get better as time goes. This design is flexible and scalable, so it will be able to work with other e-learning tools and classroom management systems. Overall, the design enables data-driven decisions to be personalized. It also provides students with sound environments that are optimised constantly in relation to the mental and emotional state of the students.
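One simple way to picture the feedback loop is as a running score per learning context and track that is nudged toward each new observation. The sketch below is an assumption made for illustration only: the class name `FeedbackLoop`, the exponential-moving-average update, the learning rate, and the track names are all hypothetical and are not described in the paper.

```python
from collections import defaultdict

class FeedbackLoop:
    """Keeps a running score per (context, track) pair and updates it from feedback."""

    def __init__(self, learning_rate=0.3, initial_score=0.5):
        self.learning_rate = learning_rate
        self.scores = defaultdict(lambda: initial_score)

    def update(self, context, track, reward):
        """Move the stored score toward the observed reward (a value in [0, 1])."""
        key = (context, track)
        self.scores[key] += self.learning_rate * (reward - self.scores[key])

    def recommend(self, context, tracks):
        """Return the track with the highest current score for this context."""
        return max(tracks, key=lambda t: self.scores[(context, t)])

loop = FeedbackLoop()
tracks = ["ambient_piano", "upbeat_strings"]      # hypothetical track names
loop.update("reading", "ambient_piano", reward=0.9)   # e.g. high observed focus
loop.update("reading", "upbeat_strings", reward=0.2)  # e.g. low observed focus
print(loop.recommend("reading", tracks))
```

In a fuller system the reward could be derived from performance measures or user ratings, and the stored scores would feed back into the recommendation models rather than being used directly.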

3.3. Data collection (music datasets, student profiles, learning contexts)

Three major types of data are used in this study: music records, student profiles, and learning environment factors. The music collection will be a curated set of instrumental tracks organised by tempo, mood, complexity, and genre. Several public datasets, namely GTZAN, the Million Song Dataset, and DEAM (the Database for Emotional Analysis in Music), will be combined and preprocessed to extract audio and emotional characteristics. Both automatic feature extraction and human tagging will be used to annotate each track so that mood and genre classification is correct. The student profile data will include age, gender, and academic level, as well as cognitive style (for example, a preference for visual, auditory, or tactile learning) and psychological characteristics reported through self-report scales or physiological monitors. Learning context data will include the type of task (reading, problem-solving, or memorisation), the time of day, the level of ambient noise, and the type of activity. These datasets will be linked and stored in a secure relational database designed for fast querying and model training. To protect privacy and comply with the rules for educational research, strict ethical guidelines will be followed.
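A relational layout for the three data types might look like the following sketch, which uses an in-memory SQLite database. The table names, column names, and sample rows are hypothetical and cover only a subset of the attributes listed above; the paper does not specify a schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE track (
    track_id   INTEGER PRIMARY KEY,
    title      TEXT NOT NULL,
    tempo_bpm  REAL,
    mood       TEXT,
    genre      TEXT
);
CREATE TABLE student (
    student_id     INTEGER PRIMARY KEY,
    age            INTEGER,
    academic_level TEXT,
    learning_style TEXT
);
CREATE TABLE session (
    session_id     INTEGER PRIMARY KEY,
    student_id     INTEGER NOT NULL REFERENCES student(student_id),
    track_id       INTEGER REFERENCES track(track_id),
    task_type      TEXT,
    time_of_day    TEXT,
    noise_level_db REAL
);
""")

# Made-up rows for illustration only.
conn.execute("INSERT INTO track VALUES (1, 'Calm Study', 70.0, 'calm', 'ambient')")
conn.execute("INSERT INTO student VALUES (1, 16, 'secondary', 'auditory')")
conn.execute("INSERT INTO session VALUES (1, 1, 1, 'reading', 'morning', 42.0)")

# Link a student's sessions to the tracks that were played.
rows = conn.execute(
    """
    SELECT s.task_type, t.title, t.mood
    FROM session AS s JOIN track AS t ON t.track_id = s.track_id
    WHERE s.student_id = ?
    """,
    (1,),
).fetchall()
print(rows)
```

Keeping learning context in a separate `session` table lets the same student and track appear under many different tasks and noise conditions, which is the shape of data a context-aware recommender needs for training.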

3.4. Algorithm selection (e.g., machine learning, deep learning models)

1) Convolutional Neural Networks (CNN)

A Convolutional Neural Network (CNN) is used to extract spatial and frequency information from audio data. By converting sound waves into spectrograms, CNNs can analyse recurring patterns, pitch changes, and harmonic structures that are important for classifying music. Their convolutional layers are effective at capturing local relationships, which makes it possible to distinguish accurately between sound characteristics that correlate with mood and genre. In the proposed system, CNNs automatically extract features and tag the music tracks, which enables personalised suggestions based on learnt sound patterns.

· Step 1: Time–frequency input (STFT)

· Step 2: Convolution (+ bias)

· Step 3: Nonlinearity + pooling

· Step 4: Classification (softmax)
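The four CNN steps can be sketched in plain Python as follows. This is a minimal illustration in standard textbook form, not the configuration used in the study: the Hann window, frame length, single 3x3 kernel, ReLU nonlinearity, 2x2 max pooling, placeholder dense weights, and toy sine-wave input are all assumptions.

```python
import cmath
import math

def stft_magnitude(signal, frame_len, hop):
    """Step 1: magnitude spectrogram |STFT| with a Hann window (frames x bins)."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_len)]
        bins = []
        for k in range(frame_len // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            bins.append(abs(acc))
        frames.append(bins)
    return frames

def conv2d_valid(x, kernel, bias):
    """Step 2: single-channel 2-D cross-correlation plus bias, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[bias + sum(kernel[a][b] * x[i + a][j + b]
                        for a in range(kh) for b in range(kw))
             for j in range(out_w)] for i in range(out_h)]

def relu_maxpool(x, size=2):
    """Step 3: ReLU followed by non-overlapping max pooling."""
    act = [[max(0.0, v) for v in row] for row in x]
    return [[max(act[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(act[0]) - size + 1, size)]
            for i in range(0, len(act) - size + 1, size)]

def softmax(z):
    """Step 4: numerically stable softmax over class logits."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

# Toy input: a 64-sample sine wave standing in for an audio clip.
signal = [math.sin(2 * math.pi * 4 * n / 64) for n in range(64)]
spec = stft_magnitude(signal, frame_len=16, hop=8)            # 7 frames x 9 bins
kernel = [[1.0, 0.0, -1.0]] * 3                               # hypothetical filter
pooled = relu_maxpool(conv2d_valid(spec, kernel, bias=0.1))   # 2 x 3 feature map
features = [v for row in pooled for v in row]
dense = [[0.1 * (c + 1)] * len(features) for c in range(3)]   # placeholder weights
probs = softmax([sum(w * f for w, f in zip(row, features)) for row in dense])
print(probs)
```

A trained network would learn many kernels and dense weights from labelled tracks; here they are fixed only so that the data flow through the four steps is visible.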

2) Long Short-Term Memory (LSTM)

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network specifically designed to learn temporal dependencies in sequential data. In this system, LSTMs model how music and user interactions change over time. By detecting long-term patterns in sound, rhythm, and user engagement, the LSTM is used to compute suitable music transitions as learners' cognitive states change. Its ability to handle time-series relationships makes it well suited to adapting suggestions in real-time classroom settings, so that changes in the music are smooth and context-aware.

· Step 1: Gates (with audio features x_t)

· Step 2: Cell update

· Step 3: Hidden state

· Step 4: Contextual prediction (softmax at final step T)
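The LSTM steps can be written out in their standard textbook form as a short sketch. The weights produced by the hypothetical helper `make_gate`, the two-dimensional feature vectors, and the three-class readout matrix `V` are placeholders chosen for illustration; a trained model would learn these values.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def affine(W, U, b, x, h):
    """Return W x + U h + b as a list (one value per hidden unit)."""
    return [sum(w * xi for w, xi in zip(W[r], x))
            + sum(u * hi for u, hi in zip(U[r], h)) + b[r]
            for r in range(len(b))]

def lstm_step(params, x_t, h_prev, c_prev):
    """One LSTM time step covering Steps 1 to 3."""
    # Step 1: gates computed from the audio features x_t and the previous hidden state.
    f = [sigmoid(v) for v in affine(*params["f"], x_t, h_prev)]    # forget gate
    i = [sigmoid(v) for v in affine(*params["i"], x_t, h_prev)]    # input gate
    o = [sigmoid(v) for v in affine(*params["o"], x_t, h_prev)]    # output gate
    g = [math.tanh(v) for v in affine(*params["g"], x_t, h_prev)]  # candidate cell state
    # Step 2: cell update c_t = f * c_{t-1} + i * g (element-wise).
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Step 3: hidden state h_t = o * tanh(c_t).
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def make_gate(n_in, n_hidden, scale):
    """Hypothetical fixed weights (W, U, b); a trained model would learn these."""
    W = [[scale] * n_in for _ in range(n_hidden)]
    U = [[scale] * n_hidden for _ in range(n_hidden)]
    b = [0.0] * n_hidden
    return W, U, b

n_in, n_hidden = 2, 2
params = {name: make_gate(n_in, n_hidden, s)
          for name, s in [("f", 0.1), ("i", 0.2), ("o", 0.3), ("g", 0.4)]}

# Toy sequence of audio feature vectors x_1..x_T (e.g. normalised tempo, energy).
sequence = [[0.2, 0.5], [0.4, 0.4], [0.9, 0.1]]
h, c = [0.0] * n_hidden, [0.0] * n_hidden
for x_t in sequence:
    h, c = lstm_step(params, x_t, h, c)

# Step 4: softmax over a linear readout of the final hidden state h_T.
V = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # hypothetical readout, 3 classes
probs = softmax([sum(v * hk for v, hk in zip(row, h)) for row in V])
print(probs)
```

Because the cell state `c` is carried across time steps, the prediction at step T depends on the whole sequence, which is the property that makes the model suitable for smooth music transitions.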

3) K-Nearest Neighbors (KNN)

The K-Nearest Neighbours (KNN) method is used to find patterns in user preferences and song characteristics. It classifies a new learning situation by comparing it with the most similar cases already present in the dataset. For example, if students with similar profiles focused better when listening to quiet ambient music, KNN recommends similar songs. Because it is easy to understand and use, it can help check the accuracy of model outputs and create initial groupings of learners and music types before more advanced deep learning algorithms are applied.

· Step 1: Distance to each prototype

· Step 2: Neighbor set

· Step 3: Weighted class score
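The three KNN steps can be sketched as follows. The Euclidean distance, the inverse-distance weighting, the two features (attention level and task difficulty, both scaled to 0..1), and the prototype data are assumptions made for illustration; the paper does not state which distance or weighting it uses.

```python
import math
from collections import defaultdict

def knn_predict(query, prototypes, k=3, eps=1e-9):
    """Distance-weighted k-nearest-neighbour vote over labelled prototypes."""
    # Step 1: Euclidean distance from the query to each prototype.
    dists = sorted((math.dist(query, x), label) for x, label in prototypes)
    # Step 2: the neighbour set is the k closest prototypes.
    neighbors = dists[:k]
    # Step 3: each neighbour votes for its class with weight 1 / distance.
    scores = defaultdict(float)
    for d, label in neighbors:
        scores[label] += 1.0 / (d + eps)
    best = max(scores, key=scores.get)
    return best, dict(scores)

# Hypothetical prototypes: (attention level, task difficulty) -> music type
# that previously worked well for a similar learning situation.
prototypes = [
    ((0.20, 0.80), "calm_ambient"),
    ((0.30, 0.70), "calm_ambient"),
    ((0.25, 0.75), "calm_ambient"),
    ((0.80, 0.20), "upbeat"),
    ((0.90, 0.30), "upbeat"),
]

label, scores = knn_predict((0.30, 0.80), prototypes, k=3)
print(label, scores)
```

The small `eps` term only guards against division by zero when the query coincides with a stored prototype.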

4) Support Vector Machines (SVM)

By mapping input features into a high-dimensional space, Support Vector Machines (SVM) are used to classify and predict the appropriate song groups. SVM sorts songs into categories such as neutral, energising, or relaxing, depending on the emotional tone of the songs and the kind of learning task they are used for. The method works well with a small amount of training data and is comparatively resistant to overfitting, so it is suitable for structured classification problems. In this study, SVM serves as an additional model to verify the predictions made by the deep learning models, which makes the overall decision more accurate and the recommendations more reliable.

· Step 1: Linear decision function (feature map φ)

· Step 2: Margin maximization (soft-margin primal)

· Step 3: Dual with kernel K

· Step 4: Prediction
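As a rough illustration of the soft-margin primal and the prediction step, the sketch below trains a linear SVM by subgradient descent on the hinge-loss objective. It deliberately swaps in this simple solver in place of the kernel dual (Step 3), which normally requires a quadratic-programming solver, and it uses the identity feature map. The standardised two-feature data, the labels (+1 for energising, -1 for relaxing), and the hyperparameters are invented for the example.

```python
def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=200):
    """Minimise 0.5*||w||^2 + C * sum(max(0, 1 - y_i (w.x_i + b))) by subgradient descent."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        grad_w = list(w)   # gradient of the 0.5*||w||^2 regulariser
        grad_b = 0.0
        for x_i, y_i in zip(X, y):
            margin = y_i * (sum(wj * xj for wj, xj in zip(w, x_i)) + b)
            if margin < 1:  # point violates the margin, so its hinge term is active
                grad_w = [g - C * y_i * xj for g, xj in zip(grad_w, x_i)]
                grad_b -= C * y_i
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Prediction step: the sign of the linear decision function w.x + b."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

# Invented, standardised features (tempo z-score, energy z-score).
X = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0), (-1.0, -1.0), (-2.0, -1.0), (-1.0, -2.0)]
y = [1, 1, 1, -1, -1, -1]   # +1 = energising, -1 = relaxing

w, b = train_linear_svm(X, y)
print(w, b)
print(predict(w, b, (3.0, 2.0)), predict(w, b, (-0.5, -2.0)))
```

On this symmetric toy data the learned weights settle near (0.5, 0.5) with zero bias, which places the closest points of each class approximately on the margin. A kernel SVM would replace the dot product with a kernel function evaluated against the support vectors.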

4. System Design and Implementation

1) Overview of proposed system framework

The proposed Intelligent Music Recommendation System (IMRS) has a flexible, modular structure that combines data collection, feature analysis, recommendation generation, and feedback-driven improvement. The system comprises three major layers: data processing, intelligence and modelling, and user interaction. The data processing layer receives music and student data and extracts meaningful characteristics of sound and behaviour. The intelligence layer applies CNN, LSTM, KNN, SVM, and other machine learning and deep learning algorithms to find connections between song features, user preferences, and learning outcomes. The user interaction layer has a graphical user interface that provides real-time suggestions and allows the student and teacher to review or change the song settings. The system continuously receives input from students' responses and performance measures, which is then used to improve the accuracy of its predictions. This design supports scalable, adaptive learning and integration with existing digital classrooms. Overall, the IMRS framework combines personalisation, automation, and educational intelligence to promote cognitive engagement and emotional stability through background music appropriate to the learning situation.

2) User profiling and learning context analysis

User profiling is an important part of the IMRS because it allows learners to receive personalised music suggestions based on how they learn best. The system collects demographic factors (such as age, gender, and level of schooling), cognitive styles (such as a preference for visual, auditory, or tactile learning), and psychological factors (such as mood, motivation, or stress levels). Machine learning methods analyse these inputs and create a detailed profile of each individual that reflects how he or she tends to behave and learn. At the same time, the learning context analysis module interprets factors such as the subject matter, the difficulty and length of the task, and the level of ambient noise. These contextual factors ensure that the recommendations fit the learner's level of understanding and the requirements of the task. For example, slow acoustic music might suit reading comprehension, while fast, lively music might suit problem-solving tasks. By combining user profiling with knowledge of the environment, the system can change the music on the fly as conditions change. This combines cognitive theory with AI-driven customisation to produce a personalised learning experience that is also socially supportive, with the aim of improving educational outcomes.
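The idea of combining a learner profile with the learning context can be pictured with a deliberately simple rule-based sketch. Everything in it is hypothetical: the class and function names, the tempo targets in beats per minute, and the stress adjustment are placeholder values, not parameters from the study, and the real system is described as using learned models in place of fixed rules.

```python
from dataclasses import dataclass

@dataclass
class LearnerProfile:
    age: int
    stress_level: float   # 0.0 (calm) to 1.0 (highly stressed), e.g. self-reported

@dataclass
class LearningContext:
    task_type: str        # "reading", "memorisation", or "problem_solving"
    difficulty: float     # 0.0 (easy) to 1.0 (hard); not used by this simple rule

# Placeholder tempo targets in beats per minute; NOT taken from the study.
BASE_TEMPO = {"reading": 65.0, "memorisation": 75.0, "problem_solving": 110.0}

def target_tempo(profile, context):
    """Pick a target tempo from the task type, then slow it for stressed learners."""
    base = BASE_TEMPO.get(context.task_type, 85.0)
    return base - 20.0 * profile.stress_level

def pick_track(tracks, profile, context):
    """Return the track whose tempo is closest to the target tempo."""
    goal = target_tempo(profile, context)
    return min(tracks, key=lambda t: abs(t["tempo_bpm"] - goal))

tracks = [
    {"title": "slow_acoustic", "tempo_bpm": 60.0},
    {"title": "lively_strings", "tempo_bpm": 115.0},
]
learner = LearnerProfile(age=16, stress_level=0.25)

reading_choice = pick_track(tracks, learner, LearningContext("reading", 0.4))
solving_choice = pick_track(tracks, learner, LearningContext("problem_solving", 0.7))
print(reading_choice["title"], solving_choice["title"])
```

With these placeholder values the slow track is chosen for reading and the lively track for problem solving, which mirrors the example given in the text.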

3) Music feature extraction and tagging

Music feature extraction and tagging form the basis of the IMRS. Each audio file is preprocessed with digital signal processing to isolate its important acoustic and emotional features. Mel-Frequency Cepstral Coefficients (MFCCs) and the Fast Fourier Transform (FFT) are two of the methods used to obtain information such as tempo, rhythm, spectral centroid, pitch, and energy. Trained deep learning models then associate these low-level descriptors with higher-level affective and cognitive labels such as "calm", "energetic", or "motivational". Labelling is performed automatically by machine learning models and checked by hand by experts to ensure it is correct. The tags are stored in a metadata library, which makes retrieving and matching tags during recommendation straightforward. By combining objective audio features with subjective emotional cues, the method ensures that the selected tracks suit the cognitive and emotional requirements of learners. This two-layered tagging improves the accuracy of recommendations and provides a basis for an intelligent, context-aware music selection system for education.
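Two of the low-level descriptors mentioned above, the spectral centroid and the energy, can be computed from a single audio frame as in the sketch below. It uses a direct discrete Fourier transform in plain Python for clarity, where a production pipeline would use an FFT routine, and it does not compute MFCCs. The function names, the 8 kHz sample rate, and the 1 kHz test tone are illustrative assumptions.

```python
import cmath
import math

def magnitude_spectrum(frame):
    """Magnitudes of the non-negative-frequency DFT bins of one frame."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]

def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency of the frame, in Hz."""
    mags = magnitude_spectrum(frame)
    freqs = [k * sample_rate / len(frame) for k in range(len(mags))]
    total = sum(mags)
    return sum(f * m for f, m in zip(freqs, mags)) / total if total > 0 else 0.0

def rms_energy(frame):
    """Root-mean-square amplitude of the frame."""
    return math.sqrt(sum(v * v for v in frame) / len(frame))

# Test tone: a 1 kHz sine sampled at 8 kHz (exactly 8 cycles in 64 samples).
sample_rate = 8000
frame = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(64)]

centroid = spectral_centroid(frame, sample_rate)
energy = rms_energy(frame)
print(round(centroid, 1), round(energy, 3))
```

For a pure tone the centroid coincides with the tone's frequency; for music, a higher centroid generally corresponds to a brighter sound, which is one of the cues a tagging model can use.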

5. Results and Discussion

The Intelligent Music Recommendation System produced substantial improvements in students' focus, engagement, and task performance. Quantitative results showed that exposure to adaptive music was associated with a measurable increase in focus and a reduction in mental fatigue compared with control subjects who did not listen to adaptive music. In qualitative feedback, students reported feeling better emotionally, more motivated, and less anxious.

Table 2 Comparison of Learning Performance Across Experimental Groups

| Group Type | Average Focus Level (%) | Task Completion Time (min) | Memory Retention (%) | Accuracy Score (%) |
|---|---|---|---|---|
| Control (No Music) | 68 | 42 | 65 | 70 |
| Random Music | 74 | 39 | 71 | 75 |
| AI-Recommended Music | 89 | 31 | 85 | 88 |

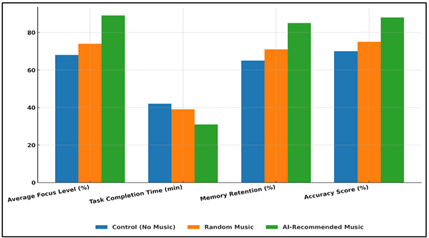

Table 1shows the results, which clearly show that music suggested by AI improved the ability of the students brain and job efficiency. People who listened to personalised background music that was selected by AI were able to focus better (89% of the time) and showed more attention and interest than people who listened to random music (74% of the time) or the control group (68% of the time).

Figure 2

Figure 2 Impact of Music Type on Cognitive Performance

Metrics

The AI group also completed tasks at a much quicker pace, with an average of finishing in 31 minutes, which allows one to see better time management and mental flow. Figure 2 illustrates the effects of various types of music on cognitive performance of learners. Memory retention also got 20 percentage points better compared to the situation of the control group, meaning that people were better capable of storing and retrieving knowledge. The 88% accurate score proves that clever music advice not only helps people to be more productive but also keep them focused on learning. Random noise on the other hand did help a bit but was not consistent.
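The differences quoted in this section follow directly from the group means in Table 2. The short sketch below reproduces that arithmetic; the dictionary keys and helper function names are hypothetical, while the numbers are copied from the table.

```python
# Group means copied from Table 2.
table2 = {
    "Control (No Music)":   {"focus": 68, "time_min": 42, "retention": 65, "accuracy": 70},
    "Random Music":         {"focus": 74, "time_min": 39, "retention": 71, "accuracy": 75},
    "AI-Recommended Music": {"focus": 89, "time_min": 31, "retention": 85, "accuracy": 88},
}

def difference(metric, group, baseline="Control (No Music)"):
    """Absolute difference between a group and the baseline for one metric."""
    return table2[group][metric] - table2[baseline][metric]

def relative_time_saving(group, baseline="Control (No Music)"):
    """Fraction of the baseline completion time saved by the group."""
    base = table2[baseline]["time_min"]
    return (base - table2[group]["time_min"]) / base

ai = "AI-Recommended Music"
print("focus:", difference("focus", ai), "percentage points")
print("retention:", difference("retention", ai), "percentage points")
print("accuracy:", difference("accuracy", ai), "percentage points")
print("time:", difference("time_min", ai), "minutes")
print(f"time saved: {relative_time_saving(ai):.1%}")
```

Relative to the control group, the AI-recommended condition is 21 points higher on focus, 20 on retention, and 18 on accuracy, and 11 minutes (about 26%) faster on task completion.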

Table 3 User Feedback on Emotional and Cognitive Response

| Evaluation Parameter | Scale (1–5) | Control (No Music) | Random Music | AI-Recommended Music |
|---|---|---|---|---|
| Motivation Level | 5 | 3.1 | 3.8 | 4.7 |
| Stress Reduction | 5 | 2.9 | 3.6 | 4.8 |
| Concentration Support | 5 | 3.2 | 3.9 | 4.9 |
| Overall Satisfaction | 5 | 3 | 3.7 | 4.8 |

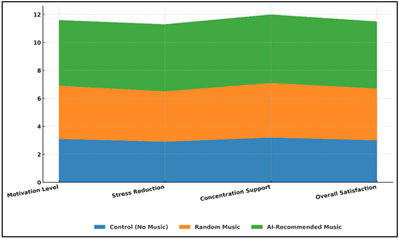

In Table 3, you can see how the subjects felt about their emotions and mental situations in three different learning conditions: no music, random music, and music suggested by AI. The results make it clear that AI-personalized music got out of every learner the best possible answers. Comparative effects of music on motivation, stress, focus are shown in Figure 3. The level of motivation went up a lot (average score: 4.7), which suggests that adjustable music made learning activities more fun and interesting.

Figure 3

Figure 3 Comparative Evaluation of Music Impact on

Motivation, Stress, and Focus

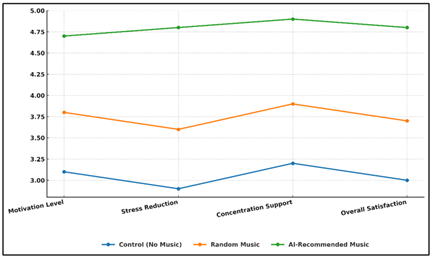

Also, stress reduction scores (4.8) shows that personalised music did a good job of making the setting calm and helpful, which reduced worry and mental tiredness. Overall happiness (4.8) and support for concentration were significantly better in the AI-curated music condition than the control and random music conditions (4.9). Figure 4 illustrates the performance trends that relate types of music to cognitive and emotional outcomes. This demonstrates that AI-curated music is beneficial for people to focus and feel more comfortable.

Figure 4 Performance Trends Across Music Types in Cognitive and Emotional Metrics

The control group, by contrast, had the lowest scores in every category, which suggests that background music can support motivation and mood. The findings indicate that intelligent music selection systems that respond to the situation in real time can substantially improve students' mental and emotional well-being and make the classroom a more productive and enjoyable environment.

6. Conclusion

This study developed and tested an Intelligent Music Recommendation System (IMRS) intended to improve classroom learning by personalising music so that it adapts to each student. The study showed that combining artificial intelligence with educational psychology can reveal the effect of context-aware background music on students' academic performance, mental well-being, and motivation. The mixed-methods design, which combined quantitative and qualitative analysis of learning data, gave a fuller picture of how intelligent music recommendation can improve classroom conditions. The system design, based on machine learning and deep learning models such as CNN, LSTM, KNN, and SVM, handled large audio collections well and identified music suited to different learning situations. By continuously collecting user feedback and adapting to new situations, the IMRS kept improving its suggestions so that they remained in line with each learner's mental and emotional state. The results indicate that adaptive background music can help people pay more attention, feel less stressed, and remember things better. This work contributes to the growing field of AI-driven educational improvement by helping to bridge gaps between affective computing, music cognition, and teaching. To make the system more responsive, future work should focus on real-time biometrics such as EEG or heart rate tracking.

CONFLICT OF INTERESTS

ACKNOWLEDGMENTS

None.

REFERENCES

Ajani, S. N., Potteti, S., and Parati, N. (2024). Accelerating Neural Network Model Deployment with Transfer Learning Techniques Using Cloud-Edge-Smart IoT Architecture. In Communications in Computer and Information Science (Vol. 2164, pp. 46–57). Springer. https://doi.org/10.1007/978-3-031-70001-9_4

Akgun, S., and Greenhow, C. (2022). Artificial Intelligence in Education: Addressing Ethical Challenges in K–12 Settings. AI Ethics, 2, 431–440. https://doi.org/10.1007/s43681-021-00096-7

González-González, C. S. (2023). The Impact of Artificial Intelligence in Education: Transforming the Way we Teach and Learn. Qurriculum, 36, 51–60.

González-Gutiérrez, S., and Merchán-Sánchez-Jara, J. (2022). Digital Humanities and Educational Ecosystem: Towards a New Epistemic Structure From Digital Didactics. Anuario ThinkEPI, 16, e16a35.

Khadilkar, S. M. (2024). MOOWR Scheme and Capital Goods Import in Western Maharashtra's Textile Sector. International Journal of Research and Development in Management Review, 13(1), 143–146.

Killian, L. (2019). Integrating Music Technology in the Classroom: Increasing Customization for Every Student (pp. 1–12). Carnegie Mellon University.

Lee, L., and Ho, H.-J. (2023). Effects of Music Technology on Language Comprehension and Self-Control in Children with Developmental Delays. Eurasia Journal of Mathematics, Science and Technology Education, 19, em2298. https://doi.org/10.29333/ejmste/13343

Lee, L., Liang, W. J., and Sun, F. C. (2021). The Impact of Integrating Musical and Image Technology Upon the Level of Learning Engagement of Pre-School Children. Education Sciences, 11, 788. https://doi.org/10.3390/educsci11120788

Ma, X., and Jiang, C. (2023). On the Ethical Risks of Artificial Intelligence Applications in Education and its Avoidance Strategies. Journal of Education, Humanities and Social Sciences, 14, 354–359. https://doi.org/10.54097/ehss.v14i.8868

Parker, R., Thomsen, B., and Berry, A. (2022). Learning Through Play at School: A Framework for Policy and Practice. Frontiers in Education, 7, 751801. https://doi.org/10.3389/feduc.2022.751801

Parkita, E. (2021). Digital Tools of Universal Music Education. Central European Journal of Educational Research, 3, 60–66. https://doi.org/10.37441/cejer/2021/3/1/9352

Selmani, A. T. (2024). The Influence of Music on the Development of a Child: Perspectives on the Influence of Music on Child Development. EIKI Journal of Effective Teaching Methods, 2. https://doi.org/10.59652/jetm.v2i1.162

Wang, F., and Liu, D. (2023). Research on Innovative Preschool Music Education Models Utilizing Digital Technology. Journal of Education and Educational Research, 5, 148–152. https://doi.org/10.54097/jeer.v5i2.12757


This work is licensed under a: Creative Commons Attribution 4.0 International License


© ShodhKosh 2024. All Rights Reserved.